Hashicorp Nomad - Working with Consul Connect

Prerequisite: CNI plugins installed on the Nomad clients (see Hashicorp Nomad - Working with Consul Connect).

Nomad Agent Consul Integration

To use Consul with Nomad, install and configure Consul on your nodes alongside Nomad. The consul block in the Nomad agent configuration (here, on the master node running the Nomad server) configures the agent's communication with Consul for service discovery and key-value integration. Once it is configured, tasks can register themselves with Consul, and the Nomad cluster can automatically bootstrap itself:

/etc/nomad.d/server.hcl

```hcl
# Example consul block. address, grpc_address, the service names and the
# auto_advertise/auto_join values match Nomad's defaults; the token and the
# TLS settings are specific to this setup.
consul {
  # The address of the local Consul agent. With ssl = true this must be the
  # port on which Consul serves HTTPS.
  address      = "127.0.0.1:8500"
  token        = "abcd1234"
  grpc_address = "127.0.0.1:8502"

  # TLS encryption
  ssl        = true
  ca_file    = "/etc/consul.d/tls/consul-agent-ca.pem"
  cert_file  = "/etc/consul.d/tls/consul-server-consul-0.pem"
  key_file   = "/etc/consul.d/tls/consul-server-consul-0-key.pem"
  verify_ssl = true

  # The service names used to register the Nomad server and client with Consul.
  server_service_name = "nomad"
  client_service_name = "nomad-client"

  # Automatically register the Nomad services in Consul.
  auto_advertise = true

  # Let the server and client bootstrap (find each other) through Consul.
  server_auto_join = true
  client_auto_join = true
}
```
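The Nomad clients need a matching consul block so they can register their tasks' services and locate the servers through Consul. The following is a minimal sketch for a client, assuming a Consul agent runs locally on every client and uses the same CA as above; the file name, token value and client certificate names are placeholders, not part of the original setup.

```hcl
# /etc/nomad.d/client.hcl (excerpt; file name and values are assumptions)
consul {
  address      = "127.0.0.1:8500"           # must be Consul's HTTPS port when ssl = true
  token        = "<client-token>"           # placeholder
  grpc_address = "127.0.0.1:8502"

  ssl        = true
  ca_file    = "/etc/consul.d/tls/consul-agent-ca.pem"
  cert_file  = "/etc/consul.d/tls/<client-cert>.pem"     # placeholder
  key_file   = "/etc/consul.d/tls/<client-cert>-key.pem" # placeholder
  verify_ssl = true

  # Register the client under this name and discover the servers via Consul.
  client_service_name = "nomad-client"
  auto_advertise      = true
  client_auto_join    = true
}
```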

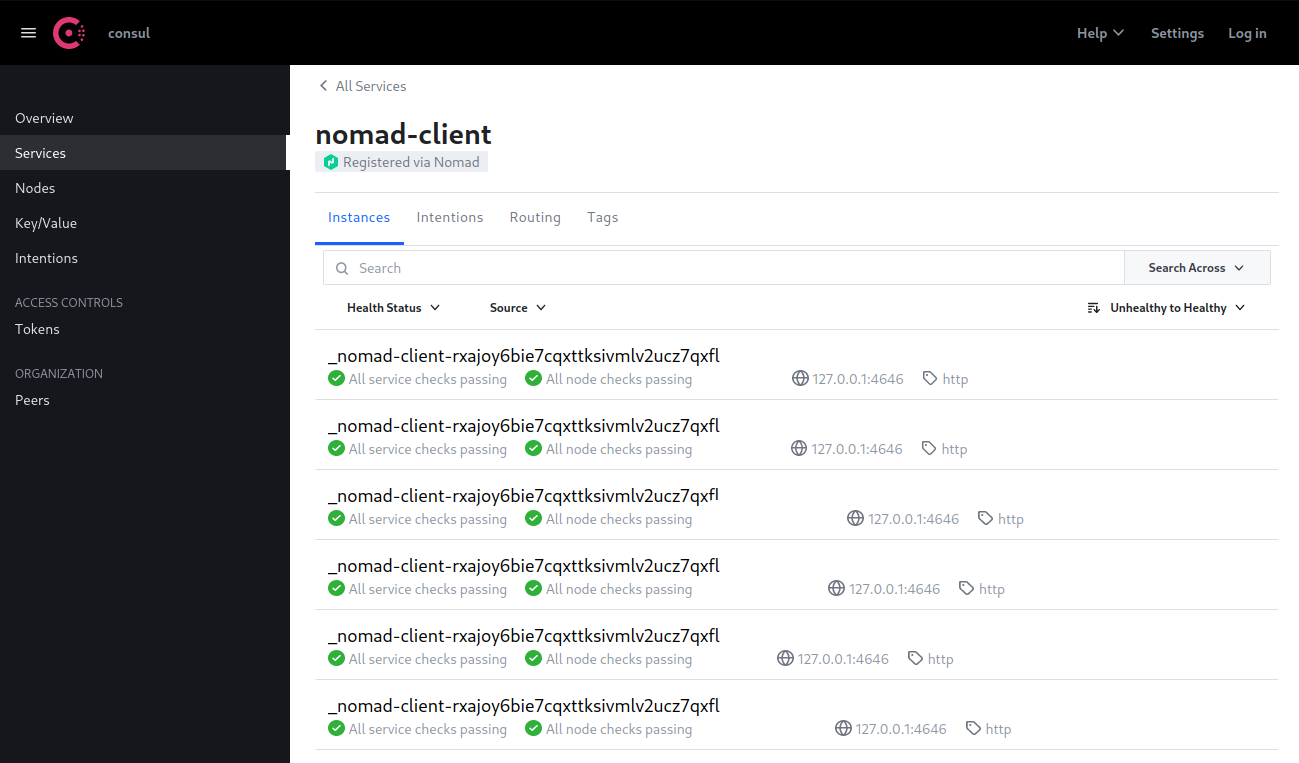

After updating and restarting your Nomad Master service you should be able to see all your Nomad clients and their registered services in Consul:

Consul Namespace

Nomad requires the agent:read permission. To use the consul_namespace feature, Nomad needs a token created in Consul's default namespace. Create that token with agent:read plus a namespace block granting the other permissions Nomad needs in the intended namespace. The Consul policy below is an example for a Nomad server:

```hcl
agent_prefix "" {
  policy = "read"
}

namespace "nomad-ns" {
  acl = "write"

  key_prefix "" {
    policy = "read"
  }

  node_prefix "" {
    policy = "read"
  }

  service_prefix "" {
    policy = "write"
  }
}
```

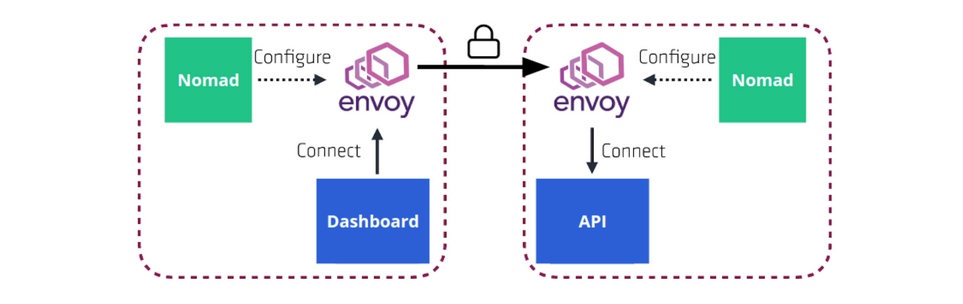
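The token created from this policy then goes into the token field of the Nomad agent's consul block, and a job group can select the Consul namespace it registers its services in. This is a sketch assuming Nomad Enterprise (Consul namespaces are an Enterprise feature); the token value is a placeholder and the job and group names are hypothetical.

```hcl
# Nomad agent configuration: use the token bound to the policy above.
consul {
  address = "127.0.0.1:8500"
  token   = "<token-with-nomad-ns-policy>" # placeholder
}

# Job file: register this group's services in the "nomad-ns" Consul namespace.
job "example" {   # hypothetical job name
  group "app" {   # hypothetical group name
    consul {
      namespace = "nomad-ns"
    }
    # ... tasks and services ...
  }
}
```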

Consul Service Mesh

Consul service mesh provides service-to-service connection authorization and encryption using mutual Transport Layer Security (TLS). Applications can use sidecar proxies in a service mesh configuration to automatically establish TLS connections for inbound and outbound connections.

To support Consul service mesh, Nomad provides a bridge networking mode that lets tasks in the same task group share a network namespace. When service mesh is enabled, Nomad launches a proxy (Envoy) alongside the application defined in the job file, and the proxy secures communication with other applications in the cluster.

Next, enable the gRPC port and Connect by adding the following to the Consul client configuration:

/etc/consul.d/consul.hcl

```hcl
ports {
  grpc_tls = 8502
}

connect {
  enabled = true
}
```

To support cross-datacenter requests to Connect services registered by Nomad, the Consul agents need default (anonymous) ACL tokens whose policies grant enough permission to read the service and node metadata involved in those requests, for example:

```hcl
service_prefix "" { policy = "read" }
node_prefix    "" { policy = "read" }
```

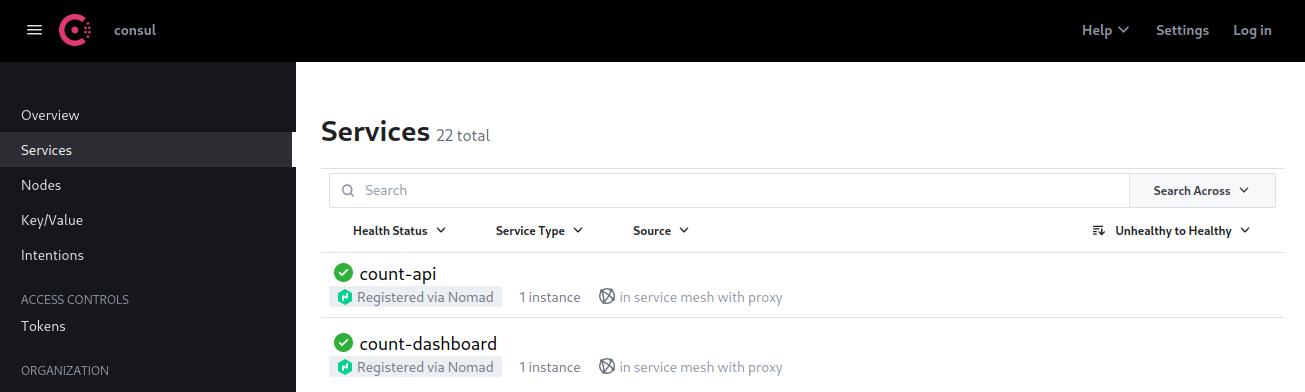
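One way to give a Consul agent such a default token is the acl.tokens.default setting in its configuration (attaching the policy to Consul's built-in anonymous token is an alternative). The sketch below assumes ACLs are enabled with a deny default and that a token carrying the read policy above already exists; the token value is a placeholder.

```hcl
# /etc/consul.d/consul.hcl (excerpt)
acl {
  enabled        = true
  default_policy = "deny"

  tokens {
    # Placeholder for a token bound to the service_prefix/node_prefix read policy.
    # The agent uses it instead of the anonymous token for requests that do not
    # carry their own token.
    default = "<read-only-token>"
  }
}
```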

Mesh-enabled Services

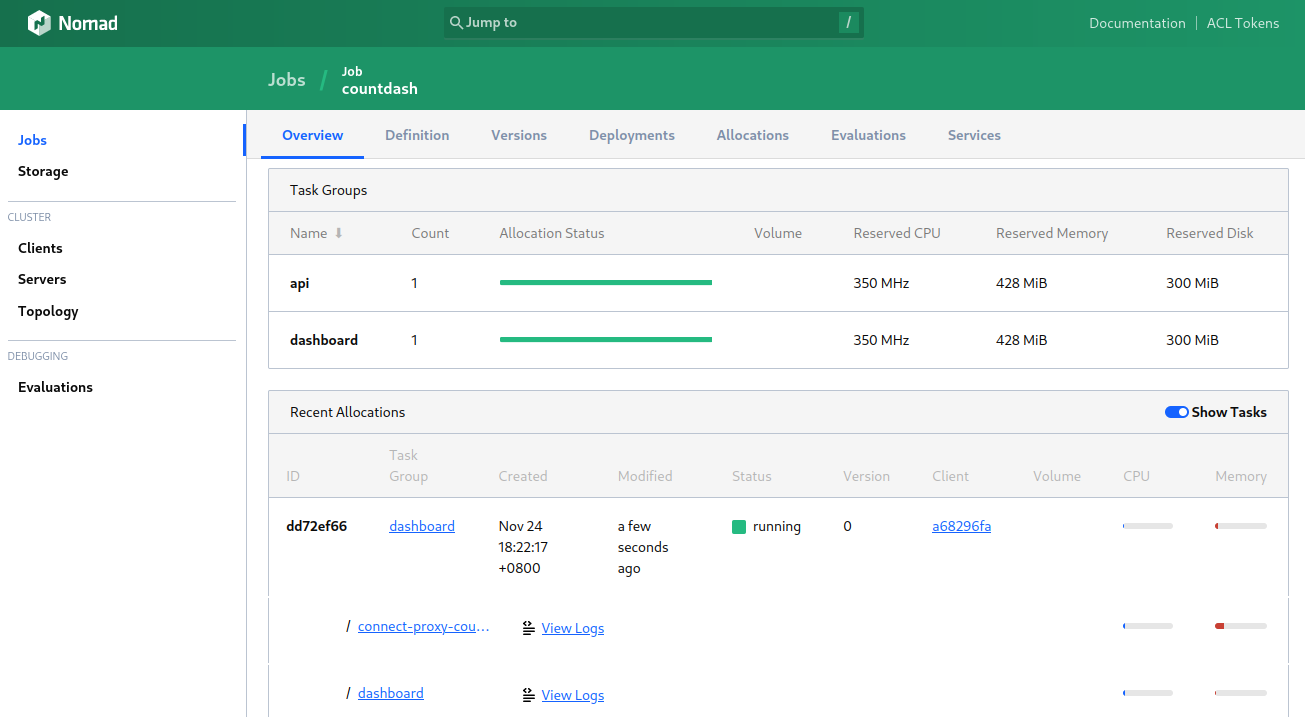

The following job file enables secure communication between a web dashboard and a backend counting service, both managed by Nomad. The dashboard connects to the counting service through its local upstream listener on localhost port 8080 (local_bind_port), and the Nomad-managed Envoy proxy carries that traffic over mTLS to the counting service, which listens on port 9001.

servicemesh.tf

```hcl
job "countdash" {
  datacenters = ["dc1"]

  group "api" {
    network {
      mode = "bridge"
    }

    service {
      name = "count-api"
      port = "9001"

      connect {
        sidecar_service {}
      }
    }

    task "web" {
      driver = "docker"

      config {
        image = "hashicorpdev/counter-api:v3"
      }
    }
  }

  group "dashboard" {
    network {
      mode = "bridge"

      port "http" {
        static = 9002
        to     = 9002
      }
    }

    service {
      name = "count-dashboard"
      port = "http"

      connect {
        sidecar_service {
          proxy {
            upstreams {
              destination_name = "count-api"
              local_bind_port  = 8080
            }
          }
        }
      }
    }

    task "dashboard" {
      driver = "docker"

      env {
        COUNTING_SERVICE_URL = "http://${NOMAD_UPSTREAM_ADDR_count_api}"
      }

      config {
        image = "hashicorpdev/counter-dashboard:v3"
      }
    }
  }
}
```

Error message:

```
WARNING: Failed to place all allocations. Task Group "api" (failed to place 1 allocation) Constraint "${attr.consul.grpc} > 0": 1 nodes excluded by filter.
```

Consul agents running TLS with a version greater than 1.14.0 should set the grpc_tls configuration parameter instead of grpc. However, the error above appears when grpc is not defined: the constraint ${attr.consul.grpc} > 0 excludes every node whose Consul agent has no plain grpc port configured. Adding grpc back in alongside grpc_tls solves the issue:

/etc/consul.d/consul.hcl

```hcl
ports {
  grpc     = 8503
  grpc_tls = 8502
}

connect {
  enabled = true
}
```
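For reference, the constraint named in the error is not part of the job file; Nomad adds it implicitly to groups that use Connect. Written out as an ordinary constraint block it would look roughly like the sketch below. This is only an illustration of what the scheduler checks, not something to add to the job.

```hcl
# Illustration only: Nomad injects an equivalent check for Connect-enabled
# groups. attr.consul.grpc is fingerprinted from the Consul agent's ports.grpc,
# so nodes whose agent defines only grpc_tls fail it.
constraint {
  attribute = "${attr.consul.grpc}"
  operator  = ">"
  value     = "0"
}
```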

API Service

The API service is defined as a task group with a bridge network. Because the API service is reachable only through Consul service mesh, its network block defines no ports. The connect block in the service stanza enables service mesh, and the port in the service stanza (9001) is the port the API service listens on. The Envoy proxy automatically routes mesh traffic to that port inside the network namespace:

```hcl
group "api" {
  network {
    mode = "bridge"
  }

  # ...

  service {
    name = "count-api"
    port = "9001"

    # ...

    connect {
      sidecar_service {}
    }
  }

  # ...
}
```
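Whether the dashboard may reach count-api through the mesh is decided by Consul intentions. When the default intention behavior is deny (for example with ACL default_policy = "deny"), an explicit allow intention is needed. A sketch of one as a Consul service-intentions config entry, which could be applied with consul config write; the file name is hypothetical.

```hcl
# count-api-intentions.hcl (hypothetical file name)
# Allow the count-dashboard sidecar to open mesh connections to count-api.
Kind = "service-intentions"
Name = "count-api"

Sources = [
  {
    Name   = "count-dashboard"
    Action = "allow"
  }
]
```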

Web Frontend

The web frontend is defined as a task group with a bridge network and a static forwarded port:

```hcl
group "dashboard" {
  network {
    mode = "bridge"

    port "http" {
      static = 9002
      to     = 9002
    }
  }

  # ...
}
```

The static = 9002 parameter reserves port 9002 on the host, and to = 9002 forwards that host port to port 9002 inside the network namespace. This allows you to open the web frontend in a browser at http://<host_ip>:9002, as shown below. The web frontend itself connects to the API service via Consul service mesh.

The web frontend is configured to communicate with the API service with an environment variable:

```hcl
env {
  COUNTING_SERVICE_URL = "http://${NOMAD_UPSTREAM_ADDR_count_api}"
}
```
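Nomad fills NOMAD_UPSTREAM_ADDR_count_api with the address of the upstream's local listener (the IP plus local_bind_port, here 8080); the dash in count-api becomes an underscore in the variable name. Nomad also exposes the IP and port of each upstream as separate variables, so an equivalent env block could be sketched as:

```hcl
env {
  # Same URL as above, assembled from the per-upstream IP and port variables.
  COUNTING_SERVICE_URL = "http://${NOMAD_UPSTREAM_IP_count_api}:${NOMAD_UPSTREAM_PORT_count_api}"
}
```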