Containerized PyTorch Dev Workflow

I recently looked into dockerizing my TensorFlow development and deployment workflow. I now want to see if I can do the same with my PyTorch projects.

First of all, you still need to prepare your system so that Docker has access to your NVIDIA GPU. This seems to be identical to the TensorFlow setup.

Docker Image

Next, there are official PyTorch Docker images available for download. Good! But I have really come to like Jupyter notebooks, and no version of the official image has them pre-installed. So let's fix that:

FROM pytorch/pytorch:latest

# Set environment variables
ENV DEBIAN_FRONTEND=noninteractive

# Install system dependencies
RUN apt-get update && \
    apt-get install -y \
    git \
    tini \
    python3-pip \
    python3-dev \
    python3-opencv \
    libglib2.0-0

# Upgrade pip and install Jupyter
RUN python3 -m pip install --upgrade pip
RUN pip3 install jupyter

# Set the working directory
WORKDIR /opt/app

# Run tini as the init process and start the notebook server
RUN chmod +x /usr/bin/tini
ENTRYPOINT ["/usr/bin/tini", "--"]
CMD ["jupyter", "notebook", "--port=8888", "--no-browser", "--ip=0.0.0.0", "--allow-root"]

Running the Container

Let's build this custom image with:

docker build -t pytorch-jupyter . -f Dockerfile

I can now create the container and mount my working directory into the container WORKDIR to get started:

docker run --gpus all -ti --rm \
    -v "$(pwd)":/opt/app -p 8888:8888 \
    --name pytorch-jupyter \
    pytorch-jupyter:latest

[C 2023-08-21 08:47:56.598 ServerApp]

To access the server, open this file in a browser:
file:///root/.local/share/jupyter/runtime/jpserver-7-open.html
Or copy and paste one of these URLs:
http://e7f849cdd75e:8888/tree?token=8d72a759100e2c2971c4266bbcb8c6da5f743015eecd5255
http://127.0.0.1:8888/tree?token=8d72a759100e2c2971c4266bbcb8c6da5f743015eecd5255

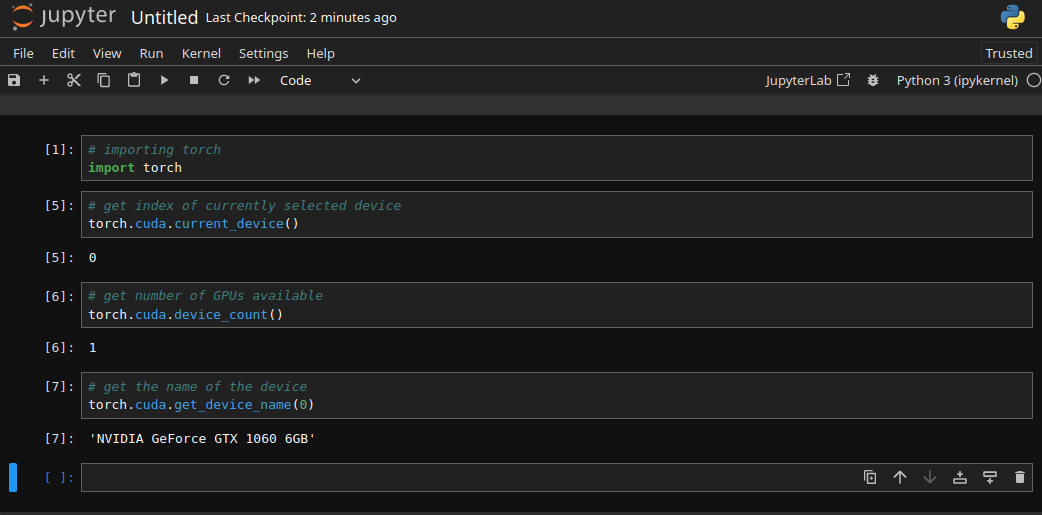

Verify PyTorch
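
A quick way to confirm that the container sees both PyTorch and the GPU is to run a short check in a notebook cell. The sketch below is one possible check, not the only one. The helper name `torch_report` is made up for this example, and it relies only on `torch.__version__` and the query functions in `torch.cuda`.

```python
import importlib.util


def torch_report():
    """Return a small dict describing the PyTorch install and GPU visibility.

    `torch_report` is a hypothetical helper written for this post.
    """
    # Avoid a hard failure if the cell runs outside the container.
    if importlib.util.find_spec("torch") is None:
        return {"installed": False}

    import torch

    cuda = torch.cuda.is_available()
    report = {
        "installed": True,
        "version": torch.__version__,
        "cuda_available": cuda,
        "device_count": torch.cuda.device_count(),
    }
    if cuda:
        report["device_name"] = torch.cuda.get_device_name(0)
        # Run a tiny matrix multiplication on the GPU as a smoke test.
        x = torch.rand(3, 3, device="cuda")
        report["matmul_ok"] = bool(torch.isfinite(x @ x).all())
    return report


print(torch_report())
```

If `cuda_available` comes back as `False` inside the container, the usual suspects are a missing `--gpus all` flag on `docker run` or an incomplete GPU setup on the host.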

Troubleshooting

ERROR: Unexpected bus error encountered in worker. This might be caused by insufficient shared memory (shm).

PyTorch uses shared memory to share data between processes. If torch multiprocessing is used (e.g. for data loaders with multiple worker processes), the default shared memory segment size that the container runs with is not enough. Increase it with either the --ipc=host or the --shm-size command line option to docker run:

docker run --ipc=host --gpus all -ti --rm \
    -v "$(pwd)":/opt/app -p 8888:8888 \
    --name pytorch-jupyter \
    pytorch-jupyter:latest
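
To see how much shared memory the container actually has, one option is to look at the size of `/dev/shm` from a notebook cell. This is a minimal sketch using only the standard library. The function name `shm_size_mib` is made up for this example, and it assumes a Linux container where shared memory is mounted at `/dev/shm`.

```python
import os
import shutil


def shm_size_mib(path="/dev/shm"):
    """Return the total size of the shared memory mount in MiB, or None if absent.

    `shm_size_mib` is a hypothetical helper; `/dev/shm` is the usual mount
    point on Linux, which is assumed here.
    """
    if not os.path.isdir(path):
        return None
    return shutil.disk_usage(path).total / (1024 * 1024)


size = shm_size_mib()
if size is None:
    print("/dev/shm not found on this system")
else:
    print(f"/dev/shm size: {size:.0f} MiB")
```

Without any extra flags Docker gives a container 64 MB of shared memory, so a value in that range means neither `--ipc=host` nor `--shm-size` took effect.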