Elasticsearch & Kibana v8 Index Management

Index Lifecycle

Index Lifecycle Policies

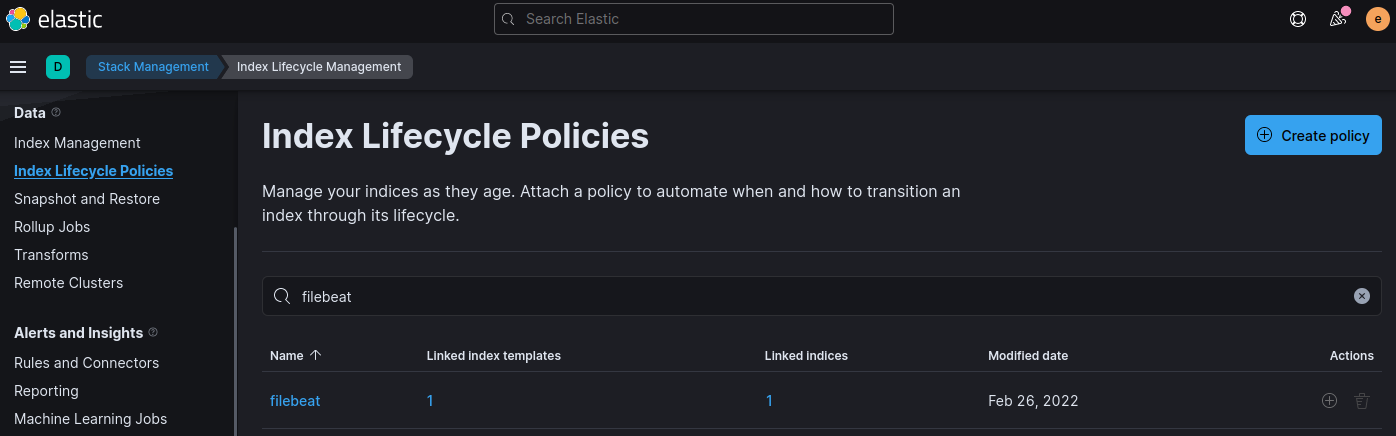

I have now set up Filebeat on several servers, and Kibana shows that the Filebeat index is eating up storage quickly. Index Lifecycle Management (ILM) lets you move an index through several phases, from hot through warm, cold and frozen to delete. For now, though, I only need a way to keep the storage requirements in check. Filebeat already ships with a simple lifecycle policy in the Index Management section of Kibana:

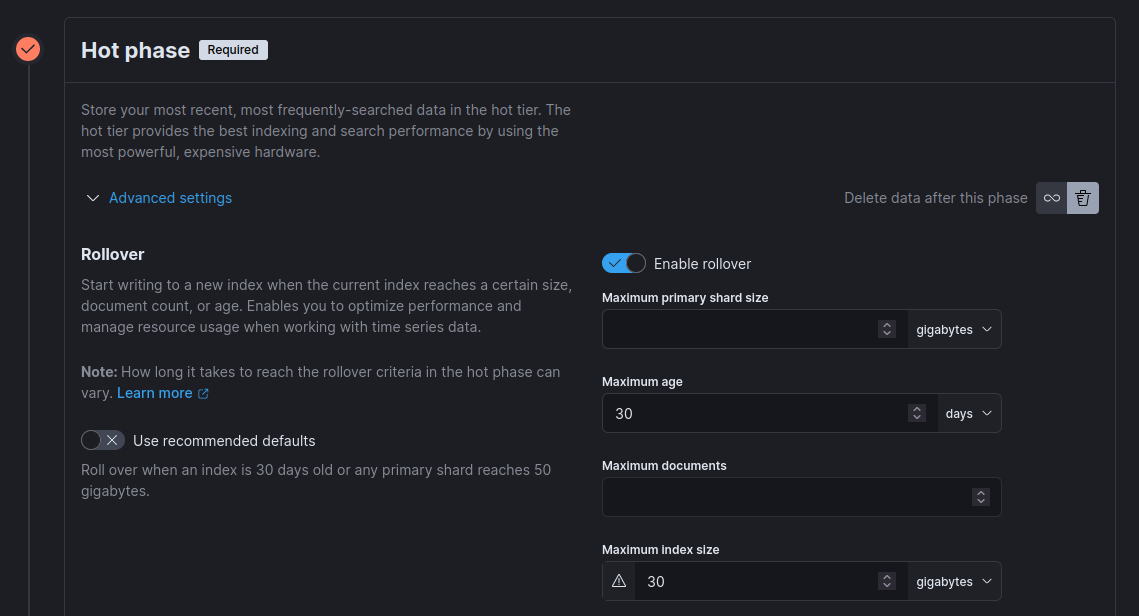

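I can edit this policy so that Filebeat rolls over to a new backing index once the current one reaches 30 GB or is 30 days old, and deletes each backing index 30 days after it has been rolled over. Note that this is not a hard cap on total storage: max_size only limits the size of a single backing index, and because the delete phase counts from the rollover, the oldest data can be up to roughly 60 days old before it is removed.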

This sends the following request to Elasticsearch:

```
PUT _ilm/policy/filebeat
{
  "policy": {
    "phases": {
      "hot": {
        "min_age": "0ms",
        "actions": {
          "rollover": {
            "max_size": "30gb",
            "max_age": "30d"
          }
        }
      },
      "delete": {
        "min_age": "30d",
        "actions": {
          "delete": {}
        }
      }
    }
  }
}
```
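If you prefer to manage the policy from a script instead of the Kibana UI, the same request can be built with Python's standard library. This is a minimal sketch, assuming an unsecured cluster at http://localhost:9200 (a hypothetical URL; a default v8 install enables TLS and authentication, which you would have to add). The helper names build_filebeat_policy and put_policy_request are made up for this example.

```python
import json
import urllib.request


def build_filebeat_policy(max_size="30gb", max_age="30d", delete_after="30d"):
    """Return the ILM policy body shown above as a Python dict."""
    return {
        "policy": {
            "phases": {
                "hot": {
                    "min_age": "0ms",
                    # Roll over to a new backing index at max_size or max_age.
                    "actions": {"rollover": {"max_size": max_size, "max_age": max_age}},
                },
                "delete": {
                    # For rolled-over indices, min_age counts from the rollover.
                    "min_age": delete_after,
                    "actions": {"delete": {}},
                },
            }
        }
    }


def put_policy_request(base_url, name, body):
    """Build (but do not send) the PUT _ilm/policy/<name> request."""
    return urllib.request.Request(
        f"{base_url}/_ilm/policy/{name}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


if __name__ == "__main__":
    req = put_policy_request("http://localhost:9200", "filebeat", build_filebeat_policy())
    # Sending needs a reachable cluster (hypothetical URL above):
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp))
    print(req.get_method(), req.full_url)
```

Keeping the thresholds as parameters makes it easy to tighten the limits later without editing the JSON by hand.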

Index Template

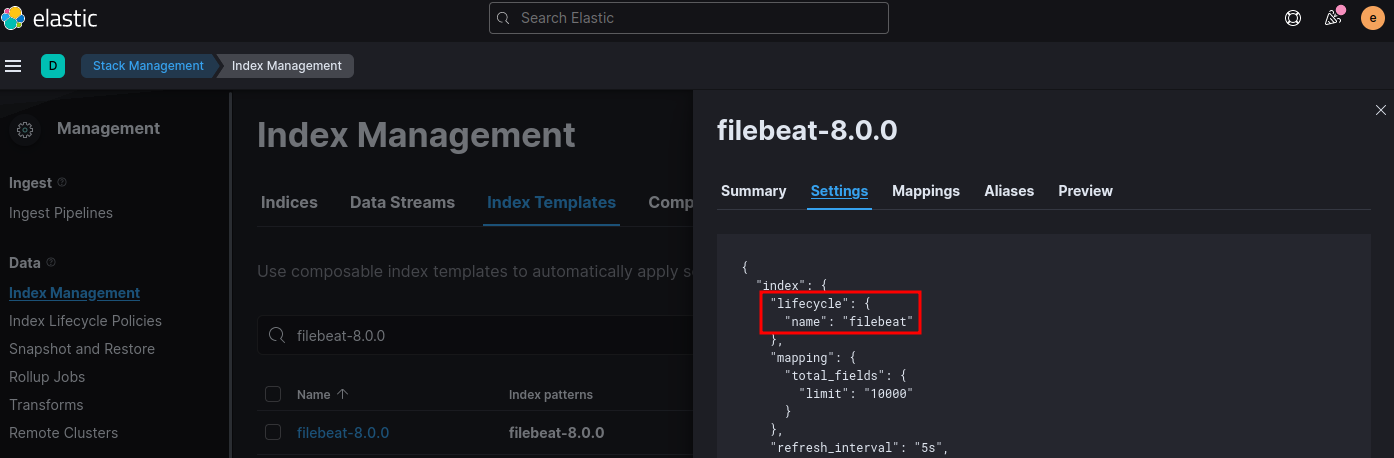

Under Index Management I can now check that the policy is actually used by the Filebeat template:

I can also verify that the policy has been applied in the Kibana Console:

```
GET filebeat-*/_ilm/explain
```

```
{
  "indices" : {
    ".ds-filebeat-8.0.0-2022.02.26-000001" : {
      "index" : ".ds-filebeat-8.0.0-2022.02.26-000001",
      "managed" : true,
      "policy" : "filebeat",
      "lifecycle_date_millis" : 1645863853744,
      "age" : "4.07d",
      "phase" : "hot",
      "phase_time_millis" : 1645863854249,
      "action" : "rollover",
      "action_time_millis" : 1645863855250,
      "step" : "check-rollover-ready",
      "step_time_millis" : 1645863855250,
      "phase_execution" : {
        "policy" : "filebeat",
        "phase_definition" : {
          "min_age" : "0ms",
          "actions" : {
            "rollover" : {
              "max_size" : "30gb",
              "max_age" : "30d"
            }
          }
        },
        "version" : 2,
        "modified_date_in_millis" : 1646215310049
      }
    }
  }
}
```
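With many backing indices the explain output gets long. A small sketch for condensing it, assuming you have already parsed the JSON response into a Python dict (for example with json.load); the function names are hypothetical. Unmanaged indices only report "managed": false, and an index whose step is ERROR is one that ILM could not move forward.

```python
def summarize_explain(response):
    """Turn a parsed GET <index>/_ilm/explain response into sorted summary rows."""
    rows = []
    for name, info in sorted(response.get("indices", {}).items()):
        rows.append((
            name,
            info.get("managed", False),
            info.get("policy"),  # None for indices without a policy
            info.get("phase"),
            info.get("action"),
            info.get("step"),
        ))
    return rows


def needs_attention(response):
    """Return indices that are not managed by ILM or whose current step is ERROR."""
    return [
        name
        for name, managed, _policy, _phase, _action, step in summarize_explain(response)
        if not managed or step == "ERROR"
    ]
```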

Index Lifecycle Management Service

To pause lifecycle management, stop ILM from the Kibana Console and check its status. Starting it again works the same way:

```
POST _ilm/stop
```

```
{
  "acknowledged" : true
}
```

```
GET _ilm/status
```

```
{
  "operation_mode" : "STOPPED"
}
```

```
POST _ilm/start
```

```
{
  "acknowledged" : true
}
```

```
GET _ilm/status
```

```
{
  "operation_mode" : "RUNNING"
}
```
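POST _ilm/stop does not necessarily halt ILM immediately: while running operations finish, GET _ilm/status can report STOPPING before it reaches STOPPED. A sketch of a polling helper, assuming you supply your own get_status callable that performs the GET _ilm/status request and returns the parsed body (the function name and defaults are illustrative):

```python
import time


def wait_for_ilm_mode(get_status, target="STOPPED", timeout=30.0, interval=1.0):
    """Poll get_status() until its operation_mode equals target.

    get_status is any callable returning the parsed GET _ilm/status body,
    e.g. {"operation_mode": "STOPPING"}. Returns True on success and
    False once the timeout (in seconds) has passed.
    """
    deadline = time.monotonic() + timeout
    while True:
        mode = get_status().get("operation_mode")
        if mode == target:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Passing the status call in as a function keeps the helper independent of how you talk to the cluster and makes it easy to test without one.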

Snapshots

Prepare Elasticsearch (Docker)

You can use the snapshot feature to create backups of your indices. I am going to configure a file path that Elasticsearch should use to store those backups. First, I need to mount an additional volume into my Elasticsearch container:

```yaml
volumes:
  - type: bind
    source: ./elasticsearch/config/elasticsearch.yml
    target: /usr/share/elasticsearch/config/elasticsearch.yml
    read_only: true
  - type: volume
    source: elasticsearch
    target: /usr/share/elasticsearch/data
  - type: bind
    source: /opt/wiki_elk/snapshots
    target: /snapshots
```

Then add the container path to path.repo in the elasticsearch.yml file, which allows Elasticsearch to use this location for snapshot repositories:

```yaml
## Snapshots
path.repo: ["/snapshots"]
```
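Elasticsearch only accepts a shared file system repository whose location is covered by path.repo on every master and data node. A hypothetical pre-flight check for absolute container paths, useful if you generate these settings from a script. It is a simplification of that rule, not Elasticsearch's own path resolution:

```python
import posixpath
from pathlib import PurePosixPath


def location_allowed(location, path_repo):
    """Return True if an absolute repository location lies inside a path.repo entry.

    Simplified sketch: relative locations are rejected instead of resolved.
    """
    if not location.startswith("/"):
        raise ValueError("this sketch only handles absolute locations")
    # normpath collapses "..", so "/snapshots/../etc" becomes "/etc".
    loc = PurePosixPath(posixpath.normpath(location))
    for root in path_repo:
        try:
            loc.relative_to(PurePosixPath(posixpath.normpath(root)))
            return True
        except ValueError:
            continue
    return False


if __name__ == "__main__":
    print(location_allowed("/snapshots", ["/snapshots"]))  # True
```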

Register a Repository

Create the /opt/wiki_elk/snapshots folder on your host and bring your Elastic Stack back up. Back in the Kibana Console, run the following command to register a snapshot repository, here named wiki_de:

```
PUT /_snapshot/wiki_de
{
  "type": "fs",
  "settings": {
    "location": "/snapshots"
  }
}
```
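The registration can also be scripted. A minimal sketch, assuming an unsecured cluster at the hypothetical URL http://localhost:9200; it builds the same PUT request plus a POST _snapshot/<repo>/_verify request, which asks the cluster to check that its nodes can actually use the repository (a quick way to surface the permission problems mentioned below). The helper names are made up for this example.

```python
import json
import urllib.request


def register_fs_repo_request(base_url, repo, location):
    """Build (but do not send) PUT _snapshot/<repo> for a shared file system repository."""
    body = {"type": "fs", "settings": {"location": location}}
    return urllib.request.Request(
        f"{base_url}/_snapshot/{repo}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def verify_repo_request(base_url, repo):
    """Build (but do not send) POST _snapshot/<repo>/_verify."""
    return urllib.request.Request(f"{base_url}/_snapshot/{repo}/_verify", method="POST")


if __name__ == "__main__":
    for req in (register_fs_repo_request("http://localhost:9200", "wiki_de", "/snapshots"),
                verify_repo_request("http://localhost:9200", "wiki_de")):
        # Send with urllib.request.urlopen(req) once the cluster is reachable.
        print(req.get_method(), req.full_url)
```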

If you run into write permission errors, make the folder writable for the user Elasticsearch runs as inside the official container (UID 1000), e.g. with chown 1000:1000 /opt/wiki_elk/snapshots.

Verify that you were successful by running GET /_snapshot, which should return your repository settings:

```
{
  "wiki_de" : {
    "type" : "fs",
    "settings" : {
      "location" : "/snapshots"
    }
  }
}
```

Create a Snapshot of all Indices

Now create your snapshot by running:

```
PUT /_snapshot/wiki_de/wiki_de_2022_02_03
```

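This creates a snapshot of all indices. On the host, the repository's indices folder now contains one subfolder per index, named by an internal ID instead of the index name: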

```
ls /opt/wiki_elk/snapshots/indices
041lEuh8QQu_aW_-sWoi8g BSpLqApuTTS_Yci5iAF67Q hkL1fTnqSR68lsqn0Cgv-g n9NCIwbUQpaJiHRoWfgRdg
0z3zxa1ERT6KLTrs8V4N5A C1fLeboRTa-2esoWAZTCfg iJgSgZINSryhOvJdmPSrCA NNi4_pPtQDGXTbchY_3C7A
1i46fKrySyueZ6WyBgKfwg C9rflA_jQRmq_wJgZcQYwg _imL71P-QhKw_hdrFWMYgQ oM-RCbEcTNKsiXqBYFO0rg
2So2kNmBSL6F_P-l93UJCQ CIN03A9hQriXJO646eO-Pw JWnzSeZDRyCBKWsMRw3v7w P9IkckqZRMWGInwSqeSC3w
41ADNSqNRv2N9GrkS-z6DA cTQbc6wTQgWE1PUYqb_sug K78LuKaNT0awSpg8a-HHKw PcphEXXOQc-z5y-6yDe-Dg
46PZDBnHTJu3vmZEiNRSWQ cxq6aOE1QH2hNcGDy67c9g KC0DNKtKTymp9eQ1kZ45xA Q2MUewYzRByR0wQ4Gbe3Ow
4A68GgRURD-1vqKSnmbPhg d4lH5dCoQHOGEtsfzAx0og KoI3_6IDRDO0xPhVcrjV9g qwr62LACT0uF1PDhK3pSCQ
-4uo2hDZTb2UUxQDxTPHPg D8XVi8LfRrettJk1Xb6pNg KxySo37lRUeK5tnp9SYbKA SE25Oe2cTr6AjDvvMTs4UQ
5ATJjtIYRBiHH5-F5Ia6ig _Ds-laExQ-a-G8o50xipzA lbRmT2gcSeGH8KPZUZclsA tabkxdbkTJ2WSSno0mCNRQ
6t53QEC7SEeA4z1BgdRJCw EbBxodtSR1Gy85V8Mod8WA LipHTP7_QpWuWBWhjOv7zA U2xc3eC1TDmjfUw4MiUD2w
AQdC4-3nQc6RGllJEVt3-A EF1YCooqRRauQtseBQmeIg lSkbah9JR1OSILG2vtO6NQ UCT3k_k9TTKCGHL5l7u25A
AXTDIVZHQQCgEigJsHHjRg eijD5mVcSRmgt3qmlgZSOw m2zp0iYVQtWeJTOCV6c1MQ Uel1oFILTN6MvP2Mvnr5Ug
b0OktdJsSZaG6-YCKRh7_w fA22AhPYRnaLkYnc78On3A m8TKnNyMTHOq8Gp5maZ9Jw ujLVq7vqTlm-kH2zWsvP4w
b2peYubmRaKyQR22K71zoQ FGNdQj9QRjKXDCP7JbXovg MlWUpb-LSYO-pwGpsu7qug ulF7-27ISI-Ewk0ydQsazA
bE0YNZFXTNCQ5UuqKrz25w G7Lg_g-JSoCN-WhDOPfL3A N3gx6nmdS9KzOZy3ZfhEUg ULZ65qqRSmen8IFW9p-m4Q
bp36fnmiTU-cONB3x6gpGQ HaWEe2YfQz6dzbUeRgr4YQ N9bZIRr0T1ashnx6fcsiNQ -uNT_WwrS-ORmSWjte7peA
```
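To script the snapshot request, a date-stamped name such as wiki_de_2022_02_03 can be generated from the current day. A minimal sketch, assuming the hypothetical URL http://localhost:9200 and made-up helper names. It adds wait_for_completion=true, because by default the PUT returns as soon as the snapshot has started rather than when it has finished.

```python
import datetime
import urllib.parse
import urllib.request


def snapshot_name(prefix, day):
    """Build a date-stamped snapshot name like wiki_de_2022_02_03 (names must be lowercase)."""
    return f"{prefix}_{day:%Y_%m_%d}".lower()


def create_snapshot_request(base_url, repo, name, wait=True):
    """Build (but do not send) PUT _snapshot/<repo>/<name>; without a body the defaults apply."""
    query = urllib.parse.urlencode({"wait_for_completion": "true" if wait else "false"})
    return urllib.request.Request(
        f"{base_url}/_snapshot/{repo}/{urllib.parse.quote(name)}?{query}",
        method="PUT",
    )


if __name__ == "__main__":
    name = snapshot_name("wiki_de", datetime.date.today())
    req = create_snapshot_request("http://localhost:9200", "wiki_de", name)
    print(req.get_method(), req.full_url)
```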

You can check the current snapshot in Kibana with:

```
GET /_snapshot/wiki_de/wiki_de_2022_02_03
```

```
{
  "snapshots" : [
    {
      "snapshot" : "wiki_de_2022_02_03",
      "uuid" : "_dEhyGmsTt2gzMf4yyVsUw",
      "repository" : "wiki_de",
      "version_id" : 8000199,
      "version" : "8.0.1",
      "indices" : [
        ".kibana_8.0.1_001",
        "ilm-history-3-000009",
        ".monitoring-kibana-7-2022.02.27",
        ".security-7",
        "logs-index_pattern_placeholder",
        ...
  "total" : 1,
  "remaining" : 0
}
```
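The indices list in this response mixes your own indices with dot-prefixed ones such as .kibana_8.0.1_001 and .security-7. A hypothetical helper that separates them, assuming the parsed response as a dict. The dot prefix is only a naming convention used by system and hidden indices, not a reliable test for either:

```python
def split_snapshot_indices(response):
    """Split each snapshot's index list into dot-prefixed and other indices."""
    result = {}
    for snap in response.get("snapshots", []):
        indices = snap.get("indices", [])
        result[snap["snapshot"]] = {
            # Naming-convention heuristic only, see the lead-in above.
            "dot_prefixed": sorted(i for i in indices if i.startswith(".")),
            "regular": sorted(i for i in indices if not i.startswith(".")),
        }
    return result
```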

You can delete the snapshot with:

```
DELETE /_snapshot/wiki_de/wiki_de_2022_02_03
```
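Deleting old snapshots by hand gets tedious. A sketch for picking deletion candidates, assuming you have parsed the response of GET /_snapshot/wiki_de/_all (which lists every snapshot in the repository) into a dict; each returned name could then be passed to the DELETE request. The function name and the age threshold are illustrative. Elasticsearch also offers snapshot lifecycle management (SLM) with built-in retention, which may be the better fit for a permanent setup.

```python
import time

DAY_MS = 24 * 60 * 60 * 1000


def snapshots_older_than(response, days, now_ms=None):
    """Return names of snapshots that started more than `days` days before now_ms."""
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    cutoff = now_ms - days * DAY_MS
    return [
        snap["snapshot"]
        for snap in response.get("snapshots", [])
        # Snapshots without a start time are treated as new and kept.
        if snap.get("start_time_in_millis", now_ms) < cutoff
    ]
```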

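Create a Snapshot of specific Indices

To back up only selected indices, register a repository (here named wiki) and list the indices in the body of the snapshot request: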

```
PUT /_snapshot/wiki
{
  "type": "fs",
  "settings": {
    "location": "/snapshots"
  }
}

PUT /_snapshot/wiki/wiki_2022_02_03
{
  "indices": ["wiki_de", "wiki_en", "wiki_fr"]
}
```
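A small sketch that builds this request body in Python. As a design choice it sets include_global_state to false: the API defaults to true, which also pulls the cluster state and, by default, all feature states (system indices such as .kibana_*) into the snapshot. The helper name is made up for this example.

```python
import json


def partial_snapshot_body(indices, include_global_state=False):
    """Body for PUT _snapshot/<repo>/<name> limited to the given indices or patterns."""
    if not indices:
        # Refuse an empty list so the body always names the indices to back up.
        raise ValueError("pass at least one index name or pattern")
    return {"indices": list(indices), "include_global_state": include_global_state}


if __name__ == "__main__":
    print(json.dumps(partial_snapshot_body(["wiki_de", "wiki_en", "wiki_fr"]), indent=2))
```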

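Note that the result below also lists Kibana and other system indices besides wiki_de, wiki_en and wiki_fr: include_global_state defaults to true, and in that case the snapshot also captures all feature states (listed under feature_states). Verify that you were successful: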

```
GET /_snapshot/wiki/wiki_2022_02_03
```

```
{
  "snapshots" : [
    {
      "snapshot" : "wiki_2022_02_03",
      "uuid" : "AWzAEEwUQAm9S40sdcw0ZQ",
      "repository" : "wiki",
      "version_id" : 8000199,
      "version" : "8.0.1",
      "indices" : [
        ".kibana_8.0.1_001",
        "wiki_fr",
        ".transform-notifications-000002",
        ".kibana_task_manager_8.0.1_001",
        "wiki_de",
        ".kibana_8.0.0_001",
        "wiki_en",
        ".kibana_1",
        ".geoip_databases",
        ".kibana_task_manager_8.0.0_001",
        ...
      ],
      "data_streams" : [ ],
      "include_global_state" : true,
      "state" : "SUCCESS",
      "start_time" : "2022-03-02T12:24:20.048Z",
      "start_time_in_millis" : 1646223860048,
      "end_time" : "2022-03-02T12:24:35.265Z",
      "end_time_in_millis" : 1646223875265,
      "duration_in_millis" : 15217,
      "failures" : [ ],
      "shards" : {
        "total" : 27,
        "failed" : 0,
        "successful" : 27
      },
      "feature_states" : [
        {
          "feature_name" : "kibana",
          "indices" : [
            ".kibana_8.0.0_001",
            ".kibana_task_manager_8.0.1_001",
            ".kibana_1",
            ".kibana_8.0.1_001",
            ".apm-custom-link",
            ".kibana_task_manager_1",
            ".kibana_task_manager_8.0.0_001",
            ...
          ]
        },
        {
          "feature_name" : "geoip",
          "indices" : [
            ".geoip_databases"
          ]
        },
        {
          "feature_name" : "async_search",
          "indices" : [
            ".async-search"
          ]
        },
        {
          "feature_name" : "transform",
          "indices" : [
            ".transform-internal-007",
            ".transform-internal-005"
          ]
        },
        {
          "feature_name" : "fleet",
          "indices" : [
            ".fleet-enrollment-api-keys-7",
            ".fleet-policies-7"
          ]
        },
        {
          "feature_name" : "tasks",
          "indices" : [
            ".tasks"
          ]
        },
        {
          "feature_name" : "security",
          "indices" : [
            ".security-7"
          ]
        }
      ]
    }
  ],
  "total" : 1,
  "remaining" : 0
}
```
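Instead of reading through this response by eye, a script can check the fields that matter. A minimal sketch, assuming the parsed response as a dict; the function name and the expected_indices parameter are hypothetical. Besides SUCCESS, a snapshot can for example end up as PARTIAL or FAILED.

```python
def snapshot_succeeded(response, expected_indices=()):
    """Return (ok, problems) for a parsed GET _snapshot/<repo>/<name> response."""
    snapshots = response.get("snapshots", [])
    if not snapshots:
        return (False, ["no snapshot in response"])
    problems = []
    for snap in snapshots:
        name = snap.get("snapshot")
        if snap.get("state") != "SUCCESS":
            problems.append(f"{name}: state {snap.get('state')}")
        failed = snap.get("shards", {}).get("failed", 0)
        if failed:
            problems.append(f"{name}: {failed} failed shard(s)")
        # Every index we asked for should appear in the snapshot's index list.
        missing = sorted(set(expected_indices) - set(snap.get("indices", [])))
        if missing:
            problems.append(f"{name}: missing {', '.join(missing)}")
    return (not problems, problems)
```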

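Taking further snapshots into the same repository (each under a new name, e.g. with the current date) is incremental: Elasticsearch only copies data that is not already stored in the repository. Each snapshot is therefore a restorable version of your indices, a bit like commits in a Git repository on GitLab.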