HashiCorp Nomad Refresher - Jobs

Job Specifications

The Nomad job specification defines the schema for Nomad jobs. Nomad jobs are specified in HCL. The general hierarchy for a job is:

job
  \_ group
        \_ task

Each job file contains exactly one job. A job may have multiple groups, and each group may have multiple tasks. A group is a set of tasks that are co-located on the same machine.

Use the job init command to generate a sample job file. The -short flag produces a minimal file; leave it out to get a fully commented version:
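To make the hierarchy concrete, the following sketch writes a skeleton job file with two groups, one of which holds two co-located tasks, and then counts the blocks at each level. The job, group, and task names, the container images, and the count_blocks helper are hypothetical placeholders chosen for illustration; only the datacenter name is taken from the job files in this article.

```shell
#!/usr/bin/env bash
# Sketch: write a skeleton job file to illustrate job -> group -> task.
# All names and images below are hypothetical placeholders.
workdir="$(mktemp -d)"
jobfile="$workdir/skeleton.nomad"

cat > "$jobfile" <<'EOF'
job "example" {
  datacenters = ["instaryun"]

  # First group: two tasks that are placed together on one machine.
  group "web" {
    task "server" {
      driver = "docker"
      config {
        image = "httpd"
      }
    }

    task "log-shipper" {
      driver = "docker"
      config {
        image   = "busybox"
        command = "tail"
        args    = ["-f", "/dev/null"]
      }
    }
  }

  # Second group: scheduled independently of "web".
  group "cache" {
    task "redis" {
      driver = "docker"
      config {
        image = "redis:3.2"
      }
    }
  }
}
EOF

# Count the blocks of one type in a job file.
# $1 = block type (job, group, or task), $2 = job file
count_blocks() {
  grep -c "^[[:space:]]*$1 \"" "$2"
}

echo "jobs:   $(count_blocks job "$jobfile")"
echo "groups: $(count_blocks group "$jobfile")"
echo "tasks:  $(count_blocks task "$jobfile")"
```

On a machine with the Nomad CLI installed, running nomad job validate on the generated file should confirm that the structure is accepted.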

nomad job init -short redis.nomad

The file redis.nomad will be generated for you:

job "redis" {
  datacenters = ["instaryun"]

  group "cache" {
    network {
      port "db" {
        to = 6379
      }
    }

    task "redis" {
      driver = "docker"

      config {
        image = "redis:3.2"
        ports = ["db"]
      }

      resources {
        cpu    = 500
        memory = 256
      }
    }
  }
}

Running a Job

You can dry-run the job with:

nomad job plan redis.nomad

+ Job: "redis"

+ Task Group: "cache" (1 create)

+ Task: "redis" (forces create)

Scheduler dry-run:

- All tasks successfully allocated.

Job Modify Index: 0

To submit the job with version verification run:

nomad job run -check-index 0 redis.nomad

==> 2021-08-24T11:45:00+08:00: Monitoring evaluation "edead921"

2021-08-24T11:45:00+08:00: Evaluation triggered by job "redis"

2021-08-24T11:45:00+08:00: Allocation "204deabc" created: node "3d32b138", group "cache"

==> 2021-08-24T11:45:01+08:00: Monitoring evaluation "edead921"

2021-08-24T11:45:01+08:00: Evaluation within deployment: "60b35c44"

2021-08-24T11:45:01+08:00: Evaluation status changed: "pending" -> "complete"

==> 2021-08-24T11:45:01+08:00: Evaluation "edead921" finished with status "complete"

==> 2021-08-24T11:45:01+08:00: Monitoring deployment "60b35c44"

✓ Deployment "60b35c44" successful

2021-08-24T11:45:23+08:00

ID = 60b35c44

Job ID = redis

Job Version = 0

Status = successful

Description = Deployment completed successfully

Deployed

Task Group  Desired  Placed  Healthy  Unhealthy  Progress Deadline
cache       1        1       1        0          2021-08-24T11:55:22+08:00

The job ran successfully. Check the running containers on the selected minion:

docker ps

CONTAINER ID   IMAGE       COMMAND                  PORTS
7095dc48d9e9   redis:3.2   "docker-entrypoint.s…"   192.168.2.111:29853->6379/tcp, 192.168.2.111:29853->6379/udp
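The plan and run steps in this section can be chained in a script. The sketch below pulls the Job Modify Index out of the plan output and passes it to -check-index, so the job is only submitted if it was not changed on the server in the meantime. The function names are made up for illustration, and the parsing assumes the plain-text plan output shown in this section.

```shell
#!/usr/bin/env bash
# Sketch: feed the "Job Modify Index" from `nomad job plan` into
# `nomad job run -check-index`. Function names are hypothetical.

# Read plan output on stdin and print the modify index.
modify_index() {
  awk -F': ' '/^Job Modify Index:/ { print $2 }'
}

# Plan a job file, then submit it with the index the plan reported.
plan_and_run() {
  local jobfile="$1" plan_output status index
  plan_output="$(nomad job plan "$jobfile")"
  status=$?
  # `nomad job plan` exits with 1 when allocations would be created or
  # destroyed, so only a status above 1 is treated as a failure here.
  if [ "$status" -gt 1 ]; then
    echo "plan failed for $jobfile" >&2
    return "$status"
  fi
  index="$(printf '%s\n' "$plan_output" | modify_index)"
  nomad job run -check-index "$index" "$jobfile"
}

# Demonstrate the parsing on the tail of the redis plan output.
sample_plan='Scheduler dry-run:
- All tasks successfully allocated.

Job Modify Index: 0'

printf '%s\n' "$sample_plan" | modify_index
```

With the Nomad CLI configured, plan_and_run redis.nomad would perform both steps in one call.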

Example: WordPress

I want to use Nomad to set up WordPress in Docker on my minion server. The job has to download the latest WordPress release (with MD5 checksum verification), make the code available to an Apache2 web server image, and prepare the database connection with a few environment variables:

nano get_wordpress.nomad

job "get-wordpress" {
  datacenters = ["instaryun"]
  type        = "service"

  group "webs" {
    count = 1

    task "frontend" {
      driver = "docker"

      artifact {
        source      = "https://wordpress.org/wordpress-5.8.tar.gz"
        destination = "local/wordpress"

        options {
          checksum = "md5:b46d3968bcf55fb2b6982fc3ef767a01"
        }
      }

      config {
        image = "httpd"
      }

      service {
        port = "http"
      }

      env {
        DB_HOST = "nomad-minion"
        DB_USER = "web"
        DB_PASS = "loremipsum"
      }
    }

    network {
      port "http" {
        static = 80
      }
    }
  }
}
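The checksum given in the artifact block can be double-checked by hand before submitting the job. The sketch below compares a file's MD5 digest with an expected value. It assumes md5sum from GNU coreutils is available, and verify_md5 is a helper name made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: verify a file against an expected MD5 digest, similar in spirit
# to what the artifact checksum option asks Nomad to do. Assumes md5sum
# (GNU coreutils); verify_md5 is a hypothetical helper name.

verify_md5() {
  local file="$1" expected="$2" actual
  # md5sum prints "<digest>  <filename>"; keep only the digest.
  actual="$(md5sum "$file" | awk '{ print $1 }')"
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file (got $actual, expected $expected)" >&2
    return 1
  fi
}

# Self-contained demonstration on an empty file, whose MD5 digest is
# the well-known value d41d8cd98f00b204e9800998ecf8427e.
demo_file="$(mktemp)"
verify_md5 "$demo_file" "d41d8cd98f00b204e9800998ecf8427e"
```

For the job above, the equivalent check would be verify_md5 wordpress-5.8.tar.gz b46d3968bcf55fb2b6982fc3ef767a01 after downloading the archive from the source URL.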

A dry-run shows me that everything is OK:

nomad job plan get_wordpress.nomad

+ Job: "get-wordpress"

+ Task Group: "webs" (1 create)

+ Task: "frontend" (forces create)

Scheduler dry-run:

- All tasks successfully allocated.

Job Modify Index: 0

Then submit the job with the reported index:

nomad job run -check-index 0 get_wordpress.nomad

==> 2021-08-24T13:04:05+08:00: Monitoring evaluation "70277f6e"

2021-08-24T13:04:05+08:00: Evaluation triggered by job "get-wordpress"

==> 2021-08-24T13:04:06+08:00: Monitoring evaluation "70277f6e"

2021-08-24T13:04:06+08:00: Evaluation within deployment: "6da0fd11"

2021-08-24T13:04:06+08:00: Allocation "b470283f" created: node "3d32b138", group "webs"

2021-08-24T13:04:06+08:00: Evaluation status changed: "pending" -> "complete"

==> 2021-08-24T13:04:06+08:00: Evaluation "70277f6e" finished with status "complete"

==> 2021-08-24T13:04:06+08:00: Monitoring deployment "6da0fd11"

✓ Deployment "6da0fd11" successful

2021-08-24T13:05:06+08:00

ID = 6da0fd11

Job ID = get-wordpress

Job Version = 0

Status = successful

Description = Deployment completed successfully

Deployed

Task Group  Desired  Placed  Healthy  Unhealthy  Progress Deadline
webs        1        1       1        0          2021-08-24T13:15:04+08:00

Verify Job Status

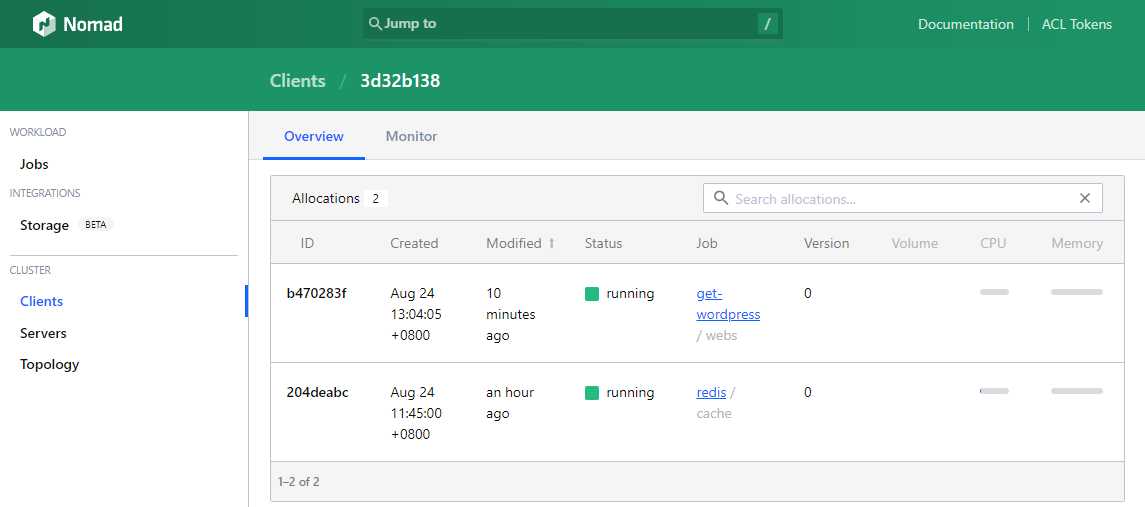

Check the Nomad UI - both jobs are running on our minion:

To get the same information from the Nomad CLI:

nomad job status

nomad job status get-wordpress

The latter shows the allocation ID of your job, which you need to exec into the corresponding container from your master server:

Allocations

ID        Node ID   Task Group  Version  Desired  Status   Created     Modified
b470283f  3d32b138  webs        0        run      running  21m37s ago  20m38s ago
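When scripting, the allocation ID can be pulled out of this table instead of being copied by hand. The sketch below assumes the plain-text layout shown here, with the Allocations section at the end of the status output; alloc_ids is a hypothetical function name.

```shell
#!/usr/bin/env bash
# Sketch: list allocation IDs from `nomad job status <job>` output.
# Assumes the text layout shown above; alloc_ids is a hypothetical name.

# Read job status output on stdin, print one allocation ID per line.
alloc_ids() {
  awk '
    /^Allocations/ { in_allocs = 1; next }   # section header
    in_allocs && $1 == "ID" { next }         # column header row
    in_allocs && NF > 0 { print $1 }         # data rows
  '
}

# Demonstration on the sample output from this article.
sample_status='Allocations
ID        Node ID   Task Group  Version  Desired  Status   Created     Modified
b470283f  3d32b138  webs        0        run      running  21m37s ago  20m38s ago'

printf '%s\n' "$sample_status" | alloc_ids
```

Against a live cluster the same function would be used as nomad job status get-wordpress | alloc_ids.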

You can use the allocation ID to get into the container and, for example, check whether the environment variables set in the Nomad job were actually applied to the container:

nomad alloc exec b470283f /bin/bash

root@39b3eb6e2809:/usr/local/apache2# set | grep DB

DB_HOST=nomad-minion

DB_PASS=loremipsum

DB_USER=web
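The same check can be done without an interactive shell by running a single command in the allocation and inspecting its output. In the sketch below, missing_vars is a helper name made up for illustration; it reads KEY=VALUE lines and reports any expected variable that is absent.

```shell
#!/usr/bin/env bash
# Sketch: confirm that expected variables appear in `env` output.
# missing_vars is a hypothetical helper name.

# Usage: <env output> | missing_vars NAME...
# Prints each NAME that has no NAME=... line on stdin and
# returns non-zero if at least one is missing.
missing_vars() {
  local env_dump name rc=0
  env_dump="$(cat)"
  for name in "$@"; do
    if ! printf '%s\n' "$env_dump" | grep -q "^${name}="; then
      echo "$name"
      rc=1
    fi
  done
  return "$rc"
}

# Demonstration on the values seen in the container above.
sample_env='DB_HOST=nomad-minion
DB_PASS=loremipsum
DB_USER=web'

if printf '%s\n' "$sample_env" | missing_vars DB_HOST DB_USER DB_PASS; then
  echo "all variables present"
fi
```

Against the running allocation this would look like nomad alloc exec b470283f env | missing_vars DB_HOST DB_USER DB_PASS.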

Application Logs

You can get the application logs with the following command. In the case of the http-echo container, it returns the web server logs:

nomad alloc logs b470283f

2021/08/29 09:48:29 192.168.2.111:8080 192.168.2.112:65011 "GET / HTTP/1.1" 200 55 "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) Gecko/20100101 Firefox/93.0" 26.3µs

2021/08/29 09:48:29 192.168.2.111:8080 192.168.2.112:65011 "GET /favicon.ico HTTP/1.1" 200 55 "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) Gecko/20100101 Firefox/93.0" 12.87µs

2021/08/29 09:49:56 192.168.2.111:8080 192.168.2.110:34042 "GET / HTTP/1.1" 200 55 "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.164 Safari/537.36" 27.916µs

2021/08/29 09:49:56 192.168.2.111:8080 192.168.2.110:34042 "GET /favicon.ico HTTP/1.1" 200 55 "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.164 Safari/537.36" 48.44µs

You can also keep the command running to follow the log while you are working:

nomad alloc logs -f 389b253b

Or just check the last n lines if you are working with a huge log file:

nomad alloc logs -tail -n 10 389b253b
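For a large log it can also help to summarise rather than scroll. The sketch below counts requests per HTTP status code. It assumes the access-log layout shown above, where the status code is the eighth whitespace-separated field, and count_by_status is a function name made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: count requests per HTTP status code in access-log lines.
# Assumes the status code is field 8, as in the log lines shown above;
# count_by_status is a hypothetical name.

count_by_status() {
  awk '{ count[$8]++ } END { for (s in count) print s, count[s] }' | sort
}

# Demonstration on two of the log lines from this article.
count_by_status <<'EOF'
2021/08/29 09:48:29 192.168.2.111:8080 192.168.2.112:65011 "GET / HTTP/1.1" 200 55 "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) Gecko/20100101 Firefox/93.0" 26.3µs
2021/08/29 09:48:29 192.168.2.111:8080 192.168.2.112:65011 "GET /favicon.ico HTTP/1.1" 200 55 "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) Gecko/20100101 Firefox/93.0" 12.87µs
EOF
```

With a live allocation the input would come from the logs command instead, for example nomad alloc logs 389b253b | count_by_status.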