HashiCorp Nomad to set up an OSTicket Helpdesk - Part I

I now have a Docker Compose file that provisions an instance of the OSTicket helpdesk with a MariaDB backend. Next, I need to get it running on my Nomad/Consul cluster.

Data Persistence

The docker-compose.yml adds a volume mount to the MariaDB container. This ensures that the generated database is not destroyed when the container shuts down:

```yaml
volumes:
  - /opt/osticket/db:/var/lib/mysql
```

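In Nomad this takes two steps. First, the host directory is declared as a host volume in the Nomad client configuration. Then the job specification mounts that volume into the container.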

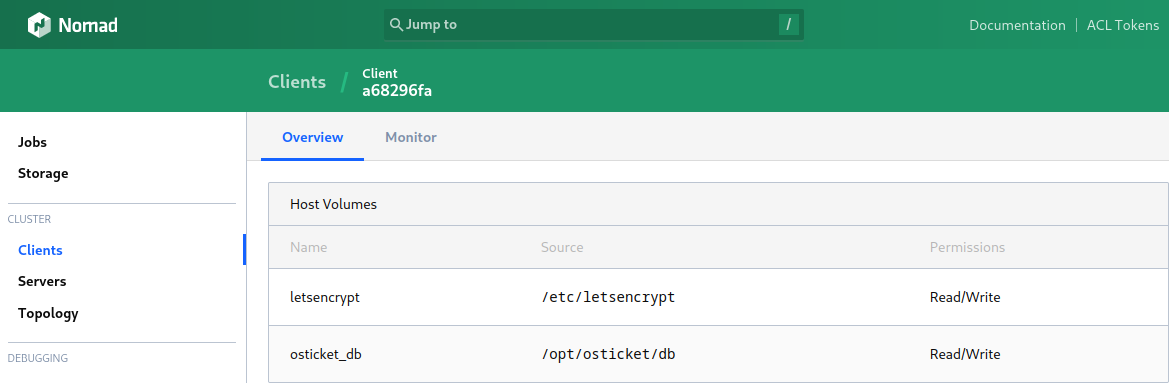

Client Configuration

First, we need to declare a host volume in which MariaDB can persist the data it generates. Open the Nomad client configuration and add the following. The Nomad documentation describes the `host_volume` block and the Docker driver `plugin` block in detail.

```sh
nano /etc/nomad.d/client.hcl
```

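```hcl
client {
  enabled = true
  servers = ["myhost:port"]

  # Existing volume: gives containers access to the Let's Encrypt certificates
  host_volume "letsencrypt" {
    path      = "/etc/letsencrypt"
    read_only = false
  }

  # New volume: persistent storage for the MariaDB data directory
  host_volume "osticket_db" {
    path      = "/opt/osticket/db"
    read_only = false
  }
}

# Docker Configuration
plugin "docker" {
  # Docker driver options have to sit inside a config block
  config {
    volumes {
      enabled      = true
      selinuxlabel = "z"
    }

    allow_privileged = false
    allow_caps       = ["chown", "net_raw"]
  }
}
```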

This client already uses a host volume to give containers access to the TLS certificates generated by Let's Encrypt. I only have to add the database volume below it. Make sure that the directory you define as the volume path actually exists:

```sh
mkdir -p /opt/osticket/db
```

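Restart the Nomad service:

```sh
service nomad restart
```

Then verify that the volume was picked up. For example, `nomad node status -self -verbose` lists the host volumes registered by the local client.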

Job Specification

In the job specification, define the volume inside the `group` block:

```hcl
volume "osticket_db" {
  type      = "host"
  read_only = false
  source    = "osticket_db" # name of the host_volume in client.hcl
}
```

Finally, use the defined volume inside the `task` block:

```hcl
volume_mount {
  volume      = "osticket_db"
  destination = "/var/lib/mysql" #<-- in the container
  read_only   = false
}
```
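The following minimal sketch makes the nesting explicit, with everything unrelated to storage stripped out. The job, group and task names (`example`, `db`) and the group-level volume label `data` are hypothetical. I gave the group volume a different label than the client's host volume on purpose. That way you can see that `source` refers to the `host_volume` name from client.hcl, while `volume_mount.volume` refers to the label of the group's `volume` block.

```hcl
job "example" {
  datacenters = ["mydatacenter"]

  group "db" {
    # Group level: claim the host volume declared in client.hcl
    volume "data" {
      type      = "host"
      source    = "osticket_db" # must match the host_volume name on the client
      read_only = false
    }

    task "db" {
      driver = "docker"

      config {
        image = "mariadb:latest"
      }

      # Task level: mount the group volume, referenced by its group label
      volume_mount {
        volume      = "data"
        destination = "/var/lib/mysql"
        read_only   = false
      }
    }
  }
}
```

In the actual OSTicket job both names are simply `osticket_db`, which works just as well.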

The OSTicket frontend needs no persistence or volume mounts. I already added all customized files to the image through its Dockerfile.

Environment Variables

The only things still missing are the environment variables that allow our two containers, frontend and database, to connect. They go into the `env` block of the corresponding `task`. `MYSQL_USER`, `MYSQL_PASSWORD` and `MYSQL_DATABASE` must have the same values in both tasks:

MariaDB

```hcl
env {
  MYSQL_ROOT_PASSWORD = "secret"
  MYSQL_USER          = "osticket"
  MYSQL_PASSWORD      = "secret"
  MYSQL_DATABASE      = "osticket"
  # just an environment variable; it does not name the Docker container
  CONTAINER_NAME      = "osticket-db"
}
```

OSTicket

```hcl
env {
  CONTAINER_NAME = "osticket"
  MYSQL_USER     = "osticket"
  MYSQL_HOST     = "osticket-db"
  MYSQL_PASSWORD = "secret"
  MYSQL_DATABASE = "osticket"
}
```
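The user, password and database name must be identical in both tasks, so one option is to define them only once. This sketch assumes Nomad 1.0 or later, whose HCL2 job files accept a top-level `locals` block. The local names `db_user`, `db_password` and `db_name` are hypothetical, and the values are the same placeholders used above:

```hcl
# Top level of the job file, outside the job block (hypothetical local names)
locals {
  db_user     = "osticket"
  db_password = "secret"
  db_name     = "osticket"
}

# In task "osticket-db-container":
env {
  MYSQL_ROOT_PASSWORD = "secret"
  MYSQL_USER          = local.db_user
  MYSQL_PASSWORD      = local.db_password
  MYSQL_DATABASE      = local.db_name
}

# In task "osticket-container":
env {
  MYSQL_HOST     = "osticket-db"
  MYSQL_USER     = local.db_user
  MYSQL_PASSWORD = local.db_password
  MYSQL_DATABASE = local.db_name
}
```

With this approach a typo in one of the two tasks can no longer produce mismatched credentials.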

Run the Job File

```
$ nomad plan osticket.tf
+ Job: "osticket"
+ Task Group: "docker" (1 create)
  + Task: "osticket-container" (forces create)
  + Task: "osticket-db-container" (forces create)

Scheduler dry-run:
- All tasks successfully allocated.

Job Modify Index: 0
To submit the job with version verification run:

nomad job run -check-index 0 osticket.tf
```

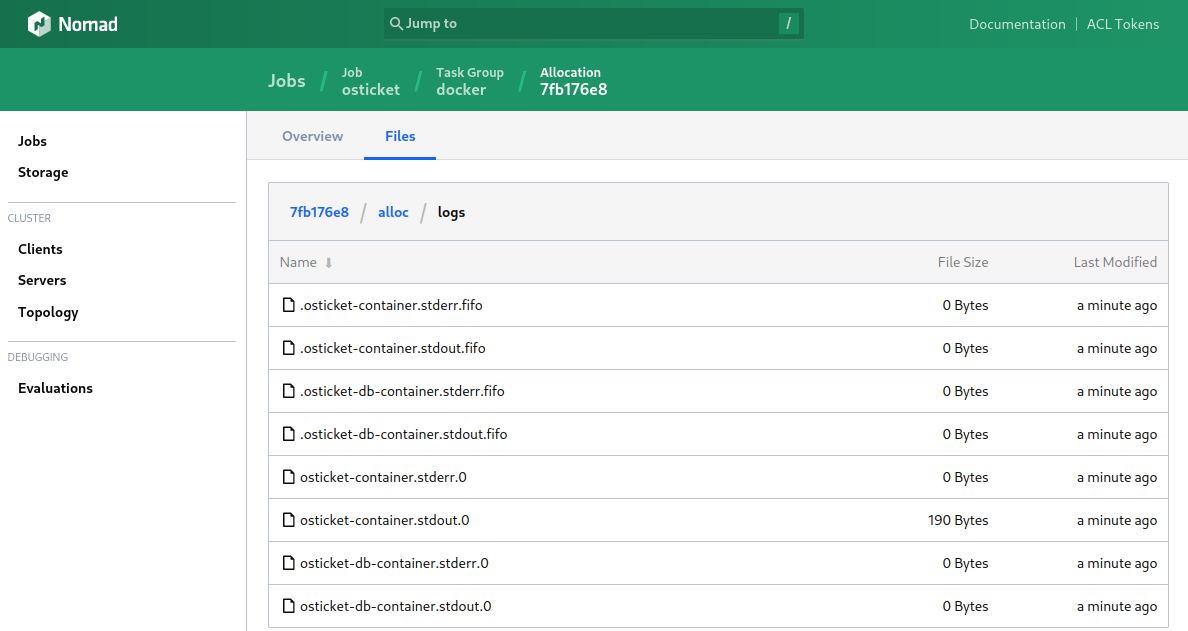

Running the job looks good, and the ERROR logs remain empty:

After a little tweaking, I now have the following Nomad job file that brings up both services:

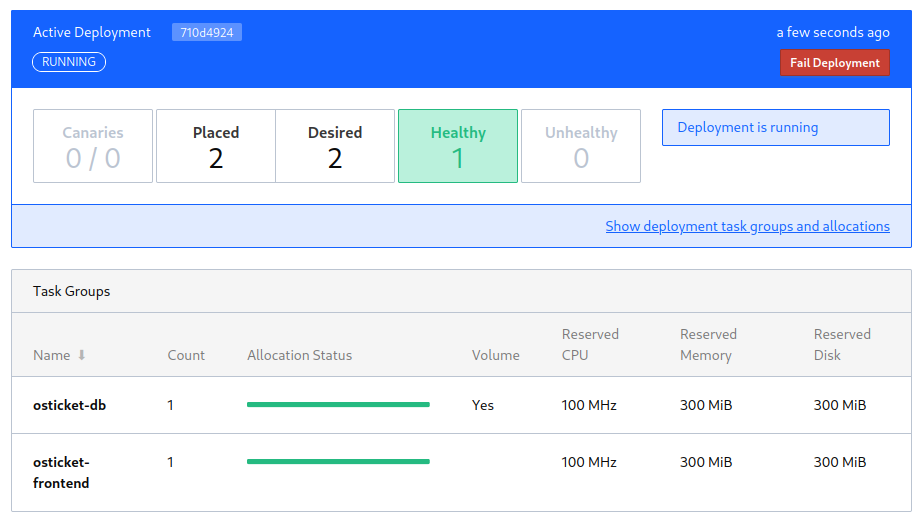

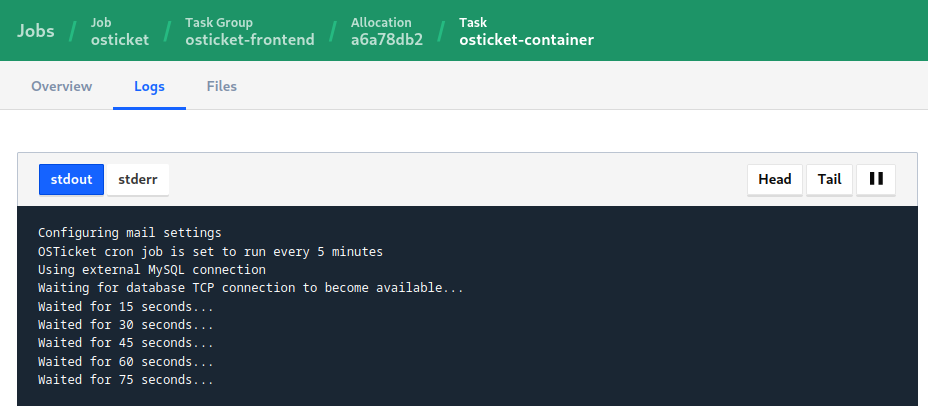

But when I bring the services up, OSTicket reports that it cannot find my database. As a result, the frontend never reaches a healthy state and the deployment eventually fails. This is what I have to look into next.

Complete Job File (Part I)

```hcl
job "osticket" {
  datacenters = ["mydatacenter"]
  type        = "service"

  group "osticket-db" {
    count = 1

    network {
      mode = "host"

      port "tcp" {
        static = 3306
        to     = 3306
      }
    }

    update {
      max_parallel      = 1
      min_healthy_time  = "10s"
      healthy_deadline  = "5m"
      progress_deadline = "10m"
      auto_revert       = true
      auto_promote      = true
      canary            = 1
    }

    # Host volume declared in the client's client.hcl
    volume "osticket_db" {
      type      = "host"
      read_only = false
      source    = "osticket_db"
    }

    restart {
      attempts = 10
      interval = "5m"
      delay    = "25s"
      mode     = "delay"
    }

    task "osticket-db-container" {
      driver = "docker"

      config {
        image        = "mariadb:latest"
        ports        = ["tcp"]
        network_mode = "host"
        force_pull   = false
      }

      volume_mount {
        volume      = "osticket_db"
        destination = "/var/lib/mysql" #<-- in the container
        read_only   = false
      }

      env {
        MYSQL_ROOT_PASSWORD = "secret"
        MYSQL_USER          = "osticket"
        MYSQL_PASSWORD      = "secret"
        MYSQL_DATABASE      = "osticket"
        # CONTAINER_NAME    = "osticket-db"
      }
    }
  }

  group "osticket-frontend" {
    count = 1

    network {
      mode = "host"

      port "http" {
        static = 8080
        # with Docker network_mode = "host" no port mapping takes place:
        # the container listens on port 80 of the host itself, not on 8080
        to     = 80
      }
    }

    update {
      max_parallel      = 1
      min_healthy_time  = "10s"
      healthy_deadline  = "5m"
      progress_deadline = "10m"
      auto_revert       = true
      auto_promote      = true
      canary            = 1
    }

    service {
      name = "osticket-frontend"
      port = "http"
      tags = ["frontend"]

      check {
        name     = "HTTP Health"
        path     = "/"
        type     = "http"
        protocol = "http"
        interval = "10s"
        timeout  = "2s"
      }
    }

    task "osticket-container" {
      driver = "docker"

      config {
        image        = "my.gitlab.com:12345/server/osticket-docker:latest"
        ports        = ["http"]
        network_mode = "host"
        force_pull   = false

        auth {
          username = "myuser"
          password = "secret"
        }
      }

      env {
        CONTAINER_NAME = "osticket"
        MYSQL_USER     = "osticket"
        # Docker Compose service name; likely not resolvable under Nomad host networking
        MYSQL_HOST     = "osticket-db"
        MYSQL_PASSWORD = "secret"
        MYSQL_DATABASE = "osticket"
      }
    }
  }
}
```
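One possible direction for the failing database lookup is sketched below. It rests on assumptions I have not verified against this setup. The idea is to register MariaDB in Consul with a `service` block in the `osticket-db` group. The frontend task then renders `MYSQL_HOST` from the Consul catalog with a `template` block. The file name `local/db.env` is chosen for illustration. The sketch also assumes that the custom OSTicket image accepts a bare IP address in `MYSQL_HOST` and connects on the default port 3306.

```hcl
# In group "osticket-db": register the database in Consul
service {
  name = "osticket-db"
  port = "tcp"

  check {
    type     = "tcp"
    interval = "10s"
    timeout  = "2s"
  }
}

# In task "osticket-container": replaces the static MYSQL_HOST entry,
# which should then be removed from the env block
template {
  data        = <<EOH
{{ range service "osticket-db" }}MYSQL_HOST={{ .Address }}
{{ end }}
EOH
  destination = "local/db.env"
  env         = true # expose the rendered KEY=value lines as environment variables
}
```

The `service` lookup only returns instances whose health checks pass. Until the database is healthy, the variable may therefore be missing. Once it appears, the template's default change mode restarts the frontend task with the new value.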