Hashicorp Nomad with Consul Service Discovery

Following this Dojo task I ended up with a Nomad-deployed Consul server running in dev mode on my Nomad minion. In the final step I wanted to use this service to tell FabioLB which ports the frontend containers came up on. Fabio would then generate routes for them and start load balancing incoming traffic across the random ports of those containers... which did not happen.

I went ahead and installed the Consul Master/Minion cluster the regular way and tried it again. This time everything worked as expected.

Continuation of Hashicorp Nomad Dojo

Consul Service Discovery

When two services in a Nomad cluster need to communicate, they need to know where to find each other; this is called service discovery. Because Nomad is purely a cluster manager and scheduler, you will need another piece of software to help you with service discovery: Consul.

Use Nomad to Deploy Consul

To deploy Consul we can execute the binary directly through Nomad using one of its execute drivers. There are two options: exec and raw_exec. The exec driver runs the task in an isolated environment, whereas raw_exec runs it without isolation and as the same user as the Nomad process. If we start Nomad as root, raw_exec tasks run as root as well, which is usually not what you want. That's why raw_exec should be used with extreme caution and is disabled by default.

Nomad supports downloading http, https, git, hg and S3 artifacts. If these artifacts are archives (zip, tgz, bz2, xz), they are automatically unpacked before the task starts.

Create a file called consul.nomad on the Nomad master server. In this file you need to define a job, a group and a task. The task downloads the Consul binary (you can get the SHA256 sum from the Downloads Listing), unzips it into the artifacts directory and executes Consul in dev mode:

job "consul" {
  datacenters = ["instaryun"]
  type        = "service"

  group "consul" {
    count = 1

    task "consul" {
      driver = "exec"

      config {
        command = "artifacts/consul"
        args    = ["agent", "-dev"]
      }

      artifact {
        source      = "https://releases.hashicorp.com/consul/1.12.2/consul_1.12.2_linux_amd64.zip"
        destination = "local/artifacts/consul"
        mode        = "file"

        options {
          checksum = "sha256:35f85098f5956ef3aca66ec2d2d2a803d1f3359b4dec13382c6ac895344a1f4c"
        }
      }
    }
  }
}
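Before pasting a checksum into the artifact block, it can be worth verifying it against a locally downloaded copy of the archive. A minimal sketch, assuming a Linux host with sha256sum available; the helper name verify_sha256 is made up for illustration and is not part of Nomad:

```shell
# verify_sha256 FILE EXPECTED_HEX
# Succeeds if the SHA256 sum of FILE equals EXPECTED_HEX, fails otherwise.
verify_sha256() {
  file=$1
  expected=$2
  # sha256sum prints "<hex>  <filename>"; keep only the hex digest
  actual=$(sha256sum "$file" | awk '{print $1}')
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file (got $actual)" >&2
    return 1
  fi
}

# Example (not run here, needs network access):
#   curl -sLO https://releases.hashicorp.com/consul/1.12.2/consul_1.12.2_linux_amd64.zip
#   verify_sha256 consul_1.12.2_linux_amd64.zip 35f85098f5956ef3aca66ec2d2d2a803d1f3359b4dec13382c6ac895344a1f4c
```

The checksum is computed over the downloaded zip file, not over the extracted binary.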

Note that the destination is relative to the working directory of your Nomad process as defined in your Nomad configuration file, e.g. /opt/nomad/data, plus an allocation ID and the name of the task that created it (here the same as the job name). The full path to the Consul binary created by the job above was in my case: /opt/nomad/data/alloc/346a1839-1e7e-c85f-0f81-7f6b71d64254/consul/artifacts/consul.

Also note that, because the binary ends up in a subdirectory, you have to give the relative path to it in the command variable: artifacts/consul.

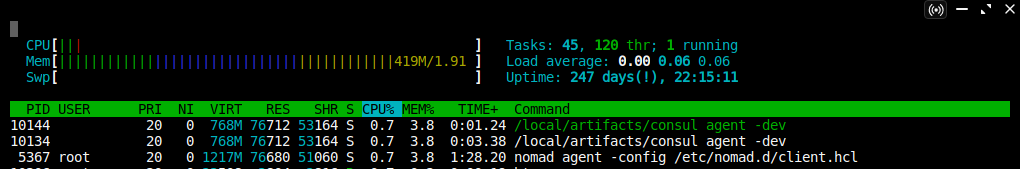

After execution you will see that the service has been successfully deployed:

Additionally, all Consul service ports are bound to localhost:

netstat -tlnp | grep consul
tcp 0 0 127.0.0.1:8300 LISTEN 10134/consul
tcp 0 0 127.0.0.1:8301 LISTEN 10134/consul
tcp 0 0 127.0.0.1:8302 LISTEN 10134/consul
tcp 0 0 127.0.0.1:8500 LISTEN 10134/consul
tcp 0 0 127.0.0.1:8502 LISTEN 10134/consul
tcp 0 0 127.0.0.1:8600 LISTEN 10134/consul
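Whether anything listens on a non-loopback address can also be checked mechanically. The sketch below filters netstat-style lines on stdin and prints every TCP listener that is not bound to 127.0.0.1 or ::1. The function name is hypothetical, and it assumes the local address sits in the fourth column, as in the output above:

```shell
# Reads netstat-style lines from stdin and prints the local address of
# every TCP listener that is NOT bound to a loopback address.
non_loopback_listeners() {
  awk '$1 ~ /^tcp/ && $4 !~ /^(127\.0\.0\.1|::1):/ { print $4 }'
}

# Example (not run here):
#   netstat -tlnp | grep consul | non_loopback_listeners
```

For the dev-mode agent shown above this prints nothing, since every port is bound to 127.0.0.1.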

Register your Service with Consul

We can now add a service block to our frontend job file that tells Consul how to verify that the service is operational. This is done by sending an HTTP request to the randomly assigned HTTP port on localhost:

job "frontend" {
  datacenters = ["instaryun"]
  type        = "service"

  group "frontend" {
    count = 2

    scaling {
      enabled = true
      min     = 2
      max     = 3
    }

    network {
      mode = "host"

      port "http" {
        to = 8080
      }
    }

    service {
      name = "frontend"
      tags = [
        "frontend",
        "urlprefix-/"
      ]
      port = "http"

      check {
        name     = "Frontend HTTP Healthcheck"
        path     = "/"
        type     = "http"
        protocol = "http"
        interval = "10s"
        timeout  = "2s"
      }
    }

    task "frontend" {
      driver = "docker"

      config {
        image = "thedojoseries/frontend:latest"
        ports = ["http"]
      }
    }
  }
}

Note that I am also adding a tag urlprefix-/ that will be used by the load balancer later on for routing. In the example above there is no prefix, so the web frontend will be available on the domain root.
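Fabio looks for service tags that start with urlprefix- and treats the remainder as the route. As a rough illustration of that naming convention only (this is not Fabio's code, and route_prefixes is a hypothetical helper), the prefix can be stripped like this:

```shell
# Prints the route part of every tag that starts with "urlprefix-".
# Tags without that prefix are ignored.
route_prefixes() {
  for tag in "$@"; do
    case $tag in
      urlprefix-*) printf '%s\n' "${tag#urlprefix-}" ;;
    esac
  done
}

# With the tags from the job above, only the second tag yields a route:
route_prefixes frontend urlprefix-/   # prints "/"
```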

After running the app we can use Consul's REST API to check if our frontend service has been registered by running the following queries on the Nomad minion server:

curl localhost:8500/v1/catalog/services

{
  "consul": [],
  "frontend": [
    "frontend",
    "urlprefix-/"
  ]
}

Here we can see the service as well as the tags we provided. If this command returns nothing, make sure that Consul is up and running before you start your app!
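If the frontend job is started from a script, the catalog check can be turned into a small guard. A sketch, assuming the catalog JSON is piped in on stdin; has_service is a hypothetical helper, and the grep match is a crude stand-in for a real JSON parser:

```shell
# has_service NAME
# Reads the JSON from /v1/catalog/services on stdin and succeeds if NAME
# appears as a key (a quoted string followed by a colon), not merely as a tag.
has_service() {
  grep -q "\"$1\"[[:space:]]*:"
}

# Example (not run here, needs a running Consul agent):
#   curl -s localhost:8500/v1/catalog/services | has_service frontend && echo registered
```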

To get the ports of the two service instances, we can query the health endpoint:

curl 'http://localhost:8500/v1/health/service/frontend?passing'

[
  {
    ...
    "Service": {
      "ID": "_nomad-task-6b0d87da-cfc7-b281-2d2c-b69c551ec10c-group-frontend-frontend-http",
      "Service": "frontend",
      "Tags": [
        "frontend",
        "urlprefix-/"
      ],
      "Address": "my.minion.com",
      "TaggedAddresses": {
        "lan_ipv4": {
          "Address": "my.minion.com",
          "Port": 27906
        },
        "wan_ipv4": {
          "Address": "my.minion.com",
          "Port": 27906
        }
      },
      "Meta": {
        "external-source": "nomad"
      },
      "Port": 27906,
      "Weights": {
        "Passing": 1,
        "Warning": 1
      },
      "EnableTagOverride": false,
      "Proxy": {
        "Mode": "",
        "MeshGateway": {},
        "Expose": {}
      },
      "Connect": {},
      "CreateIndex": 154,
      "ModifyIndex": 154
    },
    ...
    "Service": {
      "ID": "_nomad-task-c269d3dc-4fa3-a05f-1234-3515d694f61c-group-frontend-frontend-http",
      "Service": "frontend",
      "Tags": [
        "frontend",
        "urlprefix-/"
      ],
      "Address": "my.minion.com",
      "TaggedAddresses": {
        "lan_ipv4": {
          "Address": "my.minion.com",
          "Port": 24123
        },
        "wan_ipv4": {
          "Address": "my.minion.com",
          "Port": 24123
        }
      },
      "Meta": {
        "external-source": "nomad"
      },
      "Port": 24123,
      "Weights": {
        "Passing": 1,
        "Warning": 1
      },
      "EnableTagOverride": false,
      "Proxy": {
        "Mode": "",
        "MeshGateway": {},
        "Expose": {}
      },
      "Connect": {},
      "CreateIndex": 139,
      "ModifyIndex": 139
    }
    ...
  }
]

Here we see that the two service instances run on ports 24123 and 27906, which can be confirmed by running:

docker ps
CONTAINER ID   IMAGE                           PORTS
eec03c397329   thedojoseries/frontend:latest   my.minion.com:24123->8080/tcp
bf06acaf078e   thedojoseries/frontend:latest   my.minion.com:27906->8080/tcp
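The port lookup can be scripted as well. The sketch below pulls the distinct "Port" values out of the health response with grep and sed. The name service_ports is hypothetical, and a JSON-aware tool such as jq would be more robust where it is installed:

```shell
# Reads the JSON from /v1/health/service/<name> on stdin and prints each
# distinct "Port" value once, in ascending numeric order.
service_ports() {
  grep -o '"Port":[[:space:]]*[0-9][0-9]*' | sed 's/[^0-9]//g' | sort -un
}

# Example (not run here, needs a running Consul agent):
#   curl -s 'http://localhost:8500/v1/health/service/frontend?passing' | service_ports
```

Because every instance reports its port three times (once directly and twice under TaggedAddresses), the output is deduplicated.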

Adding the Fabio Load Balancer

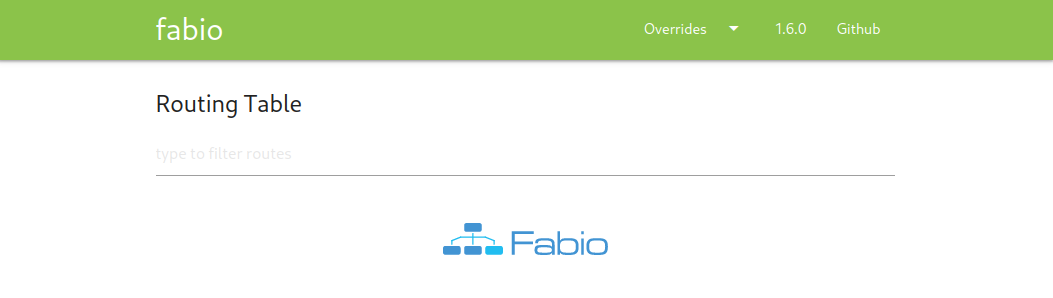

Disclaimer: So far I was unable to register routes with Fabio

We now have two instances of our web service that need to receive web traffic through a load balancer. The solution preferred by Hashicorp is Fabio. Fabio is an HTTP and TCP reverse proxy that configures itself with data from Consul. Nomad has three types of schedulers: service, batch and system. You should configure the job fabio to be of type system, so that an instance runs on every client node. Read more about Nomad Schedulers.

Define a job called fabio in fabio.nomad:

- Define a group called fabio.

- Define a task called fabio.

- Fabio should be using the Docker driver.

- The image for this container should be fabiolb/fabio.

- Usually, Docker containers run in a network mode called Bridge. In a bridge mode, containers run on a different network stack than the host. Because Fabio needs to be able to communicate easily with Consul, which is running as a process on the host and not as a Docker container, you should configure fabio to run in a network mode called host instead (which will run the container in the same network stack as the host).

- Nomad should allocate 200 MHz of cpu and 128 MB of memory to this task.

- You should allocate two static ports for Fabio: 9999 (load balancer) and 9998 (UI).


job "fabio" {
  datacenters = ["instaryun"]
  type        = "system"

  group "fabio" {
    network {
      port "http" {
        static = 9998
      }

      port "lb" {
        static = 9999
      }
    }

    task "fabio" {
      driver = "docker"

      config {
        image        = "fabiolb/fabio:latest"
        network_mode = "host"
        ports        = ["http", "lb"]
      }

      resources {
        cpu    = 200
        memory = 128
      }
    }
  }
}

Now check the Fabio log to see if there are any error messages:

nomad status fabio

Allocations
ID        Node ID   Task Group  Version  Desired  Status   Created   Modified
f1510fb9  005f708b  fabio       0        run      running  6m3s ago  5m59s ago

nomad alloc logs -stderr 5606f503

2022/06/07 06:22:55 [WARN] No route for my.minion.com:9999/

No routes ¯\_(ツ)_/¯