HashiCorp Consul Refresher - Service Discovery

This article is a bit messy... a lot of things changed since the 0.x releases of Consul and I ran into a few bumps on the way. I learnt a lot and documented the errors and their solutions, but I will have to write up a clean version of this to have a step-by-step guide for future reference.

- Installation

- Test-Running

- Service Configuration

- Run Consul as a Service

- Service Configuration

- Registering a Service in Nomad

- Default Consul Configuration

- Consul Service Error Message

Nomad schedules workloads of various types across a cluster of generic hosts. Because of this, placement is not known in advance and you will need to use service discovery to connect tasks to other services deployed across your cluster. Nomad integrates with Consul to provide service discovery and monitoring.

Consul allows services to easily register themselves in a central catalog when they start up. When an application or service needs to communicate with another component, the central catalog can be queried using either an API or DNS interface to provide the required addresses.
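As a rough sketch of those two interfaces: assuming an agent listens on the default HTTP port 8500 and DNS port 8600, the lookups for the http-echo service registered later in this article could be built as shown here. The helper names are made up for illustration; only the endpoint path and the .service.consul suffix come from Consul itself.

```shell
# Hypothetical helpers for the two lookup interfaces.

# HTTP API: URL that lists all instances of a service in the catalog.
# $1 = agent address, $2 = service name
consul_catalog_url() {
  printf 'http://%s:8500/v1/catalog/service/%s\n' "$1" "$2"
}

# DNS interface: services resolve under <name>.service.consul.
# $1 = service name
consul_dns_name() {
  printf '%s.service.consul\n' "$1"
}

# Usage (needs a running agent):
#   curl -s "$(consul_catalog_url 127.0.0.1 http-echo)"
#   dig @127.0.0.1 -p 8600 "$(consul_dns_name http-echo)" SRV
```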

Installation

Debian11

curl -fsSL https://apt.releases.hashicorp.com/gpg | apt-key add -

apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main"

apt-get update && apt-get install consul

RHEL8

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo https://rpm.releases.hashicorp.com/RHEL/hashicorp.repo

sudo yum -y install consul

Consul Commandline Autocompletion

consul -autocomplete-install

complete -C /usr/bin/consul consul

Test-Running

You can start the Consul Agent with configuration flags:

consul agent -datacenter=instaryun -data-dir=/opt/consul -bind '{{ GetInterfaceIP "enp2s0" }}'

To get started I will execute the service in DEV mode on my master:

consul agent -dev -datacenter=instaryun -data-dir=/opt/consul -bind '{{ GetInterfaceIP "enp3s0" }}'

The Consul server GUI will be available on Port 8500 and I can tunnel it through SSH with:

ssh myuser@192.168.2.110 -L8500:localhost:8500
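To confirm the tunnel works without opening a browser, the HTTP API can be queried instead. A minimal sketch, assuming the tunnel from above is open: /v1/status/leader is a standard Consul endpoint that returns the leader address as a JSON string (an empty string while no leader is elected), whereas the helper name has_leader is invented here.

```shell
# Succeeds when the body returned by /v1/status/leader holds a
# non-empty address such as "192.168.2.110:8300".
# $1 = raw response body
has_leader() {
  case "$1" in
    '""' | '') return 1 ;;
    *) return 0 ;;
  esac
}

# Usage (through the SSH tunnel):
#   has_leader "$(curl -s http://localhost:8500/v1/status/leader)" && echo "leader elected"
```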

Service Configuration

Again - just like with Nomad - I discovered that the default config is missing on Debian11, while it is located in /etc/consul.d/ on RHEL8. I will list the default consul.hcl config at the end of this article.

We can use this configuration file - instead of adding all variables to the consul agent command:

/usr/bin/consul agent -config-file=/etc/consul.d/consul.hcl

/usr/bin/consul agent -config-dir=/etc/consul.d

We can now add our configuration to /etc/consul.d/consul.hcl:

node_name = "consul-master"

datacenter = "instaryun"

data_dir = "/opt/consul"

# allow all clients

client_addr = "0.0.0.0"

# enable the ui

ui_config{

enabled = true

}

# make this instance the master server

server = true

# add your servers IP

bind_addr = "192.168.2.110"

advertise_addr = "192.168.2.110"

# For testing I only have 1 - later 5 master servers are recommended

bootstrap_expect=1

# I only have 1 other client server. Set it to allow constant retries because it is not yet configured

retry_join = ["192.168.2.111"]

enable_syslog = true

log_level = "INFO"

# https://www.consul.io/docs/agent/options#performance

performance{

raft_multiplier = 1

}
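The comment about the number of servers comes down to Raft quorum arithmetic: a cluster of N servers needs N/2 + 1 of them (integer division) to agree, so it tolerates N minus that many failures. A small sketch of the calculation, with illustrative function names:

```shell
# Number of servers that must agree for a cluster of $1 servers.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of servers that may fail before quorum is lost.
failure_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 3 5; do
  echo "servers=$n quorum=$(quorum "$n") tolerated_failures=$(failure_tolerance "$n")"
done
```

With bootstrap_expect = 1 as in this test setup, losing the single server means losing the cluster; three servers survive one failure and five survive two.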

You can test run the configuration file - see if it is throwing us any errors:

/usr/bin/consul agent -config-file=/etc/consul.d/consul.hcl

Or run the validate command:

consul validate /etc/consul.d/consul.hcl

BootstrapExpect is set to 1; this is the same as Bootstrap mode.

bootstrap = true: do not enable unless necessary

Configuration is valid!
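Validation is cheap enough to run before every start or restart. A sketch of such a guard, assuming the consul binary is on the PATH; the function name and the optional second argument (which swaps in another binary) are hypothetical additions:

```shell
# Validate a config file or directory and print a verdict.
# $1 = path to validate, $2 = binary to use (default: consul)
check_consul_config() {
  path="$1"
  bin="${2:-consul}"
  if "$bin" validate "$path" >/dev/null 2>&1; then
    echo "valid: $path"
  else
    echo "invalid: $path"
    return 1
  fi
}

# Usage:
#   check_consul_config /etc/consul.d/consul.hcl
```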

Run Consul as a Service

Needed Ports

Consul uses the following ports:

- 8500 - Consul UI

- 8301/8300 - LAN Gossip (8301) and server RPC (8300)

- 8600 - DNS

I will open those ports in my firewall (except the UI):

FirewallD

sudo firewall-cmd --permanent --zone=public --add-port={8300/tcp,8301/tcp,8600/tcp}

sudo firewall-cmd --reload

sudo firewall-cmd --zone=public --list-ports

ufw

ufw allow 8300,8301,8600/tcp

ufw reload

ufw status verbose

The documentation I found said that the Gossip port is 8301. But when I started Consul up the first time it tried to connect through port 8300 and the cluster join failed. But the next day Consul started using 8301 ¯\(ツ)/¯

agent.client: RPC failed to server: method=KVS.List server=192.168.2.110:8300 error="rpc error getting client: failed to get conn: dial tcp 192.168.2.111:0->192.168.2.110:8300: connect: no route to host"

Update: See above - happened again. It seems that you need both ports 8300 and 8301.
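Note that the firewall commands above open TCP only. According to the Consul documentation, LAN gossip on 8301 and DNS on 8600 use both TCP and UDP, while server RPC on 8300 is TCP only. A hedged sketch that lists the rules so they can be applied in a loop; the function name is made up and the zone is assumed to be public as above:

```shell
# Port/protocol pairs Consul needs between agents.
# The UI port 8500 is left out on purpose, as in the text above.
consul_port_rules() {
  printf '%s\n' 8300/tcp 8301/tcp 8301/udp 8600/tcp 8600/udp
}

# Apply with FirewallD (run manually):
#   for rule in $(consul_port_rules); do
#     sudo firewall-cmd --permanent --zone=public --add-port="$rule"
#   done
#   sudo firewall-cmd --reload
```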

Service Configuration

Create the SystemD Service file in /etc/systemd/system/consul.service:

[Unit]

Description="HashiCorp Consul"

Documentation=https://www.consul.io/

Requires=network-online.target

After=network-online.target

ConditionFileNotEmpty=/etc/consul.d/consul.hcl

[Service]

Type=notify

User=consul

Group=consul

ExecStart=/usr/bin/consul agent -config-dir=/etc/consul.d/

ExecReload=/bin/kill --signal HUP $MAINPID

KillMode=process

KillSignal=SIGTERM

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

sudo systemctl daemon-reload

sudo service consul start

Problems

In the beginning I ran into a permission error:

Aug 31 14:03:44 nomad-master consul[54505]: ==> failed to setup node ID: open /opt/consul/node-id: permission denied

Aug 31 14:03:44 nomad-master systemd[1]: consul.service: Main process exited, code=exited, status=1/FAILURE

Aug 31 14:03:44 nomad-master systemd[1]: consul.service: Failed with result 'exit-code'.

As a dirty fix I changed the entire data directory to 777. The deployment guide creates an extra user for consul - I will go this route in the next test run.

chmod -R 777 /opt/consul
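The cleaner route mentioned above looks roughly like this. The useradd and chown calls are modelled on the HashiCorp deployment guide, so double-check the flags against it; owned_by is a hypothetical helper for verifying the result.

```shell
# Create a dedicated system user and hand it the data directory.
# Must be run as root; not called automatically here.
setup_consul_user() {
  useradd --system --home /etc/consul.d --shell /bin/false consul
  mkdir -p /opt/consul
  chown -R consul:consul /opt/consul
}

# Succeeds when path $1 exists and is owned by user $2
# (user name or numeric uid).
owned_by() {
  [ -n "$(find "$1" -maxdepth 0 -user "$2" 2>/dev/null)" ]
}

# Usage:
#   owned_by /opt/consul consul || echo "fix ownership before starting the service"
```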

Now the service started but is failing because the Consul minion, that I did not configure yet, is not connecting - see Error Log.

So now I added the identical Service file to my Debian11 minion and added the following configuration to /etc/consul.d/consul.hcl:

node_name = "consul-minion"

datacenter = "instaryun"

data_dir = "/opt/consul"

# allow all clients

client_addr = "0.0.0.0"

# disable the ui

ui = false

# run this instance as a client, not as a server

server = false

# add your servers IP

bind_addr = "192.168.2.111"

advertise_addr = "192.168.2.111"

retry_join = ["192.168.2.110"]

enable_syslog = true

log_level = "INFO"

# https://www.consul.io/docs/agent/options#performance

performance{

raft_multiplier = 1

}

Note that I set server to false, removed the bootstrap_expect variable and had to rewrite the ui variable - the latter might be because the minion runs Consul v1.8.7 while my master server is on Consul v1.10.2.

As an alternative to retry_join I can also manually join this client into an existing cluster with the join command (or leave):

consul join [IP address or domain of one of your Consul servers]

consul leave

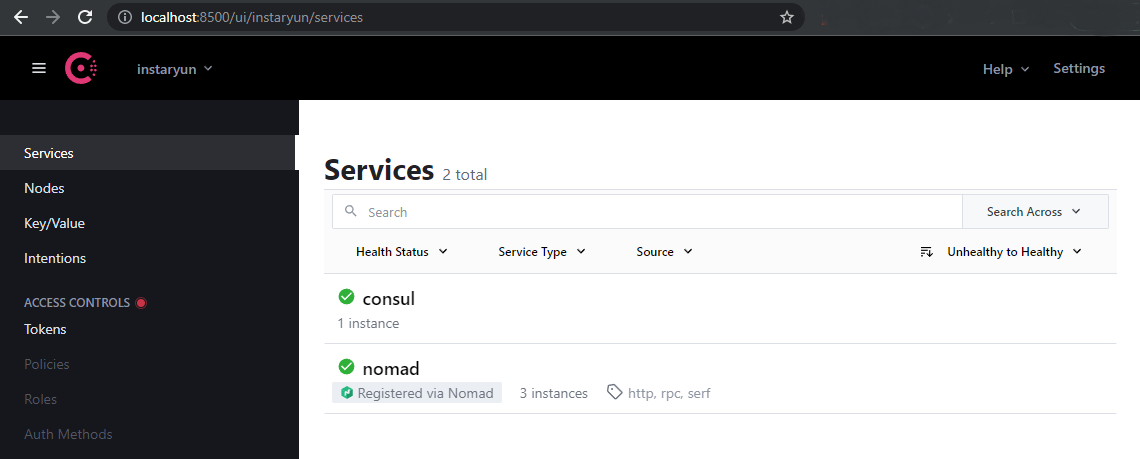

This time both services started successfully! And the UI shows me that both nodes successfully joined the Consul cluster:

Note: The node name is taken from the server hostname instead of the Consul configuration file - hence I ended up with nomad-master instead of the consul-master I defined. To change your server's hostname run the following commands and restart the Consul service:

hostname

hostnamectl set-hostname consul-master

hostname

service consul restart

Now Consul displays the correct node name:

consul operator raft list-peers

Node           ID                                    Address             State   Voter  RaftProtocol
consul-master  d561f8d4-9606-8c9c-40d4-a5350857801e  192.168.2.110:8300  leader  true   3

consul members

Node           Address             Status  Type    Build   Protocol  DC         Segment
consul-master  192.168.2.110:8301  alive   server  1.10.2  2         instaryun  <all>
consul-minion  192.168.2.111:8301  alive   client  1.8.7   2         instaryun  <default>
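For scripting, the same table can be reduced to a number. A small sketch that counts alive members from consul members output on stdin; it assumes the column order shown above, where Status is the third column, and the function name is illustrative:

```shell
# Count members whose Status column (3rd field) is "alive".
# Reads "consul members" output on stdin and skips the header row.
count_alive() {
  awk 'NR > 1 && $3 == "alive" { n++ } END { print n + 0 }'
}

# Usage:
#   consul members | count_alive
```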

Registering a Service in Nomad

There are multiple ways to register a service in Consul. Fortunately Nomad has a first class integration that makes it very simple to add a service stanza to a job file to perform this registration for us. To register our Nomad deployed http-echo application, we simply add a service block to the Nomad job file:

service {

name = "http-echo"

port = "heartbeat"

tags = [

"heartbeat",

"urlprefix-/http-echo",

]

check {

type = "http"

path = "/health"

interval = "2s"

timeout = "2s"

}

}
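For comparison, one of the other ways is to talk to the local agent's HTTP API directly. The sketch below builds a payload for PUT /v1/agent/service/register that mirrors the check in the Nomad service block; the helper name and the address used for the check are assumptions, and with Nomad doing the registration this step is not needed.

```shell
# Print a JSON service definition with an HTTP health check.
# $1 = service name, $2 = address the check should call, $3 = port
service_payload() {
  printf '{"Name":"%s","Port":%s,"Check":{"HTTP":"http://%s:%s/health","Interval":"2s","Timeout":"2s"}}\n' \
    "$1" "$3" "$2" "$3"
}

# Usage (against a running local agent):
#   service_payload http-echo 192.168.2.111 8080 |
#     curl -s -X PUT --data @- http://127.0.0.1:8500/v1/agent/service/register
```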

The http-echo job file now looks like:

cat ~/nomad_jobs/http_echo_gui.nomad

job "http-echo-gui" {

datacenters = ["instaryun"]

group "echo" {

network {

port "heartbeat" {

static = 8080

}

}

count = 1

task "server" {

driver = "docker"

config {

image = "hashicorp/http-echo:latest"

ports = ["heartbeat"]

args = [

"-listen", ":${NOMAD_PORT_heartbeat}",

"-text", "${attr.os.name}: server running on ${NOMAD_IP_heartbeat} with port ${NOMAD_PORT_heartbeat}",

]

}

service {

name = "http-echo"

port = "heartbeat"

tags = [

"heartbeat",

"urlprefix-/http-echo",

]

check {

type = "http"

path = "/health"

interval = "2s"

timeout = "2s"

}

}

}

}

}

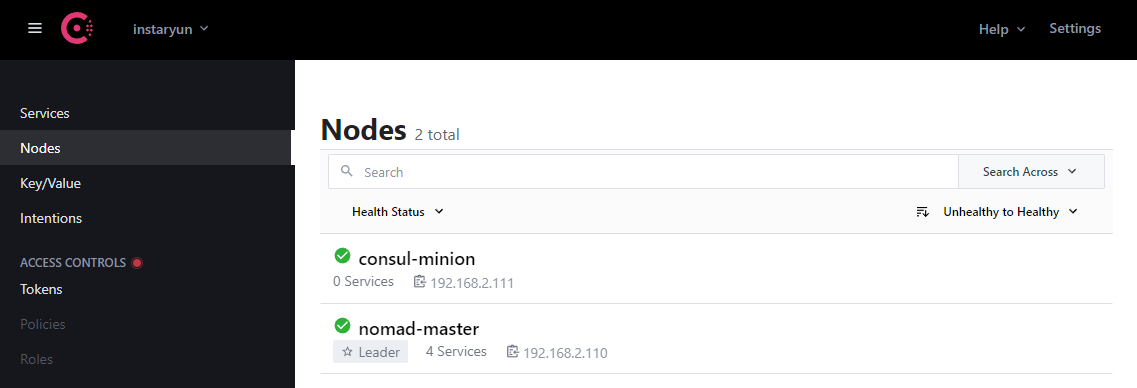

And can be directly updated from the Nomad GUI:

First click on Plan and then Run to schedule the job. Once the task is running, check in Consul if the service http-echo registered itself:

consul catalog services

consul

http-echo

nomad

nomad-client

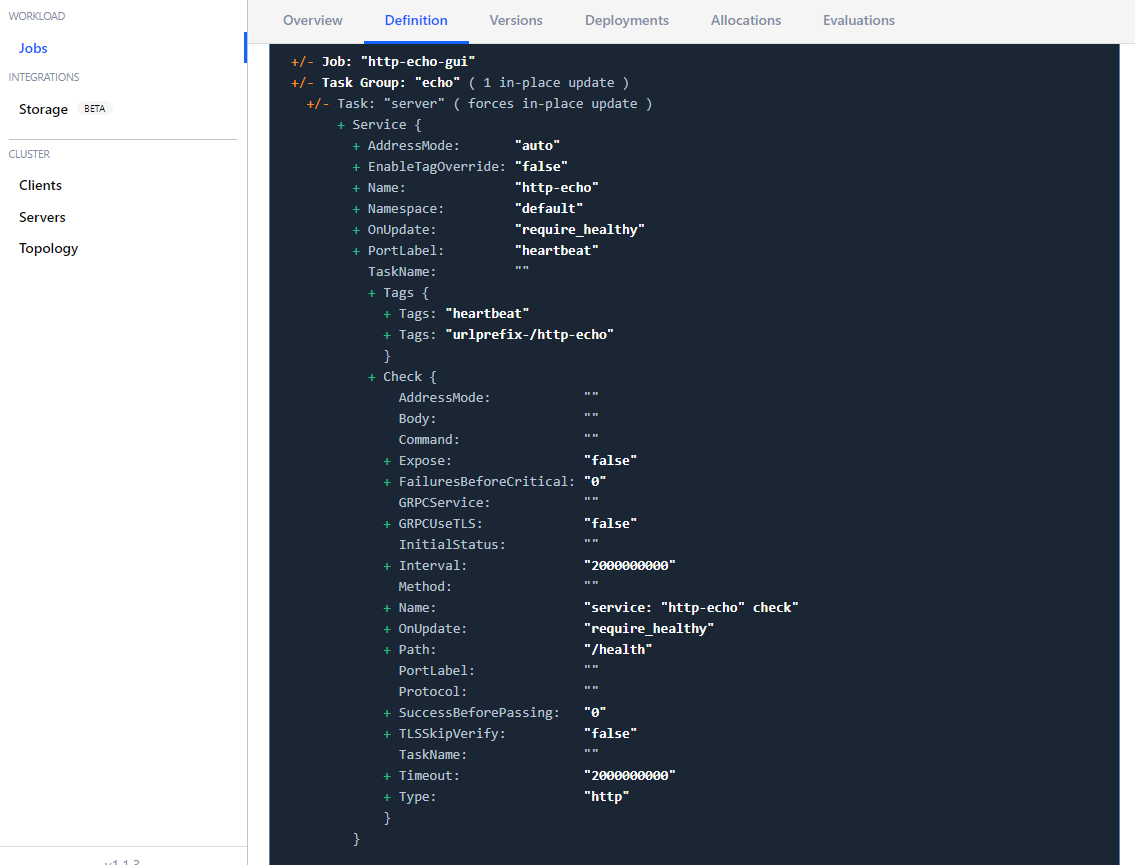

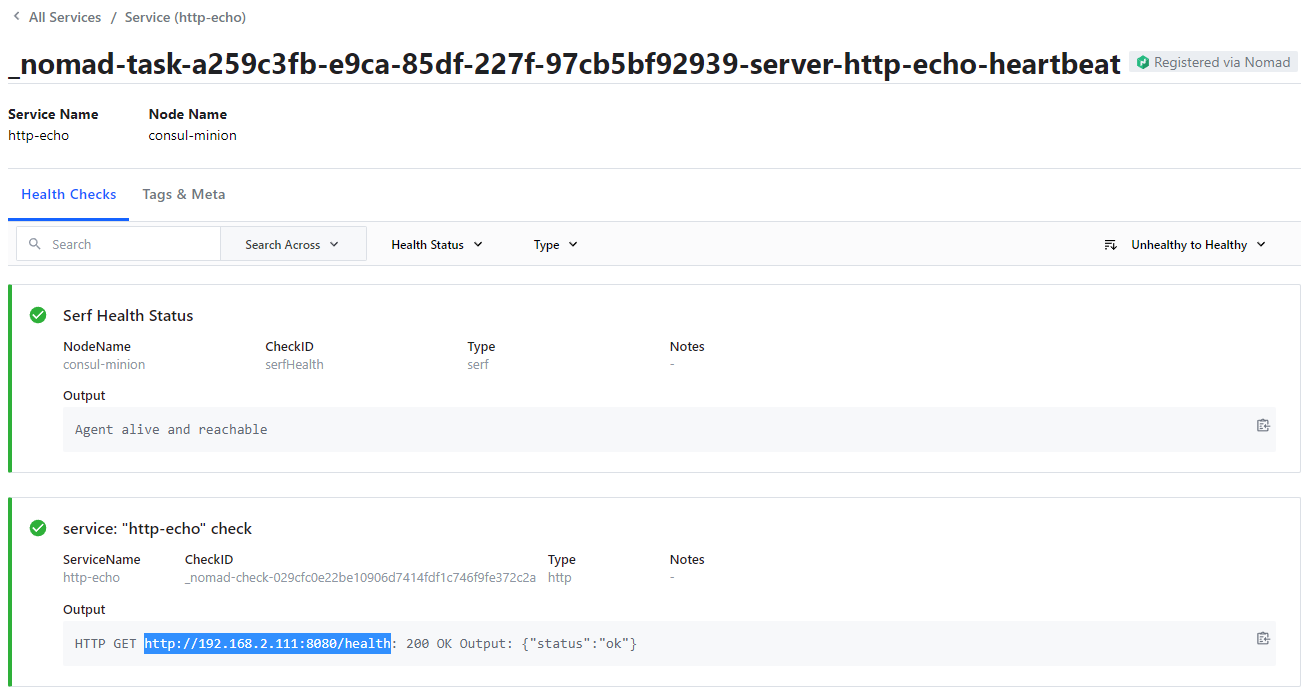

In the Consul UI I can see that the healthcheck URL is working:

You can also test it from your browser by visiting the URL listed by Consul, http://192.168.2.111:8080/health:

{ "status": "ok" }

Default Consul Configuration

From the default package manager installation of Consul v1.10.2 on RHEL8 in /etc/consul.d/consul.hcl:

# Full configuration options can be found at https://www.consul.io/docs/agent/options.html

# datacenter

# This flag controls the datacenter in which the agent is running. If not provided,

# it defaults to "dc1". Consul has first-class support for multiple datacenters, but

# it relies on proper configuration. Nodes in the same datacenter should be on a

# single LAN.

#datacenter = "my-dc-1"

# data_dir

# This flag provides a data directory for the agent to store state. This is required

# for all agents. The directory should be durable across reboots. This is especially

# critical for agents that are running in server mode as they must be able to persist

# cluster state. Additionally, the directory must support the use of filesystem

# locking, meaning some types of mounted folders (e.g. VirtualBox shared folders) may

# not be suitable.

data_dir = "/opt/consul"

# client_addr

# The address to which Consul will bind client interfaces, including the HTTP and DNS

# servers. By default, this is "127.0.0.1", allowing only loopback connections. In

# Consul 1.0 and later this can be set to a space-separated list of addresses to bind

# to, or a go-sockaddr template that can potentially resolve to multiple addresses.

#client_addr = "0.0.0.0"

# ui

# Enables the built-in web UI server and the required HTTP routes. This eliminates

# the need to maintain the Consul web UI files separately from the binary.

# Version 1.10 deprecated ui=true in favor of ui_config.enabled=true

#ui_config{

# enabled = true

#}

# server

# This flag is used to control if an agent is in server or client mode. When provided,

# an agent will act as a Consul server. Each Consul cluster must have at least one

# server and ideally no more than 5 per datacenter. All servers participate in the Raft

# consensus algorithm to ensure that transactions occur in a consistent, linearizable

# manner. Transactions modify cluster state, which is maintained on all server nodes to

# ensure availability in the case of node failure. Server nodes also participate in a

# WAN gossip pool with server nodes in other datacenters. Servers act as gateways to

# other datacenters and forward traffic as appropriate.

#server = true

# Bind addr

# You may use IPv4 or IPv6 but if you have multiple interfaces you must be explicit.

#bind_addr = "[::]" # Listen on all IPv6

#bind_addr = "0.0.0.0" # Listen on all IPv4

#

# Advertise addr - if you want to point clients to a different address than bind or LB.

#advertise_addr = "127.0.0.1"

# Enterprise License

# As of 1.10, Enterprise requires a license_path and does not have a short trial.

#license_path = "/etc/consul.d/consul.hclic"

# bootstrap_expect

# This flag provides the number of expected servers in the datacenter. Either this value

# should not be provided or the value must agree with other servers in the cluster. When

# provided, Consul waits until the specified number of servers are available and then

# bootstraps the cluster. This allows an initial leader to be elected automatically.

# This cannot be used in conjunction with the legacy -bootstrap flag. This flag requires

# -server mode.

#bootstrap_expect=3

# encrypt

# Specifies the secret key to use for encryption of Consul network traffic. This key must

# be 32-bytes that are Base64-encoded. The easiest way to create an encryption key is to

# use consul keygen. All nodes within a cluster must share the same encryption key to

# communicate. The provided key is automatically persisted to the data directory and loaded

# automatically whenever the agent is restarted. This means that to encrypt Consul's gossip

# protocol, this option only needs to be provided once on each agent's initial startup

# sequence. If it is provided after Consul has been initialized with an encryption key,

# then the provided key is ignored and a warning will be displayed.

#encrypt = "..."

# retry_join

# Similar to -join but allows retrying a join until it is successful. Once it joins

# successfully to a member in a list of members it will never attempt to join again.

# Agents will then solely maintain their membership via gossip. This is useful for

# cases where you know the address will eventually be available. This option can be

# specified multiple times to specify multiple agents to join. The value can contain

# IPv4, IPv6, or DNS addresses. In Consul 1.1.0 and later this can be set to a go-sockaddr

# template. If Consul is running on the non-default Serf LAN port, this must be specified

# as well. IPv6 must use the "bracketed" syntax. If multiple values are given, they are

# tried and retried in the order listed until the first succeeds. Here are some examples:

#retry_join = ["consul.domain.internal"]

#retry_join = ["10.0.4.67"]

#retry_join = ["[::1]:8301"]

#retry_join = ["consul.domain.internal", "10.0.4.67"]

# Cloud Auto-join examples:

# More details - https://www.consul.io/docs/agent/cloud-auto-join

#retry_join = ["provider=aws tag_key=... tag_value=..."]

#retry_join = ["provider=azure tag_name=... tag_value=... tenant_id=... client_id=... subscription_id=... secret_access_key=..."]

#retry_join = ["provider=gce project_name=... tag_value=..."]

Consul Service Error Message

see Problems

Aug 31 14:12:08 nomad-master consul[60088]: 2021-08-31T14:12:08.212+0800 [WARN] agent: DEPRECATED Backwards compatibility with pre-1.9 metrics enabled. These metrics will be removed in a future version of Consul. Set `telemetry { disable_compat_1.9 = true }` to disable them.

Aug 31 14:12:08 nomad-master consul[60088]: 2021-08-31T14:12:08.212+0800 [INFO] agent: Retry join is supported for the following discovery methods: cluster=LAN discovery_methods="aliyun aws azure digitalocean gce k8s linode mdns os packet scaleway softlayer tencentcloud triton vsphere"

Aug 31 14:12:08 nomad-master consul[60088]: 2021-08-31T14:12:08.212+0800 [INFO] agent: Joining cluster...: cluster=LAN

Aug 31 14:12:08 nomad-master consul[60088]: 2021-08-31T14:12:08.212+0800 [INFO] agent: (LAN) joining: lan_addresses=[192.168.2.111]

Aug 31 14:12:08 nomad-master consul[60088]: 2021-08-31T14:12:08.212+0800 [INFO] agent: started state syncer

Aug 31 14:12:08 nomad-master consul[60088]: 2021-08-31T14:12:08.212+0800 [INFO] agent: Consul agent running!

Aug 31 14:12:08 nomad-master consul[60088]: 2021-08-31T14:12:08.212+0800 [WARN] agent: (LAN) couldn't join: number_of_nodes=0 error="1 error occurred:

Aug 31 14:12:08 nomad-master consul[60088]: * Failed to join 192.168.2.111: dial tcp 192.168.2.111:8301: connect: connection refused

Aug 31 14:12:08 nomad-master consul[60088]: "

Aug 31 14:12:08 nomad-master consul[60088]: 2021-08-31T14:12:08.212+0800 [WARN] agent: Join cluster failed, will retry: cluster=LAN retry_interval=30s error=<nil>

Aug 31 14:12:15 nomad-master consul[60088]: 2021-08-31T14:12:15.118+0800 [WARN] agent.server.raft: heartbeat timeout reached, starting election: last-leader=

Aug 31 14:12:15 nomad-master consul[60088]: 2021-08-31T14:12:15.118+0800 [INFO] agent.server.raft: entering candidate state: node="Node at 192.168.2.110:8300 [Candidate]" term=5

Aug 31 14:12:15 nomad-master consul[60088]: 2021-08-31T14:12:15.136+0800 [INFO] agent.server.raft: election won: tally=1

Aug 31 14:12:15 nomad-master consul[60088]: 2021-08-31T14:12:15.136+0800 [INFO] agent.server.raft: entering leader state: leader="Node at 192.168.2.110:8300 [Leader]"

Aug 31 14:12:15 nomad-master consul[60088]: 2021-08-31T14:12:15.136+0800 [INFO] agent.server: cluster leadership acquired

Aug 31 14:12:15 nomad-master consul[60088]: 2021-08-31T14:12:15.136+0800 [INFO] agent.server: New leader elected: payload=nomad-master

Aug 31 14:12:15 nomad-master consul[60088]: 2021-08-31T14:12:15.151+0800 [INFO] agent.leader: started routine: routine="federation state anti-entropy"

Aug 31 14:12:15 nomad-master consul[60088]: 2021-08-31T14:12:15.151+0800 [INFO] agent.leader: started routine: routine="federation state pruning"

Aug 31 14:12:15 nomad-master consul[60088]: 2021-08-31T14:12:15.367+0800 [INFO] agent: Synced node info

Aug 31 14:12:38 nomad-master consul[60088]: 2021-08-31T14:12:38.213+0800 [INFO] agent: (LAN) joining: lan_addresses=[192.168.2.111]

Aug 31 14:12:38 nomad-master consul[60088]: 2021-08-31T14:12:38.214+0800 [WARN] agent: (LAN) couldn't join: number_of_nodes=0 error="1 error occurred:

Aug 31 14:12:38 nomad-master consul[60088]: * Failed to join 192.168.2.111: dial tcp 192.168.2.111:8301: connect: connection refused

Aug 31 14:12:38 nomad-master consul[60088]: "

Aug 31 14:12:38 nomad-master consul[60088]: 2021-08-31T14:12:38.214+0800 [WARN] agent: Join cluster failed, will retry: cluster=LAN retry_interval=30s error=<nil>

Aug 31 14:13:08 nomad-master consul[60088]: 2021-08-31T14:13:08.214+0800 [INFO] agent: (LAN) joining: lan_addresses=[192.168.2.111]

Aug 31 14:13:08 nomad-master consul[60088]: 2021-08-31T14:13:08.214+0800 [WARN] agent: (LAN) couldn't join: number_of_nodes=0 error="1 error occurred:

Aug 31 14:13:08 nomad-master consul[60088]: * Failed to join 192.168.2.111: dial tcp 192.168.2.111:8301: connect: connection refused

Aug 31 14:13:08 nomad-master consul[60088]: "

Aug 31 14:13:08 nomad-master consul[60088]: 2021-08-31T14:13:08.214+0800 [WARN] agent: Join cluster failed, will retry: cluster=LAN retry_interval=30s error=<nil>
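With a log this long, counting the failures per peer is quicker than reading it. A sketch that summarises the "Failed to join" lines from journal output on stdin; the function name is hypothetical and the pattern is taken from the log lines above:

```shell
# Print "<peer address> <number of failed join attempts>" per peer.
join_failures() {
  grep -o 'Failed to join [0-9.]*' | sort | uniq -c | awk '{ print $5, $1 }'
}

# Usage:
#   journalctl -u consul --no-pager | join_failures
```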