HashiCorp Nomad Deployment

Continuation of HashiCorp Nomad Dojo

Deploying a Job to a Multi-Node Cluster

When we now execute the plan and run commands for the template job frontend.nomad, it is deployed to our new minion node instead of the MASTER:

Planning

    nomad plan frontend.nomad

    + Job: "frontend"
    + Task Group: "frontend" (1 create)
      + Task: "frontend" (forces create)

    Scheduler dry-run:
    - All tasks successfully allocated.

    Job Modify Index: 0
    To submit the job with version verification run:

    nomad job run -check-index 0 frontend.nomad

Running

nomad job run -check-index 0 frontend.nomad

==> 2022-06-05T12:02:58+02:00: Monitoring evaluation "1d55c9e3"

2022-06-05T12:02:58+02:00: Evaluation triggered by job "frontend"

==> 2022-06-05T12:02:59+02:00: Monitoring evaluation "1d55c9e3"

2022-06-05T12:02:59+02:00: Evaluation within deployment: "5b251061"

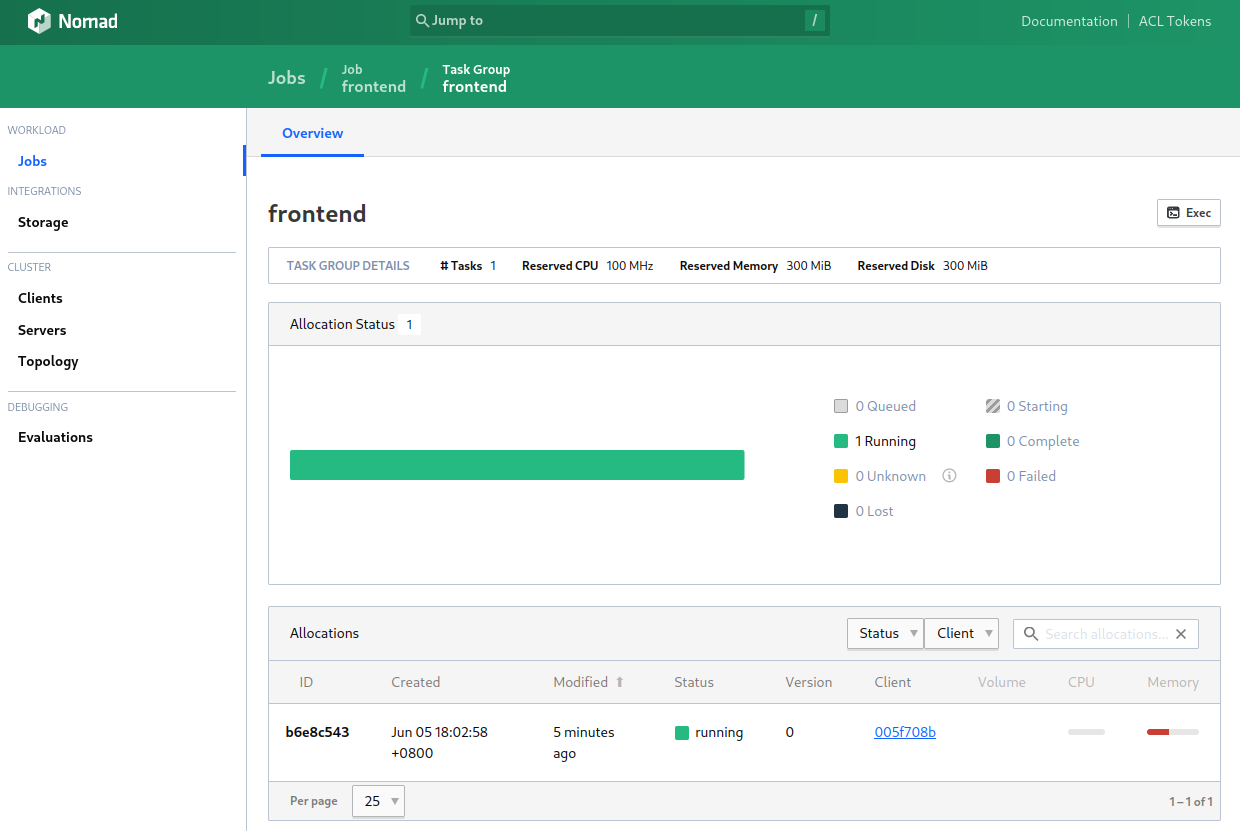

2022-06-05T12:02:59+02:00: Allocation "b6e8c543" created: node "005f708b", group "frontend"

2022-06-05T12:02:59+02:00: Evaluation status changed: "pending" -> "complete"

==> 2022-06-05T12:02:59+02:00: Evaluation "1d55c9e3" finished with status "complete"

==> 2022-06-05T12:02:59+02:00: Monitoring deployment "5b251061"

✓ Deployment "5b251061" successful

Run a docker ps on the MINION and you will see that the docker frontend container has been deployed:

    docker ps

    CONTAINER ID   IMAGE                           CREATED              STATUS
    33f1ddc027fa   thedojoseries/frontend:latest   About a minute ago   Up About a minute

Allocating a Static Service Port to the Frontend Container

We have now deployed a frontend container based on the thedojoseries/frontend Docker image. This container serves a web application on port 8080, which we have to allocate in our deployment. This can be done by editing the job definition frontend.nomad:

    job "frontend" {
      datacenters = ["instaryun"]
      type        = "service"

      group "frontend" {
        count = 1

        task "frontend" {
          driver = "docker"

          config {
            image = "thedojoseries/frontend:latest"
          }

          resources {
            network {
              port "http" {
                static = 8080
              }
            }
          }
        }
      }
    }

Re-run plan and deploy the new version:

    nomad plan frontend.nomad
    nomad job run -check-index 103 frontend.nomad

This leads to a deprecation warning for the network resources (I will fix this a few steps further down):

    Scheduler dry-run:
    - All tasks successfully allocated.

    Job Warnings:
    1 warning(s):

    * Group "frontend" has warnings: 1 error occurred:
    * 1 error occurred:
    * Task "frontend": task network resources have been deprecated as of Nomad 0.12.0. Please configure networking via group network block.

Running the job still works despite the warning (nomad job run -check-index 210 frontend.nomad).
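The deprecation warning asks for networking to be configured in a group-level network block. A minimal sketch of what that could look like while keeping the static port 8080; the ports entry in the Docker config refers to the port label "http" declared in the group, and nothing else about the job changes:

```hcl
job "frontend" {
  datacenters = ["instaryun"]
  type        = "service"

  group "frontend" {
    count = 1

    # Group-level network block (Nomad 0.12+), replacing the deprecated
    # task-level resources.network block.
    network {
      port "http" {
        static = 8080
      }
    }

    task "frontend" {
      driver = "docker"

      config {
        image = "thedojoseries/frontend:latest"
        # Publish the port labelled "http" from the group network block.
        ports = ["http"]
      }
    }
  }
}
```

Since no to value is set, the container port defaults to the same number as the host port, which matches the 8080 the application listens on.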

Testing the Allocation

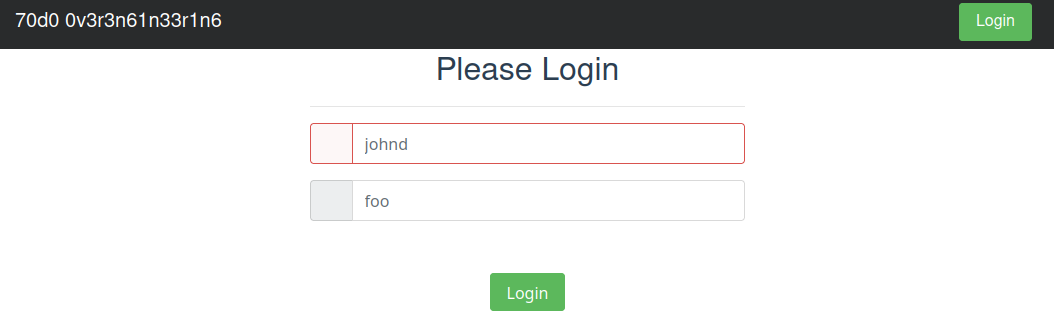

If you run docker ps on your minion, you will see that Nomad bound port 8080 to the external IP of your server. This is why my first attempt to reach the frontend server on localhost with curl localhost:8080 failed. I had to open port 8080 in the firewall and then use the external address instead:

    ufw allow 8080/tcp
    ufw reload
    curl my.minion.com:8080

This returns some HTML/JS code:

    <!DOCTYPE html>
    <html>
      <head>
        <meta charset="utf-8">
        <title>frontend</title>
      </head>
      <body>
        <div class="container">
          <div id="app"></div>
        </div>
        <script type="text/javascript" src="/app.js"></script></body>
    </html>

My browser renders this as:

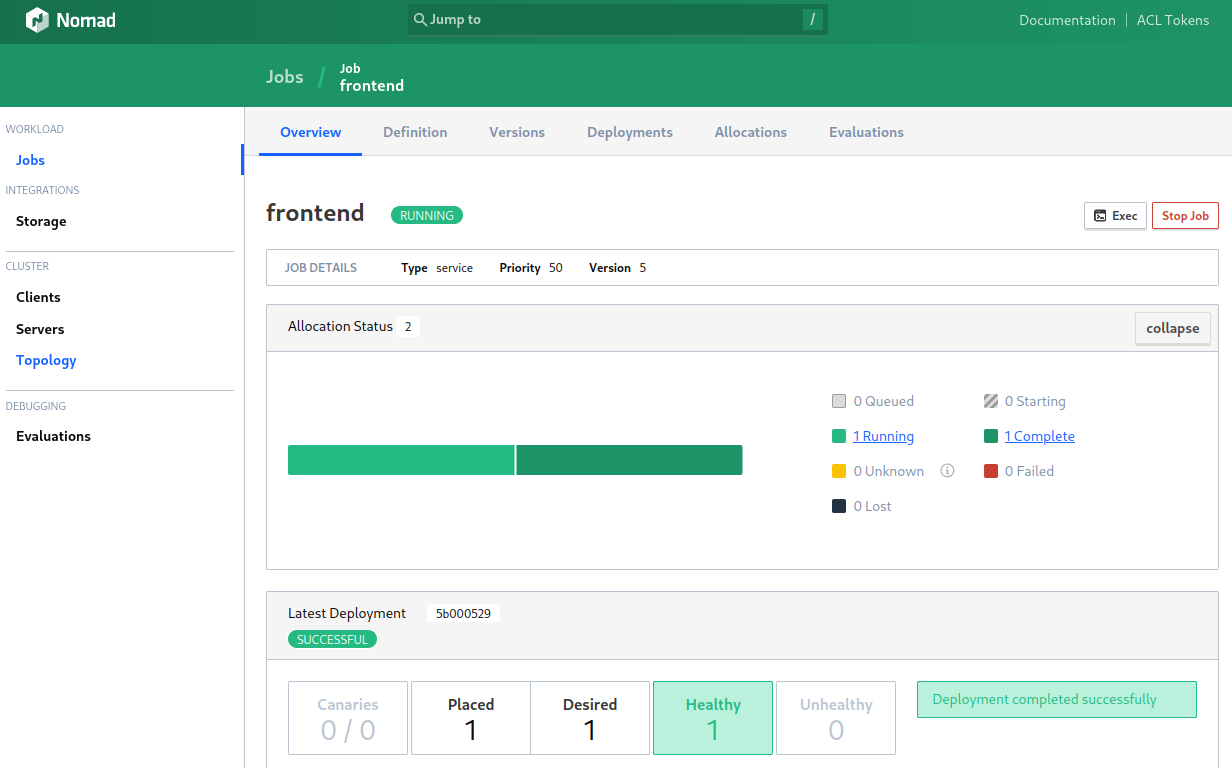

Scaling the Deployment

In Nomad, it is possible to scale an application horizontally: whenever a scaling condition is met, Nomad spins up more copies of the same application across the cluster so that the load is spread more evenly. The next step is to define a scaling stanza in frontend.nomad using the information below:

enabled = true

min = 1

max = 3

policy = (a policy meant to be used by the Nomad Autoscaler)

I do not have the Autoscaler running, and setting an empty object as the policy returns an error when I try to plan the job:

    Error getting job struct: Failed to parse using HCL 2. Use the HCL 1 parser with `nomad run -hcl1`, or address the following issues:
    frontend.nomad:12,7-13: Unsupported argument; An argument named "policy" is not expected here. Did you mean to define a block of type "policy"?
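The error message points at the cause: in HCL 2, policy has to be written as a block (no equals sign before the brace), not as an argument. A rough sketch of the block form, assuming a running Nomad Autoscaler with its nomad-apm plugin; the check name, the query and all numbers are illustrative, untested here, and the available query names depend on the Autoscaler version:

```hcl
job "frontend" {
  datacenters = ["instaryun"]
  type        = "service"

  group "frontend" {
    count = 1

    scaling {
      enabled = true
      min     = 1
      max     = 3

      # "policy" is a block, not an argument: no "=" before the brace.
      policy {
        # Illustrative timings, not taken from the original job.
        cooldown            = "1m"
        evaluation_interval = "30s"

        # Hypothetical check: scale on average CPU reported by nomad-apm.
        check "avg_cpu" {
          source = "nomad-apm"
          query  = "avg_cpu"

          strategy "target-value" {
            target = 70
          }
        }
      }
    }

    task "frontend" {
      driver = "docker"

      config {
        image = "thedojoseries/frontend:latest"
      }
    }
  }
}
```

Nomad itself only stores the policy; it takes effect once an Autoscaler agent is running and evaluates it.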

For now I removed the policy completely, after which the nomad plan frontend.nomad command ran without errors. Without a policy, running the job only starts the minimum number of instances, as there is no trigger to scale the deployment out. TODO: I will have to look into autoscaling:

    job "frontend" {
      datacenters = ["instaryun"]
      type        = "service"

      group "frontend" {
        count = 2

        scaling {
          enabled = true
          min     = 2
          max     = 3
        }

        task "frontend" {
          driver = "docker"

          config {
            image = "thedojoseries/frontend:latest"
          }

          resources {
            network {
              port "http" {
                static = 8080
              }
            }
          }
        }
      }
    }

Running the job reveals a potential issue, though. Because port 8080 is hard-coded, two instances on the same interface would end up in a port conflict: the second instance would crash because the port is already assigned to the first. The planning step would notice this and stop us from running the job. But since my minion server is configured to use both IPv6 and IPv4, Nomad simply spread the two instances across those two addresses. Also, if I had more than one minion, Nomad would automatically resolve the conflict by using a different host for each instance. Love it!

    docker ps

    CONTAINER ID   IMAGE                           PORTS
    7033df07401e   thedojoseries/frontend:latest   minion_ipv6:8080->8080/tcp
    b136c5ddd80e   thedojoseries/frontend:latest   minion_ipv4:8080->8080/tcp
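Spreading instances over different hosts can also be requested explicitly instead of being left to the scheduler. A minimal sketch, assuming a cluster with at least two client nodes (with a single minion the second instance could not be placed): a distinct_hosts constraint on the group tells Nomad never to put two instances on the same node. The network block is written at group level here to avoid the deprecation warning.

```hcl
job "frontend" {
  datacenters = ["instaryun"]
  type        = "service"

  group "frontend" {
    count = 2

    # Never place two allocations of this group on the same client node,
    # so the static port 8080 cannot collide.
    constraint {
      operator = "distinct_hosts"
      value    = "true"
    }

    network {
      port "http" {
        static = 8080
      }
    }

    task "frontend" {
      driver = "docker"

      config {
        image = "thedojoseries/frontend:latest"
        ports = ["http"]
      }
    }
  }
}
```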

Dynamic Port Allocation

To prevent this issue from showing up we can assign dynamic ports to our application:

    job "frontend" {
      datacenters = ["instaryun"]
      type        = "service"

      group "frontend" {
        count = 2

        scaling {
          enabled = true
          min     = 2
          max     = 3
        }

        task "frontend" {
          driver = "docker"

          config {
            image = "thedojoseries/frontend:latest"
          }

          resources {
            network {
              port "http" {}
            }
          }
        }
      }
    }

This time Nomad used random ports, both on the IPv4 interface. But there is another problem: the web application inside the Docker container exposes its service on port 8080, which means those dynamic ports are actually going nowhere:

    docker ps

    CONTAINER ID   IMAGE                           PORTS
    6d15e57bd756   thedojoseries/frontend:latest   8080/tcp, minion_ipv4:25500->25500/tcp
    029aee103aa7   thedojoseries/frontend:latest   8080/tcp, minion_ipv4:22124->22124/tcp

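We can fix this by moving the network block to the group level, mapping the dynamic port to the container port 8080 with the to parameter, and referencing the port label in the Docker config of the task. This also gets rid of the deprecation warning from earlier: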

    job "frontend" {
      datacenters = ["instaryun"]

      group "frontend" {
        count = 2

        scaling {
          enabled = true
          min     = 2
          max     = 3
        }

        network {
          mode = "host"

          port "http" {
            to = 8080
          }
        }

        task "app" {
          driver = "docker"

          config {
            image = "thedojoseries/frontend:latest"
            ports = ["http"]
          }
        }
      }
    }

Now we have two random ports on the outside, bound to the main IPv4 interface, and both are forwarded to the internal service port 8080. Yeah!

    docker ps

    CONTAINER ID   IMAGE                           PORTS
    aaa9650a9360   thedojoseries/frontend:latest   8080/tcp, minion_ipv4:29457->8080/tcp
    541b6f5caa47   thedojoseries/frontend:latest   8080/tcp, minion_ipv4:23526->8080/tcp