App Deployment with Hashicorp Nomad from Gitlab

Deploy Applications from the Gitlab Docker Registry

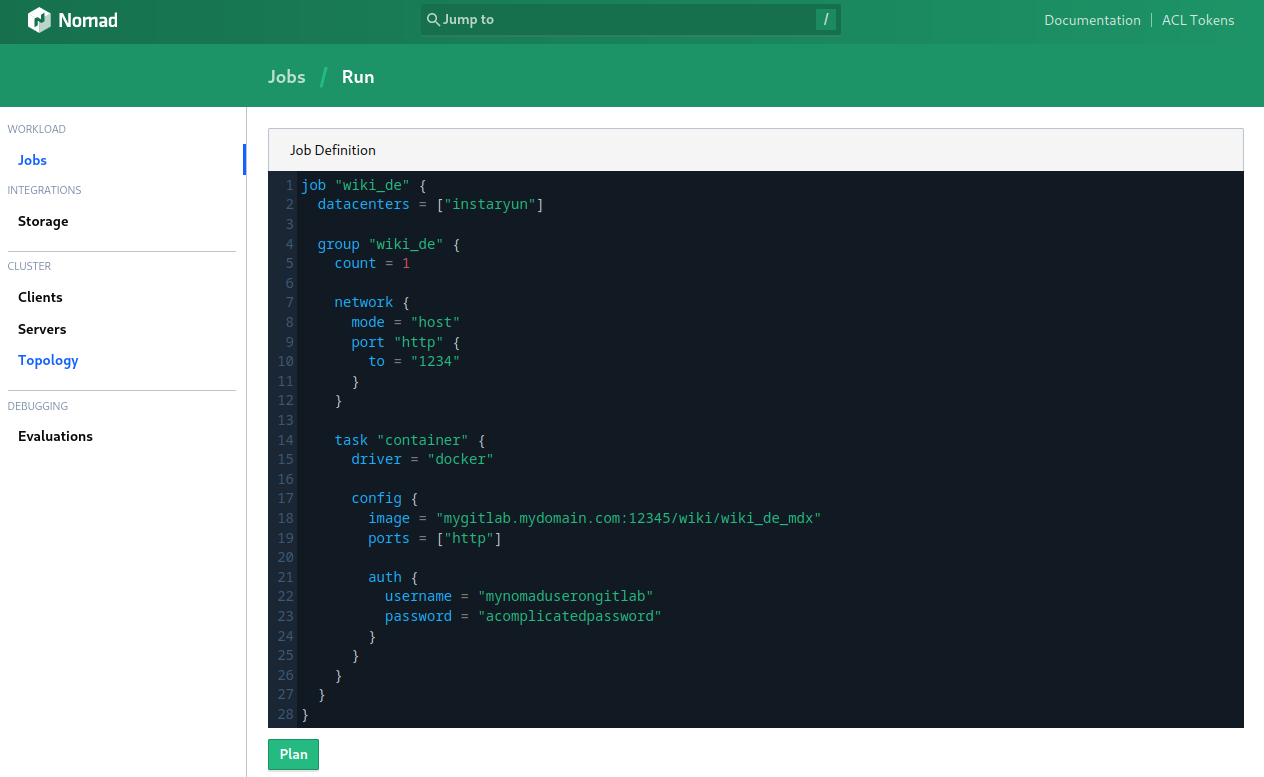

I want to pull a Docker image from a private Gitlab Docker Registry and run the container on a dynamic host port. That port is forwarded to a static port inside the container, where the app serves its web frontend:

```hcl
job "wiki_de" {
  datacenters = ["instaryun"]

  group "wiki_de" {
    count = 1

    network {
      mode = "host"

      port "http" {
        to = "1234"
      }
    }

    task "container" {
      driver = "docker"

      config {
        image = "mygitlab.mydomain.com:12345/wiki/wiki_de_mdx"
        ports = ["http"]

        auth {
          username = "mynomaduserongitlab"
          password = "acomplicatedpassword"
        }
      }
    }
  }
}
```

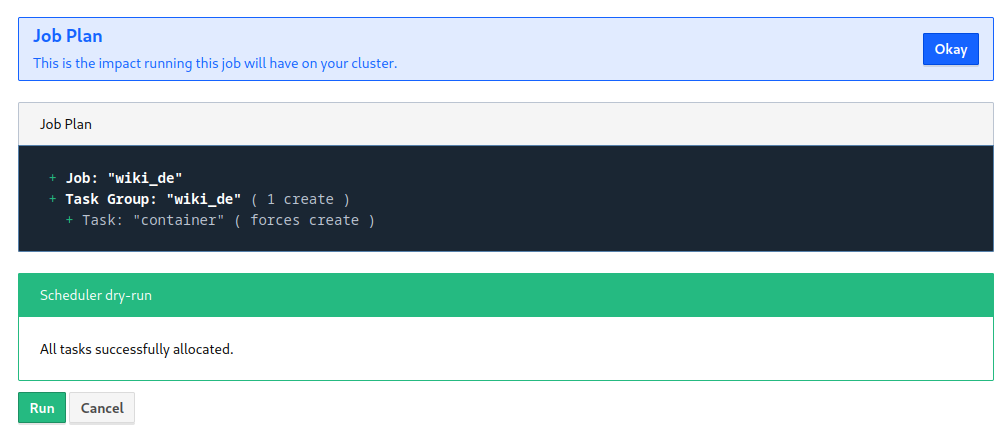

This time I want to use the Nomad web frontend to plan and execute the job:

After executing the job I find the UI a bit lacking. You get feedback if something went wrong, but there is no progress or error log. So let's check the CLI:

```bash
nomad job status wiki_de
```

```
Deployed
Task Group  Desired  Placed  Healthy  Unhealthy  Progress Deadline
wiki_de     1        1       0        0          2022-06-12T11:23:40+02:00

Allocations
ID        Node ID   Task Group  Version  Desired  Status   Created   Modified
a98b7e7d  005f708b  wiki_de     0        run      running  1m5s ago  1s ago
```

```bash
nomad alloc-status a98b7e7d
```

```
Recent Events:
Time                       Type        Description
2022-06-12T11:14:45+02:00  Started     Task started by client
2022-06-12T11:13:41+02:00  Driver      Downloading image
2022-06-12T11:13:41+02:00  Task Setup  Building Task Directory
2022-06-12T11:13:40+02:00  Received    Task received by client
```
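The CLI reads this information from Nomad's HTTP API, so the same allocation overview can also be scripted. The sketch below is an illustration under stated assumptions, not part of the original setup:

- It calls `GET /v1/job/<job>/allocations` on the address in `NOMAD_ADDR`.
- It falls back to Nomad's default `http://127.0.0.1:4646` when `NOMAD_ADDR` is unset.
- It assumes no ACL token or TLS is needed.
- The helper names `job_allocations` and `summarize` are hypothetical.

```python
import json
import os
import urllib.request
from typing import Any, Dict, List, Optional


def job_allocations(job_id: str, addr: Optional[str] = None) -> List[Dict[str, Any]]:
    """Fetch the allocation list of a job from the Nomad HTTP API.

    Assumption: the agent is reachable without an ACL token or TLS client certificates.
    """
    addr = addr or os.environ.get("NOMAD_ADDR", "http://127.0.0.1:4646")
    with urllib.request.urlopen(f"{addr}/v1/job/{job_id}/allocations", timeout=5) as resp:
        return json.load(resp)


def summarize(allocs: List[Dict[str, Any]]) -> List[str]:
    """One line per allocation: short alloc ID, short node ID, group, desired and client status."""
    return [
        f'{a["ID"][:8]} {a["NodeID"][:8]} {a["TaskGroup"]} {a["DesiredStatus"]} {a["ClientStatus"]}'
        for a in allocs
    ]
```

Running `summarize(job_allocations("wiki_de"))` against the cluster should list the same short IDs and statuses as the Allocations table above. That gives a scriptable alternative to watching the web UI.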

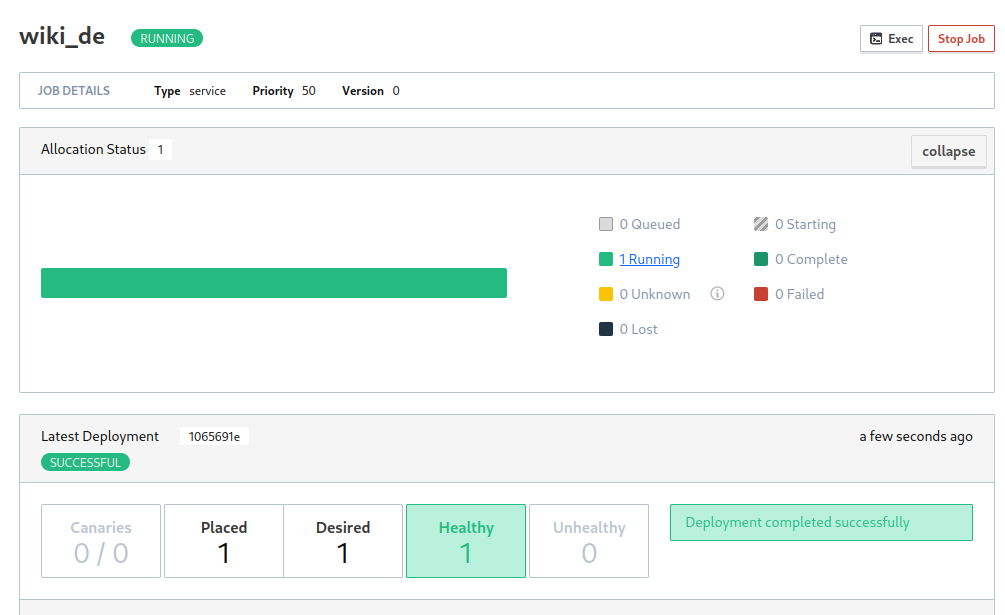

Everything seemed to have worked. Checking the UI confirms that the allocation was successful:

Checking the running containers with `docker ps` shows that the port mapping is in place as well:

```bash
docker ps
```

```
CONTAINER ID   PORTS
ca5e75497442   my.minion.ip:24372->1234/tcp, my.minion.ip:24372->1234/udp
```

I am able to access my web frontend by running curl http://my.minion.ip:24372.
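Instead of looking up the mapping with `docker ps`, it can also be read from the allocation itself. This is a hedged sketch based on the following assumptions:

- It uses the JSON returned by `GET /v1/allocation/<full-alloc-id>` (or `nomad alloc status -json`).
- In recent Nomad versions, group-level ports appear in that JSON under `AllocatedResources.Shared.Ports`.
- Each port entry has the fields `Label`, `Value` (host port), `To` (container port) and `HostIP`.
- The function name `port_mappings` is hypothetical.

Please compare this against the output of your Nomad version.

```python
from typing import Any, Dict, Tuple


def port_mappings(alloc: Dict[str, Any]) -> Dict[str, Tuple[str, int, int]]:
    """Map each port label to (host IP, host port, container port).

    Assumes the allocation JSON layout AllocatedResources.Shared.Ports.
    A missing "To" field is reported as 0.
    """
    resources = alloc.get("AllocatedResources") or {}
    shared = resources.get("Shared") or {}
    ports = shared.get("Ports") or []
    return {p["Label"]: (p.get("HostIP", ""), p["Value"], p.get("To", 0)) for p in ports}
```

For the job above this should map the `http` label from the dynamic host port (24372 in the `docker ps` output) to container port 1234. The host port is the one Nomad hands to Consul when it registers a service.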

Add a Healthcheck

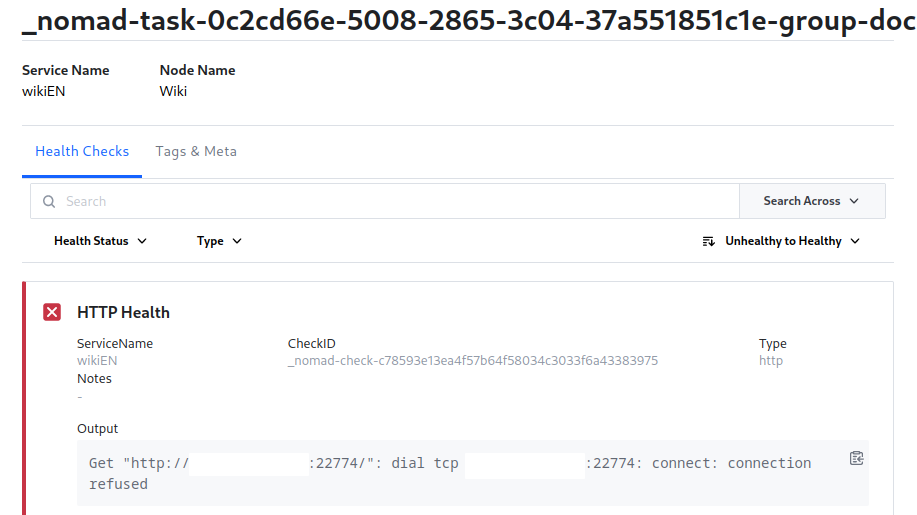

The health check initially failed because, even though I am running the container on the host network, I am still assigning a random port to it and forwarding that port to the HTTP port inside the container:

```hcl
network {
  mode = "host"

  port "http" {
    to = "1234"
  }
}
```

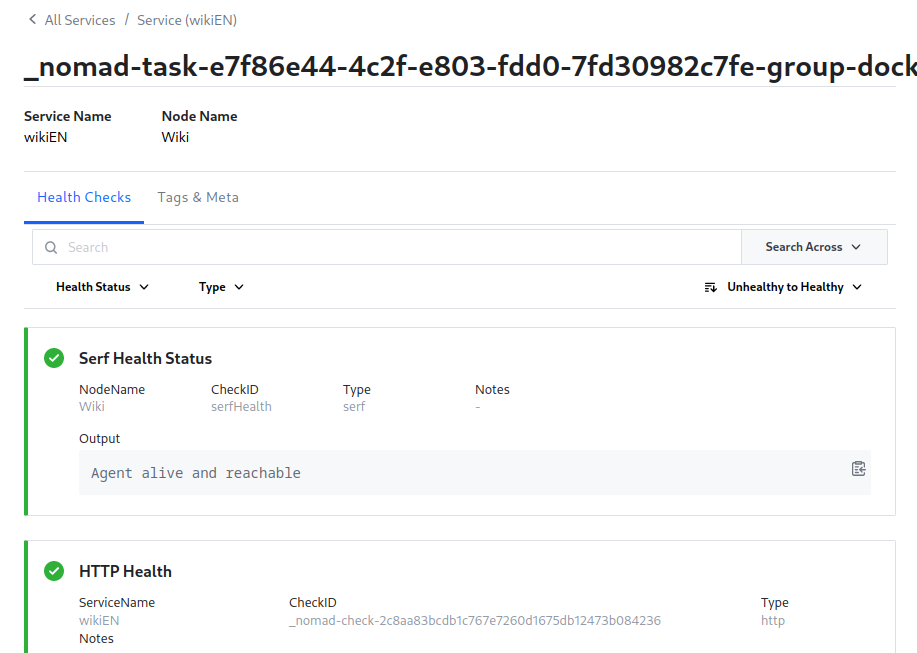

This means that Consul tries to connect to the HTTP frontend on this random port instead. Unfortunately, nothing answers there, so the health check fails.

I initially left the dynamic port in because I plan to use Consul service discovery to handle routing automatically. But it seems that, for now, I have to use a static port to continue. This is going to cause an issue later on when updating the application, because the new allocation needs the same static port that the running one is still holding:

```
Scheduler dry-run:
- WARNING: Failed to place all allocations.
  Task Group "docker" (failed to place 1 allocation):
    * Resources exhausted on 1 nodes
    * Dimension "network: reserved port collision http=1234" exhausted on 1 nodes
```

So I first have to stop the running allocation manually and then plan and run the job again to update the application. Here is the network block with a static port, followed by the complete job:

```hcl
network {
  mode = "host"

  port "http" {
    static = "1234"
  }
}
```

```hcl
job "wiki_en" {
  datacenters = ["wiki_search"]
  type        = "service"

  group "docker" {
    count = 1

    network {
      mode = "host"

      port "http" {
        static = "1234"
      }
    }

    service {
      name = "wikiEN"
      port = "http"

      tags = [
        "frontend",
        "urlprefix-/en/"
      ]

      check {
        name     = "HTTP Health"
        path     = "/"
        type     = "http"
        protocol = "http"
        interval = "10s"
        timeout  = "2s"
      }
    }

    task "wiki_en_container" {
      driver = "docker"

      config {
        image        = "mygitlab.mydomain.com:12345/wiki/wiki_de_mdx"
        ports        = ["http"]
        network_mode = "host"

        auth {
          username = "mynomaduserongitlab"
          password = "acomplicatedpassword"
        }
      }
    }
  }
}
```

This application provides a web frontend on port 1234. The service block registers the app with Consul, and the HTTP check requests `/` every 10s (with a 2s timeout) to see whether the web frontend is available.

If the task stops, Nomad restarts or reschedules it to fulfill the requirement of having one instance of this app running. To also restart the app when only the health check fails, the check would need an additional `check_restart` block. We now have a self-healing app! Nice!
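To make the check semantics concrete, here is a rough Python approximation of what a Consul HTTP check does on each interval. It sends a GET request with a timeout and maps the result to a status. Per Consul's documentation:

- a 2xx response is passing;
- 429 (Too Many Requests) is a warning;
- anything else, including a timeout or a refused connection, is critical.

This is an illustration only, not Consul's implementation, and the name `http_check` is hypothetical.

```python
import http.client
import urllib.error
import urllib.request

# Opener without proxy handling, so the check talks to the service directly
# and ignores any *_proxy environment variables.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def http_check(url: str, timeout: float = 2.0) -> str:
    """Roughly mimic a Consul HTTP check: 2xx -> passing, 429 -> warning, else critical."""
    try:
        with _OPENER.open(url, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:
        status = err.code  # non-2xx responses arrive as HTTPError
    except (OSError, http.client.HTTPException):
        return "critical"  # refused connection, timeout or malformed response
    if 200 <= status < 300:
        return "passing"
    if status == 429:
        return "warning"
    return "critical"
```

Calling `http_check("http://my.minion.ip:1234/")` corresponds to the check defined in the job (path `/`, 2s timeout). Consul repeats it every `interval`.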

But before I run it, let's define some update parameters for the application.

Updating Applications

The update block below makes sure that only one allocation of the app is updated at a time (`max_parallel = 1`). It would be better to have a count higher than one and a load-balancing service in place, but there are space constraints, so I accept some potential downtime.

The update block requires a new allocation to pass its health check for at least 10s (`min_healthy_time`). If the allocation has not become healthy within 2 minutes (`healthy_deadline`), it is marked as unhealthy. If the deployment fails, `auto_revert` rolls the job back to the last stable version. Once the canary is deemed healthy, `auto_promote` promotes it to stable.
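The timing rules can be modelled as a small function. This is a deliberately simplified sketch of how I read the update block, not Nomad's actual deployment logic:

- It only looks at a list of (seconds since the canary started, check passed) samples.
- It ignores `progress_deadline`, rescheduling and `max_parallel`.
- The names `UpdatePolicy` and `canary_decision` are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass
class UpdatePolicy:
    min_healthy_time: float = 10.0   # seconds, "10s" in the job file
    healthy_deadline: float = 120.0  # seconds, "2m" in the job file


def canary_decision(checks: Iterable[Tuple[float, bool]], policy: UpdatePolicy) -> str:
    """Simplified model of the update block: returns 'promote' or 'revert'.

    checks holds (seconds since the canary started, check passed?) samples
    in time order. The canary counts as healthy once its check has passed
    without interruption for min_healthy_time. If that does not happen by
    healthy_deadline, it counts as unhealthy. progress_deadline,
    rescheduling and max_parallel are deliberately ignored.
    """
    healthy_since: Optional[float] = None
    for t, passed in checks:
        if t > policy.healthy_deadline:
            break  # too late: the allocation is marked unhealthy
        if not passed:
            healthy_since = None  # any failure restarts the healthy timer
            continue
        if healthy_since is None:
            healthy_since = t
        if t - healthy_since >= policy.min_healthy_time:
            return "promote"  # auto_promote = true promotes the healthy canary
    return "revert"  # auto_revert = true falls back to the last stable version
```

With samples every 10s, matching the check interval in the job, a canary that passes its first two checks is promoted. One that keeps failing for the first two minutes is rolled back.

Also keep in mind that the canary is started next to the old allocation. With a single node and the static port 1234, it therefore likely runs into the port collision shown earlier.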

To make sure that Nomad always pulls the latest Docker image, you can add the `force_pull` option. This job is only going to be run after a new image has been pushed to the registry:

```hcl
job "wiki_en" {
  datacenters = ["wiki_search"]
  type        = "service"

  group "docker" {
    count = 1

    network {
      mode = "host"

      port "http" {
        static = "1234"
      }
    }

    update {
      max_parallel      = 1
      min_healthy_time  = "10s"
      healthy_deadline  = "2m"
      progress_deadline = "5m"
      auto_revert       = true
      auto_promote      = true
      canary            = 1
    }

    service {
      name = "wikiEN"
      port = "http"

      tags = [
        "frontend",
        "urlprefix-/en/"
      ]

      check {
        name     = "HTTP Health"
        path     = "/"
        type     = "http"
        protocol = "http"
        interval = "10s"
        timeout  = "2s"
      }
    }

    task "wiki_en_container" {
      driver = "docker"

      config {
        image        = "mygitlab.mydomain.com:12345/wiki/wiki_de_mdx"
        ports        = ["http"]
        network_mode = "host"
        force_pull   = true

        auth {
          username = "mynomaduserongitlab"
          password = "acomplicatedpassword"
        }
      }
    }
  }
}
```

After starting the job, I can now see the canary deployment and its promotion once the Consul health check succeeds:

Adding a Loadbalancer / App Ingress

Use Git to Download Artifacts

Preparation

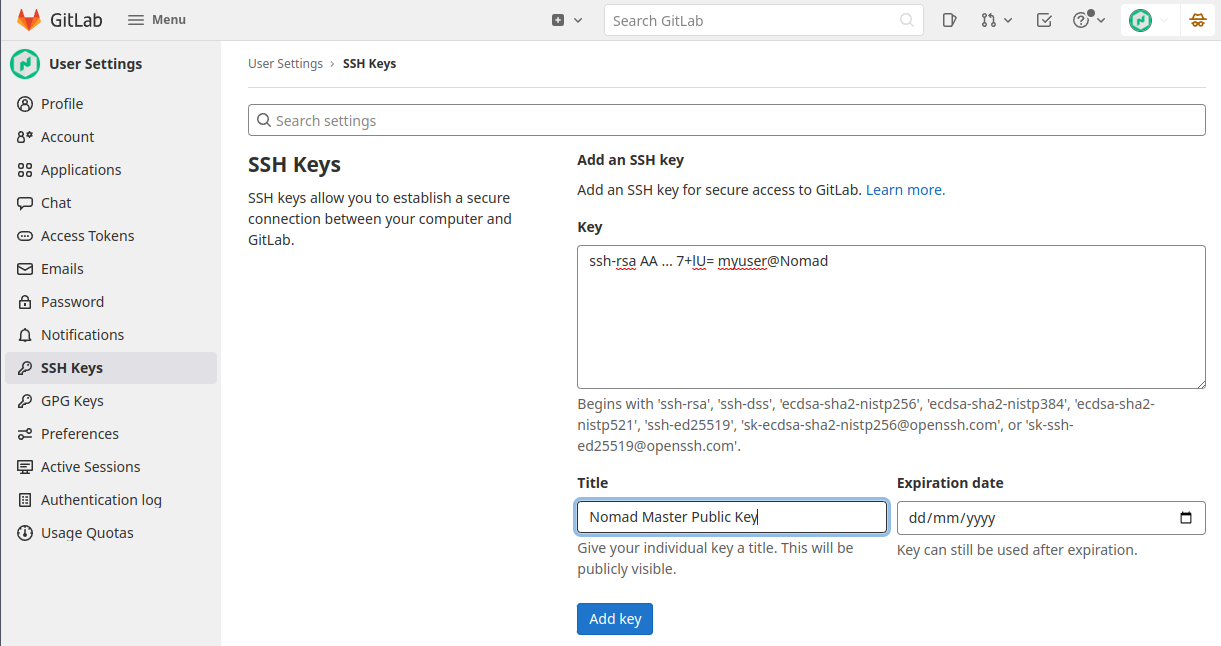

Create your SSH Key

First we need to create the private and public SSH key on the Nomad Minion node:

```bash
# ssh-keygen does not create missing parent directories
mkdir -p /etc/nomad.d/.ssh
ssh-keygen -t rsa -b 4096 -f /etc/nomad.d/.ssh/id_rsa
```

I realized afterwards that the Nomad process is executed by the `root` user on each minion. Only the master node uses the `nomad` user. This means the key could also be placed in root's home directory.

But I am going to add a default SSH config parameter that makes sure this key is used no matter where it is placed. And having everything neatly placed inside the `nomad.d` directory is maybe not a bad idea. It might even be kept under source control and used to provision new Nomad minions.

This creates the RSA private key and the matching public key inside the Nomad user's home directory. If you created the user with `useradd --system --home /etc/nomad.d --shell /bin/false nomad`, this directory is `/etc/nomad.d`:

id_rsa

```
-----BEGIN OPENSSH PRIVATE KEY-----
bYWQ=fDSAFe4 ... 5sdgfdDFSfszgf
-----END OPENSSH PRIVATE KEY-----
```

id_rsa.pub

```
ssh-rsa AA ... 7+lU= myuser@Nomad
```

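Make sure those files can be used by the Nomad user with `chown nomad:nomad /etc/nomad.d/.ssh/*`. A plain `/etc/nomad.d/*` glob does not match the hidden `.ssh` directory. Also make the private key readable only by its owner: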

```bash
chmod 400 /etc/nomad.d/.ssh/id_rsa
ls -la /etc/nomad.d/.ssh
```

```
total 16
drwxr-xr-x 2 root  root  4096 Jun  8 12:18 .
drwxr-xr-x 4 nomad nomad 4096 Jun  8 12:18 ..
-r-------- 7 nomad nomad 3.2K Jun 13 07:23 id_rsa
-rw-r--r-- 7 nomad nomad  744 Jun 13 07:23 id_rsa.pub
```
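The `chmod 400` matters because OpenSSH refuses a private key that it considers unprotected. For a key owned by the user running `ssh`, that means any permission bit for group or others ("UNPROTECTED PRIVATE KEY FILE"). Here is a small, hypothetical helper that checks a key file's mode the same way, assuming a POSIX system:

```python
import os
import stat


def private_key_mode_ok(path: str) -> bool:
    """True if neither group nor others have any permission bits on the file.

    Mirrors the 0o077 test OpenSSH applies to private keys owned by the
    connecting user: modes 0o400 and 0o600 pass, 0o644 does not.
    """
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return mode & 0o077 == 0
```

After the `chmod 400` above, `private_key_mode_ok("/etc/nomad.d/.ssh/id_rsa")` should return `True`.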

Make sure that the Nomad user's known hosts file is populated:

```bash
ssh-keyscan my.gitlab.address.com | sudo tee -a /etc/nomad.d/.ssh/known_hosts
```

Make sure that SSH uses the correct key when connecting to your Gitlab server by adding the following configuration to `/etc/ssh/ssh_config`:

```bash
nano /etc/ssh/ssh_config
```

```
Host my.gitlab.address.com
    PreferredAuthentications publickey
    IdentityFile /etc/nomad.d/.ssh/id_rsa
```

Check that Gitlab accepts the key:

```bash
ssh -T git@my.gitlab.address.com
```

```
Welcome to GitLab, @nomaduser!
```

Configuring Gitlab

Create a Nomad user in Gitlab and add the public key to its SSH keys:

Test the Connection

```bash
runuser -u nomad -- mkdir /etc/nomad.d/test
cd /etc/nomad.d/test
# scp-like Git syntax: the host is separated from the repository path by a colon
runuser -u nomad -- git clone git@my.gitlab.address.com:group/repo.git
```

If the repository is downloaded into your test directory without asking for a password, you are good to go!
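A detail that is easy to get wrong: in Git's scp-like syntax, the host is separated from the path by a colon (`git@host:group/repo.git`). Without the colon, Git treats the argument as a local path. For GitLab-style repository paths the equivalent URL form is `ssh://git@host/group/repo.git`, which is also how the artifact source appears in the `nomad plan` output further below.

Here is a hypothetical converter between the two forms. It handles only the plain `user@host:path` case:

```python
import re

# user@host:path, where user and host contain no ':' or '/' (Git's scp-like syntax)
_SCP_LIKE = re.compile(r"([^@:/]+)@([^:/]+):(.+)")


def scp_to_ssh_url(spec: str) -> str:
    """Convert 'git@host:group/repo.git' into 'ssh://git@host/group/repo.git'."""
    match = _SCP_LIKE.fullmatch(spec)
    if match is None:
        raise ValueError(f"not an scp-like Git address: {spec!r}")
    user, host, path = match.groups()
    return f"ssh://{user}@{host}/{path.lstrip('/')}"
```

Passing the colon-less form `git@my.gitlab.address.com/group/repo.git` raises a `ValueError` here, which is exactly the case Git would misread as a local directory.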

Create a Nomad Job

For testing, I am going to download some HTML files from a private Gitlab repository and serve them with httpster, a small Node.js web server for the terminal:

```bash
httpster -p 8080 -d /home/somedir/public_html
```

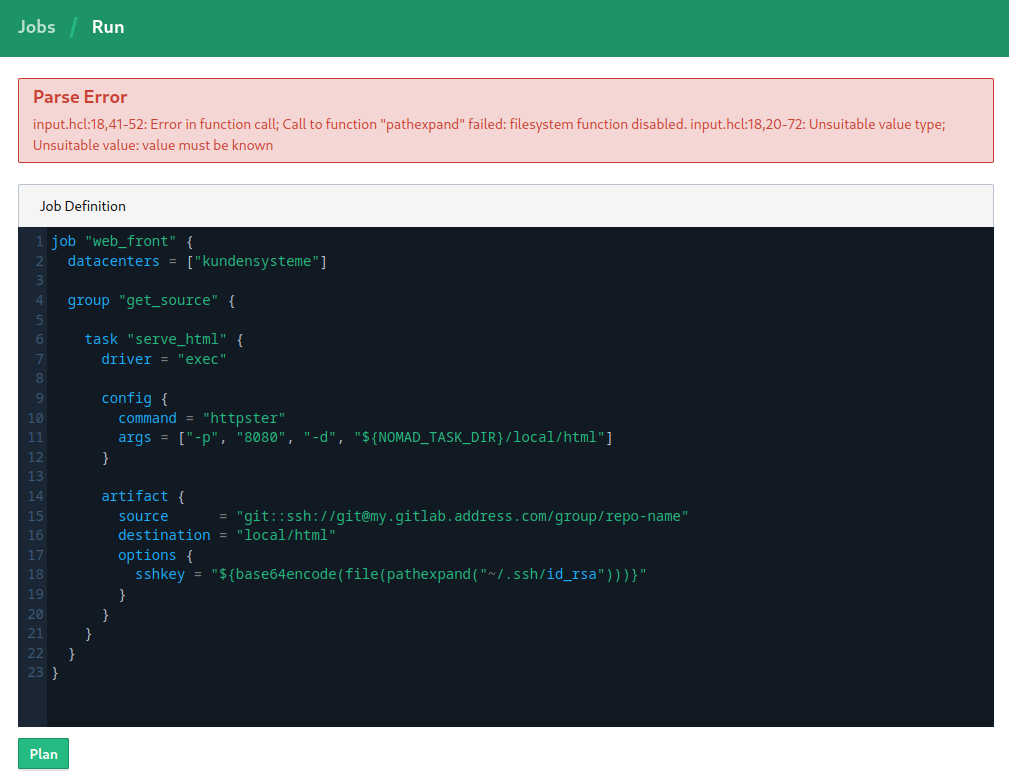

Now let's plan and run the Nomad job from the Nomad UI:

```hcl
job "web_front" {
  datacenters = ["kundensysteme"]

  group "web" {
    task "httpster" {
      driver = "exec"

      config {
        command = "httpster"
        args    = ["-p", "8080", "-d", "${NOMAD_TASK_DIR}/html"]
      }

      artifact {
        source      = "git::ssh://git@my.gitlab.address.com/group/repo.git"
        destination = "${NOMAD_TASK_DIR}/html"

        options {
          sshkey = "${base64encode(file(pathexpand("~/.ssh/id_rsa")))}"
          depth = 1
        }
      }

      resources {
        cpu    = 128
        memory = 128
      }
    }
  }
}
```

But it seems that filesystem functions like `file()` and `pathexpand()` are not available when submitting a job through the Nomad web UI:

```
Parse Error: input.hcl:18,41-52: Error in function call; Call to function "pathexpand" failed: filesystem function disabled. input.hcl:18,20-72: Unsuitable value type; Unsuitable value: value must be known
```
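The `sshkey` expression only reads the key file and Base64-encodes it. Because it is evaluated when the job file is parsed, `~` refers to the home directory of whoever runs the Nomad CLI. That is the machine submitting the job, not the client that later clones the repository.

Here is a hypothetical Python equivalent, useful for checking which key actually ends up in the job. The function name `sshkey_option` is an assumption:

```python
import base64
import os


def sshkey_option(path: str = "~/.ssh/id_rsa") -> str:
    """Python equivalent of base64encode(file(pathexpand(path))) in Nomad's HCL2."""
    expanded = os.path.expanduser(path)  # pathexpand(): "~" -> home of the current user
    # file(): read the file as UTF-8 text; newline="" keeps line endings unchanged
    with open(expanded, encoding="utf-8", newline="") as fh:
        contents = fh.read()
    return base64.b64encode(contents.encode("utf-8")).decode("ascii")  # base64encode()
```

Comparing the start and end of this string with `GetterOptions[sshkey]` in the `nomad plan` output shows whether the intended key was picked up.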

So let's create this job file on our Nomad master and execute it using the Nomad CLI:

```bash
nomad plan test_artifacts.nomad
```

```
+/- Job: "web_front"
+/- Stop: "true" => "false"
+/- Task Group: "web" (1 create)
  +/- Task: "httpster" (forces create/destroy update)
    +/- Artifact {
          GetterMode:            "any"
          GetterOptions[sshkey]: "ADgfdt...tf325sd"
          GetterSource:          "git::ssh://git@my.gitlab.address.com/group/repo.git"
          RelativeDest:          "local/html"
        }

Scheduler dry-run:
- All tasks successfully allocated.

To submit the job with version verification run:

nomad job run -check-index 8150 test_artifacts.nomad
```

```bash
nomad job run -check-index 8150 test_artifacts.nomad
```

```
==> 2022-06-13T08:24:57+02:00: Monitoring evaluation "19cbcc30"
    2022-06-13T08:24:57+02:00: Evaluation triggered by job "web_front"
==> 2022-06-13T08:24:58+02:00: Monitoring evaluation "19cbcc30"
    2022-06-13T08:24:58+02:00: Evaluation within deployment: "cb210d05"
    2022-06-13T08:24:58+02:00: Allocation "ab8494c7" created: node "005f708b", group "web"
    2022-06-13T08:24:58+02:00: Evaluation status changed: "pending" -> "complete"
==> 2022-06-13T08:24:58+02:00: Evaluation "19cbcc30" finished with status "complete"
==> 2022-06-13T08:24:58+02:00: Monitoring deployment "cb210d05"
  ✓ Deployment "cb210d05" successful

    2022-06-13T08:25:14+02:00
    ID          = cb210d05
    Job ID      = web_front
    Job Version = 9
    Status      = successful
    Description = Deployment completed successfully

    Deployed
    Task Group  Desired  Placed  Healthy  Unhealthy  Progress Deadline
    web         1        1       1        0          2022-06-13T08:35:12+02:00
```

```bash
nomad job status web_front
```

```
Deployed
Task Group  Desired  Placed  Healthy  Unhealthy  Progress Deadline
web         1        1       0        0          2022-06-13T07:15:19+02:00

Allocations
ID        Node ID   Task Group  Version  Desired  Status   Created  Modified
11526379  005f708b  web         1        run      pending  7s ago   4s ago
```

```bash
nomad alloc-status a6323ccc
```

```
Task "httpster" is "running"
Task Resources
CPU        Memory          Disk     Addresses
0/128 MHz  13 MiB/128 MiB  300 MiB

Recent Events:
Time                       Type                   Description
2022-06-13T08:25:02+02:00  Started                Task started by client
2022-06-13T08:25:01+02:00  Downloading Artifacts  Client is downloading artifacts
2022-06-13T08:24:58+02:00  Task Setup             Building Task Directory
2022-06-13T08:24:58+02:00  Received               Task received by client
```

I can verify that the web server is running:

```bash
netstat -tlnp
```

```
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address  Foreign Address  State   PID/Program name
tcp6       0      0 :::8080        :::*             LISTEN  7002/node
```

```bash
curl localhost:8080
```

```
<!DOCTYPE html>
<html>
<head>
<meta charset='utf-8'>
...
```

It works!