HashiCorp Nomad Refresher - Job Specifications

Job Specifications

artifact

The artifact stanza instructs Nomad to fetch and unpack a remote resource, such as a file, tarball, or binary. Nomad downloads artifacts using the popular go-getter library, which permits downloading artifacts from a variety of locations using a URL as the input source.

Placement: job/group/task/artifact

    task "server" {
      artifact {
        source      = "https://example.com/file.tar.gz"
        destination = "local/some-directory"

        options {
          checksum = "md5:df6a4178aec9fbdc1d6d7e3634d1bc33"
        }
      }
    }
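Because go-getter accepts several kinds of source URL, the same stanza can also fetch from a Git repository. The following is a minimal sketch; the repository URL and the destination directory are assumptions chosen only for illustration:

```hcl
task "server" {
  artifact {
    # The "git::" prefix tells go-getter to treat the URL as a Git repository.
    # Repository URL and destination are illustrative assumptions.
    source      = "git::https://github.com/hashicorp/nomad-guides"
    destination = "local/repo"
  }
}
```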

constraint

The constraint stanza restricts the set of eligible nodes. Constraints may filter on node attributes or client metadata.

Placement: job/constraint, job/group/constraint or job/group/task/constraint

    job "docs" {
      # All tasks in this job must run on linux.
      constraint {
        attribute = "${attr.kernel.name}"
        value     = "linux"
      }

      group "example" {
        # All groups in this job should be scheduled on different hosts.
        constraint {
          operator = "distinct_hosts"
          value    = "true"
        }

        task "server" {
          # All tasks must run where "my_custom_value" is greater than 3.
          constraint {
            attribute = "${meta.my_custom_value}"
            operator  = ">"
            value     = "3"
          }
        }
      }
    }
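Constraints are not limited to equality and comparison; operators such as regexp and version are also available. The sketch below is only an illustration: the regular expression and the version bound are arbitrary example values, not recommendations.

```hcl
job "docs" {
  # Only place on nodes whose kernel name matches the regular expression.
  constraint {
    attribute = "${attr.kernel.name}"
    operator  = "regexp"
    value     = "^(linux|darwin)$"
  }

  # Only place on nodes whose Nomad client is at least this version.
  # The version bound is an arbitrary example value.
  constraint {
    attribute = "${attr.nomad.version}"
    operator  = "version"
    value     = ">= 1.0.0"
  }
}
```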

env

The env stanza configures a list of environment variables to populate the task's environment before starting.

Placement: job/group/task/env

    task "server" {
      env {
        my_key = "my-value"
      }
    }

The "parameters" for the env stanza can be any key-value. The keys and values are both of type string, but they can be specified as other types. Values will be stored as their string representation. No type information is preserved.
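As a small sketch of the string conversion described above (the variable names are hypothetical), a number and a boolean can be written without quotes, and the task should then see them as plain strings:

```hcl
task "server" {
  env {
    # Written as a number and a boolean, but stored as the
    # strings "8" and "true"; no type information is preserved.
    WORKER_COUNT = 8
    DEBUG        = true
  }
}
```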

Interpolation

This example shows using Nomad interpolation to populate environment variables.

    env {
      NODE_CLASS = "${node.class}"
    }
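Other node attributes can be interpolated in the same way. A short sketch, with environment variable names that are purely illustrative:

```hcl
env {
  # Datacenter and name of the client node the task was placed on.
  DATACENTER = "${node.datacenter}"
  NODE_NAME  = "${node.unique.name}"

  # CPU architecture as fingerprinted by the Nomad client.
  CPU_ARCH = "${attr.cpu.arch}"
}
```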

lifecycle

The lifecycle stanza is used to express task dependencies in Nomad by configuring when a task is run within the lifecycle of a task group.

Placement: job/group/task/lifecycle

hook (string: <required>): Specifies when a task should be run within the lifecycle of a group. The following hooks are available:

- prestart: Will be started immediately. The main tasks will not start until all prestart tasks with sidecar = false have completed successfully.

- poststart: Will be started once all main tasks are running.

- poststop: Will be started once all main tasks have stopped successfully or exhausted their failure retries.

sidecar (bool: false): Controls whether a task is ephemeral or long-lived within the task group. If a lifecycle task is ephemeral (sidecar = false), the task will not be restarted after it completes successfully. If a lifecycle task is long-lived (sidecar = true) and terminates, it will be restarted as long as the allocation is running.

In the following example, the init task will block the main task from starting until the upstream database service is listening on the expected port:

    task "wait-for-db" {
      lifecycle {
        hook    = "prestart"
        sidecar = false
      }

      driver = "exec"

      config {
        command = "sh"
        args    = ["-c", "while ! nc -z db.service.local.consul 8080; do sleep 1; done"]
      }
    }

    task "main-app" {
      ...
    }

Companion or sidecar tasks run alongside the main task to perform an auxiliary task. Common examples include proxies and log shippers.

    task "fluentd" {
      lifecycle {
        hook    = "poststart"
        sidecar = true
      }

      driver = "docker"

      config {
        image = "fluentd/fluentd"
      }

      template {
        destination = "local/fluentd.conf"
        data        = ...
      }
    }

    task "main-app" {
      ...
    }

The example below shows a chatbot which posts a notification when the main tasks have stopped:

    task "main-app" {
      ...
    }

    task "announce" {
      lifecycle {
        hook = "poststop"
      }

      driver = "docker"

      config {
        image   = "alpine/httpie"
        command = "http"
        args = [
          "POST",
          "https://hooks.slack.com/services/T00000000/B00000000/XXXXXXXXXXXXXXXXXXXXXXXX",
          "text='All done!'"
        ]
      }
    }
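To see how the hooks order tasks within one group, the following sketch combines a prestart task, a main task, and a poststop task. The task names, the busybox image tag, and the shell commands are assumptions made for illustration only:

```hcl
group "example" {
  # Runs first; "main" does not start until this task exits successfully.
  task "init" {
    lifecycle {
      hook    = "prestart"
      sidecar = false
    }

    driver = "docker"

    config {
      image   = "busybox:1.36"
      command = "sh"
      args    = ["-c", "echo preparing"]
    }
  }

  # A task without a lifecycle stanza is a main task.
  task "main" {
    driver = "docker"

    config {
      image   = "busybox:1.36"
      command = "sh"
      args    = ["-c", "echo working; sleep 30"]
    }
  }

  # Runs once the main task has stopped.
  task "cleanup" {
    lifecycle {
      hook = "poststop"
    }

    driver = "docker"

    config {
      image   = "busybox:1.36"
      command = "sh"
      args    = ["-c", "echo cleaning up"]
    }
  }
}
```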

network

The network stanza specifies the networking requirements for the task group, including the network mode and port allocations. Note that this only applies to services that want to listen on a port. Batch jobs or services that only make outbound connections do not need to allocate ports, since they will use any available interface to make an outbound connection.

Placement: job/group/network

    job "docs" {
      group "example" {
        network {
          port "http" {}
          port "https" {}
          port "lb" {
            static = 8889
          }
        }
      }
    }

When the network stanza is defined with bridge as the networking mode, all tasks in the task group share the same network namespace. This is a prerequisite for Consul Connect. Tasks running within a network namespace are not visible to applications outside the namespace on the same host. This allows Connect-enabled applications to bind only to localhost within the shared network stack, and use the proxy for ingress and egress traffic.
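A minimal sketch of a group that uses bridge mode might look like this; the port label and the container port 8080 are illustrative assumptions:

```hcl
group "example" {
  network {
    # All tasks in this group share one network namespace.
    mode = "bridge"

    # Traffic arriving on the dynamically allocated host port is forwarded
    # to port 8080 inside the group's network namespace.
    port "http" {
      to = 8080
    }
  }
}
```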

To use Bridge Mode, you must have the reference CNI plugins installed at the location specified by the client's cni_path configuration. These plugins are used to create the bridge network and configure the appropriate iptables rules.
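The plugin location is set in the Nomad client agent configuration rather than in the job file. A sketch, assuming the plugins were unpacked to /opt/cni/bin (a commonly used location; adjust to your installation):

```hcl
# Nomad client agent configuration (not a job file).
client {
  enabled = true

  # Directory that holds the CNI plugin binaries.
  # The path is an assumption; use wherever the plugins are installed.
  cni_path = "/opt/cni/bin"
}
```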

Network Mode

mode (string: "host") - Mode of the network. The following modes are available:

- none - Task group will have an isolated network without any network interfaces.

- bridge - Task group will have an isolated network namespace with an interface that is bridged with the host. Note that bridge networking is currently supported only for the docker, exec, raw_exec, and java task drivers.

- host - Each task will join the host network namespace and a shared network namespace is not created.

- cni/cni network name - Task group will have an isolated network namespace with the network configured by CNI.

Port Parameters

- static (int: nil): Specifies the static TCP/UDP port to allocate. If omitted, a dynamic port is chosen.

- to (string: nil): Applicable when using "bridge" mode to configure the port to map to inside the task's network namespace. Omitting this field or setting it to -1 sets the mapped port equal to the dynamic port allocated by the scheduler.

- host_network (string: nil): Designates the host network name to use when allocating the port. When port mapping, the host port will only forward traffic to the matched host network address.

The label assigned to the port is used to identify the port in service discovery, and is used in the name of the environment variable that indicates which port your application should bind to.
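As a sketch of the host_network parameter, the fragment below pins a static port to a named host network. The port label "admin", the port number, and the network name "private" are hypothetical; a host network with that name would have to be defined in the client agent configuration for the allocation to be placed.

```hcl
group "example" {
  network {
    port "admin" {
      static = 9090

      # Name of a host network defined on the client.
      # "private" is a hypothetical name used for illustration.
      host_network = "private"
    }
  }
}
```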

This example specifies a dynamic port allocation for the port labeled http. Dynamic ports are allocated in a range from 20000 to 32000.

    group "example" {
      network {
        port "http" {}
      }
    }

Most services run in your cluster should use dynamic ports. This means that the port will be allocated dynamically by the scheduler, and your service will have to read an environment variable to know which port to bind to at startup.
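One way to hand the dynamically allocated port to a service is to interpolate the NOMAD_PORT_<label> variable into its arguments. The sketch below assumes the exec driver and a Python interpreter at /usr/bin/python3 on the client node; both are illustrative choices:

```hcl
group "example" {
  network {
    port "http" {}
  }

  task "server" {
    driver = "exec"

    config {
      # Interpreter path is an assumption about the client node.
      command = "/usr/bin/python3"

      # Bind the built-in HTTP server to the port chosen by the scheduler.
      args = ["-m", "http.server", "${NOMAD_PORT_http}"]
    }
  }
}
```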

This example specifies a static port allocation for the port labeled lb. Static ports bind your job to a specific port on the host it is placed on.

    network {
      port "lb" {
        static = 6539
      }
    }

Some drivers (e.g. Docker) allow you to map ports. A mapped port means that your application can listen on a fixed port (it does not need to read the environment variable) and the dynamic port will be mapped to the port in your container or virtual machine:

    group "app" {
      network {
        port "http" {
          to = 8080
        }
      }

      task "example" {
        driver = "docker"

        config {
          ports = ["http"]
        }
      }
    }

The above example is for the Docker driver. The service is listening on port 8080 inside the container. The driver will automatically map the dynamic port to this service.

When the task is started, it is passed an additional environment variable named NOMAD_HOST_PORT_http which indicates the host port that the HTTP service is bound to.
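If the application needs both values, the port variables can be re-exported under names of your choosing. A sketch, assuming the port "http" with to = 8080 from the example above; the variable names on the left are hypothetical:

```hcl
task "example" {
  env {
    # Port inside the container (the "to" value, 8080 in the example above).
    CONTAINER_PORT = "${NOMAD_PORT_http}"

    # Port on the host that forwards to the container port.
    HOST_PORT = "${NOMAD_HOST_PORT_http}"
  }
}
```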

Example: Run a Webservice

Create the Job File

The hashicorp/http-echo Docker container is a simple application that renders an HTML page containing the arguments passed to the http-echo process such as “Hello Nomad!”. The process listens on a port such as 5467 provided by another argument:

    job "http-echo-test" {
      datacenters = ["instaryun"]

      group "echo" {
        network {
          port "lb" {
            static = 8080
          }
        }

        count = 1

        task "server" {
          driver = "docker"

          config {
            image = "hashicorp/http-echo:latest"
            ports = ["lb"]
            args = [
              "-listen", ":8080",
              "-text", "${attr.os.name}",
            ]
          }
        }
      }
    }

In this file, we create a job called http-echo-test, set the driver to docker, and pass the necessary text and port arguments to the container. Because we need network access to the container to display the resulting web page, we define a network stanza that reserves static port 8080 on the host and maps it to the container.
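A variant that avoids reserving a static host port could let the scheduler pick the host port and map it to the port the container listens on. This is only a sketch: the job name http-echo-dynamic is hypothetical, and it assumes http-echo is left on its default listen port 5678, so no -listen argument is passed.

```hcl
job "http-echo-dynamic" {
  datacenters = ["instaryun"]

  group "echo" {
    count = 1

    network {
      # Dynamic host port, mapped to the container's default port 5678.
      port "http" {
        to = 5678
      }
    }

    task "server" {
      driver = "docker"

      config {
        image = "hashicorp/http-echo:latest"
        ports = ["http"]
        args  = ["-text", "Hello Nomad!"]
      }
    }
  }
}
```

With this variant the host port is not known in advance, so it has to be looked up from the allocation before the service can be reached from outside.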

Run the Job (CLI)

nomad job plan http_echo.nomad

nomad job run -check-index 0 http_echo.nomad

Check that the service is running:

    docker ps

    CONTAINER ID   IMAGE                        COMMAND                  PORTS
    4d5c5749de31   hashicorp/http-echo:latest   "/http-echo -listen …"   5678/tcp, 192.168.2.111:8080->8080/tcp, 192.168.2.111:8080->8080/udp

    nomad job status

    ID              Type     Priority  Status   Submit Date
    http-echo-test  service  50        running  2021-08-27T12:31:57+08:00

Make sure that port 8080 is open in your firewall and try out the URL:

curl 192.168.2.111:8080

debian

You can stop the job with:

nomad stop http-echo-test

Run the Job (GUI)

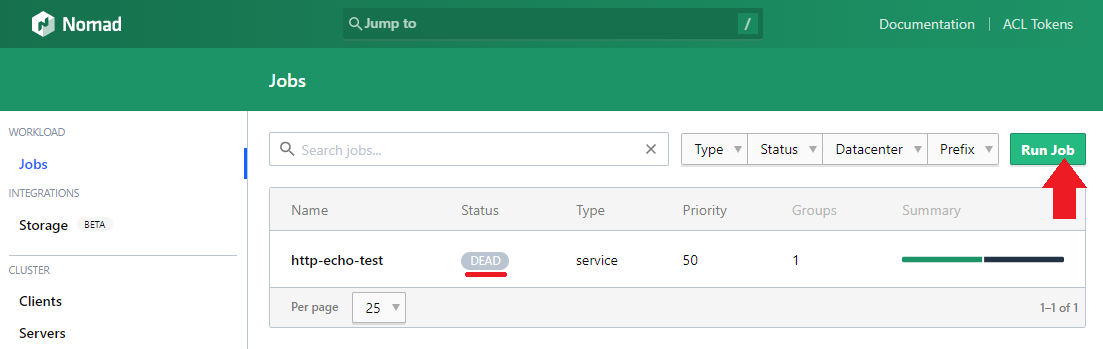

When you open the Nomad GUI you will see that the job was successfully stopped. Click on Run Job to start a new one:

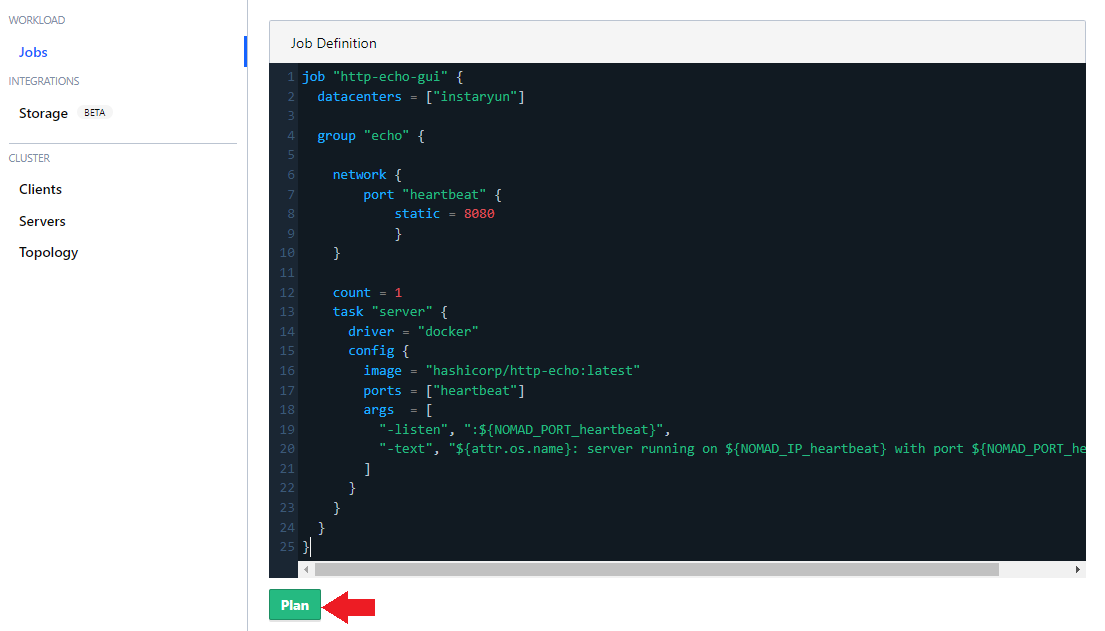

Copy in the job description and hit Plan to allocate your application on a client node in your specified datacenter:

    job "http-echo-gui" {
      datacenters = ["instaryun"]

      group "echo" {
        network {
          port "heartbeat" {
            static = 8080
          }
        }

        count = 1

        task "server" {
          driver = "docker"

          config {
            image = "hashicorp/http-echo:latest"
            ports = ["heartbeat"]
            args = [
              "-listen", ":${NOMAD_PORT_heartbeat}",
              "-text", "${attr.os.name}: server running on ${NOMAD_IP_heartbeat} with port ${NOMAD_PORT_heartbeat}",
            ]
          }
        }
      }
    }

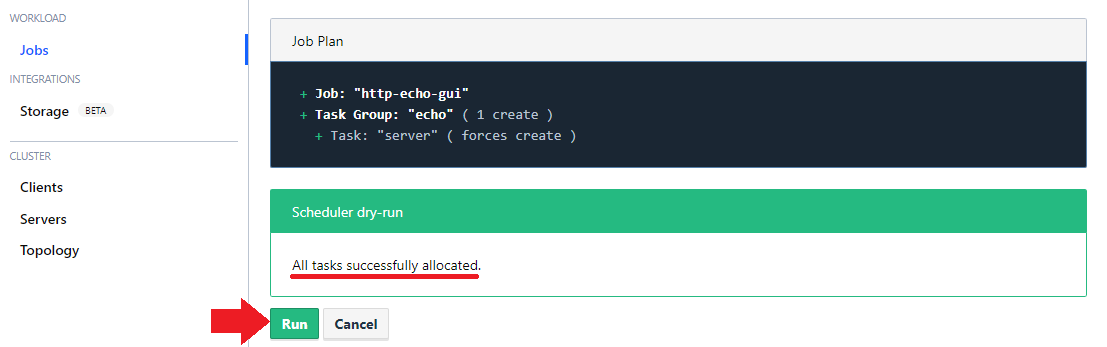

And hit Run to deploy your application:

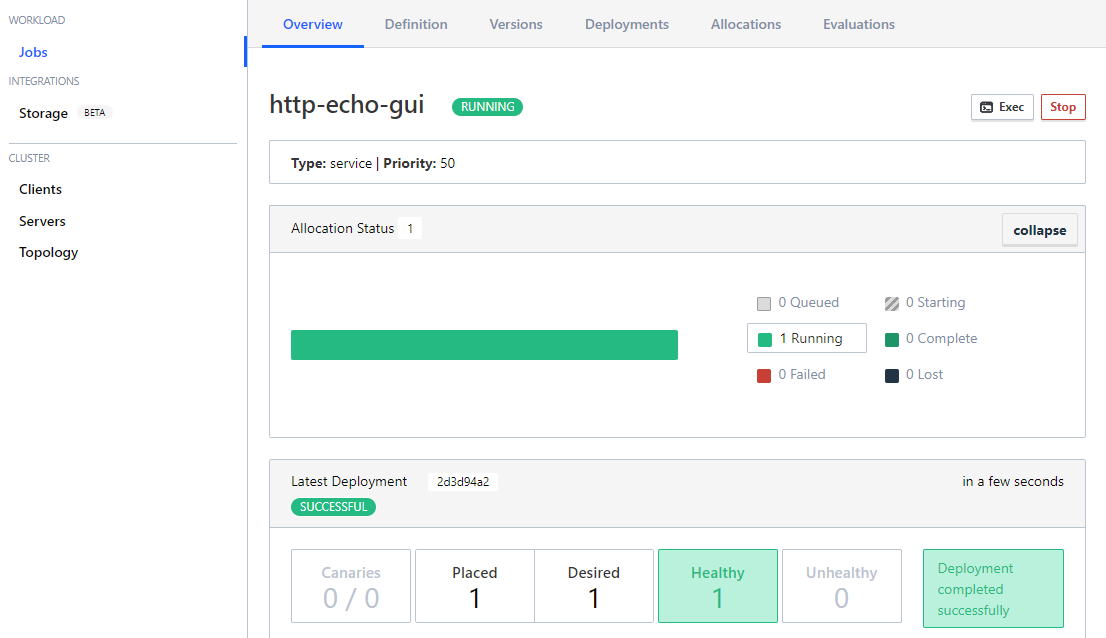

And wait for your app to come online:

And verify that the service is running: