Elasticsearch

Data Persistence

Client Configuration

First, we need to create a volume that allows us to persist the data ingested by Elasticsearch. Add the following configuration to your client.hcl file [Plugin Stanza | Host Volume Stanza]:

nano /etc/nomad.d/client.hcl

client {
  enabled = true
  servers = ["myhost:port"]

  host_volume "letsencrypt" {
    path      = "/etc/letsencrypt"
    read_only = true
  }

  host_volume "es_data" {
    path      = "/opt/es_data"
    read_only = false
  }
}

# Docker Configuration
plugin "docker" {
  # driver options have to be wrapped in a config block
  config {
    volumes {
      enabled = true
    }
  }
}

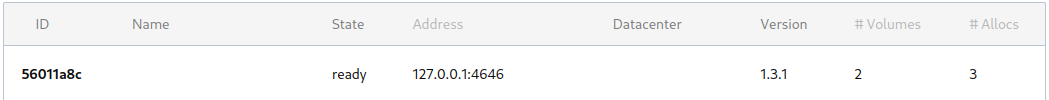

Restart the service service nomad restart and verify that the volume was picked up (I already created the directory before restarting the service - I am not sure if this is necessary):

Job Specification

Then, in the job specification, define the volume inside the Group Stanza:

volume "es_data" {
  type      = "host"
  read_only = false
  source    = "es_data"
}

Finally, add the following to the Task Stanza to use the defined volume:

volume_mount {
  volume      = "es_data"
  destination = "/usr/share/elasticsearch/data" #<-- in the container
  read_only   = false
}

Nomad Job

Docker-Compose

I previously used a docker-compose.yml file to set up an ELK cluster. The Elasticsearch part of it looks like this:

services:
  elasticsearch:
    container_name: elasticsearch
    restart: always
    build:
      context: elasticsearch/
      args:
        ELK_VERSION: $ELK_VERSION
    volumes:
      - type: bind
        source: ./elasticsearch/config/elasticsearch.yml
        target: /usr/share/elasticsearch/config/elasticsearch.yml
        read_only: true
      - type: volume
        source: elasticsearch
        target: /usr/share/elasticsearch/data
      - type: bind
        source: /opt/wiki_elk/snapshots
        target: /snapshots
    # ports:
    #   - "9200:9200"
    #   - "9300:9300"
    environment:
      # ES_JAVA_OPTS: "-Xmx256m -Xms256m"
      ES_JAVA_OPTS: '-Xms2g -Xmx2g'
      ELASTIC_PASSWORD: 'supersecretpassword'
      # Use single node discovery in order to disable production mode and avoid bootstrap checks
      # see https://www.elastic.co/guide/en/elasticsearch/reference/current/bootstrap-checks.html
      discovery.type: single-node
    networks:
      - wikinet

And the elasticsearch.yml that is included in the image during the build process is:

---
## Default Elasticsearch configuration from Elasticsearch base image.
## https://github.com/elastic/elasticsearch/blob/master/distribution/docker/src/docker/config/elasticsearch.yml
#
cluster.name: "docker-cluster"
# network.host: _site_
network.host: 0.0.0.0

## X-Pack settings
## see https://www.elastic.co/guide/en/elasticsearch/reference/current/setup-xpack.html
#
# xpack.license.self_generated.type: trial
xpack.license.self_generated.type: basic
xpack.security.enabled: true
xpack.monitoring.collection.enabled: true
xpack.security.authc:
  anonymous:
    username: anonymous_user
    roles: search_agent
    authz_exception: true

## CORS
http.cors.enabled: true
http.cors.allow-origin: "*"
http.cors.allow-methods: OPTIONS, HEAD, GET, POST, PUT, DELETE
http.cors.allow-credentials: true
http.cors.allow-headers: X-Requested-With, X-Auth-Token, Content-Type, Content-Length, Authorization, Access-Control-Allow-Headers, Accept

## Snapshots
path.repo: ["/snapshots"]

Job Specification

job "wiki_elastic" {
  datacenters = ["wiki_search"]

  group "elasticsearch" {
    count = 1

    network {
      port "http" {
        static = 9200
      }
      port "tcp" {
        static = 9300
      }
    }

    service {
      name = "elasticsearch"
    }

    volume "es_data" {
      type      = "host"
      read_only = false
      source    = "es_data"
    }

    task "elastic_container" {
      driver       = "docker"
      kill_timeout = "600s"
      kill_signal  = "SIGTERM"

      env {
        ES_JAVA_OPTS     = "-Xms2g -Xmx2g"
        ELASTIC_PASSWORD = "mysecretpassword"
        # discovery.type=single-node is not a valid HCL attribute here
        # (dotted key, unquoted value); it is passed through args below
      }

      template {
        data = <<EOH
network.host: 0.0.0.0
xpack.license.self_generated.type: basic
xpack.security.enabled: true
xpack.monitoring.collection.enabled: true
xpack.security.authc:
  anonymous:
    username: anonymous_user
    roles: search_agent
    authz_exception: true
http.cors.enabled: true
http.cors.allow-origin: "*"
http.cors.allow-methods: OPTIONS, HEAD, GET, POST, PUT, DELETE
http.cors.allow-credentials: true
http.cors.allow-headers: X-Requested-With, X-Auth-Token, Content-Type, Content-Length, Authorization, Access-Control-Allow-Headers, Accept
path.repo: ["/snapshots"]
EOH
        destination = "local/elastic/elasticsearch.yml"
      }

      volume_mount {
        volume      = "es_data"
        destination = "/usr/share/elasticsearch/data" #<-- in the container
        read_only   = false
      }

      config {
        network_mode = "host"
        image        = "docker.elastic.co/elasticsearch/elasticsearch:8.3.2"
        command      = "elasticsearch"
        ports        = ["http", "tcp"]

        volumes = [
          "local/elastic/snapshots:/snapshots",
          "local/elastic/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml",
        ]

        args = [
          "-Ecluster.name=wiki_elastic",
          "-Ediscovery.type=single-node"
        ]

        ulimit {
          memlock = "-1"
          nofile  = "65536"
          nproc   = "8192"
        }
      }

      resources {
        cpu    = 1000
        memory = 4096
      }
    }
  }
}
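One difference from the compose file is worth noting: there, /snapshots was bound to /opt/wiki_elk/snapshots on the host, while the job above mounts local/elastic/snapshots, a path relative to the task directory that is removed when the allocation is garbage collected. If the snapshots should persist, a second host volume could be set up the same way as es_data. The following is only a sketch under that assumption; the volume name es_snapshots is hypothetical and does not appear in the original setup.

```hcl
# client.hcl, inside the client block: hypothetical second host volume
host_volume "es_snapshots" {
  path      = "/opt/wiki_elk/snapshots"
  read_only = false
}

# job file, group level: claim the host volume
volume "es_snapshots" {
  type      = "host"
  read_only = false
  source    = "es_snapshots"
}

# job file, task level: would replace the
# "local/elastic/snapshots:/snapshots" entry in config.volumes
volume_mount {
  volume      = "es_snapshots"
  destination = "/snapshots" # matches path.repo in elasticsearch.yml
  read_only   = false
}
```

As with /opt/es_data, the directory would probably need to be writable by the user Elasticsearch runs as inside the container.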

Run the Job File

Elasticsearch Error Messages

java.lang.IllegalStateException: failed to obtain node locks, tried [/usr/share/elasticsearch/data]; maybe these locations are not writable

Adjust write permission on volume mount:

chmod -R 775 /opt/es_data

chown 1000:1000 -R /opt/es_data

bootstrap check failure [1] of [1]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

Insert the new entry into the /etc/sysctl.conf file with the required parameter:

vm.max_map_count = 262144

Then run the following command to change the current state of the kernel:

sysctl -w vm.max_map_count=262144

Restart Docker so that it picks up the change:

systemctl restart docker

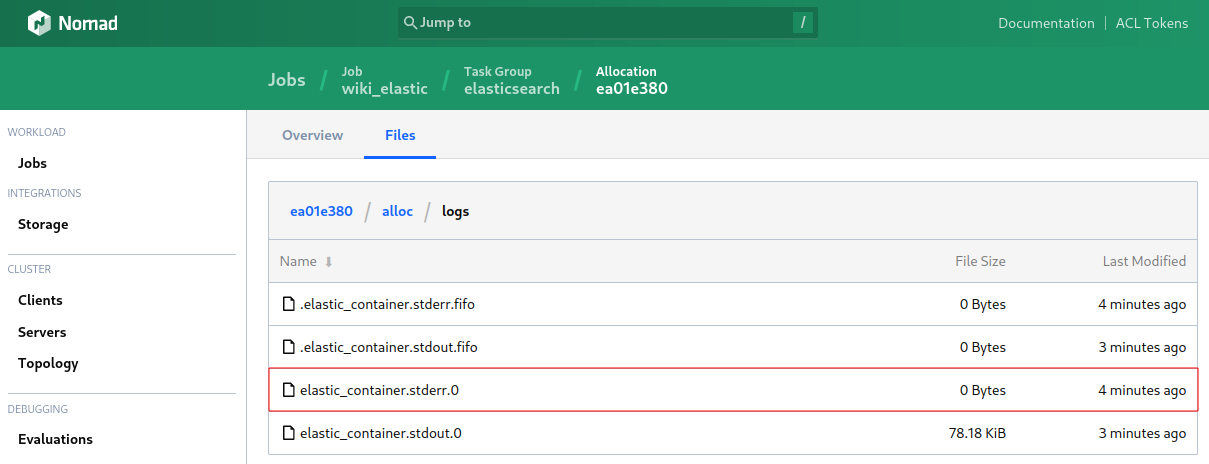

After restarting the job, it looks good this time! The container is running and the Elasticsearch ERROR log is quiet:

docker ps

docker.elastic.co/elasticsearch/elasticsearch:8.3.2 Up 2 minutes elastic_container-ea01e380-f381-2ac6-d88d-84e6cdf223a2

Adding Update Parameter

I want to add the Update Stanza:

update {
  max_parallel      = 1
  health_check      = "checks"
  min_healthy_time  = "180s"
  healthy_deadline  = "5m"
  progress_deadline = "10m"
}

But this time I am not going to add the force_pull parameter to the Docker config, as I am only going to update this service when a new version of Elasticsearch is released.
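For reference, a minimal sketch of where that parameter would sit if it were wanted. force_pull is an option of the Docker driver's config block and defaults to false, so omitting it has the same effect as the explicit setting shown here. The fragment is illustrative and trimmed to the relevant lines.

```hcl
task "elastic_container" {
  driver = "docker"

  config {
    image = "docker.elastic.co/elasticsearch/elasticsearch:8.3.2"

    # false is the driver default: an image that already exists on the
    # client is reused instead of being pulled again. With a pinned
    # version tag, a new release is rolled out by changing the tag.
    force_pull = false
  }
}
```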

Adding Consul Service Discovery

service {
  check {
    name     = "rest-http"
    type     = "http"
    port     = "http"
    path     = "/"
    interval = "30s"
    timeout  = "4s"
    # no Authorization header yet, so the request is made as the anonymous user
  }
}

Here I am getting an error message for the HTTP REST health check in Consul:

HTTP GET http://my.elasticsearch:9200/: 403 Forbidden Output: {"error":{"root_cause":[{"type":"security_exception","reason":"action [cluster:monitor/main] is unauthorized for user [anonymous_user] with roles [search_agent], this action is granted by the cluster privileges [monitor,manage,all]"}],"type":"security_exception","reason":"action [cluster:monitor/main] is unauthorized for user [anonymous_user] with roles [search_agent], this action is granted by the cluster privileges [monitor,manage,all]"},"status":403}

But why do I have to provide user authentication? Is being turned down because of an invalid login not proof that the HTTP service is running? You can add the authentication header as shown below, but I think I will later change the path to an index that can be read without authentication:

service {
  check {
    name     = "rest-http"
    type     = "http"
    port     = "http"
    path     = "/"
    interval = "30s"
    timeout  = "4s"

    header {
      Authorization = ["Basic ZWxhc3RpYzpjaGFuZ2VtZQ=="]
    }
  }
}
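As for why the 403 counts as a failure: Consul HTTP checks treat any 2xx response as passing and 429 as a warning, while every other status code marks the check as critical, even though the service is clearly answering. The alternative mentioned above, pointing the check at an index the anonymous user may read, could look roughly like this. The index name wiki_index is hypothetical, and the sketch assumes that the search_agent role has read privileges on it.

```hcl
service {
  name = "elasticsearch"

  check {
    name = "rest-http"
    type = "http"
    port = "http"

    # "wiki_index" is a placeholder; use an index that the anonymous
    # role (search_agent) is allowed to read, so no header is needed
    path = "/wiki_index/_count"

    interval = "30s"
    timeout  = "4s"
  }
}
```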

You can also combine the HTTP check with a TCP check:

service {
  name = "elasticsearch"

  check {
    name     = "transport-tcp"
    port     = "tcp"
    type     = "tcp"
    interval = "30s"
    timeout  = "4s"
  }

  # check {
  #   name     = "rest-http"
  #   type     = "http"
  #   port     = "http"
  #   path     = "/"
  #   interval = "30s"
  #   timeout  = "4s"
  # }
}
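In the block above the HTTP check is commented out, so only the TCP check is active. A sketch with both checks enabled at the same time might look as follows; it reuses the names and the Authorization header from the earlier examples. Note that the header value is the Base64 encoding of elastic:changeme, so it would have to be regenerated to match the ELASTIC_PASSWORD that is actually in use.

```hcl
service {
  name = "elasticsearch"

  # transport port 9300: only verifies that the socket accepts connections
  check {
    name     = "transport-tcp"
    port     = "tcp"
    type     = "tcp"
    interval = "30s"
    timeout  = "4s"
  }

  # REST port 9200: needs a 2xx response to pass
  check {
    name     = "rest-http"
    type     = "http"
    port     = "http"
    path     = "/"
    interval = "30s"
    timeout  = "4s"

    header {
      # Base64 of "user:password"; this value decodes to elastic:changeme
      Authorization = ["Basic ZWxhc3RpYzpjaGFuZ2VtZQ=="]
    }
  }
}
```

With both checks defined, the service is only reported as healthy in Consul when each of them passes.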

Complete Job File

job "wiki_elastic" {
  type        = "service"
  datacenters = ["wiki_search"]

  update {
    max_parallel      = 1
    health_check      = "checks"
    min_healthy_time  = "180s"
    healthy_deadline  = "5m"
    progress_deadline = "10m"
    auto_revert       = true
    auto_promote      = true
    canary            = 1
  }

  group "elasticsearch" {
    count = 1

    network {
      port "http" {
        static = 9200
      }
      port "tcp" {
        static = 9300
      }
    }

    volume "es_data" {
      type      = "host"
      read_only = false
      source    = "es_data"
    }

    task "elastic_container" {
      driver       = "docker"
      kill_timeout = "600s"
      kill_signal  = "SIGTERM"

      env {
        ES_JAVA_OPTS     = "-Xms2g -Xmx2g"
        ELASTIC_PASSWORD = "mysecretpassword"
      }

      template {
        data = <<EOH
network.host: 0.0.0.0
cluster.name: wiki_elastic
discovery.type: single-node
xpack.license.self_generated.type: basic
xpack.security.enabled: true
xpack.monitoring.collection.enabled: true
xpack.security.authc:
  anonymous:
    username: anonymous_user
    roles: search_agent
    authz_exception: true
http.cors.enabled: true
http.cors.allow-origin: "*"
http.cors.allow-methods: OPTIONS, HEAD, GET, POST, PUT, DELETE
http.cors.allow-credentials: true
http.cors.allow-headers: X-Requested-With, X-Auth-Token, Content-Type, Content-Length, Authorization, Access-Control-Allow-Headers, Accept
path.repo: ["/snapshots"]
EOH
        destination = "local/elastic/elasticsearch.yml"
      }

      volume_mount {
        volume      = "es_data"
        destination = "/usr/share/elasticsearch/data" #<-- in the container
        read_only   = false
      }

      config {
        network_mode = "host"
        image        = "docker.elastic.co/elasticsearch/elasticsearch:8.3.2"
        command      = "elasticsearch"
        ports        = ["http", "tcp"]

        volumes = [
          "local/elastic/snapshots:/snapshots",
          "local/elastic/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml",
        ]

        args = [
          "-Ecluster.name=wiki_elastic",
          "-Ediscovery.type=single-node"
        ]

        ulimit {
          memlock = "-1"
          nofile  = "65536"
          nproc   = "8192"
        }
      }

      service {
        name = "elasticsearch"

        check {
          name     = "transport-tcp"
          port     = "tcp"
          type     = "tcp"
          interval = "30s"
          timeout  = "4s"
        }

        # check {
        #   name     = "rest-http"
        #   type     = "http"
        #   port     = "http"
        #   path     = "/"
        #   interval = "30s"
        #   timeout  = "4s"
        #   header {
        #     Authorization = ["Basic ZWxhc3RpYzpjaGFuZ2VtZQ=="]
        #   }
        # }
      }

      resources {
        cpu    = 1000
        memory = 4096
      }
    }
  }
}