HashiCorp Nomad for NGINX Load Balancing

Load Balancing with NGINX

You can use Nomad's template stanza to configure NGINX so that it can dynamically update its load balancer configuration to scale along with your services. The main use case for NGINX in this scenario is to distribute incoming HTTP(S) and TCP requests from the Internet to front-end services that can handle these requests.

Demo Web App

webapp.tf

```hcl
job "demo_webapp" {
  datacenters = ["dc1"]

  group "demo" {
    count = 3

    network {
      port "http" {
        # -1: the container port equals the dynamic port Nomad allocates on the host
        to = -1
      }
    }

    service {
      name = "demo-webapp"
      port = "http"

      check {
        type     = "http"
        path     = "/"
        interval = "2s"
        timeout  = "2s"
      }
    }

    task "server" {
      # The app reads its listen port and advertised IP from these variables
      env {
        PORT    = "${NOMAD_PORT_http}"
        NODE_IP = "${NOMAD_IP_http}"
      }

      driver = "docker"

      config {
        image = "hashicorp/demo-webapp-lb-guide"
        ports = ["http"]
      }
    }
  }
}
```

```shell
$ nomad plan webapp.tf
+ Job: "demo_webapp"
+ Task Group: "demo" (3 create)
  + Task: "server" (forces create)

Scheduler dry-run:
- All tasks successfully allocated.

Job Modify Index: 0
To submit the job with version verification run:

nomad job run -check-index 0 webapp.tf
```

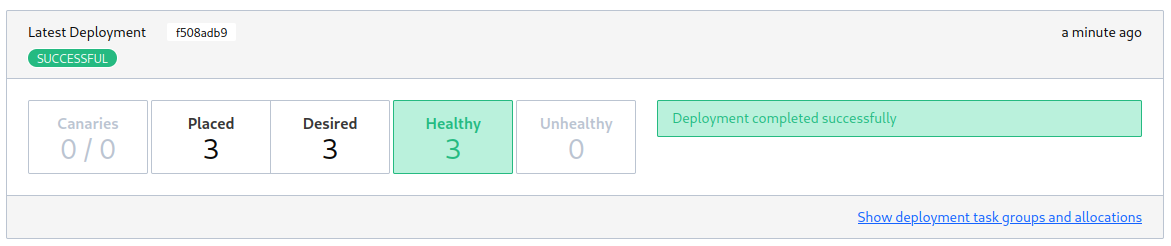

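The three instances are now deployed on the host, and each one exposes a random (dynamically allocated) port. Because of `to = -1`, the container port is the same as the host port: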

```shell
$ docker ps
CONTAINER ID   IMAGE                            PORTS
17288d176334   hashicorp/demo-webapp-lb-guide   server-ip:30809->30809/tcp
17c426f00330   hashicorp/demo-webapp-lb-guide   server-ip:24931->24931/tcp
3f863e8dc822   hashicorp/demo-webapp-lb-guide   server-ip:27252->27252/tcp
```
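This post does not show the source of the `hashicorp/demo-webapp-lb-guide` image. The Python sketch below is a hypothetical stand-in that shows why `to = -1` works together with `PORT = "${NOMAD_PORT_http}"`. The app binds to whatever port Nomad allocated and reads that port from its environment, so the host and container ports match. The greeting format mirrors the responses the demo app returns later in this post. The names `greeting`, `WebappHandler` and `serve` and the fallback defaults are assumptions.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Mapping


def greeting(env: Mapping[str, str]) -> str:
    """Build the response body from the variables set in the job's env block."""
    # NODE_IP and PORT come from ${NOMAD_IP_http} / ${NOMAD_PORT_http};
    # the fallbacks are hypothetical defaults for running outside Nomad.
    node_ip = env.get("NODE_IP", "127.0.0.1")
    port = env.get("PORT", "8080")
    return f"Welcome! You are on node {node_ip}:{port}"


class WebappHandler(BaseHTTPRequestHandler):
    """Answers every GET (including the health check on "/") with HTTP 200."""

    def do_GET(self) -> None:
        body = (greeting(os.environ) + "\n").encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve() -> None:
    # Bind to the port Nomad allocated; with to = -1 Docker publishes
    # host:PORT -> container:PORT, so no extra port translation is needed.
    port = int(os.environ.get("PORT", "8080"))
    HTTPServer(("0.0.0.0", port), WebappHandler).serve_forever()
```

If a container started by the job above called `serve()`, it would listen on the allocated port and pass the HTTP check on `/`.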

NGINX Load Balancer

This NGINX instance balances requests across the deployed instances of the web application:

nginx.tf

```hcl
job "nginx" {
  datacenters = ["dc1"]

  group "nginx" {
    count = 1

    network {
      port "http" {
        static = 80
      }
    }

    service {
      name = "nginx"
      port = "http"
    }

    task "nginx" {
      driver = "docker"

      config {
        image = "nginx:alpine"
        # Workaround: run NGINX on the host's network stack (see the note below)
        network_mode = "host"

        # The rendered template in local/ shows up as /etc/nginx/conf.d
        volumes = [
          "local:/etc/nginx/conf.d",
        ]
      }

      template {
        data = <<EOF
upstream backend {
{{ range service "demo-webapp" }}
  server {{ .Address }}:{{ .Port }};
{{ else }}server 127.0.0.1:65535; # force a 502
{{ end }}
}

server {
  listen 80;

  location / {
    proxy_pass http://backend;
  }
}
EOF

        destination = "local/load-balancer.conf"
        # Re-render on changes, then send SIGHUP so NGINX reloads its configuration
        change_mode   = "signal"
        change_signal = "SIGHUP"
      }
    }
  }
}
```

NOTE: I kept getting a 502 Bad Gateway error from NGINX when following the official tutorial. With Docker Compose, I would add all containers to the same virtual network and use its internal DNS service to connect them. Here the containers cannot "see" each other directly. Traffic always has to leave the local environment through the WAN IP of my host server, which is where my firewall sabotaged my efforts. I am not sure what the recommended way to handle this is: do you simply not use a firewall, or should I try to configure it dynamically with Nomad's `raw_exec` driver? For now I solved the issue by moving the load balancer onto the host's network stack with `network_mode = "host"`.
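To confirm that the firewall, and not NGINX, is blocking the traffic, you can test whether an allocated port can be reached through the host's WAN IP from the machine that runs the load balancer. By default, Nomad hands out dynamic ports from the range 20000 to 32000, and the `docker ps` ports above fall inside it. The Python sketch below is a minimal check; the function name `port_reachable` is hypothetical.

```python
import socket


def port_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False
```

For example, `port_reachable("server-ip", 30809)` checks one of the ports listed above, where `server-ip` stands in for the host's WAN IP. If it returns False from another machine but True from the host itself, the firewall is likely the cause.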

This configuration uses Nomad's `template` stanza to populate the NGINX load balancer configuration. The template queries Consul for the address and port of every service named `demo-webapp`, which the demo web application's job specification registers. The load balancer then exposes those instances on static port 80.
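Nomad renders the `template` block with consul-template. By default, its `service` function returns only the `demo-webapp` instances whose health checks pass. Whenever that set changes, Nomad re-renders the file and sends NGINX a `SIGHUP`, which makes it reload its configuration. The Python sketch below only mimics the shape of the rendered `upstream` block, including the `{{ else }}` fallback that points at an unused port to force a 502 when no instance is healthy. It is not how consul-template works internally, `Instance` and `render_upstream` are hypothetical names, and the whitespace may differ from the real output.

```python
from typing import Iterable, NamedTuple


class Instance(NamedTuple):
    """One healthy demo-webapp instance as returned by the Consul lookup."""
    address: str
    port: int


# Mirrors the {{ else }} branch: an address nothing listens on, so NGINX returns 502.
FALLBACK = "server 127.0.0.1:65535; # force a 502"


def render_upstream(instances: Iterable[Instance]) -> str:
    """Render the upstream block the way the template's range/else loop does."""
    servers = [f"  server {i.address}:{i.port};" for i in instances]
    body = servers if servers else ["  " + FALLBACK]
    return "\n".join(["upstream backend {", *body, "}"])
```

For example, scaling the demo job's `count` from 3 to 5 would add two `server` lines on the next render, and the NGINX job would not need a restart.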

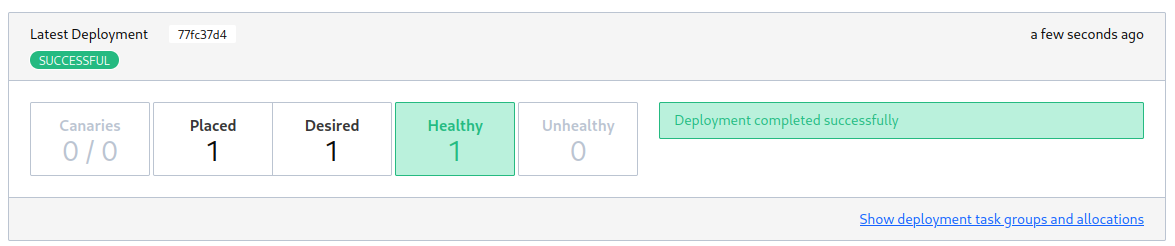

```shell
$ nomad plan nginx.tf
+ Job: "nginx"
+ Task Group: "nginx" (1 create)
  + Task: "nginx" (forces create)

Scheduler dry-run:
- All tasks successfully allocated.

Job Modify Index: 0
To submit the job with version verification run:

nomad job run -check-index 0 nginx.tf
```

Testing the round-robin load-balancing:

```shell
$ curl server-ip
Welcome! You are on node server-ip:25447
$ curl server-ip
Welcome! You are on node server-ip:29597
$ curl server-ip
Welcome! You are on node server-ip:29709
$ curl server-ip
Welcome! You are on node server-ip:25447
```
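The responses rotate through the three instances because NGINX's default balancing method for an `upstream` group is weighted round-robin. Without `weight` parameters, every server has weight 1, so the method reduces to a plain rotation. The Python sketch below models the smooth weighted round-robin rule NGINX uses for this. It is simplified: it ignores `max_fails`, `down`, backup servers and per-worker state. `Peer`, `pick` and `schedule` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Peer:
    """An upstream server with its configured and running weights."""
    name: str
    weight: int = 1
    current_weight: int = 0


def pick(peers: list[Peer]) -> Peer:
    """One selection step of smooth weighted round-robin."""
    total = 0
    for peer in peers:
        peer.current_weight += peer.weight  # every peer gains its weight
        total += peer.weight
    # max() returns the first peer with the highest current weight on ties.
    best = max(peers, key=lambda p: p.current_weight)
    best.current_weight -= total  # the chosen peer pays back the total
    return best


def schedule(peers: list[Peer], requests: int) -> list[str]:
    """Names of the peers chosen for `requests` consecutive requests."""
    return [pick(peers).name for _ in range(requests)]
```

With three equal peers, the sketch reproduces the order above: 25447, 29597, 29709, then 25447 again. With weights 5, 1 and 1 it yields a, a, b, a, c, a, a, spreading the heavy server's turns out instead of sending it five requests in a row. In a real NGINX with several worker processes and no shared `zone`, each worker rotates independently, so the observed order may not always cycle this cleanly.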