Secure Timeserver - Deploying an NTS Server using HashiCorp Nomad

From Compose to Nomad

I now have a timeserver with NTS support that I can deploy using Docker Compose:

```yaml
version: '3.9'

services:
  chrony:
    build: .
    image: cturra/ntp:latest
    container_name: chrony
    restart: unless-stopped
    volumes:
      - type: bind
        source: /opt/docker-ntp/assets/startup.sh
        target: /opt/startup.sh
      - type: bind
        source: /etc/letsencrypt/live/my.server.domain/fullchain.pem
        target: /opt/fullchain.pem
      - type: bind
        source: /etc/letsencrypt/live/my.server.domain/privkey.pem
        target: /opt/privkey.pem
    ports:
      - 123:123/udp
      - 4460:4460/tcp
    environment:
      - NTP_SERVERS=0.de.pool.ntp.org,time.cloudflare.com,time1.google.com
      - LOG_LEVEL=0
```

Installation

First, I need to add the new timeserver to my Nomad and Consul cluster, so let's install the Nomad and Consul clients using the Debian package manager:

```bash
curl -fsSL https://apt.releases.hashicorp.com/gpg | sudo apt-key add -
sudo apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main"
sudo apt update && sudo apt install nomad consul
```

HashiCorp Nomad

Add the client configuration in `/etc/nomad.d/client.hcl`:

```hcl
## https://www.nomadproject.io/docs/agent/configuration
name       = "nts"
datacenter = "chronyNTS"
data_dir   = "/opt/nomad/data"
bind_addr  = "0.0.0.0"

advertise {
  http = "127.0.0.1"
  rpc  = "my.client-server.ip"
  serf = "my.client-server.ip"
}

ports {
  http = http.port.as.configured.in.your.cluster
  rpc  = rpc.port.as.configured.in.your.cluster
  serf = serf.port.as.configured.in.your.cluster
}

client {
  enabled = true
  servers = ["my.nomad.master:port"]

  host_volume "letsencrypt" {
    path      = "/etc/letsencrypt"
    read_only = false
  }
}

server {
  enabled = false
}

acl {
  enabled = true
}

# Require TLS
tls {
  http = true
  rpc  = true

  ca_file   = "/etc/nomad.d/tls/nomad-ca.pem"
  cert_file = "/etc/nomad.d/tls/client.pem"
  key_file  = "/etc/nomad.d/tls/client-key.pem"

  verify_server_hostname = true
  verify_https_client    = true
}

# Docker Configuration
plugin "docker" {
  # Docker driver options have to be nested in a config block
  config {
    volumes {
      enabled      = true
      selinuxlabel = "z"
    }

    allow_privileged = false
    allow_caps       = ["chown", "net_raw"]
  }
}

## https://www.nomadproject.io/docs/agent/configuration/index.html#log_level
## [WARN|INFO|DEBUG]
log_level            = "WARN"
log_rotate_duration  = "30d"
log_rotate_max_files = 12
```

Make sure to copy your TLS files (CA certificate, client certificate and client key) to `/etc/nomad.d/tls` and that the data directory `/opt/nomad/data` exists. It is very important to add the Docker plugin configuration and enable volumes here. Together with the `host_volume` named `letsencrypt`, which points to `/etc/letsencrypt`, this lets us mount the Let's Encrypt certificates into our container.
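Before starting the agent, it can help to confirm that everything `client.hcl` points at actually exists on the host. This is a minimal Python sketch and not part of the original setup; the helper `missing_paths` is hypothetical and the path list simply mirrors the configuration above, so adjust it if your layout differs.

```python
from pathlib import Path
from typing import Iterable, List

# Paths referenced by client.hcl above (adjust if your layout differs).
NOMAD_CLIENT_PATHS = [
    "/etc/nomad.d/tls/nomad-ca.pem",
    "/etc/nomad.d/tls/client.pem",
    "/etc/nomad.d/tls/client-key.pem",
    "/opt/nomad/data",
    "/etc/letsencrypt",  # path behind host_volume "letsencrypt"
]


def missing_paths(paths: Iterable[str]) -> List[str]:
    """Return every path from `paths` that does not exist on this host."""
    return [p for p in paths if not Path(p).exists()]


if __name__ == "__main__":
    missing = missing_paths(NOMAD_CLIENT_PATHS)
    if missing:
        print("Missing before starting the Nomad client:")
        for path in missing:
            print("  " + path)
    else:
        print("All paths referenced by client.hcl are present.")
```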

HashiCorp Consul

Add the client configuration in `/etc/consul.d/consul.hcl`:

```hcl
# Full configuration options can be found at https://www.consul.io/docs/agent/config
datacenter  = "consul"
data_dir    = "/opt/consul"
client_addr = "127.0.0.1"
server      = false
bind_addr   = "my.client-server.ip"
encrypt     = "mysecretencryptionkey"
retry_join  = ["my.nomad.master"]

# TLS configuration
tls {
  defaults {
    ca_file = "/etc/consul.d/tls/consul-agent-ca.pem"
  }

  internal_rpc {
    verify_server_hostname = true
  }
}

auto_encrypt {
  tls = true
}

# ACL configuration
acl {
  enabled                  = true
  default_policy           = "deny"
  enable_token_persistence = true
}

# Performance
performance {
  raft_multiplier = 1
}

ports {
  grpc     = port.as.configured.in.your.cluster
  dns      = port.as.configured.in.your.cluster
  http     = port.as.configured.in.your.cluster
  https    = port.as.configured.in.your.cluster
  serf_lan = port.as.configured.in.your.cluster
  serf_wan = port.as.configured.in.your.cluster
  server   = port.as.configured.in.your.cluster
}
```

Make sure to copy the CA certificate (`ca_file`) to `/etc/consul.d/tls` and that the data directory `/opt/consul` exists.
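The `encrypt` value has to be the gossip key the rest of the Consul cluster already uses, not a new one, and the placeholder above is not a valid key. Consul's documentation describes it as a Base64-encoded 32-byte key, normally created once with `consul keygen`. A minimal Python sketch (the function `is_valid_gossip_key` is hypothetical, and the 32-byte length is taken from that documentation) that checks the format of a key before it goes into `consul.hcl`:

```python
import base64

# Assumption: Consul expects a Base64-encoded 32-byte gossip key,
# as produced by `consul keygen`.
GOSSIP_KEY_BYTES = 32


def is_valid_gossip_key(key: str) -> bool:
    """Return True if `key` is strict Base64 that decodes to 32 bytes."""
    try:
        raw = base64.b64decode(key, validate=True)
    except ValueError:  # binascii.Error is a subclass of ValueError
        return False
    return len(raw) == GOSSIP_KEY_BYTES
```

Only the format is checked here; whether the key matches the one used by the existing servers shows up when the client tries to join via `retry_join`.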

Creating the Nomad Job File

chrony_nts.nomad

ERROR: This file still has two issues: the container runs with `network_mode = "host"`, which causes a problem with the mounted TLS certificate, and the mount configuration itself is also wrong. You can find the corrected job file at the end of this article!

```hcl
job "chrony_nts" {
  datacenters = ["chronyNTS"]
  type        = "service"

  group "docker" {
    # Only start 1 instance
    count = 1

    # Set static ports
    network {
      mode = "host"

      port "ntp" {
        static = "123"
      }

      port "nts" {
        static = "4460"
      }
    }

    # Do canary updates
    update {
      max_parallel      = 1
      min_healthy_time  = "10s"
      healthy_deadline  = "2m"
      progress_deadline = "5m"
      auto_revert       = true
      auto_promote      = true
      canary            = 1
    }

    # Register the service with Consul
    service {
      name = "NTS"
      port = "nts"

      check {
        name     = "NTS Service"
        type     = "tcp"
        interval = "10s"
        timeout  = "1s"
      }
    }

    # Add the let's encrypt volume
    # volume "letsencrypt" {
    #   type      = "host"
    #   read_only = false
    #   source    = "letsencrypt"
    # }

    task "chrony_container" {
      driver = "docker"

      # Docker environment variables
      env {
        NTP_SERVERS = "0.de.pool.ntp.org,time.cloudflare.com,time1.google.com"
        LOG_LEVEL   = "1"
      }

      # Mount the let's encrypt certificates
      # volume_mount {
      #   volume      = "letsencrypt"
      #   destination = "/opt/letsencrypt"
      #   read_only   = false
      # }

      config {
        image        = "my.gitlab.com:12345/chrony-nts:latest"
        ports        = ["ntp", "nts"]
        network_mode = "host"
        force_pull   = true

        mount {
          type     = "bind"
          target   = "/opt/letsencrypt"
          source   = "/etc/letsencrypt/live"
          readonly = false

          bind_options {
            propagation = "rshared"
          }
        }

        auth {
          username = "mygitlabuser"
          password = "password"
        }
      }
    }
  }
}
```

Note that the volume is now mounted to `/opt/letsencrypt` inside the container, so we have to update the `startup.sh` script for Chrony and rebuild the container:

```bash
# final bits for the config file
{
  echo
  echo "driftfile /var/lib/chrony/chrony.drift"
  echo "makestep 0.1 3"
  echo "rtcsync"
  echo
  echo "ntsserverkey /opt/letsencrypt/live/my.server.com/privkey.pem"
  echo "ntsservercert /opt/letsencrypt/live/my.server.com/fullchain.pem"
  echo "ntsprocesses 3"
  echo "maxntsconnections 512"
  echo "ntsdumpdir /var/lib/chrony"
  echo
  echo "allow all"
} >> "${CHRONY_CONF_FILE}"
```
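The `ntsserverkey` and `ntsservercert` paths only work if they match how the host directory is mounted. The following sketch uses a hypothetical helper, `container_path`, that maps a host file through a bind mount. It shows that mounting all of `/etc/letsencrypt` at `/opt/letsencrypt` produces exactly the paths used in `startup.sh`, while mounting only `/etc/letsencrypt/live` (as the first job file does) drops the `live/` component.

```python
from pathlib import PurePosixPath
from typing import Optional


def container_path(host_file: str, host_source: str, container_target: str) -> Optional[str]:
    """Map a host file to the path a container sees through a bind mount.

    Returns None if host_file lies outside host_source, i.e. the file is
    not visible inside the container at all.
    """
    try:
        rel = PurePosixPath(host_file).relative_to(host_source)
    except ValueError:
        return None
    return str(PurePosixPath(container_target) / rel)


KEY = "/etc/letsencrypt/live/my.server.com/privkey.pem"

# Whole /etc/letsencrypt mounted at /opt/letsencrypt (host_volume variant)
print(container_path(KEY, "/etc/letsencrypt", "/opt/letsencrypt"))
# Only /etc/letsencrypt/live mounted at /opt/letsencrypt (first job file)
print(container_path(KEY, "/etc/letsencrypt/live", "/opt/letsencrypt"))
```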

Testing

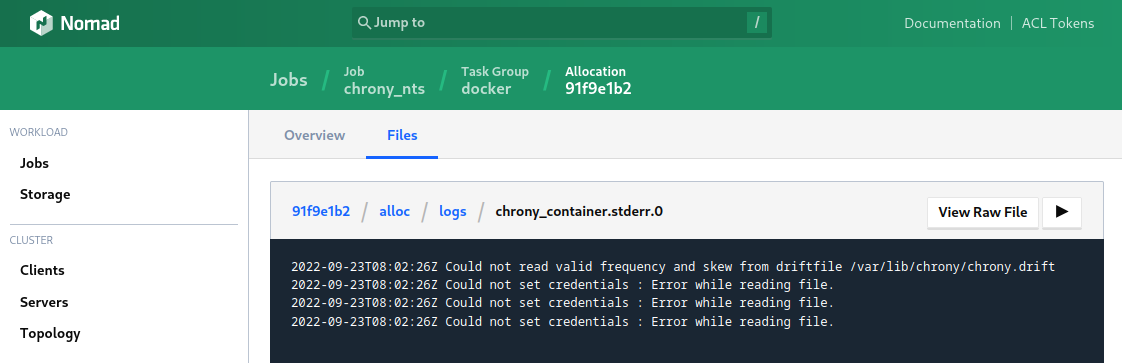

Great, so let's run `nomad plan chrony_nts.nomad` and then run the job. It starts up, but my old friend is back:

```
2022-09-22T13:18:50Z Could not set credentials : Error while reading file.
2022-09-22T13:18:50Z Could not set credentials : Error while reading file.
2022-09-22T13:18:50Z Could not set credentials : Error while reading file.
```
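Before digging into Nomad, it is worth checking the certificate files themselves. Two common reasons why chronyd cannot load them are offered here as assumptions, not as a diagnosis of this exact log: Certbot's files under `live/` are usually symlinks into `../../archive/`, which dangle when only `live/` is visible inside the container; and chronyd can drop root privileges (`+PRIVDROP` in its version string), in which case the files must be readable by that unprivileged user. The hypothetical helper below checks for both and should be run inside the container as the user chronyd runs as.

```python
import os
from pathlib import Path


def diagnose_cert_file(path: str) -> str:
    """Return a short reason why chronyd might fail to read `path`, or "ok"."""
    p = Path(path)
    # exists() follows symlinks, so a link whose target is missing reports False
    if p.is_symlink() and not p.exists():
        return f"dangling symlink -> {os.readlink(p)}"
    if not p.exists():
        return "missing"
    if not p.is_file():
        return "not a regular file"
    # os.access checks against the real uid/gid of the current process
    if not os.access(p, os.R_OK):
        return "not readable by this user"
    return "ok"


if __name__ == "__main__":
    for f in ("/opt/letsencrypt/live/my.server.com/privkey.pem",
              "/opt/letsencrypt/live/my.server.com/fullchain.pem"):
        print(f, "->", diagnose_cert_file(f))
```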

Chrony cannot read the Let's Encrypt certificates, so the NTS connection fails with a bad TLS handshake (plain NTP still works fine):

```
chronyd -Q -t 3 'server my.server.com iburst nts maxsamples 1'
2022-09-23T06:33:34Z chronyd version 4.2 starting (+CMDMON +NTP +REFCLOCK +RTC +PRIVDROP -SCFILTER +SIGND +ASYNCDNS +NTS +SECHASH +IPV6 -DEBUG)
2022-09-23T06:33:34Z Disabled control of system clock
2022-09-23T06:33:34Z TLS handshake with my.server.ip:4460 (my.server.domain) failed : The TLS connection was non-properly terminated.
```
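The same handshake can be tested without chronyd. NTS-KE (RFC 8915) runs over TLS 1.3 on TCP port 4460 and negotiates the ALPN protocol `ntske/1`, so a plain TLS client that offers that protocol and verifies the certificate is enough to see whether the server presents a usable certificate. A minimal sketch using only Python's standard library; the function name is hypothetical and it stops after the handshake instead of performing the actual key exchange.

```python
import socket
import ssl


def nts_ke_handshake_ok(host: str, port: int = 4460, timeout: float = 3.0) -> bool:
    """Return True if a verified TLS 1.3 handshake with ALPN "ntske/1" succeeds."""
    ctx = ssl.create_default_context()  # verifies the certificate chain and hostname
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3  # NTS-KE requires TLS 1.3
    ctx.set_alpn_protocols(["ntske/1"])
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with ctx.wrap_socket(sock, server_hostname=host) as tls:
                return tls.selected_alpn_protocol() == "ntske/1"
    except OSError:  # covers ssl.SSLError, refused connections and timeouts
        return False
```

Run from another machine, `nts_ke_handshake_ok("my.server.domain")` should return `True` once chronyd can load its certificate.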

Debugging

The run command returns an allocation ID that I can use to further investigate the issue:

```
nomad job run /etc/nomad.d/jobs/chrony_nts.nomad
==> 2022-09-23T09:40:52+02:00: Monitoring evaluation "a7738da8"
    2022-09-23T09:40:52+02:00: Evaluation triggered by job "chrony_nts"
    2022-09-23T09:40:52+02:00: Evaluation within deployment: "da9c6ebc"
    2022-09-23T09:40:52+02:00: Allocation "68db9b76" created: node "a68296fa", group "docker"
    2022-09-23T09:40:52+02:00: Evaluation status changed: "pending" -> "complete"
==> 2022-09-23T09:40:52+02:00: Evaluation "a7738da8" finished with status "complete"
==> 2022-09-23T09:40:52+02:00: Monitoring deployment "da9c6ebc"
```
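When scripting deployments, the allocation ID can be pulled out of this output instead of copied by hand. A small Python sketch; the helper name is hypothetical and the regular expression is an assumption based on the output format shown above, which may differ between Nomad versions.

```python
import re
from typing import List

# Matches lines like: Allocation "68db9b76" created: node "a68296fa", group "docker"
ALLOC_CREATED = re.compile(r'Allocation "([0-9a-f]+)" created')


def created_allocations(run_output: str) -> List[str]:
    """Return the short allocation IDs announced in `nomad job run` output."""
    return ALLOC_CREATED.findall(run_output)
```

Each returned ID can then be passed to `nomad alloc status`.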

Here the allocation ID is `68db9b76`, which I can now pass to the `nomad alloc status` command. At the end of its output I find the reason for the failing deployment:

```
nomad alloc status 68db9b76
...
Recent Events:
Time                       Type             Description
2022-09-23T09:40:56+02:00  Alloc Unhealthy  Unhealthy because of failed task
2022-09-23T09:40:52+02:00  Not Restarting   Error was unrecoverable
2022-09-23T09:40:52+02:00  Driver Failure   Failed to create container configuration for image "my.gitlab.com/chrony-nts:latest": volumes are not enabled; cannot mount volume: "letsencrypt"
2022-09-23T09:40:52+02:00  Driver           Downloading image
2022-09-23T09:40:52+02:00  Task Setup       Building Task Directory
2022-09-23T09:40:52+02:00  Received         Task received by client
```

Solution

- Define `volume_mount` in the `task`, but outside the `config` block.
- Do not run the container with `network_mode = "host"`.

Now the volume is mounted correctly, the certificate can be read and NTS is working:

```
docker exec -ti chrony_container-74e8b0ba-f7b3-b0ef-d1a7-90efe309535c /bin/ash

chronyd -Q -t 3 'server my.server.com iburst nts maxsamples 1'
2022-09-23T08:54:27Z chronyd version 4.2 starting (+CMDMON +NTP +REFCLOCK +RTC +PRIVDROP -SCFILTER +SIGND +ASYNCDNS +NTS +SECHASH +IPV6 -DEBUG)
2022-09-23T08:54:27Z Disabled control of system clock
2022-09-23T08:54:29Z System clock wrong by -0.000708 seconds (ignored)
2022-09-23T08:54:29Z chronyd exiting
```

The server statistics show accepted NTS-KE connections and an authenticated NTP packet:

```
chronyc serverstats
NTP packets received       : 1
NTP packets dropped        : 0
Command packets received   : 12
Command packets dropped    : 0
Client log records dropped : 0
NTS-KE connections accepted: 36
NTS-KE connections dropped : 0
Authenticated NTP packets  : 1
Interleaved NTP packets    : 0
NTP timestamps held        : 0
NTP timestamp span         : 0
```
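To check this automatically, for example from a periodic job inside the container, the output of `chronyc serverstats` can be parsed into counters. A minimal Python sketch; the helper names are hypothetical and the labels are taken from the output above.

```python
from typing import Dict


def parse_serverstats(text: str) -> Dict[str, int]:
    """Turn `chronyc serverstats` output into a {label: count} mapping."""
    stats: Dict[str, int] = {}
    for line in text.splitlines():
        label, sep, value = line.partition(":")
        if sep and value.strip().isdigit():
            stats[label.strip()] = int(value.strip())
    return stats


def nts_is_serving(stats: Dict[str, int]) -> bool:
    """True if clients completed NTS-KE and sent authenticated NTP packets."""
    return (stats.get("NTS-KE connections accepted", 0) > 0
            and stats.get("Authenticated NTP packets", 0) > 0)
```

Feed it the captured output of `chronyc serverstats`; requiring both counters distinguishes a working NTS setup from one where only the TLS key exchange (or only plain NTP) succeeds.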

Final Nomad Job File

```hcl
job "chrony_nts" {
  datacenters = ["chronyNTS"]
  type        = "service"

  group "docker" {
    count = 1

    network {
      port "ntp" {
        static = "123"
      }

      port "nts" {
        static = "4460"
      }
    }

    update {
      max_parallel      = 1
      min_healthy_time  = "10s"
      healthy_deadline  = "2m"
      progress_deadline = "5m"
      auto_revert       = true
      auto_promote      = true
      canary            = 1
    }

    service {
      name = "NTS"
      port = "nts"

      check {
        name     = "NTS Service"
        type     = "tcp"
        interval = "10s"
        timeout  = "1s"
      }
    }

    volume "letsencrypt" {
      type      = "host"
      read_only = false
      source    = "letsencrypt"
    }

    task "chrony_container" {
      driver = "docker"

      volume_mount {
        volume      = "letsencrypt"
        destination = "/opt/letsencrypt"
        read_only   = false
      }

      env {
        NTP_SERVERS = "0.de.pool.ntp.org,time.cloudflare.com,time1.google.com"
        LOG_LEVEL   = "1"
      }

      config {
        image        = "my.gitlab.com:12345/chrony-nts:latest"
        ports        = ["ntp", "nts"]
        network_mode = "default"
        force_pull   = true

        auth {
          username = "mygitlabuser"
          password = "password"
        }
      }
    }
  }
}
```
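The `check` block in this job uses `type = "tcp"`: Consul marks the service healthy as long as it can open a TCP connection to the `nts` port within the one-second timeout. The Python sketch below reproduces that logic for a manual test from another host; the function name is hypothetical.

```python
import socket


def tcp_check(host: str, port: int, timeout: float = 1.0) -> bool:
    """Pass if a TCP connection to host:port opens within `timeout` seconds,
    mirroring a Consul check with type = "tcp"."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False
```

For example, `tcp_check("my.server.domain", 4460)`. Note that such a check only covers the NTS-KE port on TCP 4460. It says nothing about NTP on UDP port 123, nor about whether chronyd could actually load its certificate, which is why the `chronyd -Q` test above is still worth running after each deployment.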