TensorFlow Transfer Learning

Sharing Intelligence

Transfer learning is a machine learning technique in which the intelligence (i.e., the weights) of a base artificial neural network is transferred to a new network as a starting point for a specific task. This can dramatically reduce the computation time required compared to training from scratch.

Here we use a ResNet50 model that has been pre-trained on ImageNet, a large public image database. The previously trained feature maps will be augmented with a new classifier (new Dense layers).

Fine-tuning can then be performed by unfreezing the top layers of the base model and slowly training the entire network to further improve performance.

Cats and Dogs

We are going to take the ResNet50 model and re-train it to distinguish photos of cats and dogs from the Cats and Dogs dataset on kaggle.com. Here we have two possible strategies:

Conservative

- Freeze the trained CNN weights of the base network, starting from the first layer

- Add a new dense layer with randomly initialized weights

Dynamic

- Initialize the CNN network with the pre-trained weights

- Use a small learning rate to prevent aggressive changes

Importing the Model

Just like the CIFAR-10 dataset, we can download the ResNet50 model and its pre-trained weights directly through Keras:

import tensorflow as tf

model = tf.keras.applications.ResNet50(weights = 'imagenet', include_top = True)

Keras models and datasets will be saved to /home/myuser/.keras on Linux.

Test Run of the Unmodified ResNet50 Model

We can use the model directly and test it on some images. Note that the model was trained on colour images with a 224x224 resolution and expects your images to have exactly that shape. Passing in a 32x32 image, for example, fails with:

ValueError: Input 0 of layer "resnet50" is incompatible with the layer: expected shape=(None, 224, 224, 3), found shape=(32, 32, 3)
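The error above shows a 32x32 image being passed in unchanged, without a batch dimension. As a minimal sketch, assuming the image is available as a NumPy array, a small square image can be upscaled by an integer factor and given a batch axis. The helper name `to_resnet_input` is hypothetical and pixel repetition is only one of several possible resizing methods.

```python
import numpy as np

def to_resnet_input(image, size=224):
    """Upscale a square HxWx3 image by pixel repetition and add a batch axis."""
    height, width, channels = image.shape
    if height != width or size % height != 0 or channels != 3:
        raise ValueError('expected a square RGB image whose side divides %d' % size)
    factor = size // height
    # nearest-neighbour upscaling: repeat every pixel `factor` times along both axes
    upscaled = np.repeat(np.repeat(image, factor, axis=0), factor, axis=1)
    # the model expects a batch, so (224, 224, 3) becomes (1, 224, 224, 3)
    return np.expand_dims(upscaled, axis=0)

# stand-in for a 32x32 colour image (all zeros, for illustration only)
small_image = np.zeros((32, 32, 3), dtype=np.float32)
batch = to_resnet_input(small_image)
print(batch.shape)
```

When loading images from disk, passing `target_size = (224, 224)` to `load_img` takes care of the resizing, so a helper like this is only needed for arrays that are already in memory.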

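# eval the un-modified model

import numpy as np

# resnet50 expects image to be of shape (1, 224, 224, 3)

sample_image = tf.keras.preprocessing.image.load_img(r'./test_images/cat.png', target_size = (224, 224))

# convert the PIL image to a float array before adding the batch dimension

sample_image = tf.keras.preprocessing.image.img_to_array(sample_image)

sample_image = np.expand_dims(sample_image, axis = 0)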

print('image shape: ',np.shape(sample_image))

# keras offers resnet50 preprocess preset we can use

# image will be processed identically to training images

preprocessed_image = tf.keras.applications.resnet50.preprocess_input(sample_image)

# run prediction

predictions = model.predict(preprocessed_image)

# use keras resnet50 prediction decoder to return top5 predictions

print('predictions:', tf.keras.applications.resnet50.decode_predictions(predictions, top = 5)[0])

The test images return the following predictions:

./test_images/cat.png

predictions: [('n02123159', 'tiger_cat', 0.39868173), ('n02123045', 'tabby', 0.32775873), ('n02124075', 'Egyptian_cat', 0.26495087), ('n02127052', 'lynx', 0.0049893092), ('n04409515', 'tennis_ball', 0.00035099618)]

./test_images/ship.jpg

predictions: [('n04147183', 'schooner', 0.5001772), ('n03947888', 'pirate', 0.35976425), ('n04612504', 'yawl', 0.12959233), ('n03662601', 'lifeboat', 0.0024379618), ('n09428293', 'seashore', 0.0018175767)]

./test_images/truck.jpg

predictions: [('n03345487', 'fire_engine', 0.8839737), ('n04461696', 'tow_truck', 0.028128117), ('n03344393', 'fireboat', 0.019656133), ('n03126707', 'crane', 0.0156197725), ('n03594945', 'jeep', 0.014000045)]

./test_images/bird.jpg

predictions: [('n01601694', 'water_ouzel', 0.37704965), ('n01795545', 'black_grouse', 0.19283505), ('n01582220', 'magpie', 0.14304504), ('n01580077', 'jay', 0.04652224), ('n01797886', 'ruffed_grouse', 0.025602208)]
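Conceptually, decoding the predictions means sorting the class probabilities and mapping the highest-scoring indices back to their labels. The following is a minimal sketch of that idea in NumPy, not the Keras implementation. The five labels and probabilities are made-up illustration values, and `top_k` is a hypothetical helper.

```python
import numpy as np

def top_k(probabilities, labels, k=3):
    """Return the k most likely (label, probability) pairs, best first."""
    # argsort is ascending, so reverse it and keep the first k indices
    order = np.argsort(probabilities)[::-1][:k]
    return [(labels[i], float(probabilities[i])) for i in order]

# toy example with 5 classes instead of the 1000 ImageNet classes
labels = ['tabby', 'lynx', 'schooner', 'jeep', 'magpie']
probabilities = np.array([0.60, 0.25, 0.05, 0.02, 0.08])

result = top_k(probabilities, labels)
print(result)
```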

Building the Model

ResNet50 was trained to distinguish 1000 different classes, of which we only need 2: we want our model to distinguish between cats and dogs. So we do not need all the very specific training that happened in ResNet's dense layers. We are only interested in the general feature detection capabilities of its convolution layers. We can therefore cut off the "top" of the model and replace it with our own dense layers, which we initialize with random weights and then train from scratch on the cats and dogs dataset:

# load only the convolution layers / general feature detection of resnet50

base_model = tf.keras.applications.ResNet50(weights = 'imagenet', include_top = False)

Now that we have extracted the general feature detection from ResNet50, we can add our own fresh dense top layers and build the new model:

# take base model convolution layers from resnet

x = base_model.output

# compress incoming feature maps from resnet layers

x = tf.keras.layers.GlobalAveragePooling2D()(x)

# and add fresh top of dense layers

# each layer has 1024 or 512 units that combine the pooled features

x = tf.keras.layers.Dense(1024, activation = 'relu')(x)

x = tf.keras.layers.Dense(1024, activation = 'relu')(x)

x = tf.keras.layers.Dense(1024, activation = 'relu')(x)

x = tf.keras.layers.Dense(512, activation = 'relu')(x)

# the final layer breaks everything down to a binary decision - cat or dog

predictions = tf.keras.layers.Dense(2, activation = 'softmax')(x)

# create new model from both components

model = tf.keras.models.Model(inputs = base_model.input, outputs = predictions)
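To make the pooling step more concrete: global average pooling collapses each feature map to a single number by averaging over its spatial positions. The NumPy sketch below assumes a 224x224 input, for which the ResNet50 convolution base produces feature maps of shape (7, 7, 2048). The random values only stand in for real activations.

```python
import numpy as np

# stand-in for the output of the convolution base: (batch, height, width, channels)
rng = np.random.default_rng(0)
feature_maps = rng.random((1, 7, 7, 2048)).astype(np.float32)

# average over the two spatial axes, leaving one value per channel
pooled = feature_maps.mean(axis=(1, 2))

print(feature_maps.shape, '->', pooled.shape)
```

The dense layers that follow therefore receive a flat vector of 2048 values per image instead of a 7x7 grid per channel.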

Now I want to use the Conservative approach defined above and freeze all the layers that were brought in from ResNet50. If we print out the layers, we see that there are 175 layers in total (indices 0 to 174) that need to be conserved (set to non-trainable):

for i, layer in enumerate(base_model.layers):

    print(i, layer.name)

This will output all layers of the ResNet50 base model:

0 input_2

1 conv1_pad

2 conv1_conv

3 conv1_bn

4 conv1_relu

...

173 conv5_block3_add

174 conv5_block3_out

Lock those layers to freeze all the weights that were learned by training on the ImageNet dataset. This preserves the general feature detection:

# lock all resnet layers 0-174

for layer in model.layers[:175]:

    layer.trainable = False

# the new dense layers have to be trainable

for layer in model.layers[175:]:

    layer.trainable = True
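With the base frozen, only the new dense layers are trained. As a rough worked example, the number of trainable parameters in that head can be counted by hand. The input width of 2048 is the channel count of the pooled ResNet50 features, and `dense_params` is a hypothetical helper.

```python
def dense_params(inputs, units):
    """Weights plus biases of a fully connected layer."""
    return inputs * units + units

# pooled features -> 1024 -> 1024 -> 1024 -> 512 -> 2 output classes
layer_sizes = [2048, 1024, 1024, 1024, 512, 2]

head_params = sum(dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))
print(head_params)  # 4723202
```

Calling `model.summary()` on the real model is the simplest way to check the trainable and non-trainable counts directly.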

To make the resulting model more specific to your use case, set layer.trainable = True for layers 1-174 and use a small learning rate to preserve the general weight distribution.
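The two phases (train only the new head first, then unfreeze everything and continue with a small learning rate) can be sketched as follows. To keep it quick to run, this uses a tiny hypothetical stand-in network instead of ResNet50. The layer names and the learning rates `1e-3` and `1e-5` are illustrative assumptions, not values from this walkthrough.

```python
import tensorflow as tf

# tiny stand-in for "pre-trained base + new head" (hypothetical, not ResNet50)
inputs = tf.keras.Input(shape=(32, 32, 3))
x = tf.keras.layers.Conv2D(8, 3, activation='relu', name='base_conv')(inputs)
x = tf.keras.layers.GlobalAveragePooling2D()(x)
outputs = tf.keras.layers.Dense(2, activation='softmax', name='new_head')(x)
toy_model = tf.keras.Model(inputs, outputs)

# phase 1 (conservative): only the new head is trainable
for layer in toy_model.layers:
    layer.trainable = (layer.name == 'new_head')
toy_model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
                  loss='categorical_crossentropy', metrics=['accuracy'])
frozen_count = len(toy_model.trainable_weights)

# phase 2 (fine-tuning): unfreeze everything and recompile with a small learning rate
for layer in toy_model.layers:
    layer.trainable = True
toy_model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-5),
                  loss='categorical_crossentropy', metrics=['accuracy'])
unfrozen_count = len(toy_model.trainable_weights)

print(frozen_count, unfrozen_count)
```

The model is recompiled after changing `trainable` so that the change takes effect for the next call to `fit`.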

Now we have to point Keras to our Cats and Dogs dataset, specifically to the training_set folder that contains two folders, cats and dogs, with the corresponding images. We can use the ImageDataGenerator together with the ResNet50-specific preprocessing function, both readily provided by Keras:

train_datagen = tf.keras.preprocessing.image.ImageDataGenerator(preprocessing_function = tf.keras.applications.resnet50.preprocess_input)

train_generator = train_datagen.flow_from_directory('./data/training_set/',

                                                    target_size = (224, 224),

                                                    color_mode = 'rgb',

                                                    batch_size = 32,

                                                    class_mode = 'categorical',

                                                    shuffle = True)
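For reference, the ResNet50 preprocessing in Keras converts images from RGB to BGR and zero-centers each colour channel with respect to the ImageNet dataset, without scaling. The NumPy sketch below illustrates that idea. The function name is hypothetical and the mean values are the commonly used ImageNet BGR means, so treat this as an approximation of what `preprocess_input` does, not as its implementation.

```python
import numpy as np

# per-channel ImageNet means in BGR order (assumed values)
IMAGENET_BGR_MEAN = np.array([103.939, 116.779, 123.68], dtype=np.float32)

def caffe_style_preprocess(batch_rgb):
    """Flip RGB to BGR and subtract the per-channel mean, without scaling."""
    batch = np.asarray(batch_rgb, dtype=np.float32)
    bgr = batch[..., ::-1]  # reverse the channel axis
    return bgr - IMAGENET_BGR_MEAN

# a single pure red pixel, shaped like a (batch, height, width, channels) image
red_pixel = np.array([[[[255.0, 0.0, 0.0]]]])
processed = caffe_style_preprocess(red_pixel)
print(processed[0, 0, 0])
```

After the flip, the red value ends up in the last channel, which is why only that entry stays positive.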

Compiling the Model

Now that we have defined the model, we need to compile it:

# compile the new model

model.compile(optimizer = 'Adam', loss = 'categorical_crossentropy', metrics = ['accuracy'])

Train the Model

Since we let ResNet50 do most of the work and simply transferred its "intelligence", we can start with a small training run of 5 epochs and see how well our new model fits our dataset:

# and train it on your dataset

history = model.fit(train_generator, steps_per_epoch=train_generator.n//train_generator.batch_size, epochs = 5)

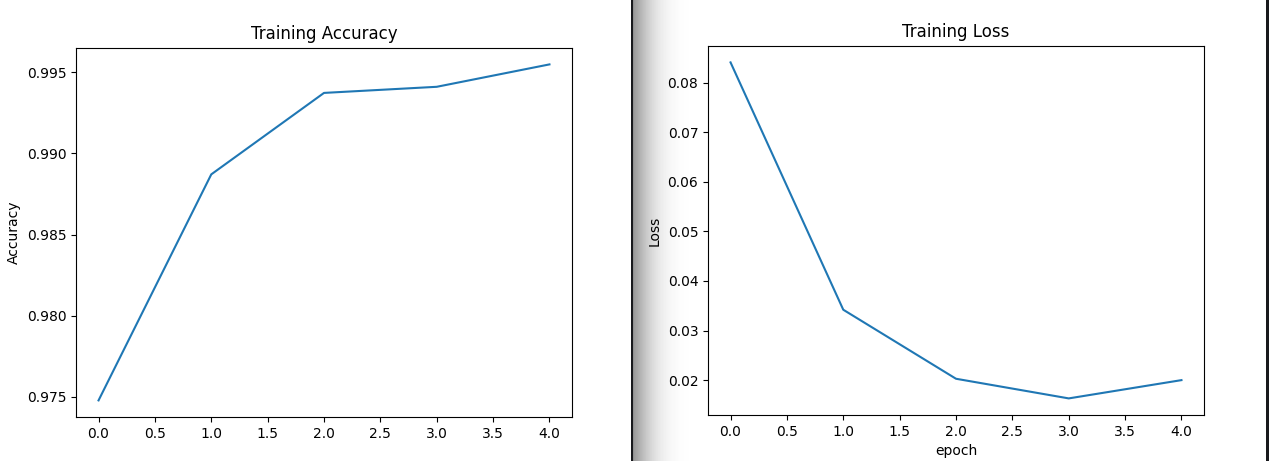

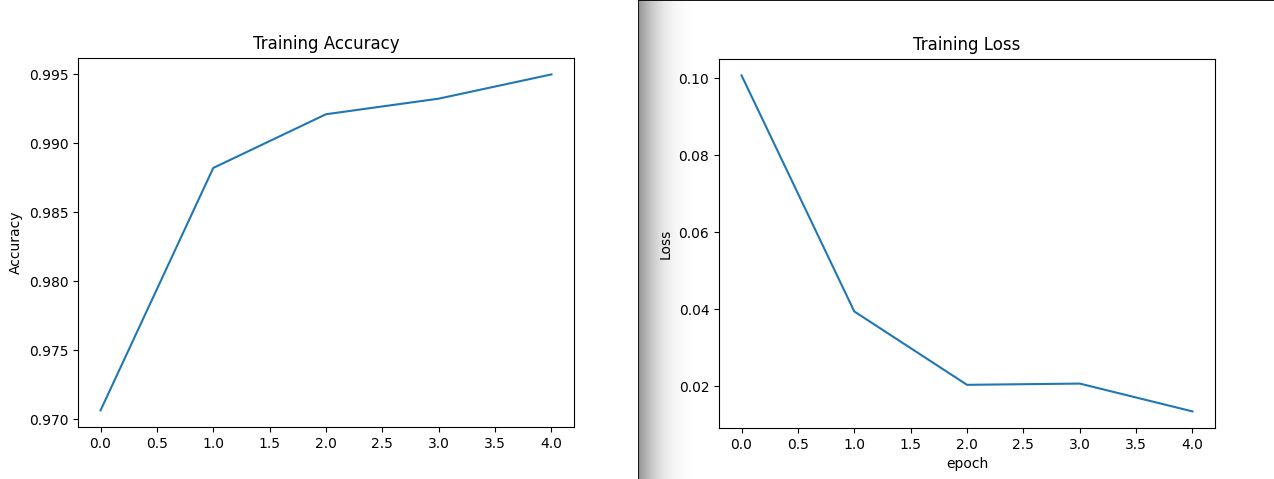

After the 5th epoch I end up at an accuracy of almost 100%, which often means that we are dealing with some overfitting:

Epoch 5/5

250/250 [==============================] - 38s 150ms/step - loss: 0.0134 - accuracy: 0.9950
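The 250 steps shown in the log follow from the integer division used for `steps_per_epoch`. A small worked example, assuming 8000 training images (the exact count is not stated here, but any count from 8000 to 8031 gives the same result with a batch size of 32):

```python
def steps_per_epoch(num_images, batch_size):
    """Number of full batches that fit into one pass over the data."""
    return num_images // batch_size

print(steps_per_epoch(8000, 32))  # 250
print(steps_per_epoch(8031, 32))  # 250, the remaining 31 images do not fill a batch
print(steps_per_epoch(8032, 32))  # 251
```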

So the next step is to assess our trained model's capability to work its way through the set of test images that are provided with the dataset.

Evaluating the Model

Plotting the loss and accuracy of the training run:

# evaluating the model - accuracy & loss

import matplotlib.pyplot as plt

acc = history.history['accuracy']

loss = history.history['loss']

## plot accuracy

plt.figure()

plt.plot(acc, label='Training Accuracy')

plt.xlabel('epoch')

plt.ylabel('Accuracy')

plt.title('Training Accuracy')

## plot loss

plt.figure()

plt.plot(loss, label='Training Loss')

plt.ylabel('Loss')

plt.title('Training Loss')

plt.xlabel('epoch')

plt.show()

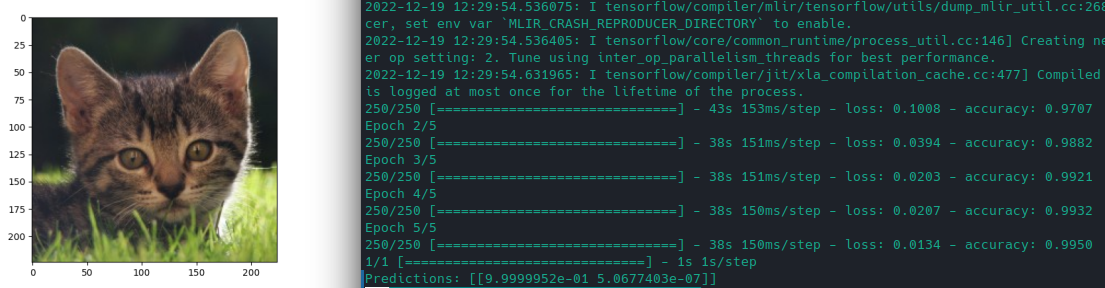

And we can use a test image - that is not contained in the training set - to verify that our model is performing well:

# take a sample image for testing

Sample_Image= tf.keras.preprocessing.image.load_img(r'./test_images/cat.png', target_size = (224, 224))

plt.imshow(Sample_Image)

plt.show()

## pre-process for resnet

Sample_Image = tf.keras.preprocessing.image.img_to_array(Sample_Image)

np.shape(Sample_Image)

Sample_Image = np.expand_dims(Sample_Image, axis = 0)

## run prediction

Sample_Image = tf.keras.applications.resnet50.preprocess_input(Sample_Image)

predictions = model.predict(Sample_Image)

print('Predictions:', predictions)

The prediction values printed here are the probabilities that the image belongs to the classes [cat, dog]. The values I am getting are roughly 99.99995% for cat and 0.00005% for dog:

Predictions: [[9.9999952e-01 5.0677403e-07]]

So there is a chance that this is actually a dog...
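To turn the raw probabilities into a label, we can pick the index of the largest value and look up the class name. The sketch below assumes the class order follows the alphanumeric order of the folder names (cats before dogs), which is how `flow_from_directory` assigns indices. Printing `train_generator.class_indices` confirms the mapping on the real generator.

```python
import numpy as np

# folder names sorted alphanumerically, mirroring the generator's class order
class_names = sorted(['dogs', 'cats'])

# the prediction printed above
predictions = np.array([[9.9999952e-01, 5.0677403e-07]])

best = int(np.argmax(predictions[0]))
print(class_names[best], predictions[0][best])
```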

Update :: Binary Crossentropy

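When dealing with binary problems (2 classes), Binary Crossentropy is a common choice for the loss function, while Categorical Crossentropy is used for multiple classes. Note that Binary Crossentropy is usually paired with a single sigmoid output unit. With the two-unit softmax layer used here, it is applied to each output separately: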

model.compile(optimizer = 'Adam', loss = 'binary_crossentropy', metrics = ['accuracy'])

Since the results were already almost perfect, I do not see much of a difference here (re-running with categorical crossentropy sometimes also results in 100% certainties):

Predictions: [[1.000000e+00 3.776364e-12]]
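One possible reason for the similar results: for a one-hot label and a two-unit softmax output whose values sum to 1, both loss functions give the same per-sample value. The NumPy sketch below works through this under those assumptions. The helper functions are simplified stand-ins, not the Keras implementations, and the example prediction is made up.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Negative log-probability assigned to the true class."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(-np.sum(y_true * np.log(y_pred)))

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean of the per-output binary losses."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    per_output = -(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred))
    return float(np.mean(per_output))

y_true = np.array([1.0, 0.0])  # one-hot label for "cat"
y_pred = np.array([0.9, 0.1])  # softmax output that sums to 1

cce = categorical_crossentropy(y_true, y_pred)
bce = binary_crossentropy(y_true, y_pred)
print(cce, bce)
```

Both values equal -log(0.9), because the second output contributes -log(1 - 0.1), which is the same term again.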