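# YOLOv8 Image Classifier

Using YOLOv8 classification models (`yolov8*-cls.pt`) to assign an entire image to one of a set of predefined classes. Here, those classes are the flower classes in `./data/Flower_Dataset`.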


Pretrained YOLOv8 classification checkpoints (ImageNet, 224 px input), as published by Ultralytics:

| Model | size (pixels) | acc top1 | acc top5 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | params (M) | FLOPs (B) at 640 |
|---|---|---|---|---|---|---|---|
| YOLOv8n-cls | 224 | 66.6 | 87.0 | 12.9 | 0.31 | 2.7 | 4.3 |
| YOLOv8s-cls | 224 | 72.3 | 91.1 | 23.4 | 0.35 | 6.4 | 13.5 |
| YOLOv8m-cls | 224 | 76.4 | 93.2 | 85.4 | 0.62 | 17.0 | 42.7 |
| YOLOv8l-cls | 224 | 78.0 | 94.1 | 163.0 | 0.87 | 37.5 | 99.7 |
| YOLOv8x-cls | 224 | 78.4 | 94.3 | 232.0 | 1.01 | 57.4 | 154.8 |
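The table trades accuracy against latency. As an illustration only, the hypothetical helper `fastest_meeting` below picks the fastest checkpoint that reaches a top-1 target, using the numbers copied from the table. Keep in mind that these are ImageNet figures at 224 px and not results on the flower dataset.

```python
from typing import Optional

# Figures copied from the YOLOv8 classification table above (ImageNet, 224 px).
MODELS = {
    # name: (top-1 %, CPU ONNX ms, A100 TensorRT ms)
    "YOLOv8n-cls": (66.6, 12.9, 0.31),
    "YOLOv8s-cls": (72.3, 23.4, 0.35),
    "YOLOv8m-cls": (76.4, 85.4, 0.62),
    "YOLOv8l-cls": (78.0, 163.0, 0.87),
    "YOLOv8x-cls": (78.4, 232.0, 1.01),
}


def fastest_meeting(min_top1: float, on_gpu: bool = False) -> Optional[str]:
    """Return the lowest-latency checkpoint whose top-1 accuracy is >= min_top1."""
    col = 2 if on_gpu else 1  # pick the GPU or the CPU latency column
    candidates = [(v[col], name) for name, v in MODELS.items() if v[0] >= min_top1]
    return min(candidates)[1] if candidates else None


print(fastest_meeting(75.0))  # YOLOv8m-cls
print(fastest_meeting(80.0))  # None
```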

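## Docker Environment

Start the Jupyter container with the project directory mounted at `/opt/app`, which is the path that appears in the prediction logs below:

```bash
docker run --gpus all -ti --rm \
  -v "$(pwd)":/opt/app -p 8888:8888 \
  --name pytorch-jupyter \
  pytorch-jupyter:latest
```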

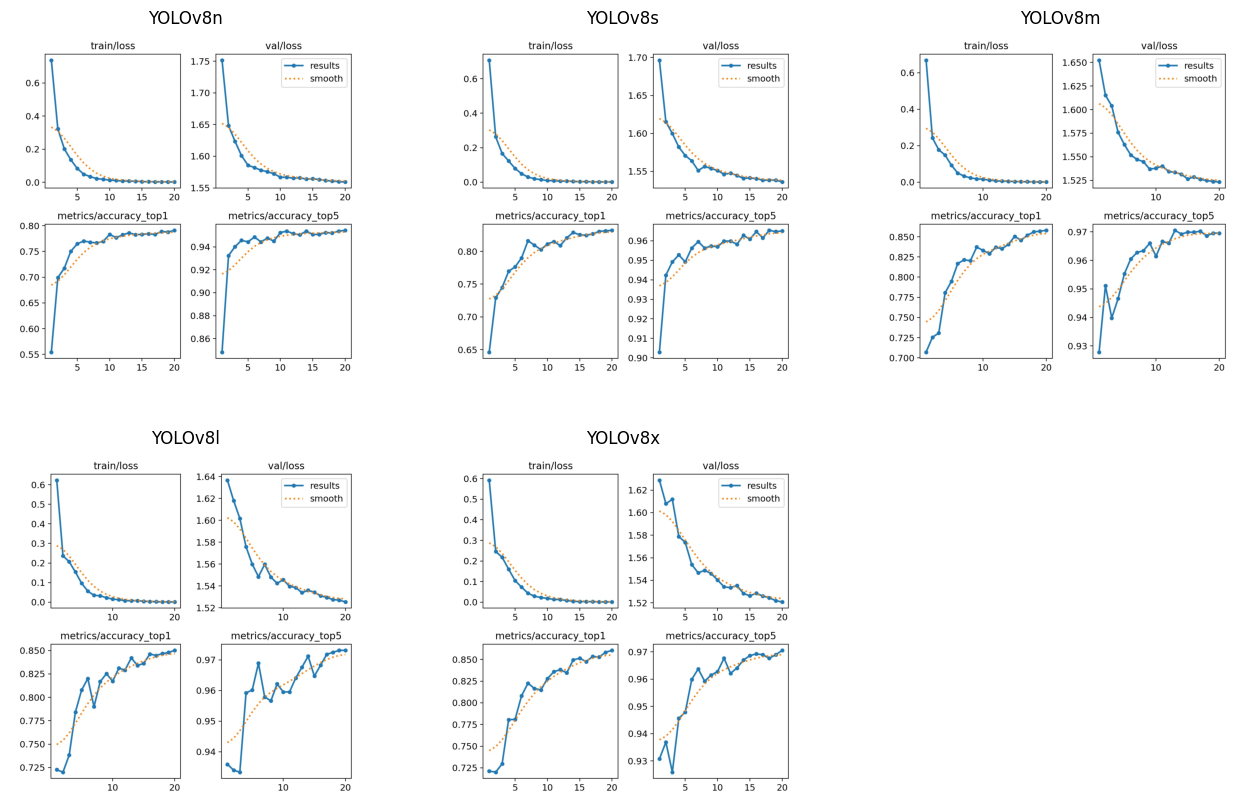

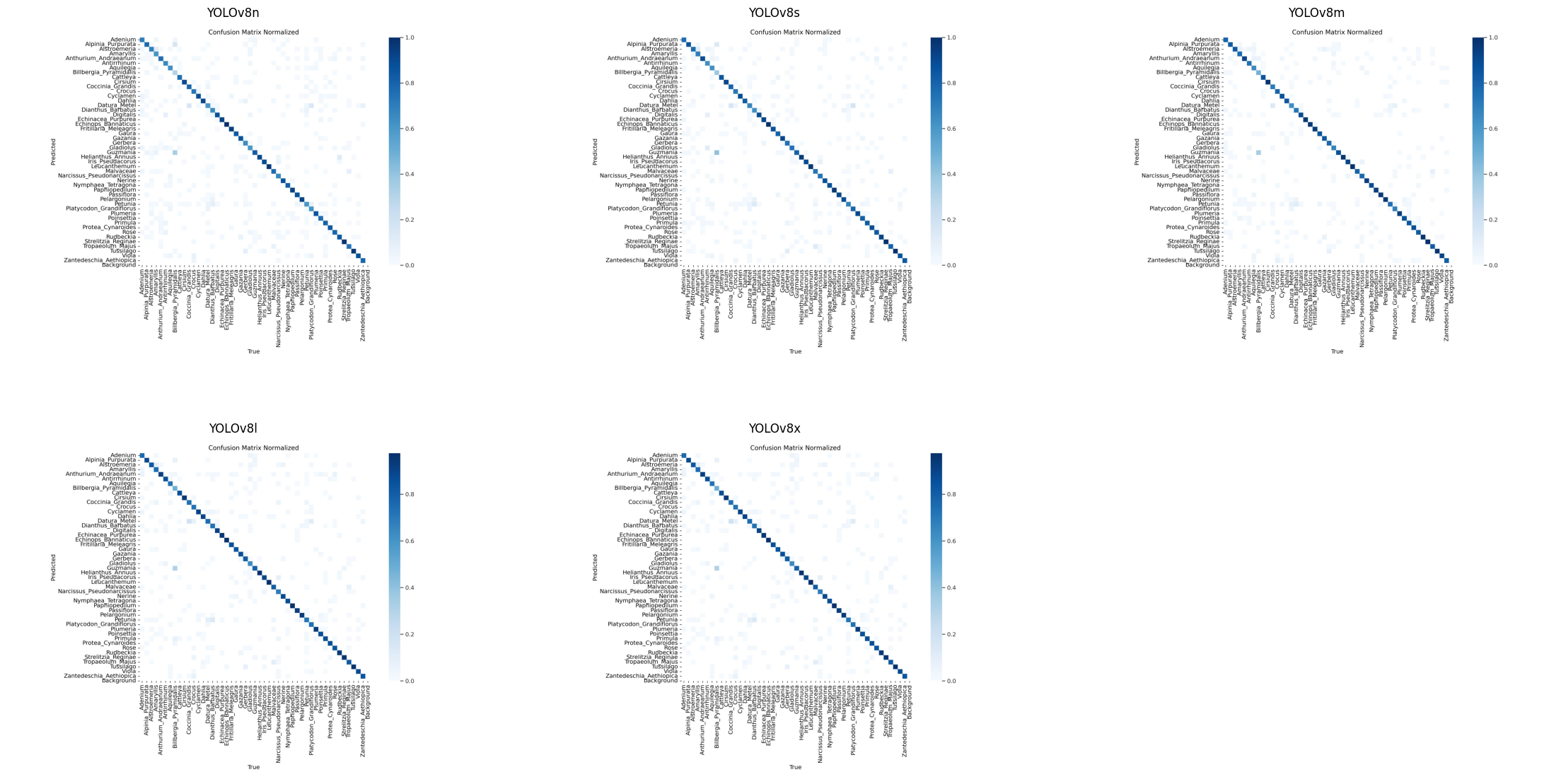

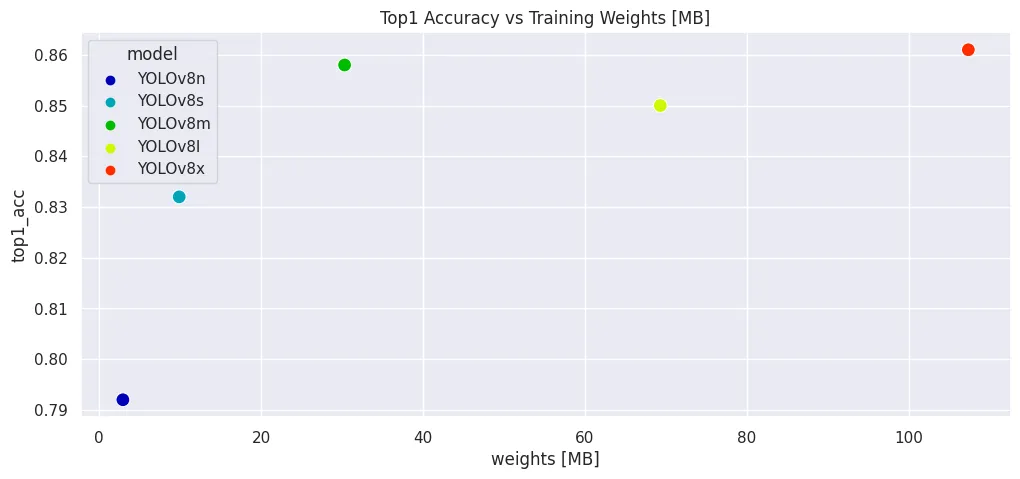

## Training Results

Each model was fine-tuned on `./data/Flower_Dataset` for 20 epochs at `imgsz=64`. The accuracies are the values returned by `model.val()` in the per-model sections.

| Model | top1_acc | top5_acc | validation [it/s] | inference [ms] | prediction preprocess [ms] | prediction inference [ms] | prediction postprocess [ms] | weights [MB] | weights (ONNX) [MB] | weights (TensorRT) [MB] |
|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv8n | 0.792 | 0.954 | 86.50 | 0.2 | 3.60 | 2.73 | 0.03 | 2.94 | 5.73 | 7.93 |
| YOLOv8s | 0.832 | 0.965 | 91.72 | 0.2 | 5.33 | 4.50 | 0.10 | 9.89 | 19.62 | 26.03 |
| YOLOv8m | 0.858 | 0.969 | 87.90 | 0.4 | 0.47 | 5.00 | 0.07 | 30.32 | 60.40 | 63.70 |
| YOLOv8l | 0.850 | 0.973 | 72.36 | 0.8 | 0.50 | 7.30 | 0.07 | 69.33 | 138.31 | 166.35 |
| YOLOv8x | 0.861 | 0.971 | 53.22 | 1.1 | 2.17 | 14.40 | 0.10 | 107.38 | 214.37 | 225.14 |
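The prediction columns appear to be per-image means over the three sample predictions logged in each model section. For the YOLOv8s row, the averages of its three `Speed:` lines reproduce 5.33 / 4.50 / 0.10 ms, but this reading is an assumption rather than something the notes state. The sketch below parses such log lines and averages each stage. `mean_speeds` is a hypothetical helper, not an Ultralytics function.

```python
import re
from statistics import mean

# Speed lines printed by the YOLOv8s predictions (copied from the logs).
SPEED_LINES = [
    "Speed: 0.5ms preprocess, 2.7ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)",
    "Speed: 15.1ms preprocess, 7.6ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)",
    "Speed: 0.4ms preprocess, 3.2ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)",
]

# Captures e.g. ("15.1", "preprocess") from "15.1ms preprocess".
SPEED_RE = re.compile(r"([\d.]+)ms (preprocess|inference|postprocess)")


def mean_speeds(lines):
    """Average the per-stage timings (ms) over several 'Speed:' log lines."""
    stages = {"preprocess": [], "inference": [], "postprocess": []}
    for line in lines:
        for value, stage in SPEED_RE.findall(line):
            stages[stage].append(float(value))
    return {stage: mean(values) for stage, values in stages.items()}


print(mean_speeds(SPEED_LINES))
```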

## Model Export Options

| Format | format Argument | Model | Metadata | Arguments |
|---|---|---|---|---|
| PyTorch | - | yolov8n-cls.pt | ✅ | - |
| TorchScript | torchscript | yolov8n-cls.torchscript | ✅ | imgsz, optimize |
| ONNX | onnx | yolov8n-cls.onnx | ✅ | imgsz, half, dynamic, simplify, opset |
| OpenVINO | openvino | yolov8n-cls_openvino_model/ | ✅ | imgsz, half |
| TensorRT | engine | yolov8n-cls.engine | ✅ | imgsz, half, dynamic, simplify, workspace |
| CoreML | coreml | yolov8n-cls.mlpackage | ✅ | imgsz, half, int8, nms |
| TF SavedModel | saved_model | yolov8n-cls_saved_model/ | ✅ | imgsz, keras |
| TF GraphDef | pb | yolov8n-cls.pb | ❌ | imgsz |
| TF Lite | tflite | yolov8n-cls.tflite | ✅ | imgsz, half, int8 |
| TF Edge TPU | edgetpu | yolov8n-cls_edgetpu.tflite | ✅ | imgsz |
| TF.js | tfjs | yolov8n-cls_web_model/ | ✅ | imgsz |
| PaddlePaddle | paddle | yolov8n-cls_paddle_model/ | ✅ | imgsz |
| ncnn | ncnn | yolov8n-cls_ncnn_model/ | ✅ | imgsz, half |

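Install the export dependencies from inside the notebook, then import the model class:

```python
!pip install nvidia-tensorrt sng4onnx onnx_graphsurgeon onnx onnxsim onnxruntime-gpu  # for TF exports also add: tensorflow tflite_support onnx2tf

from ultralytics import YOLO
```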


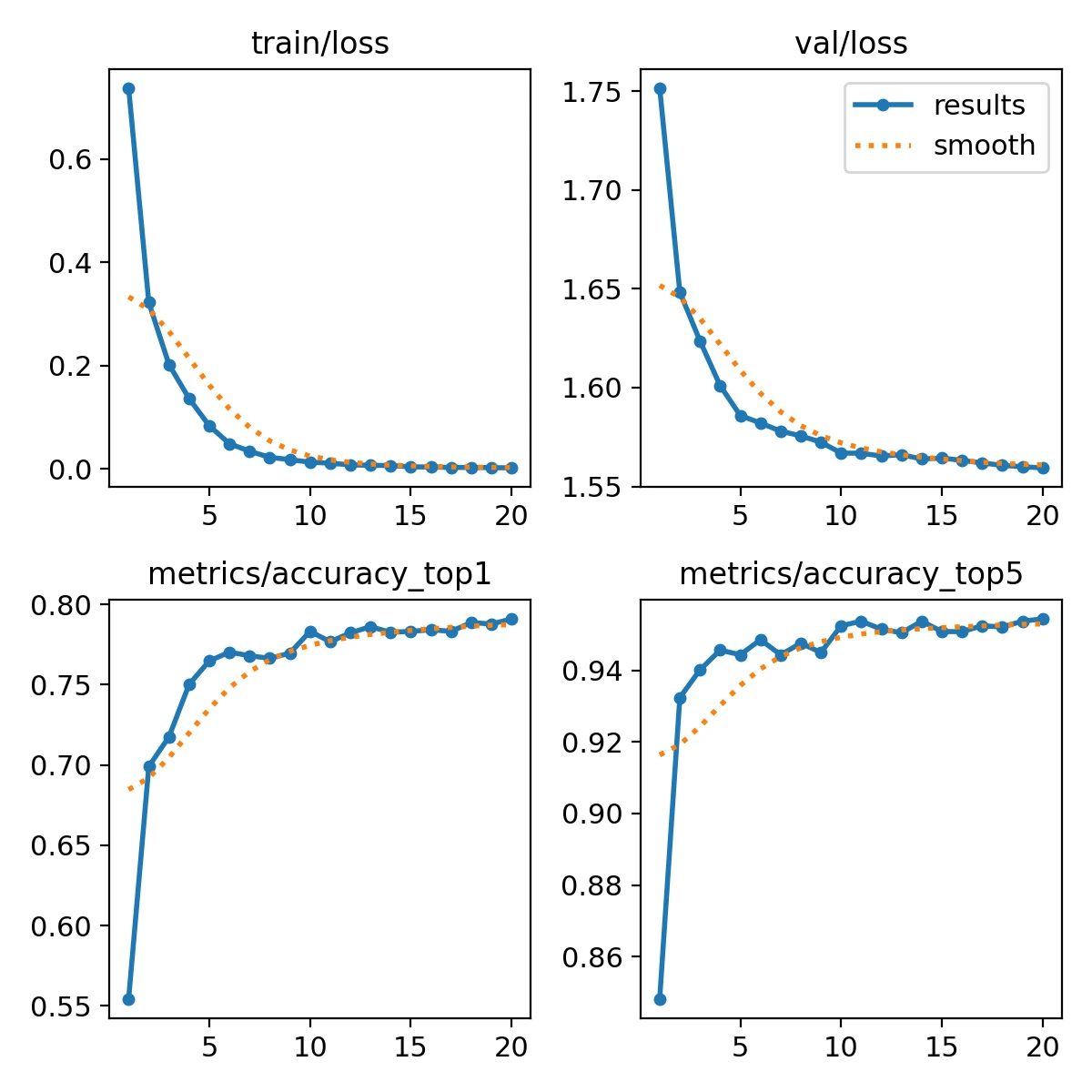

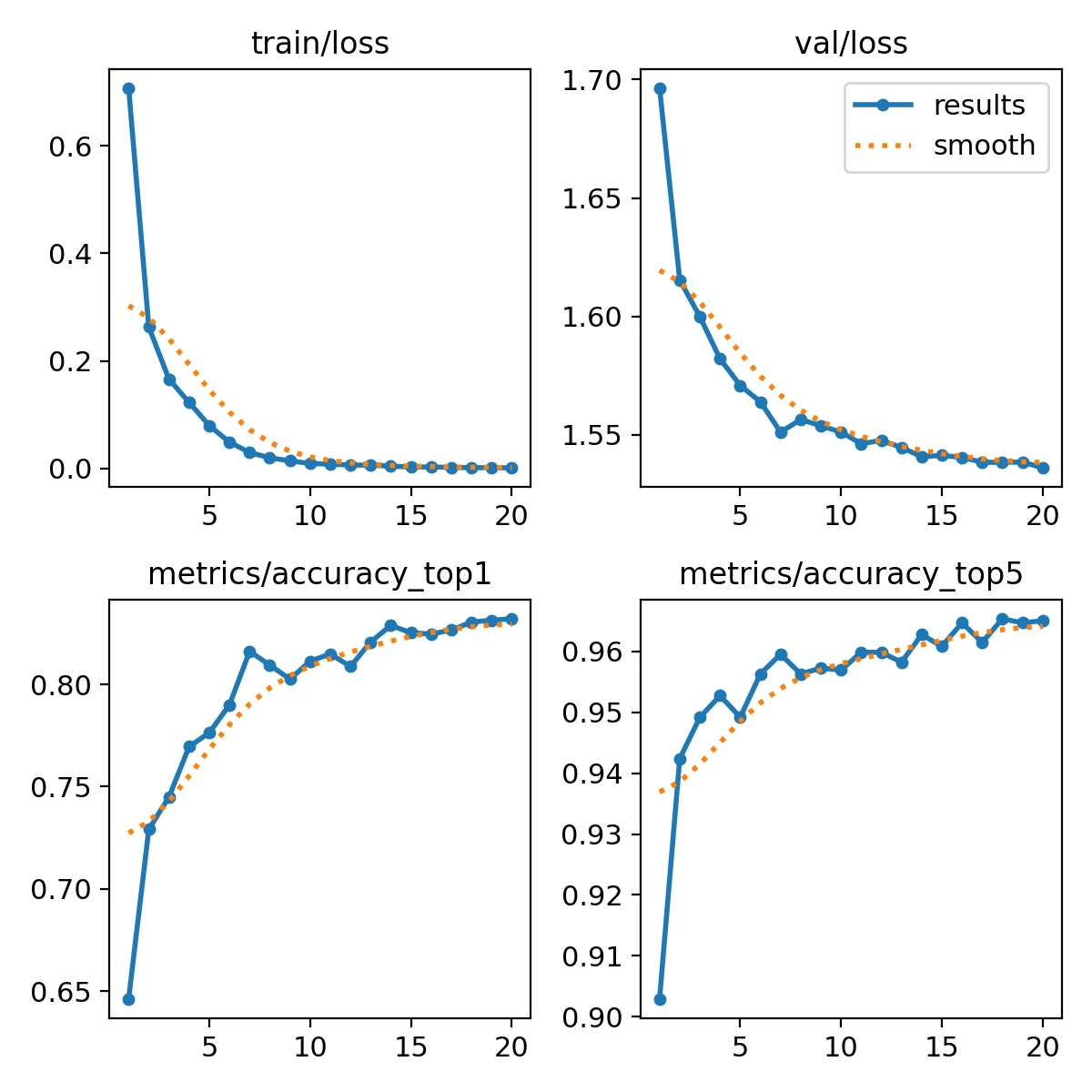

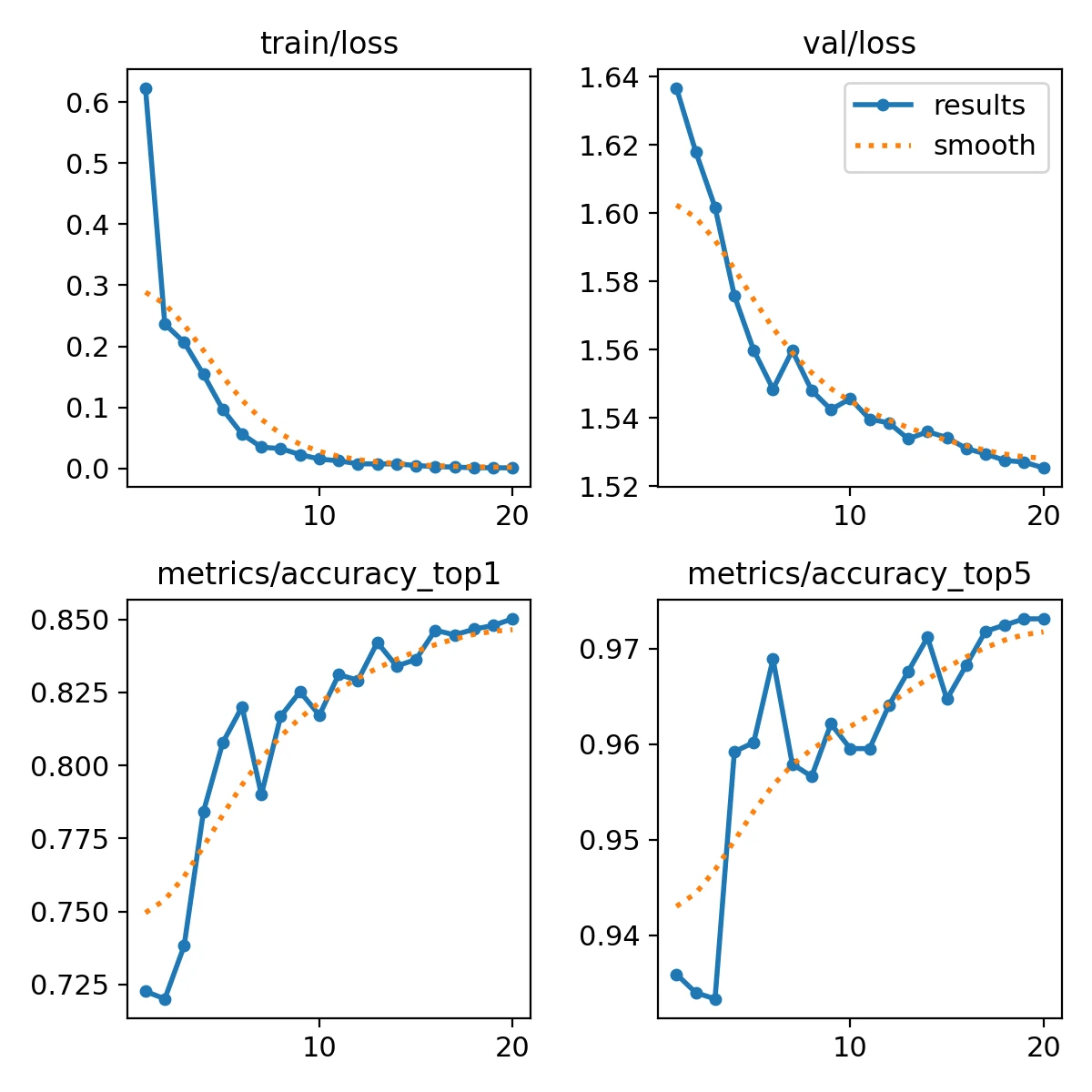

## YOLOv8n

```python
model = YOLO('yolov8n-cls.pt')  # load a pretrained model (recommended for training)

# Train the model
results = model.train(data='./data/Flower_Dataset', epochs=20, imgsz=64)
```
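`model.train(data=...)` is pointed at a directory rather than a YAML file. For classification, Ultralytics expects an ImageNet-style layout: a `train/` split plus `val/` or `test/`, each holding one sub-folder per class. The folder names become the class names. The checker below is a hypothetical helper, not part of Ultralytics, that verifies this layout before a long training run. The set of image suffixes it counts is an assumption.

```python
from pathlib import Path

# Suffixes treated as images here (an assumption; adjust to your data).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp", ".webp"}


def summarize_split(split_dir: Path) -> dict:
    """Count image files in each class sub-folder of one split, e.g. train/."""
    counts = {}
    for class_dir in sorted(p for p in split_dir.iterdir() if p.is_dir()):
        counts[class_dir.name] = sum(
            1 for f in class_dir.iterdir() if f.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts


def check_cls_layout(root: str) -> dict:
    """Require train/ plus val/ or test/, then return per-split, per-class counts."""
    root_path = Path(root)
    splits = [s for s in ("train", "val", "test") if (root_path / s).is_dir()]
    if "train" not in splits or not any(s in splits for s in ("val", "test")):
        raise FileNotFoundError(f"{root} needs train/ and val/ (or test/) sub-folders")
    summary = {s: summarize_split(root_path / s) for s in splits}
    # Every split should contain the same class folders as train/.
    for s, counts in summary.items():
        if set(counts) != set(summary["train"]):
            print(f"warning: class folders in {s}/ differ from train/")
    return summary
```

For example, `check_cls_layout('./data/Flower_Dataset')` returns the per-class image counts for each split it finds. It raises an error if there is no usable split pair.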

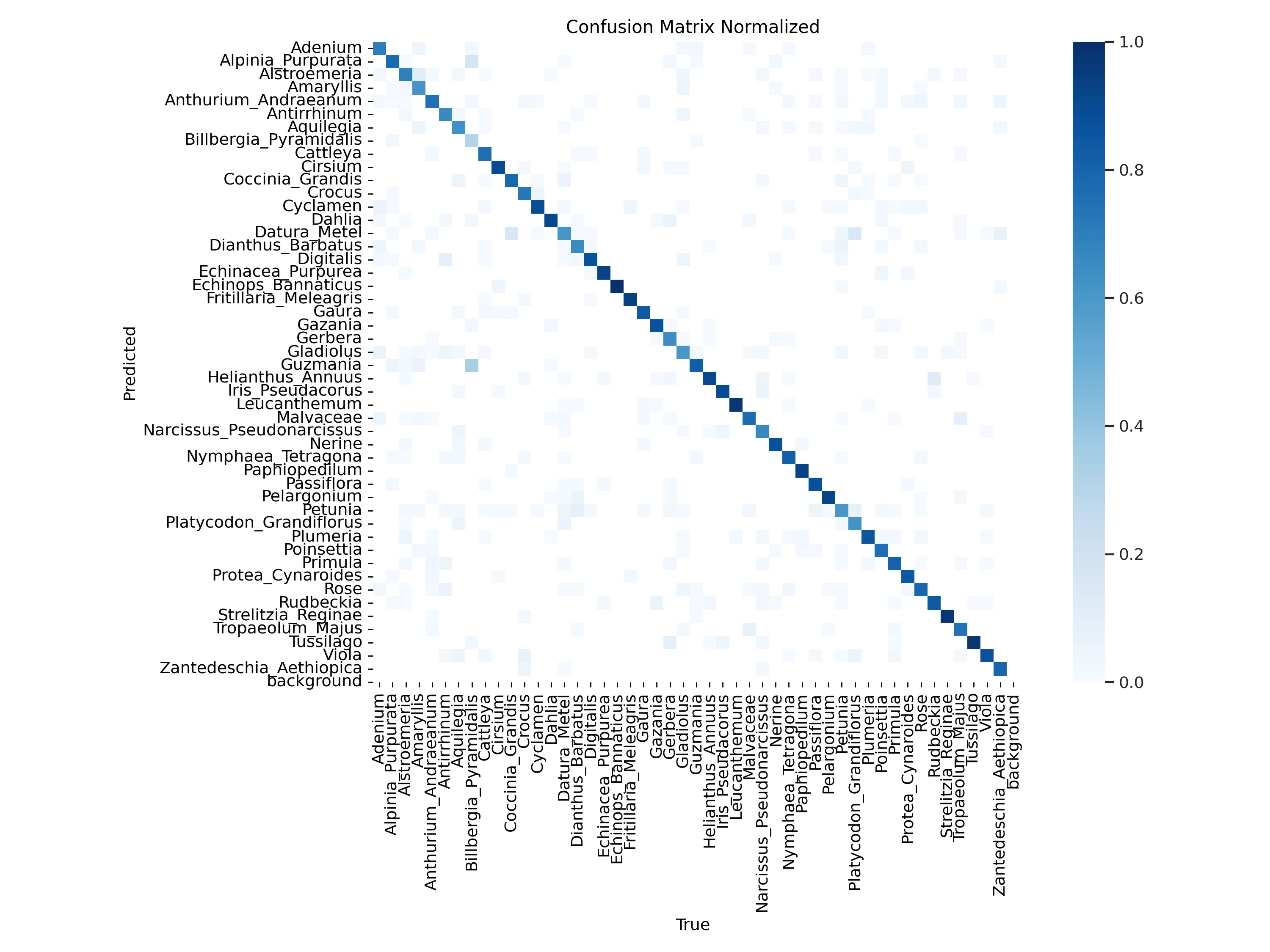

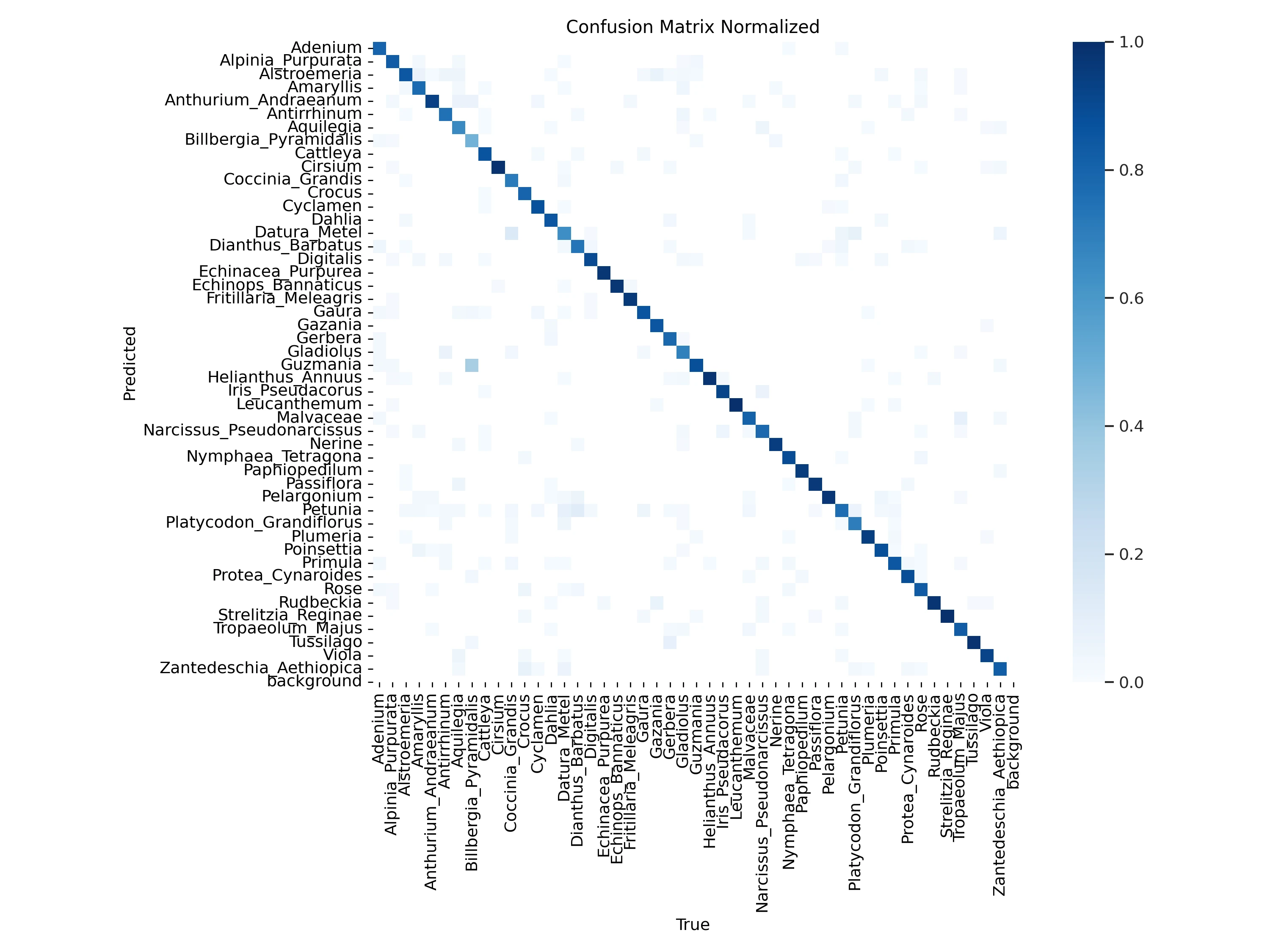

### Model Evaluation

```python
# Load a model
model_n = YOLO('./runs/classify/train_yolov8n/weights/last.pt')  # load a custom model

# Validate the model
metrics_n = model_n.val()  # no arguments needed, dataset and settings remembered
print(metrics_n.top1)  # top1 accuracy: 0.7915857434272766
print(metrics_n.top5)  # top5 accuracy: 0.9543688893318176
```
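`metrics.top1` and `metrics.top5` are top-k accuracies on the validation split. A sample counts as correct when its true class is among the k highest-scoring classes. The sketch below implements that definition in plain Python on toy numbers. It is not the Ultralytics implementation, and `topk_accuracy` is a hypothetical name.

```python
from typing import Sequence


def topk_accuracy(probs: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for scores, label in zip(probs, labels):
        # Class indices ordered from most to least likely.
        ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
        hits += label in ranked[:k]
    return hits / len(labels)


# Three samples, four classes (toy numbers, not taken from the flower dataset).
probs = [
    [0.70, 0.20, 0.05, 0.05],  # true class 0 is ranked 1st
    [0.10, 0.30, 0.50, 0.10],  # true class 1 is ranked 2nd
    [0.40, 0.30, 0.20, 0.10],  # true class 3 is ranked 4th
]
labels = [0, 1, 3]
print(topk_accuracy(probs, labels, k=1))  # 0.3333333333333333
print(topk_accuracy(probs, labels, k=2))  # 0.6666666666666666
```

Top-5 can never be lower than top-1. This is why every model in the Training Results table scores noticeably higher on `top5_acc`.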

### Model Predictions

```python
# Predict with the model
results_n = model_n('./assets/snapshots/Viola_Tricolor.jpg')
# image 1/1 /opt/app/assets/snapshots/Viola_Tricolor.jpg: 64x64 Viola 1.00, Aquilegia 0.00, Malvaceae 0.00, Helianthus_Annuus 0.00, Plumeria 0.00, 3.6ms
# Speed: 0.6ms preprocess, 3.6ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)

results_n = model_n('./assets/snapshots/Strelitzia.jpg')
# image 1/1 /opt/app/assets/snapshots/Strelitzia.jpg: 64x64 Strelitzia_Reginae 1.00, Alstroemeria 0.00, Guzmania 0.00, Rose 0.00, Anthurium_Andraeanum 0.00, 2.5ms
# Speed: 7.1ms preprocess, 2.5ms inference, 0.0ms postprocess per image at shape (1, 3, 64, 64)

results_n = model_n('./assets/snapshots/Water_Lilly.jpg')
# image 1/1 /opt/app/assets/snapshots/Water_Lilly.jpg: 64x64 Nymphaea_Tetragona 0.78, Dahlia 0.20, Rose 0.01, Alstroemeria 0.01, Antirrhinum 0.00, 3.1ms
# Speed: 6.6ms preprocess, 2.1ms inference, 0.0ms postprocess per image at shape (1, 3, 64, 64)
```
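Each prediction log line lists the five most likely classes with their probabilities. The classification head produces one score per class and turns them into probabilities with a softmax; in Ultralytics the `Classify` head applies the softmax itself at inference time. As a result, the values across all classes add up to 1, which is why a confident prediction shows 1.00 followed by 0.00s. The sketch below reproduces that last step in plain Python. It uses made-up scores rather than real model output, and `top5_summary` is a hypothetical helper.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw class scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top5_summary(names, probs):
    """Format the five most likely classes as 'name prob, ...' like the log lines."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:5]
    return ", ".join(f"{names[i]} {probs[i]:.2f}" for i in order)


# Hypothetical scores for six classes; the sixth class drops out of the top 5.
names = ["Viola", "Rose", "Dahlia", "Primula", "Crocus", "Plumeria"]
logits = [6.0, 1.0, 0.5, 0.2, 0.1, 0.0]
probs = softmax(logits)
summary = top5_summary(names, probs)
print(summary)
```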

### Model Export


```python
model_n.export(format='onnx')
model_n.export(format='engine')
```
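In the Training Results table, each ONNX file is roughly twice the size of its `.pt` checkpoint. One plausible explanation, stated here as an assumption: Ultralytics stores checkpoint weights in FP16 (2 bytes per parameter), while the default ONNX export is FP32 (4 bytes). Under that assumption, both files should imply roughly the same parameter count. The arithmetic below checks this, treating 1 MB as 10^6 bytes and ignoring metadata overhead.

```python
# Weight-file sizes (MB) copied from the Training Results table.
SIZES_MB = {
    "YOLOv8n": (2.935699, 5.731391),  # (PyTorch .pt, ONNX)
    "YOLOv8s": (9.890900, 19.618174),
    "YOLOv8x": (107.382278, 214.371352),
}


def implied_params_m(size_mb: float, bytes_per_param: int) -> float:
    """Millions of parameters implied by a file size (1 MB = 1e6 bytes)."""
    return size_mb / bytes_per_param


for model, (pt_mb, onnx_mb) in SIZES_MB.items():
    fp16 = implied_params_m(pt_mb, 2)    # assumption: .pt holds FP16 weights
    fp32 = implied_params_m(onnx_mb, 4)  # assumption: ONNX export is FP32
    print(f"{model}: ~{fp16:.2f}M params (from .pt) vs ~{fp32:.2f}M (from ONNX)")
```

The two estimates agree to within a few percent for every model, which is consistent with the FP16/FP32 explanation. It does not prove it.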

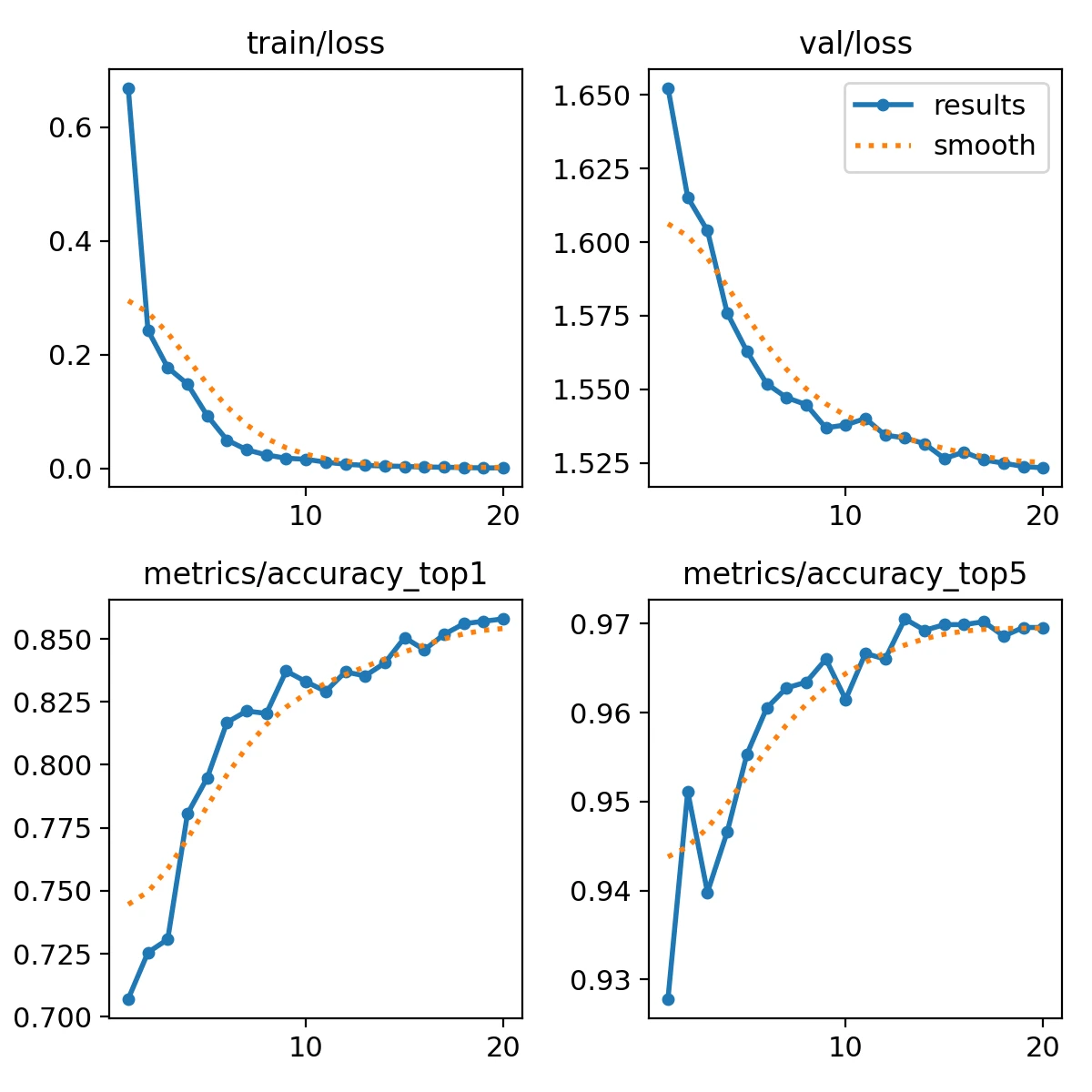

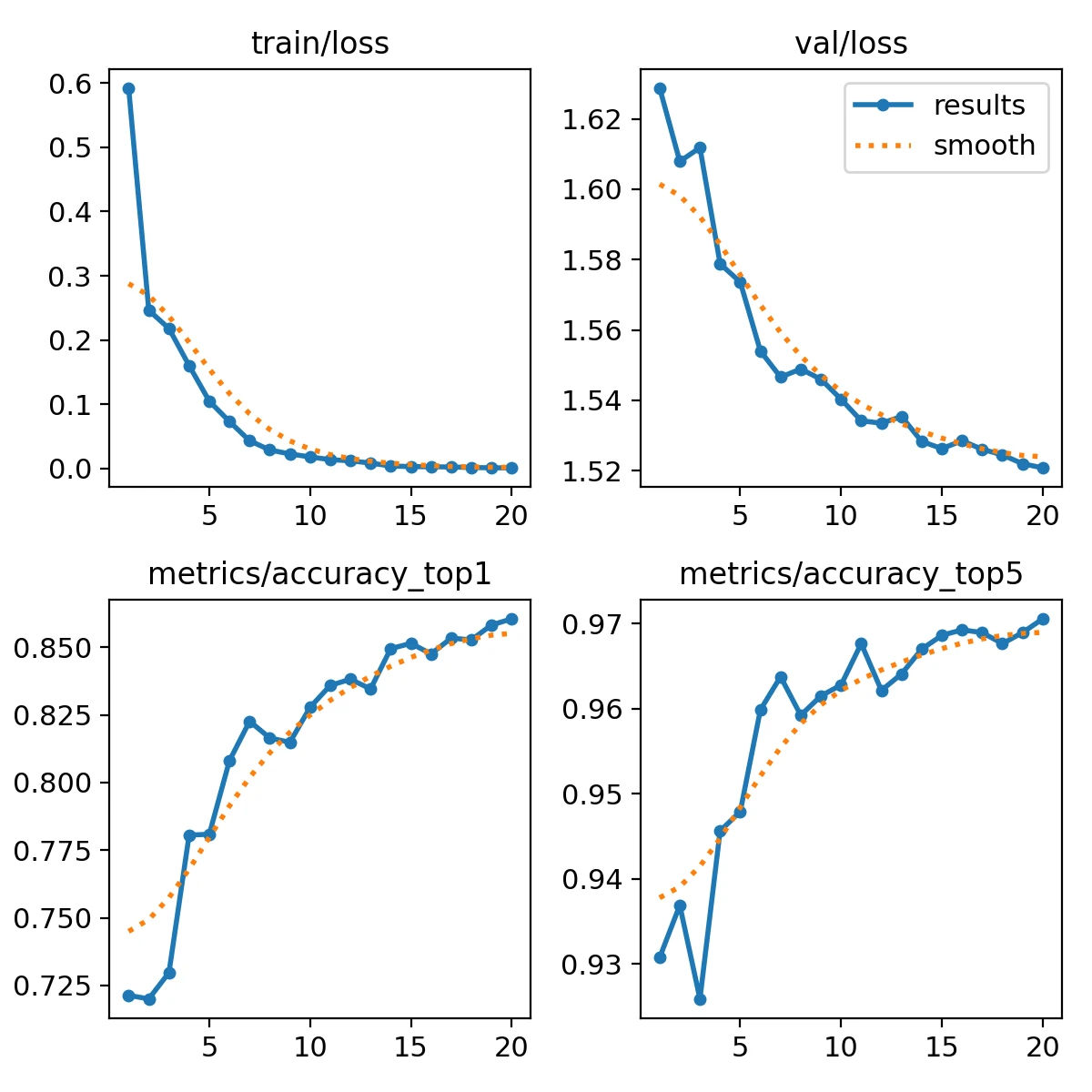

## YOLOv8s

```python
model = YOLO('yolov8s-cls.pt')
results = model.train(data='./data/Flower_Dataset', epochs=20, imgsz=64)
```

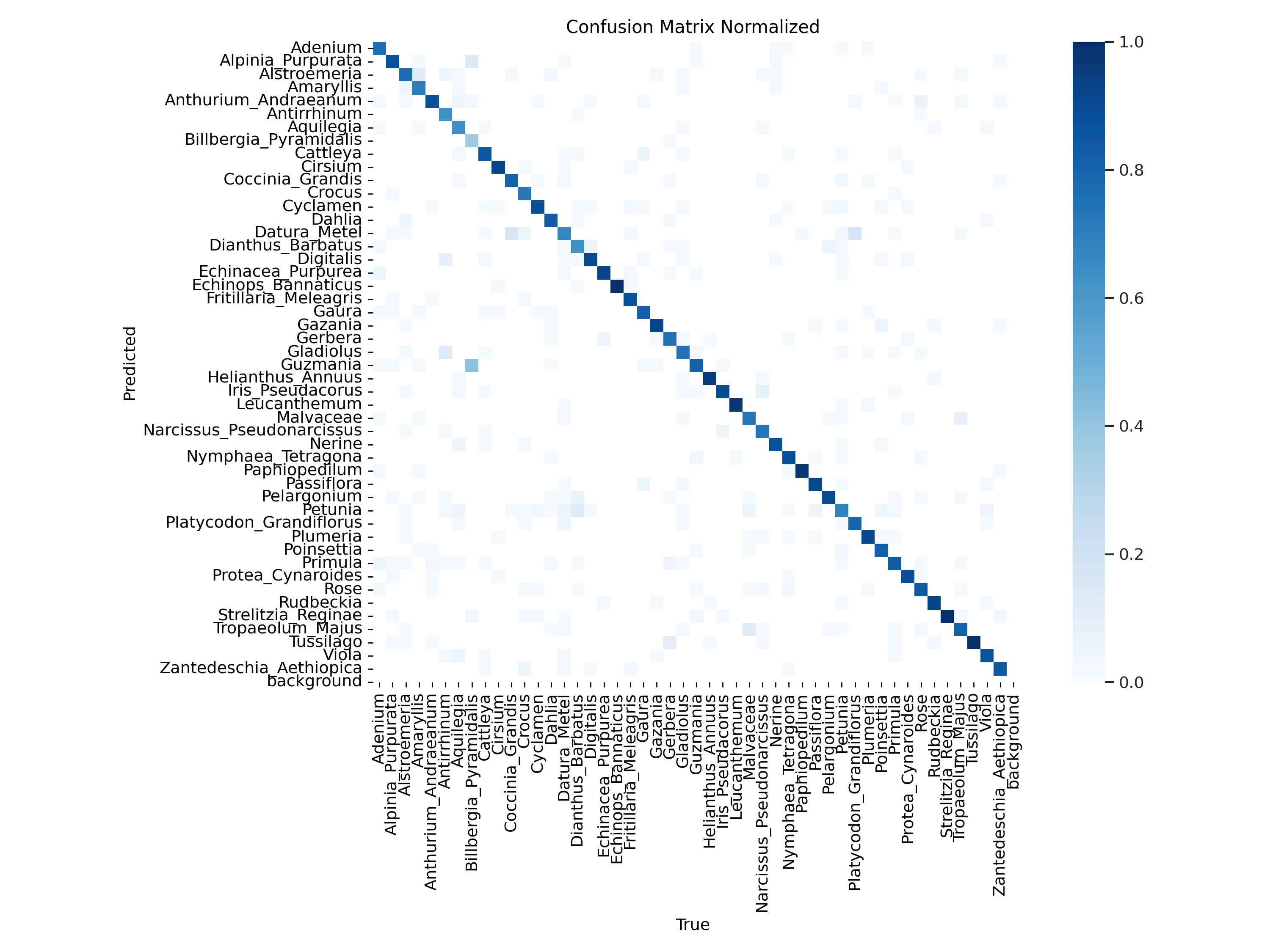

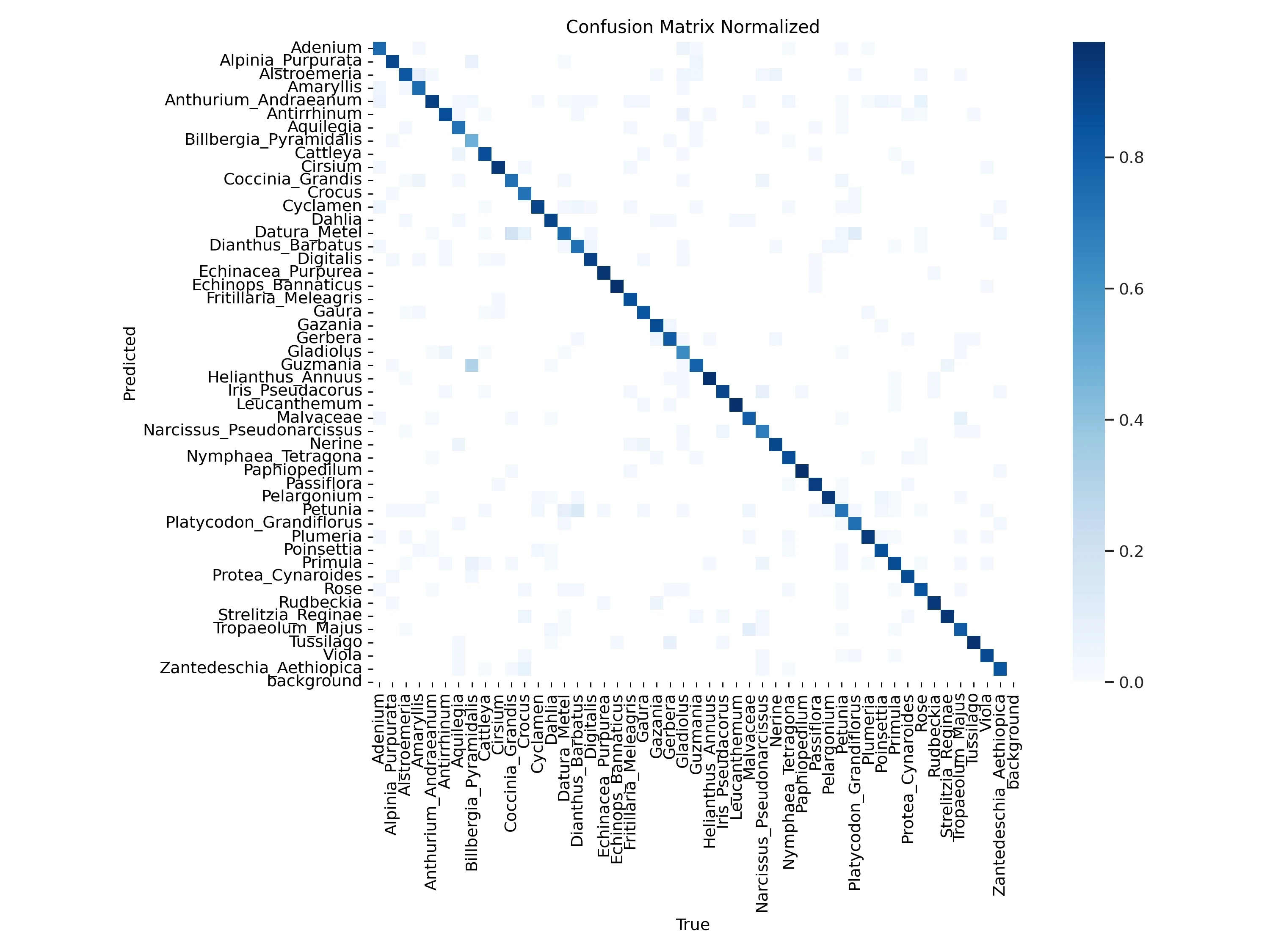

### Model Evaluation

```python
# Load a model
model_s = YOLO('./runs/classify/train_yolov8s/weights/last.pt')  # load a custom model

# Validate the model
metrics_s = model_s.val()  # no arguments needed, dataset and settings remembered
print(metrics_s.top1)  # top1 accuracy: 0.8323624134063721
print(metrics_s.top5)  # top5 accuracy: 0.9650484919548035
```

### Model Predictions

```python
# Predict with the model
results_s = model_s('./assets/snapshots/Viola_Tricolor.jpg')
# image 1/1 /opt/app/assets/snapshots/Viola_Tricolor.jpg: 64x64 Viola 1.00, Primula 0.00, Malvaceae 0.00, Plumeria 0.00, Datura_Metel 0.00, 2.7ms
# Speed: 0.5ms preprocess, 2.7ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)

results_s = model_s('./assets/snapshots/Strelitzia.jpg')
# image 1/1 /opt/app/assets/snapshots/Strelitzia.jpg: 64x64 Strelitzia_Reginae 1.00, Helianthus_Annuus 0.00, Nymphaea_Tetragona 0.00, Plumeria 0.00, Crocus 0.00, 7.6ms
# Speed: 15.1ms preprocess, 7.6ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)

results_s = model_s('./assets/snapshots/Water_Lilly.jpg')
# image 1/1 /opt/app/assets/snapshots/Water_Lilly.jpg: 64x64 Nymphaea_Tetragona 0.99, Alstroemeria 0.01, Passiflora 0.00, Billbergia_Pyramidalis 0.00, Protea_Cynaroides 0.00, 3.2ms
# Speed: 0.4ms preprocess, 3.2ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)
```

### Model Export

```python
model_s.export(format='onnx')
model_s.export(format='engine')
```

## YOLOv8m

```python
model = YOLO('yolov8m-cls.pt')
results = model.train(data='./data/Flower_Dataset', epochs=20, imgsz=64)
```

### Model Evaluation

```python
# Load a model
model_m = YOLO('./runs/classify/train_yolov8m/weights/last.pt')  # load a custom model

# Validate the model
metrics_m = model_m.val()  # no arguments needed, dataset and settings remembered
print(metrics_m.top1)  # top1 accuracy: 0.8579287528991699
print(metrics_m.top5)  # top5 accuracy: 0.9692556262016296
```

### Model Predictions

```python
# Predict with the model
results_m = model_m('./assets/snapshots/Viola_Tricolor.jpg')
# image 1/1 /opt/app/assets/snapshots/Viola_Tricolor.jpg: 64x64 Viola 1.00, Iris_Pseudacorus 0.00, Primula 0.00, Cattleya 0.00, Helianthus_Annuus 0.00, 4.0ms
# Speed: 0.4ms preprocess, 4.0ms inference, 0.0ms postprocess per image at shape (1, 3, 64, 64)

results_m = model_m('./assets/snapshots/Strelitzia.jpg')
# image 1/1 /opt/app/assets/snapshots/Strelitzia.jpg: 64x64 Strelitzia_Reginae 1.00, Alpinia_Purpurata 0.00, Zantedeschia_Aethiopica 0.00, Helianthus_Annuus 0.00, Plumeria 0.00, 6.0ms
# Speed: 0.5ms preprocess, 6.0ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)

results_m = model_m('./assets/snapshots/Water_Lilly.jpg')
# image 1/1 /opt/app/assets/snapshots/Water_Lilly.jpg: 64x64 Nymphaea_Tetragona 1.00, Dahlia 0.00, Alstroemeria 0.00, Passiflora 0.00, Guzmania 0.00, 5.0ms
# Speed: 0.5ms preprocess, 5.0ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)
```

### Model Export

```python
model_m.export(format='onnx')
model_m.export(format='engine')
```

## YOLOv8l

```python
model = YOLO('yolov8l-cls.pt')
results = model.train(data='./data/Flower_Dataset', epochs=20, imgsz=64)
```

### Model Evaluation

```python
# Load a model
model_l = YOLO('./runs/classify/train_yolov8l/weights/last.pt')  # load a custom model

# Validate the model
metrics_l = model_l.val()  # no arguments needed, dataset and settings remembered
print(metrics_l.top1)  # top1 accuracy: 0.849838137626648
print(metrics_l.top5)  # top5 accuracy: 0.9731391072273254
```

### Model Predictions

```python
# Predict with the model
results_l = model_l('./assets/snapshots/Viola_Tricolor.jpg')
# image 1/1 /opt/app/assets/snapshots/Viola_Tricolor.jpg: 64x64 Viola 1.00, Malvaceae 0.00, Iris_Pseudacorus 0.00, Primula 0.00, Paphiopedilum 0.00, 6.3ms
# Speed: 0.5ms preprocess, 6.3ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)

results_l = model_l('./assets/snapshots/Strelitzia.jpg')
# image 1/1 /opt/app/assets/snapshots/Strelitzia.jpg: 64x64 Strelitzia_Reginae 1.00, Anthurium_Andraeanum 0.00, Tropaeolum_Majus 0.00, Alstroemeria 0.00, Zantedeschia_Aethiopica 0.00, 8.0ms
# Speed: 0.6ms preprocess, 8.0ms inference, 0.0ms postprocess per image at shape (1, 3, 64, 64)

results_l = model_l('./assets/snapshots/Water_Lilly.jpg')
# image 1/1 /opt/app/assets/snapshots/Water_Lilly.jpg: 64x64 Nymphaea_Tetragona 1.00, Rose 0.00, Primula 0.00, Dahlia 0.00, Alstroemeria 0.00, 7.6ms
# Speed: 0.4ms preprocess, 7.6ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)
```

### Model Export

```python
model_l.export(format='onnx')
model_l.export(format='engine')
```

## YOLOv8x

```python
model = YOLO('yolov8x-cls.pt')
results = model.train(data='./data/Flower_Dataset', epochs=20, imgsz=64)
```
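The five training cells differ only in the checkpoint name. The run folders used for evaluation (`train_yolov8n` through `train_yolov8x`) are not Ultralytics defaults, which number runs `train`, `train2`, and so on. They were presumably set with the `name=` training argument or renamed afterwards. The sketch below loops over the sizes and sets `name=` explicitly. `run_plan` and `train_all` are hypothetical helpers, and only `run_plan` runs without the `ultralytics` package installed.

```python
MODEL_SIZES = "nsmlx"
DATA = "./data/Flower_Dataset"


def run_plan(sizes=MODEL_SIZES):
    """(pretrained checkpoint, run name) pairs for each YOLOv8 classification size."""
    return [(f"yolov8{s}-cls.pt", f"train_yolov8{s}") for s in sizes]


def train_all(plan, epochs=20, imgsz=64):
    """Fine-tune each checkpoint in the plan; requires the `ultralytics` package."""
    from ultralytics import YOLO  # imported lazily so run_plan() works without it

    for weights, run_name in plan:
        model = YOLO(weights)
        # name= sets the run folder, e.g. runs/classify/train_yolov8n/
        model.train(data=DATA, epochs=epochs, imgsz=imgsz, name=run_name)
```

`train_all(run_plan())` would then replace the five separate training cells. Evaluation can be looped the same way by loading `./runs/classify/{run_name}/weights/last.pt` for each run name.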

### Model Evaluation

```python
# Load a model
model_x = YOLO('./runs/classify/train_yolov8x/weights/last.pt')  # load a custom model

# Validate the model
metrics_x = model_x.val()  # no arguments needed, dataset and settings remembered
print(metrics_x.top1)  # top1 accuracy: 0.8605177402496338
print(metrics_x.top5)  # top5 accuracy: 0.9708737730979919
```

### Model Predictions

```python
# Predict with the model
results_x = model_x('./assets/snapshots/Viola_Tricolor.jpg')
# image 1/1 /opt/app/assets/snapshots/Viola_Tricolor.jpg: 64x64 Viola 1.00, Dahlia 0.00, Tropaeolum_Majus 0.00, Rose 0.00, Primula 0.00, 10.1ms
# Speed: 0.5ms preprocess, 10.1ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)

results_x = model_x('./assets/snapshots/Strelitzia.jpg')
# image 1/1 /opt/app/assets/snapshots/Strelitzia.jpg: 64x64 Strelitzia_Reginae 1.00, Tropaeolum_Majus 0.00, Alstroemeria 0.00, Rose 0.00, Helianthus_Annuus 0.00, 24.0ms
# Speed: 5.5ms preprocess, 24.0ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)

results_x = model_x('./assets/snapshots/Water_Lilly.jpg')
# image 1/1 /opt/app/assets/snapshots/Water_Lilly.jpg: 64x64 Nymphaea_Tetragona 0.99, Rose 0.00, Alstroemeria 0.00, Primula 0.00, Protea_Cynaroides 0.00, 9.1ms
# Speed: 0.5ms preprocess, 9.1ms inference, 0.1ms postprocess per image at shape (1, 3, 64, 64)
```

### Model Export

```python
model_x.export(format='onnx')
model_x.export(format='engine')
```