See also:

- Fun, fun, tensors: Tensor Constants, Variables and Attributes, Tensor Indexing, Expanding and Manipulations, Matrix multiplications, Squeeze, One-hot and Numpy

- Tensorflow 2 - Neural Network Regression: Building a Regression Model, Model Evaluation, Model Optimization, Working with a "Real" Dataset, Feature Scaling

- Tensorflow 2 - Neural Network Classification: Non-linear Data and Activation Functions, Model Evaluation and Performance Improvement, Multiclass Classification Problems

- Tensorflow 2 - Convolutional Neural Networks: Binary Image Classification, Multiclass Image Classification

- Tensorflow 2 - Transfer Learning: Feature Extraction, Fine-Tuning, Scaling

- Tensorflow 2 - Unsupervised Learning: Autoencoder Feature Detection, Autoencoder Super-Resolution, Generative Adversarial Networks

Tensorflow Convolutional Neural Networks

Multiclass Image Classification

- cd datasets

- wget https://storage.googleapis.com/ztm_tf_course/food_vision/10_food_classes_all_data.zip

- unzip 10_food_classes_all_data.zip && rm 10_food_classes_all_data.zip

10_food_classes_all_data
├── test
│   ├── chicken_curry
│   ├── chicken_wings
│   ├── fried_rice
│   ├── grilled_salmon
│   ├── hamburger
│   ├── ice_cream
│   ├── pizza
│   ├── ramen
│   ├── steak
│   └── sushi
└── train
    ├── chicken_curry
    ├── chicken_wings
    ├── fried_rice
    ├── grilled_salmon
    ├── hamburger
    ├── ice_cream
    ├── pizza
    ├── ramen
    ├── steak
    └── sushi

23 directories, 0 files

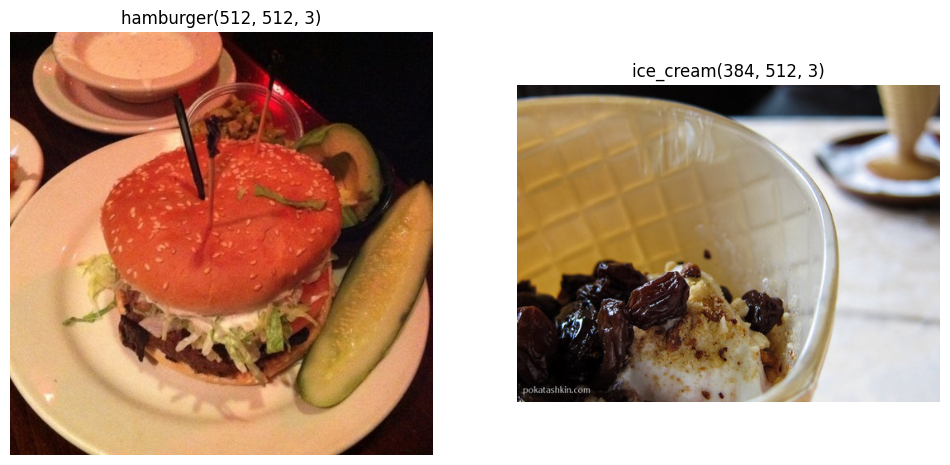

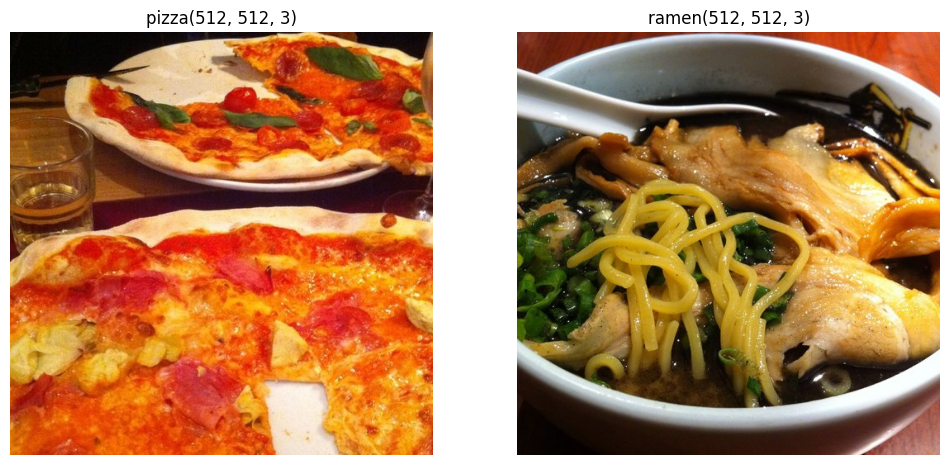

Visualizing the Data

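# imports assumed by the code in this section
import os
import pathlib
import random

import matplotlib.image as mpimg
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import tensorflow as tf
from tensorflow.keras import Sequential
from tensorflow.keras.layers import (Conv2D, Dense, Flatten, MaxPool2D,
                                     RandomFlip, RandomRotation, Rescaling)
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.utils import image_dataset_from_directory

# set directories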

training_directory = "../datasets/10_food_classes_all_data/train/"

testing_directory = "../datasets/10_food_classes_all_data/test/"

# get class names

data_dir = pathlib.Path(training_directory)

class_names = np.array(sorted([item.name for item in data_dir.glob('*')]))

len(class_names), class_names

# the data set has 10 classes:

# (10,

# array(['chicken_curry', 'chicken_wings', 'fried_rice', 'grilled_salmon',

# 'hamburger', 'ice_cream', 'pizza', 'ramen', 'steak', 'sushi'],

# dtype='<U14'))
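Before training, it can help to confirm that the classes are roughly balanced. The following is a minimal sketch and not part of the original notebook: `count_files_per_class` is a hypothetical helper that counts the files in each class sub-directory, assuming the `train/<class>/<image>` layout shown above.

```python
import pathlib


def count_files_per_class(root):
    """Return {class_name: number_of_files} for each sub-directory of root."""
    root = pathlib.Path(root)
    counts = {}
    for class_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        counts[class_dir.name] = sum(1 for f in class_dir.iterdir() if f.is_file())
    return counts


# path taken from the section above; the check is skipped if it does not exist
train_root = pathlib.Path("../datasets/10_food_classes_all_data/train/")
if train_root.exists():
    for name, n in count_files_per_class(train_root).items():
        print(f"{name:15s} {n}")
```

If one class turned out to have far fewer images than the others, plain accuracy would become a less reliable metric.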

# visualizing the dataset

## display random images

def view_random_image(target_dir, target_class):
    target_folder = str(target_dir) + "/" + target_class
    random_image = random.sample(os.listdir(target_folder), 1)
    img = mpimg.imread(target_folder + "/" + random_image[0])
    plt.imshow(img)
    plt.title(str(target_class) + str(img.shape))
    plt.axis("off")
    return tf.constant(img)

# show one random image for each of the first eight classes, two per figure
for first in range(0, 8, 2):
    fig = plt.figure(figsize=(12, 6))
    for offset in range(2):
        fig.add_subplot(1, 2, offset + 1)
        # view_random_image sets the subplot title to class name + image shape
        view_random_image(target_dir=training_directory,
                          target_class=class_names[first + offset])

Preprocessing the Data

seed = 42

batch_size = 32

img_height = 224

img_width = 224

tf.random.set_seed(seed)

training_data_multi = image_dataset_from_directory(training_directory,
                                                   labels='inferred',
                                                   label_mode='categorical',
                                                   seed=seed,
                                                   shuffle=True,
                                                   image_size=(img_height, img_width),
                                                   batch_size=batch_size)

testing_data_multi = image_dataset_from_directory(testing_directory,
                                                  labels='inferred',
                                                  label_mode='categorical',
                                                  seed=seed,
                                                  shuffle=True,
                                                  image_size=(img_height, img_width),
                                                  batch_size=batch_size)

# Found 7500 files belonging to 10 classes.

# Found 2500 files belonging to 10 classes.
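The two "Found ..." lines also determine how many batches each epoch iterates over. As a quick check of the arithmetic (a sketch; `batches_per_epoch` is a hypothetical helper name): the final, partially filled batch is kept, so the batch count is the file count divided by `batch_size`, rounded up.

```python
import math


def batches_per_epoch(num_files, batch_size):
    """Number of batches when the last, smaller batch is kept."""
    return math.ceil(num_files / batch_size)


train_batches = batches_per_epoch(7500, 32)
test_batches = batches_per_epoch(2500, 32)
print(train_batches, test_batches)  # 235 79
```

The 235 training batches match the `235/235` step counter in the training logs further down.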

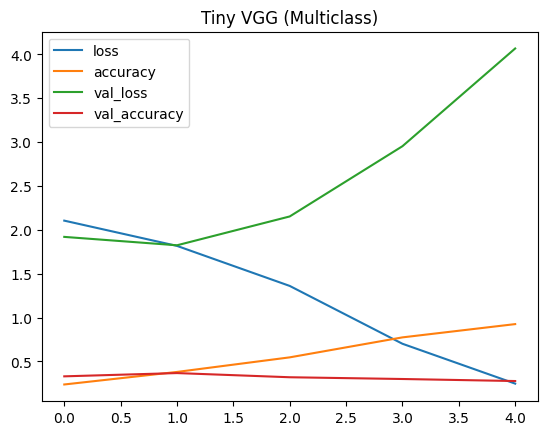

Building the Model

tf.random.set_seed(seed)

# building the model based on the tiny vgg architecture

vgg_model_multiclass = Sequential([
    Rescaling(1./255),
    Conv2D(filters=10,
           kernel_size=3,
           activation="relu",
           input_shape=(img_height, img_width, 3)),
    Conv2D(10, 3, activation="relu"),
    MaxPool2D(pool_size=2, padding="valid"),
    Conv2D(10, 3, activation="relu"),
    Conv2D(10, 3, activation="relu"),
    MaxPool2D(2, padding="valid"),
    Flatten(),
    Dense(len(class_names), activation="softmax")
])

# compile the model
vgg_model_multiclass.compile(loss="categorical_crossentropy",
                             optimizer=Adam(learning_rate=1e-3),
                             metrics=["accuracy"])

# fitting the model
history_vgg_model_multiclass = vgg_model_multiclass.fit(
    training_data_multi, epochs=5,
    steps_per_epoch=len(training_data_multi),
    validation_data=testing_data_multi,
    validation_steps=len(testing_data_multi))

# Epoch 5/5

# 235/235 [==============================] - 12s 52ms/step - loss: 0.2465 - accuracy: 0.9251 - val_loss: 4.0673 - val_accuracy: 0.2760
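To get a feeling for where the capacity of this Tiny VGG model sits, the layer shapes and parameter counts can be worked out by hand. The sketch below only reproduces that arithmetic in plain Python for the architecture defined above (3x3 unpadded convolutions, 2x2 pooling, 10 filters, 224x224x3 input); `conv_out` and `pool_out` are hypothetical helper names, and `model.summary()` remains the authoritative source.

```python
def conv_out(size, kernel=3, stride=1):
    """Output size of an unpadded ('valid') convolution along one dimension."""
    return (size - kernel) // stride + 1


def pool_out(size, pool=2):
    """Output size of a 'valid' max-pool whose stride equals the pool size."""
    return size // pool


size, channels, total_params = 224, 3, 0
filters, kernel, num_classes = 10, 3, 10

for _block in range(2):          # two conv-conv-pool blocks
    for _conv in range(2):
        # kernel weights plus one bias per filter
        total_params += kernel * kernel * channels * filters + filters
        size, channels = conv_out(size, kernel), filters
    size = pool_out(size)

flat = size * size * channels                       # length of the Flatten output
dense_params = flat * num_classes + num_classes     # weights plus biases
total_params += dense_params

print(size, flat, total_params)  # 53 28090 283920
```

Roughly 99% of the parameters sit in the final dense layer (280,910 of 283,920), which is a lot of capacity for a training set of 7,500 images.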

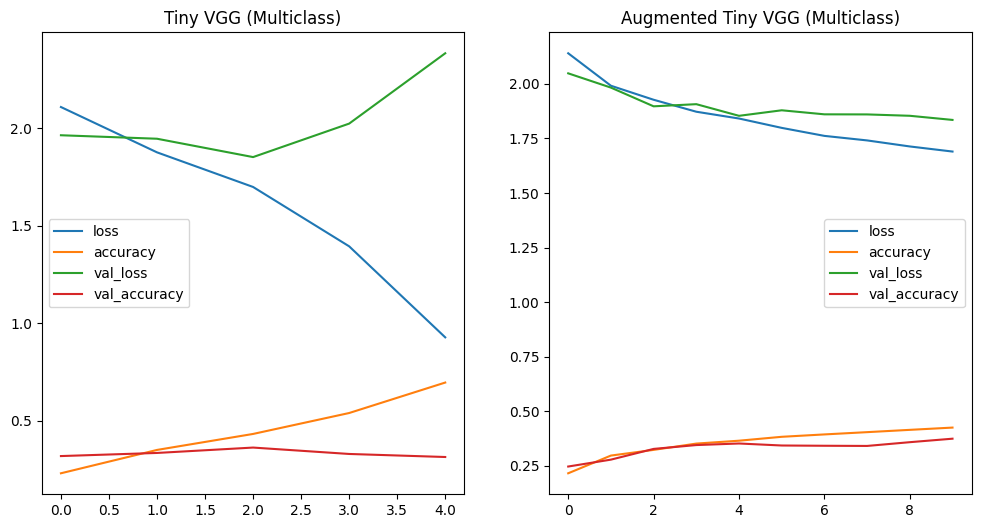

pd.DataFrame(history_vgg_model_multiclass.history).plot(title="Tiny VGG (Multiclass)")

# The training loss and accuracy are getting close to being perfect

# while the validation loss is running away => overfitting

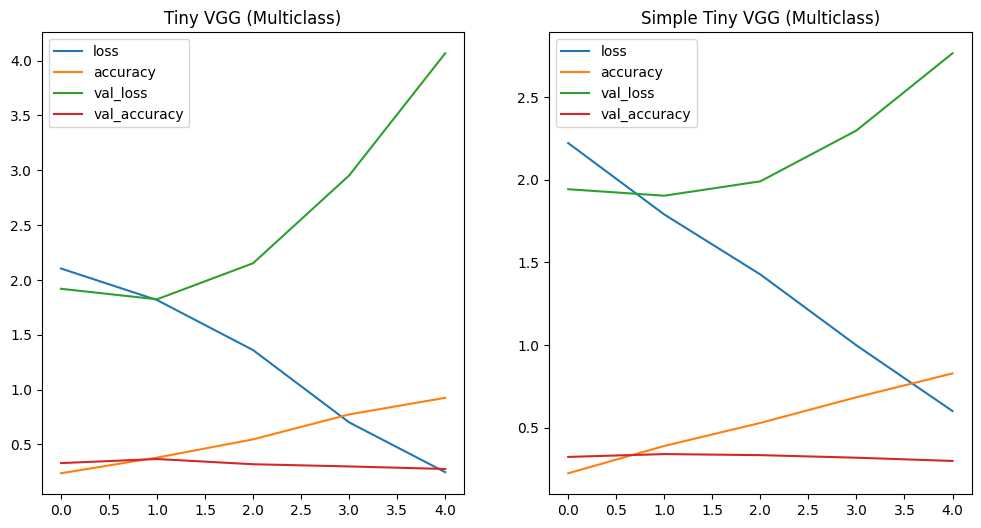
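The overfitting can also be expressed as a number: the gap between the training and the validation metric. A small sketch, assuming a dictionary shaped like the Keras `history.history` attribute; `generalization_gap` is a hypothetical helper, and only the final epoch reported above is filled in.

```python
def generalization_gap(history, metric="accuracy"):
    """Per-epoch difference between the training and the validation metric."""
    train = history[metric]
    val = history["val_" + metric]
    return [round(t - v, 4) for t, v in zip(train, val)]


# values from the "Epoch 5/5" log line above
final_epoch = {"accuracy": [0.9251], "val_accuracy": [0.2760]}
print(generalization_gap(final_epoch))  # [0.6491]
```

A gap of about 65 percentage points means the model has mostly memorized the training images.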

Reduce Overfitting

tf.random.set_seed(seed)

# reduce the complexity of our model to minimize the overfit

vgg_model_multiclass_simplified = Sequential([
    Rescaling(1./255),
    Conv2D(filters=10,
           kernel_size=3,
           activation="relu",
           input_shape=(img_height, img_width, 3)),
    MaxPool2D(pool_size=2, padding="valid"),
    Conv2D(10, 3, activation="relu"),
    MaxPool2D(2, padding="valid"),
    Flatten(),
    Dense(len(class_names), activation="softmax")
])

# compile the model
vgg_model_multiclass_simplified.compile(loss="categorical_crossentropy",
                                        optimizer=Adam(learning_rate=1e-3),
                                        metrics=["accuracy"])

# fitting the model
history_vgg_model_multiclass_simplified = vgg_model_multiclass_simplified.fit(
    training_data_multi, epochs=5,
    steps_per_epoch=len(training_data_multi),
    validation_data=testing_data_multi,
    validation_steps=len(testing_data_multi))

# Epoch 5/5

# 235/235 [==============================] - 12s 52ms/step - loss: 0.2465 - accuracy: 0.9251 - val_loss: 4.0673 - val_accuracy: 0.2760

fig, axes = plt.subplots(nrows=1, ncols=2, figsize=(12, 6))

pd.DataFrame(history_vgg_model_multiclass.history).plot(ax=axes[0], title="Tiny VGG (Multiclass)")

pd.DataFrame(history_vgg_model_multiclass_simplified.history).plot(ax=axes[1], title="Simple Tiny VGG (Multiclass)")

# that did not help at all...

tf.random.set_seed(seed)

# adding data augmentations

# I experimented a little bit with this
# things can go horribly wrong if you
# add too much :)
data_augmentation = Sequential([
    RandomFlip("horizontal_and_vertical"),
    RandomRotation(0.2),
    # RandomZoom(0.1),
    # RandomContrast(0.2),
    # RandomBrightness(0.2)
])
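To make the two active augmentations more concrete, here is a toy illustration in plain Python (a sketch, not the Keras implementation): a flip mirrors the pixel grid, and the `RandomRotation` factor is a fraction of a full turn, so `0.2` allows rotations of up to 0.2 * 360 = 72 degrees in either direction. The nested list standing in for an image is made up for the example.

```python
import math

# toy 2x3 single-channel "image"
img = [[0, 1, 2],
       [3, 4, 5]]

flipped_horizontal = [row[::-1] for row in img]   # mirror left-right
flipped_vertical = img[::-1]                      # mirror top-bottom

factor = 0.2                                      # value passed to RandomRotation
max_angle_deg = math.degrees(factor * 2 * math.pi)

print(flipped_horizontal)    # [[2, 1, 0], [5, 4, 3]]
print(flipped_vertical)      # [[3, 4, 5], [0, 1, 2]]
print(round(max_angle_deg))  # 72
```

The Keras augmentation layers are only active during training; at prediction time the images pass through unchanged.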

# building the model

vgg_model_multiclass_augmentation = Sequential([
    data_augmentation,
    Rescaling(1./255, input_shape=(img_height, img_width, 3)),
    Conv2D(16, 3, activation="relu"),
    Conv2D(10, 3, activation="relu"),
    MaxPool2D(pool_size=2, padding="same"),
    Conv2D(16, 3, activation="relu"),
    Conv2D(10, 3, activation="relu"),
    MaxPool2D(2, padding="same"),
    Flatten(),
    Dense(len(class_names), activation="softmax")
])

# compile the model
vgg_model_multiclass_augmentation.compile(loss="categorical_crossentropy",
                                          optimizer=Adam(learning_rate=1e-3),
                                          metrics=["accuracy"])

# fitting the model (10 epochs for the first run, see the log below)
history_vgg_model_multiclass_augmentation = vgg_model_multiclass_augmentation.fit(
    training_data_multi, epochs=10,
    steps_per_epoch=len(training_data_multi),
    validation_data=testing_data_multi,
    validation_steps=len(testing_data_multi))

# Epoch 10/10

# 235/235 [==============================] - 23s 99ms/step - loss: 1.6899 - accuracy: 0.4245 - val_loss: 1.8349 - val_accuracy: 0.3736

fig, axes = plt.subplots(nrows=1, ncols=2, figsize=(12, 6))

pd.DataFrame(history_vgg_model_multiclass.history).plot(ax=axes[0], title="Tiny VGG (Multiclass)")

pd.DataFrame(history_vgg_model_multiclass_augmentation.history).plot(ax=axes[1], title="Augmented Tiny VGG (Multiclass)")

# this already looks a lot better - but the improvement is very slow...

# try adding a few more epochs to get those curves closer

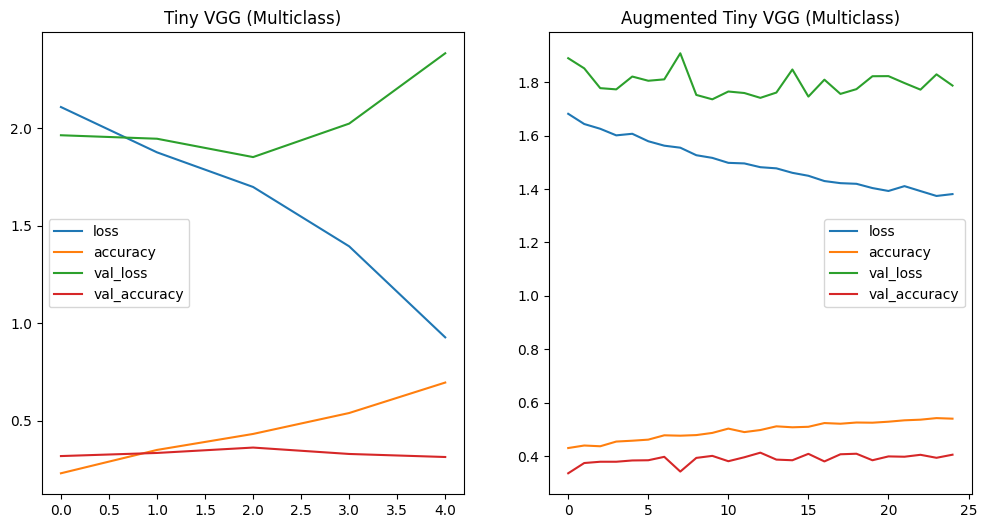

history_vgg_model_multiclass_augmentation = vgg_model_multiclass_augmentation.fit(
    training_data_multi, epochs=25,
    steps_per_epoch=len(training_data_multi),
    validation_data=testing_data_multi,
    validation_steps=len(testing_data_multi))

# as expected - running the training for longer - slowly - improves the accuracy

# as well as validation accuracy for the model. The validation loss, however, remains

# stubbornly high:

# Epoch 25/25

# 235/235 [==============================] - 23s 97ms/step - loss: 1.3810 - accuracy: 0.5397 - val_loss: 1.7875 - val_accuracy: 0.4048

fig, axes = plt.subplots(nrows=1, ncols=2, figsize=(12, 6))

pd.DataFrame(history_vgg_model_multiclass.history).plot(ax=axes[0], title="Tiny VGG (Multiclass)")

pd.DataFrame(history_vgg_model_multiclass_augmentation.history).plot(ax=axes[1], title="Augmented Tiny VGG (Multiclass)")

Making Predictions

img_height = 224

img_width = 224

# helper function to pre-process images for predictions

def prepare_image(file_name, img_height=img_height, img_width=img_width):
    # read in image
    img = tf.io.read_file(file_name)
    # image bytes => tensor
    img = tf.image.decode_image(img)
    # resize to training size
    img = tf.image.resize(img, size=[img_height, img_width])
    # we don't need to normalize the image - this is done by the model itself
    # img = img/255
    # add a dimension in front for batch size => shape=(1, 224, 224, 3)
    img = tf.expand_dims(img, axis=0)
    return img

# adapt plot function for multiclass predictions

def predict_and_plot_multi(model, file_name, class_names):
    # load the image and preprocess
    img = prepare_image(file_name)
    # run prediction
    prediction = model.predict(img)
    # check for multiclass
    if len(prediction[0]) > 1:
        # pick class with highest probability
        pred_class = class_names[tf.argmax(prediction[0])]
    else:
        # binary classifications only return 1 probability value
        pred_class = class_names[int(tf.round(prediction[0][0]))]
    # plot image & prediction
    plt.imshow(mpimg.imread(file_name))
    plt.title(f"Prediction: {pred_class}")
    plt.axis(False)
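The multiclass branch boils down to "take the index of the largest softmax value and look up the class name". The sketch below shows that step in isolation and extends it to the top three candidates, which is often more informative than a single label. The probability vector is made up for illustration and `top_k` is a hypothetical helper, not part of the notebook.

```python
class_names = ['chicken_curry', 'chicken_wings', 'fried_rice', 'grilled_salmon',
               'hamburger', 'ice_cream', 'pizza', 'ramen', 'steak', 'sushi']

# hypothetical softmax output for a single image (values sum to 1)
probs = [0.02, 0.03, 0.05, 0.10, 0.05, 0.05, 0.40, 0.20, 0.05, 0.05]


def top_k(probs, class_names, k=3):
    """Return the k most probable (class_name, probability) pairs."""
    ranked = sorted(zip(class_names, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


best_index = max(range(len(probs)), key=probs.__getitem__)   # plain-Python argmax
print(class_names[best_index])      # pizza
print(top_k(probs, class_names))    # [('pizza', 0.4), ('ramen', 0.2), ('grilled_salmon', 0.1)]
```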

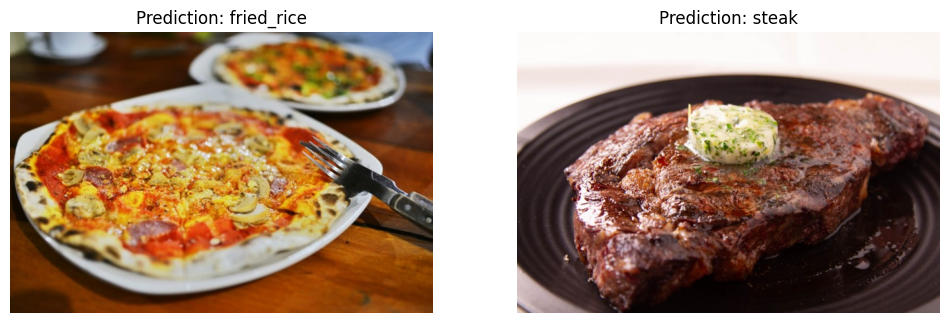

fig = plt.figure(figsize=(12, 6))

plot7 = fig.add_subplot(1, 2, 1)

pizza_image = predict_and_plot_multi(model=vgg_model_multiclass_augmentation, file_name=pizza_path, class_names=class_names)

plot8 = fig.add_subplot(1, 2, 2)

steak_image = predict_and_plot_multi(model=vgg_model_multiclass_augmentation, file_name=steak_path, class_names=class_names)

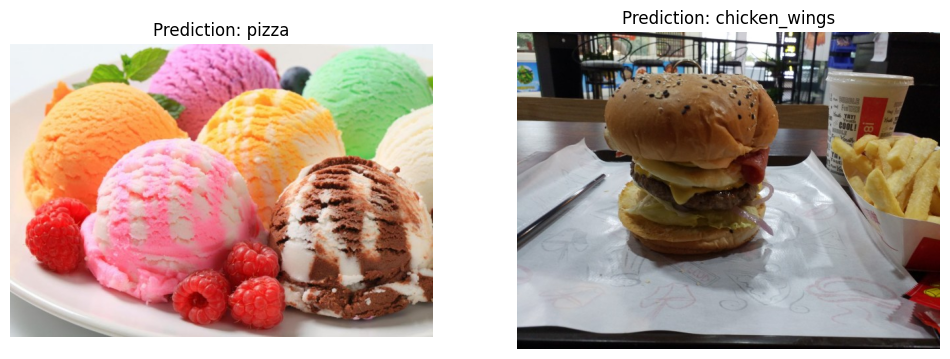

# a few more images to test with

ice_cream_path = "../assets/ice_cream.jpg"

hamburger_path = "../assets/hamburger.jpg"

fig = plt.figure(figsize=(12, 6))

plot7 = fig.add_subplot(1, 2, 1)

pizza_image = predict_and_plot_multi(model=vgg_model_multiclass_augmentation, file_name=ice_cream_path, class_names=class_names)

plot8 = fig.add_subplot(1, 2, 2)

steak_image = predict_and_plot_multi(model=vgg_model_multiclass_augmentation, file_name=hamburger_path, class_names=class_names)

This pretty much sums up the val_accuracy: 0.4048 value I got during training. With 10 classes, a model that guesses at random would reach an accuracy of about 10%, so the training gave us roughly a factor of 4 improvement. But it is far from perfect.
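The chance-level baseline can be written down for both metrics. A short sketch, assuming the ten classes are balanced: random guessing gives an accuracy of 1/10, and a model that outputs a uniform probability for every class has a categorical cross-entropy of ln(10).

```python
import math

num_classes = 10
chance_accuracy = 1 / num_classes
chance_loss = math.log(num_classes)   # cross-entropy of a uniform prediction

# final values of the augmented model from the "Epoch 25/25" log line
val_accuracy = 0.4048
val_loss = 1.7875

improvement = val_accuracy / chance_accuracy
print(round(improvement, 2))   # 4.05
print(round(chance_loss, 4))   # 2.3026
```

The augmented model's validation loss of 1.7875 is below that ln(10) baseline, while the 4.0673 of the first model was well above it: a model that is confidently wrong can score a worse loss than uniform guessing even when its accuracy is above chance.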

The validation accuracy was still increasing, so further training would likely yield better results. But the increase was very slow, so it might take a long time. The validation loss was running away in the beginning, but adding image augmentations pulled it back down nicely. I have been experimenting with different augmentations and their effect ranged from good to terrible :) There is still some room for improvement by adding or adjusting augmentations.

The next thing - or maybe the first - would be to clean up the dataset. Some of the images in it are terrible. They have plenty of clutter in the background. There is grilled salmon that looks like sushi. Or close-up chicken curry that could be a pizza. Removing some of those images, or preferably replacing them with higher quality images, should improve our model performance.

We could also check out the confusion matrix to see if there are classes that perform particularly badly. If there are, we can concentrate our efforts on them. (see below - sushi and ramen seem to perform OK)
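A confusion matrix is simple enough to build by hand. The following is a minimal sketch on made-up labels for three classes, not on this model's predictions: rows are the true classes, columns the predicted ones, and the per-class recall on the diagonal shows which classes need attention. Both function names are hypothetical.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """matrix[t][p] counts samples of true class t predicted as class p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def per_class_recall(matrix):
    """Fraction of each true class that was predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


# hypothetical integer labels for three classes
y_true = [0, 0, 0, 1, 1, 1, 2, 2, 2]
y_pred = [0, 0, 1, 1, 1, 1, 0, 2, 1]

cm = confusion_matrix(y_true, y_pred, num_classes=3)
print(cm)                                            # [[2, 1, 0], [0, 3, 0], [1, 1, 1]]
print([round(r, 2) for r in per_class_recall(cm)])   # [0.67, 1.0, 0.33]
```

With the real model, `y_true` and `y_pred` would be the argmax of the one-hot labels and of the predictions over the test set.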

Saving & Loading the Model

# save a model

vgg_model_multiclass_augmentation.save("../saved_models/vgg_model_multiclass_augmentation")

# INFO:tensorflow:Assets written to: ../saved_models/vgg_model_multiclass_augmentation/assets

# load a trained model

loaded_model = tf.keras.models.load_model("../saved_models/vgg_model_multiclass_augmentation")

# test if the model was loaded successfully

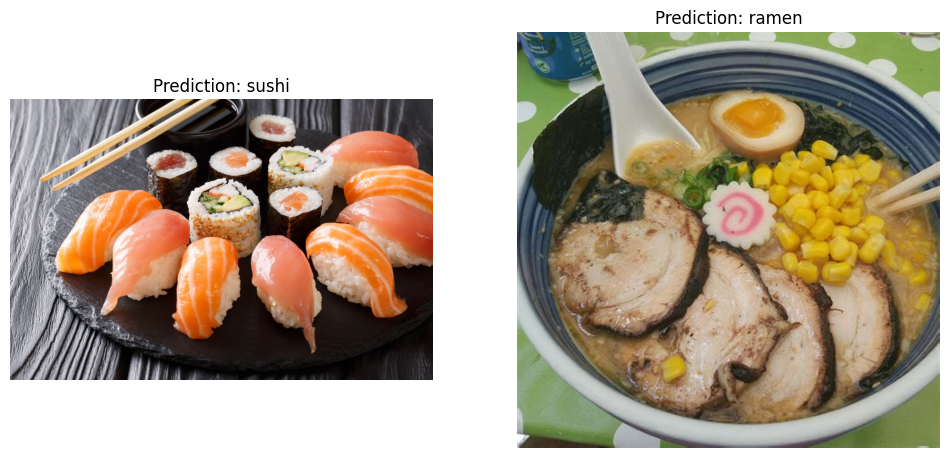

sushi_path = "../assets/sushi.jpg"

ramen_path = "../assets/ramen.jpg"

fig = plt.figure(figsize=(12, 6))

plot7 = fig.add_subplot(1, 2, 1)

pizza_image = predict_and_plot_multi(model=loaded_model, file_name=sushi_path, class_names=class_names)

plot8 = fig.add_subplot(1, 2, 2)

steak_image = predict_and_plot_multi(model=loaded_model, file_name=ramen_path, class_names=class_names)

To fast forward -> Next step Transfer Learning