Human Emotion Detection with Tensorflow

Building a Basic Model

```python
import cv2 as cv
import matplotlib.pyplot as plt
import numpy as np
from PIL import Image
from sklearn.metrics import (
    classification_report,
    confusion_matrix,
    ConfusionMatrixDisplay
)
import seaborn as sns
import tensorflow as tf
# import tensorflow_datasets as tfds
# import tensorflow_probability as tfp
from tensorflow.keras import Sequential
from tensorflow.keras.callbacks import (
    Callback,
    CSVLogger,
    EarlyStopping,
    LearningRateScheduler,
    ModelCheckpoint
)
from tensorflow.keras.layers import (
    Layer,
    GlobalAveragePooling2D,
    Conv2D,
    MaxPool2D,
    Dense,
    Flatten,
    InputLayer,
    BatchNormalization,
    Input,
    Dropout,
    RandomBrightness,
    RandomContrast,
    RandomFlip,
    RandomRotation,
    Resizing,
    Rescaling
)
from tensorflow.keras.losses import (
    BinaryCrossentropy,
    CategoricalCrossentropy,
    SparseCategoricalCrossentropy
)
from tensorflow.keras.metrics import (
    CategoricalAccuracy,
    SparseCategoricalAccuracy,
    TopKCategoricalAccuracy
)
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.regularizers import L2, L1
from tensorflow.keras.utils import image_dataset_from_directory

BATCH = 32      # images per batch
SIZE = 256      # images are resized to SIZE x SIZE
SEED = 444
EPOCHS = 30
LR = 0.001      # Adam learning rate
FILTERS = 6     # filters in the first convolution
KERNEL = 3
STRIDES = 1
REGRATE = 0.0   # L2 regularization factor (0.0 disables it)
POOL = 2
DORATE = 0.05   # dropout rate
NLABELS = 3     # angry, happy, sad
DENSE1 = 100
DENSE2 = 10
```

Dataset

```python
train_directory = './dataset/Emotions Dataset/Emotions Dataset/train'
test_directory = './dataset/Emotions Dataset/Emotions Dataset/test'
LABELS = ['angry', 'happy', 'sad']

# Labels are inferred from the sub-directory names and one-hot encoded ('categorical')
train_dataset = image_dataset_from_directory(
    train_directory,
    labels='inferred',
    label_mode='categorical',
    class_names=LABELS,
    color_mode='rgb',
    batch_size=BATCH,
    image_size=(SIZE, SIZE),
    shuffle=True,
    seed=SEED,
    # validation_split=0.2,
    # subset='validation',
    interpolation='bilinear',
    follow_links=False,
    crop_to_aspect_ratio=False
)
# Found 6799 files belonging to 3 classes.

test_dataset = image_dataset_from_directory(
    test_directory,
    labels='inferred',
    label_mode='categorical',
    class_names=LABELS,
    color_mode='rgb',
    batch_size=BATCH,
    image_size=(SIZE, SIZE),
    shuffle=True,
    seed=SEED
)
# Found 2278 files belonging to 3 classes.
```
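With BATCH = 32, the image counts reported above determine how many batches each dataset yields and how large its final, partial batch is. A small stdlib sketch of that arithmetic (the helper name batch_layout is only illustrative):

```python
import math

def batch_layout(num_images, batch_size):
    """Return (number of batches, size of the last batch) for a batched dataset."""
    num_batches = math.ceil(num_images / batch_size)
    last_batch = num_images - (num_batches - 1) * batch_size
    return num_batches, last_batch

print(batch_layout(6799, 32))  # training set
print(batch_layout(2278, 32))  # test set
```

The test set ends with a batch of only 6 images, so per-batch prediction arrays have unequal lengths and must be concatenated (or the last batch dropped) before they can be stacked into a single array.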

```python
plt.figure(figsize=(16,16))

for images, labels in train_dataset.take(1):
    for i in range(16):
        ax = plt.subplot(4,4,i+1)
        plt.title(LABELS[tf.argmax(labels[i], axis=0).numpy()])
        # Pixels are floats in [0, 255]; imshow expects floats in [0, 1]
        plt.imshow(images[i]/255.)
        plt.axis('off')

plt.savefig('assets/tf_Emotion_Detection_01.webp', bbox_inches='tight')

# Prepare the next batches while the current one is being processed
training_dataset = train_dataset.prefetch(tf.data.AUTOTUNE)
testing_dataset = test_dataset.prefetch(tf.data.AUTOTUNE)
```

LeNet 5 Model

```python
# Resize every input to SIZE x SIZE and scale pixels from [0, 255] to [0, 1]
resize_rescale_layers = Sequential([
    Resizing(SIZE, SIZE),
    Rescaling(1./255)
])

model_lenet = Sequential([
    InputLayer(input_shape=(None, None, 3)),
    resize_rescale_layers,
    # Block 1: FILTERS (6) feature maps
    Conv2D(filters=FILTERS, kernel_size=KERNEL, strides=STRIDES,
           activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    MaxPool2D(pool_size=POOL, strides=STRIDES*2),
    Dropout(rate=DORATE),
    # Block 2: FILTERS*2+4 (16) feature maps
    Conv2D(filters=FILTERS*2+4, kernel_size=KERNEL, strides=STRIDES,
           activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    MaxPool2D(pool_size=POOL, strides=STRIDES*2),
    # Classifier head
    Flatten(),
    Dense(DENSE1, activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    Dropout(rate=DORATE),
    Dense(DENSE2, activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    Dense(NLABELS, activation='softmax', name='Output')
])

# The InputLayer already fixes the input shape, so no separate build() call is needed
model_lenet.summary()
# Total params: 6,153,119
# Trainable params: 6,152,855
# Non-trainable params: 264
```
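The parameter count printed by summary() can be reproduced by hand: each Conv2D has kernel × kernel × input channels × filters weights plus one bias per filter, each BatchNormalization layer stores four values per channel (gamma and beta are trainable, the moving mean and variance are not), and the first Dense layer dominates because it sees the entire flattened feature map. The sketch below recomputes the totals for this architecture; the function name is illustrative and it assumes the Keras default 'valid' padding used above.

```python
def lenet_param_count(size, filters=6, kernel=3, pool=2, dense1=100, dense2=10, labels=3):
    """Return (total, non_trainable) parameters of the LeNet-style model above."""
    def conv_out(n):   # 'valid' convolution with stride 1
        return n - kernel + 1

    def pool_out(n):   # 'valid' max pooling with stride equal to the pool size
        return (n - pool) // pool + 1

    total = non_trainable = 0
    # Block 1: Conv2D(filters) on RGB input + BatchNormalization
    total += kernel * kernel * 3 * filters + filters + 4 * filters
    non_trainable += 2 * filters
    side = pool_out(conv_out(size))
    # Block 2: Conv2D(filters * 2 + 4) + BatchNormalization
    filters2 = filters * 2 + 4
    total += kernel * kernel * filters * filters2 + filters2 + 4 * filters2
    non_trainable += 2 * filters2
    side = pool_out(conv_out(side))
    flat = side * side * filters2
    # Dense(dense1) + BatchNormalization
    total += flat * dense1 + dense1 + 4 * dense1
    non_trainable += 2 * dense1
    # Dense(dense2) + BatchNormalization
    total += dense1 * dense2 + dense2 + 4 * dense2
    non_trainable += 2 * dense2
    # Output layer
    total += dense2 * labels + labels
    return total, non_trainable

print(lenet_param_count(256))
print(lenet_param_count(224))
```

For 256 × 256 inputs this yields 6,153,119 parameters (264 non-trainable), of which 6,150,500 belong to the first Dense layer; the same layers hold 4,668,319 parameters for 224 × 224 inputs.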

```python
# The model ends in a softmax, so the loss receives probabilities, not logits
loss_function = CategoricalCrossentropy(
    from_logits = False,
    label_smoothing = 0.0,
    axis = -1,
    name = 'categorical_crossentropy'
)
```
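CategoricalCrossentropy with from_logits=False expects softmax probabilities and one-hot labels. Per sample it is the negative log-probability of the target distribution, and label_smoothing = ε replaces a one-hot target y with y(1 - ε) + ε/K for K classes. A stdlib sketch of that formula for a single sample (an illustrative helper, not the Keras implementation, which additionally clips probabilities and averages over the batch):

```python
import math

def categorical_crossentropy(y_true, y_pred, label_smoothing=0.0):
    """-sum(y * log(p)) for one sample, with optional label smoothing."""
    k = len(y_true)
    targets = [y * (1 - label_smoothing) + label_smoothing / k for y in y_true]
    return -sum(t * math.log(p) for t, p in zip(targets, y_pred))

probs = [0.1, 0.8, 0.1]  # softmax output for (angry, happy, sad)
print(categorical_crossentropy([0, 1, 0], probs))
print(categorical_crossentropy([0, 1, 0], probs, label_smoothing=0.1))
```

Without smoothing, the loss is simply -log(0.8); with smoothing, a confident correct prediction is penalized slightly more, which discourages over-confident outputs.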

```python
metrics = [
    CategoricalAccuracy(name='accuracy'),
    # A prediction counts as correct if the true class is among the 2 highest scores
    TopKCategoricalAccuracy(k=2, name='topk-accuracy')
]

model_lenet.compile(
    optimizer = Adam(learning_rate = LR),
    loss = loss_function,
    metrics = metrics
)
```
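TopKCategoricalAccuracy(k=2) counts a prediction as a hit when the true class is among the two highest-scoring classes. With only three labels, a top-2 miss means the true emotion received the lowest score, which is why this metric is much higher than plain accuracy. A stdlib sketch (illustrative helper; ties are broken by class order here, which may differ from Keras):

```python
def top_k_accuracy(y_true, y_pred, k=2):
    """Fraction of samples whose true class is among the k highest scores."""
    hits = 0
    for target, scores in zip(y_true, y_pred):
        true_class = target.index(max(target))  # one-hot vector -> class index
        ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
        hits += true_class in ranked[:k]
    return hits / len(y_true)

y_true = [[0, 1, 0], [1, 0, 0], [0, 0, 1]]
y_pred = [[0.2, 0.7, 0.1], [0.3, 0.6, 0.1], [0.5, 0.4, 0.1]]
print(top_k_accuracy(y_true, y_pred, k=1))
print(top_k_accuracy(y_true, y_pred, k=2))
```

Here the second sample is a top-1 miss but a top-2 hit, while the third sample misses both because the true class has the lowest score.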

Model Training

history_lenet = model_lenet.fit(

training_dataset,

validation_data = testing_dataset,

epochs = EPOCHS,

verbose = 1

)

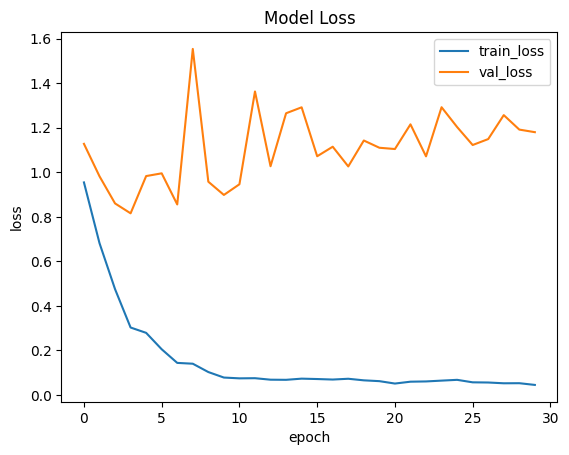

# loss: 0.0454

# accuracy: 0.9803

# topk-accuracy: 0.9982

# val_loss: 1.1798

# val_accuracy: 0.7441

# val_topk-accuracy: 0.8955

plt.plot(history_lenet.history['loss'])

plt.plot(history_lenet.history['val_loss'])

plt.title('Model Loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train_loss', 'val_loss'])

plt.show()

plt.plot(history_lenet.history['accuracy'])

plt.plot(history_lenet.history['val_accuracy'])

plt.title('Model Accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train_accuracy', 'val_accuracy'])

plt.show()

Model Evaluation

```python
model_lenet.evaluate(testing_dataset)
# loss: 1.1798 - accuracy: 0.7441 - topk-accuracy: 0.8955

# Note: cv.imread returns the channels in BGR order, while the training images were
# loaded as RGB; cv.cvtColor(test_image, cv.COLOR_BGR2RGB) would match the training data.
# No resizing is needed here because the model starts with a Resizing layer.
test_image = cv.imread('./dataset/happy.jpg')
img = tf.constant(test_image, dtype=tf.float32)
img = tf.expand_dims(img, axis=0)  # add the batch dimension
label = model_lenet(img).numpy()
print(label)
# [[0.1078622 0.8603977 0.03174012]]
print(LABELS[tf.argmax(label, axis=1).numpy()[0]])
# happy

test_image = cv.imread('./dataset/sad.jpg')
img = tf.constant(test_image, dtype=tf.float32)
img = tf.expand_dims(img, axis=0)
label = model_lenet(img).numpy()
print(label)
# [[9.1999680e-02 2.9016874e-04 9.0771013e-01]]
print(LABELS[tf.argmax(label, axis=1).numpy()[0]])
# sad

test_image = cv.imread('./dataset/angry.jpg')
img = tf.constant(test_image, dtype=tf.float32)
img = tf.expand_dims(img, axis=0)
label = model_lenet(img).numpy()
print(label)
# [[9.9998641e-01 1.3307266e-06 1.2283378e-05]]
print(LABELS[tf.argmax(label, axis=1).numpy()[0]])
# angry
```
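Each prediction is a softmax vector whose entries follow the order of LABELS, so the predicted emotion is simply the label at the arg-max position. A stdlib sketch that reproduces the three predictions above from the printed probability vectors (the helper name is illustrative):

```python
LABELS = ['angry', 'happy', 'sad']

def probabilities_to_label(probabilities, labels=LABELS):
    """Map one softmax vector to (label, confidence) via its arg-max."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return labels[best], probabilities[best]

print(probabilities_to_label([0.1078622, 0.8603977, 0.03174012]))
print(probabilities_to_label([9.1999680e-02, 2.9016874e-04, 9.0771013e-01]))
print(probabilities_to_label([9.9998641e-01, 1.3307266e-06, 1.2283378e-05]))
```

The confidence value is useful when reading the later models' outputs: a prediction such as [0.15, 0.37, 0.47] still maps to 'sad', but with far less certainty than the vectors above.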

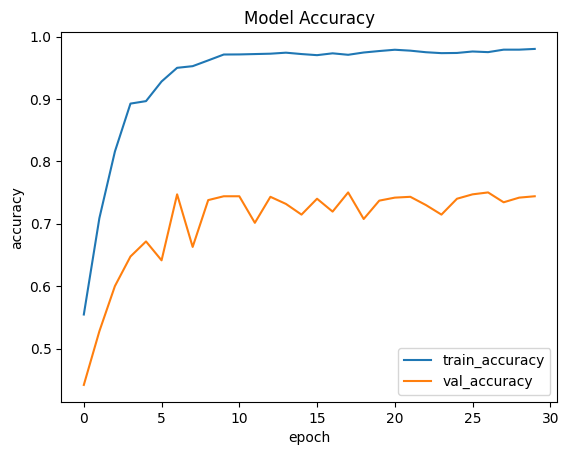

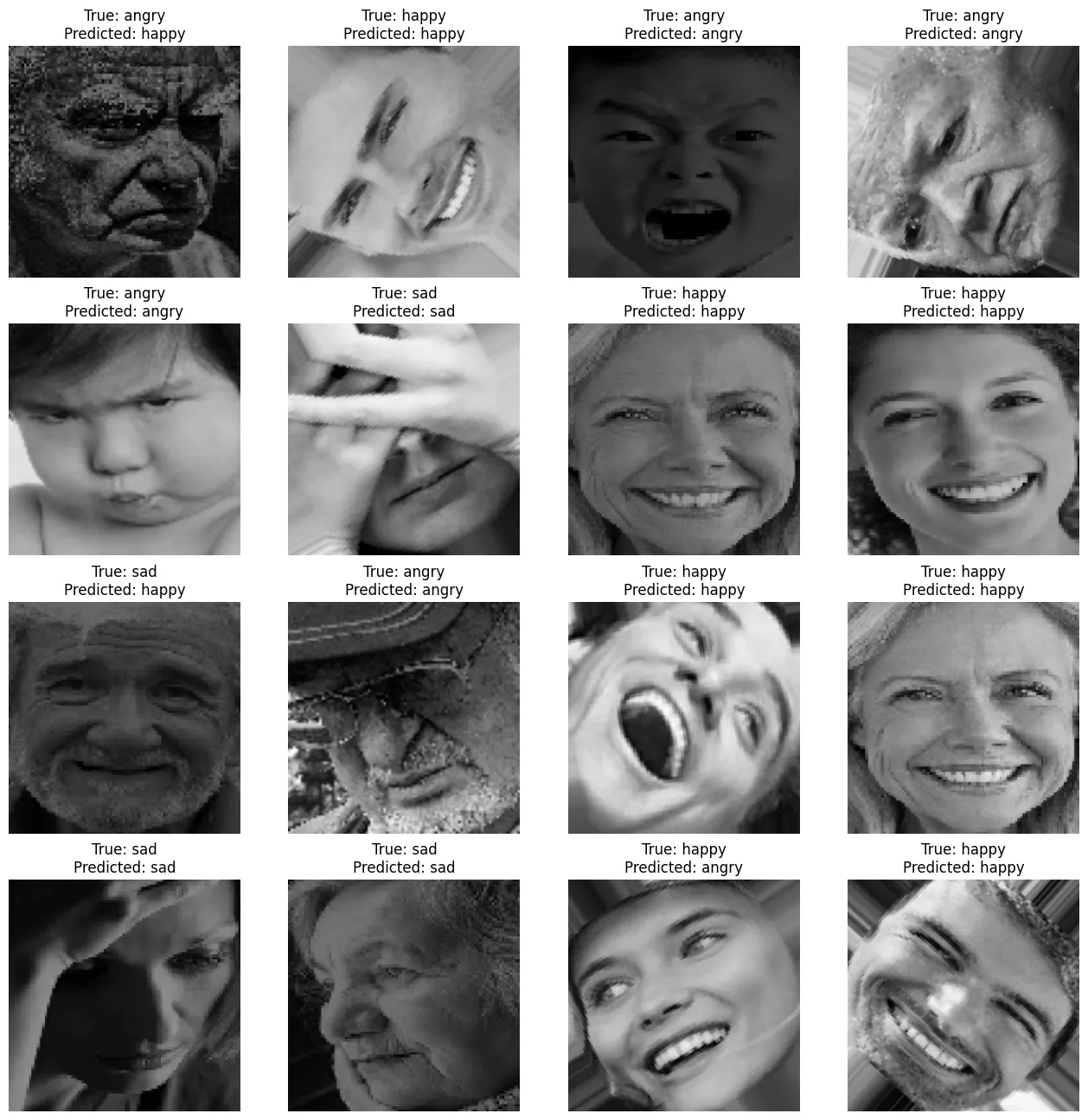

```python
plt.figure(figsize=(16,16))

for images, labels in test_dataset.take(1):
    for i in range(16):
        ax = plt.subplot(4,4,i+1)
        true = "True: " + LABELS[tf.argmax(labels[i], axis=0).numpy()]
        pred = "Predicted: " + LABELS[
            tf.argmax(model_lenet(tf.expand_dims(images[i], axis=0)).numpy(), axis=1).numpy()[0]
        ]
        plt.title(true + "\n" + pred)
        plt.imshow(images[i]/255.)
        plt.axis('off')

plt.savefig('assets/tf_Emotion_Detection_04.webp', bbox_inches='tight')

y_pred = []
y_test = []

for img, label in testing_dataset:
    y_pred.append(model_lenet(img).numpy())
    y_test.append(label.numpy())

# Concatenate the per-batch arrays (the last batch is smaller, so it cannot be
# stacked) and turn probabilities / one-hot labels into class indices
y_test = np.concatenate(y_test).argmax(axis=-1)
y_pred = np.concatenate(y_pred).argmax(axis=-1)

conf_mtx = ConfusionMatrixDisplay(
    confusion_matrix=confusion_matrix(y_test, y_pred),
    display_labels=LABELS
)

fig, ax = plt.subplots(figsize=(16,12))
conf_mtx.plot(ax=ax, cmap='plasma', include_values=False)
plt.savefig('assets/tf_Emotion_Detection_05.webp', bbox_inches='tight')
```
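A confusion matrix counts, for every true class (row), how often each class was predicted (column); the diagonal holds the correct predictions, and the diagonal sum divided by the number of samples is the accuracy. A stdlib sketch of what sklearn's confusion_matrix computes for integer class indices (the helper and the sample labels are made up for illustration):

```python
def confusion_counts(y_true, y_pred, num_classes=3):
    """Rows are true classes, columns are predicted classes."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix

y_true = [0, 0, 1, 1, 1, 2, 2, 2]  # 0 = angry, 1 = happy, 2 = sad
y_pred = [0, 2, 1, 1, 2, 2, 2, 1]
matrix = confusion_counts(y_true, y_pred)
accuracy = sum(matrix[i][i] for i in range(3)) / len(y_true)
print(matrix)
print(accuracy)
```

Since include_values=False hides the counts in the plots, printing the matrix returned by confusion_matrix is a quick way to read the exact numbers.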

Add Data Augmentation to prevent Overfitting

```python
resize_rescale_layers = Sequential([
    Resizing(SIZE, SIZE),
    Rescaling(1./255)
])

# Random transformations, applied to the training images only
data_augmentation = Sequential([
    RandomRotation(factor=0.25),
    RandomFlip(mode='horizontal'),
    RandomContrast(factor=0.1),
    # RandomBrightness(0.1)
])
```
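RandomRotation expresses its factor as a fraction of a full turn (2π), so factor=0.25 rotates each image by a random angle of up to ±90°, a strong setting for face images. RandomContrast draws a factor from [1 - 0.1, 1 + 0.1] and, per channel, maps each pixel x to (x - mean) × factor + mean. A stdlib sketch of both conversions (illustrative helpers, ignoring any clipping Keras applies afterwards):

```python
def rotation_range_degrees(factor):
    """Maximum rotation in degrees for a symmetric RandomRotation factor."""
    return factor * 360.0

def adjust_contrast(channel, contrast_factor):
    """Contrast change for one channel: (x - mean) * factor + mean."""
    mean = sum(channel) / len(channel)
    return [(x - mean) * contrast_factor + mean for x in channel]

print(rotation_range_degrees(0.25))  # 90.0
print(adjust_contrast([0.0, 100.0, 200.0], 1.1))
```

The contrast adjustment keeps the channel mean unchanged and only stretches or compresses the values around it.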

```python
# Pass training=True explicitly so the random layers are guaranteed to run in
# augmentation mode when they are called inside a tf.data pipeline
training_dataset = (
    train_dataset
    .map(lambda image, label: (data_augmentation(image, training=True), label),
         num_parallel_calls=tf.data.AUTOTUNE)
    .prefetch(tf.data.AUTOTUNE)
)

testing_dataset = test_dataset.prefetch(tf.data.AUTOTUNE)

model_lenet = Sequential([
    InputLayer(input_shape=(None, None, 3)),
    resize_rescale_layers,
    Conv2D(filters=FILTERS, kernel_size=KERNEL, strides=STRIDES,
           activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    MaxPool2D(pool_size=POOL, strides=STRIDES*2),
    Dropout(rate=DORATE),
    Conv2D(filters=FILTERS*2+4, kernel_size=KERNEL, strides=STRIDES,
           activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    MaxPool2D(pool_size=POOL, strides=STRIDES*2),
    Flatten(),
    Dense(DENSE1, activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    Dropout(rate=DORATE),
    Dense(DENSE2, activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    Dense(NLABELS, activation='softmax', name='Output')
])

model_lenet.summary()
# Total params: 6,153,119
# Trainable params: 6,152,855
# Non-trainable params: 264
```

```python
loss_function = CategoricalCrossentropy(
    from_logits = False,
    label_smoothing = 0.0,
    axis = -1,
    name = 'categorical_crossentropy'
)

metrics = [
    CategoricalAccuracy(name='accuracy'),
    TopKCategoricalAccuracy(k=2, name='topk-accuracy')
]

model_lenet.compile(
    optimizer = Adam(learning_rate = LR),
    loss = loss_function,
    metrics = metrics
)
```

Model Training

```python
history_lenet = model_lenet.fit(
    training_dataset,
    validation_data = testing_dataset,
    epochs = EPOCHS,
    verbose = 1
)
```

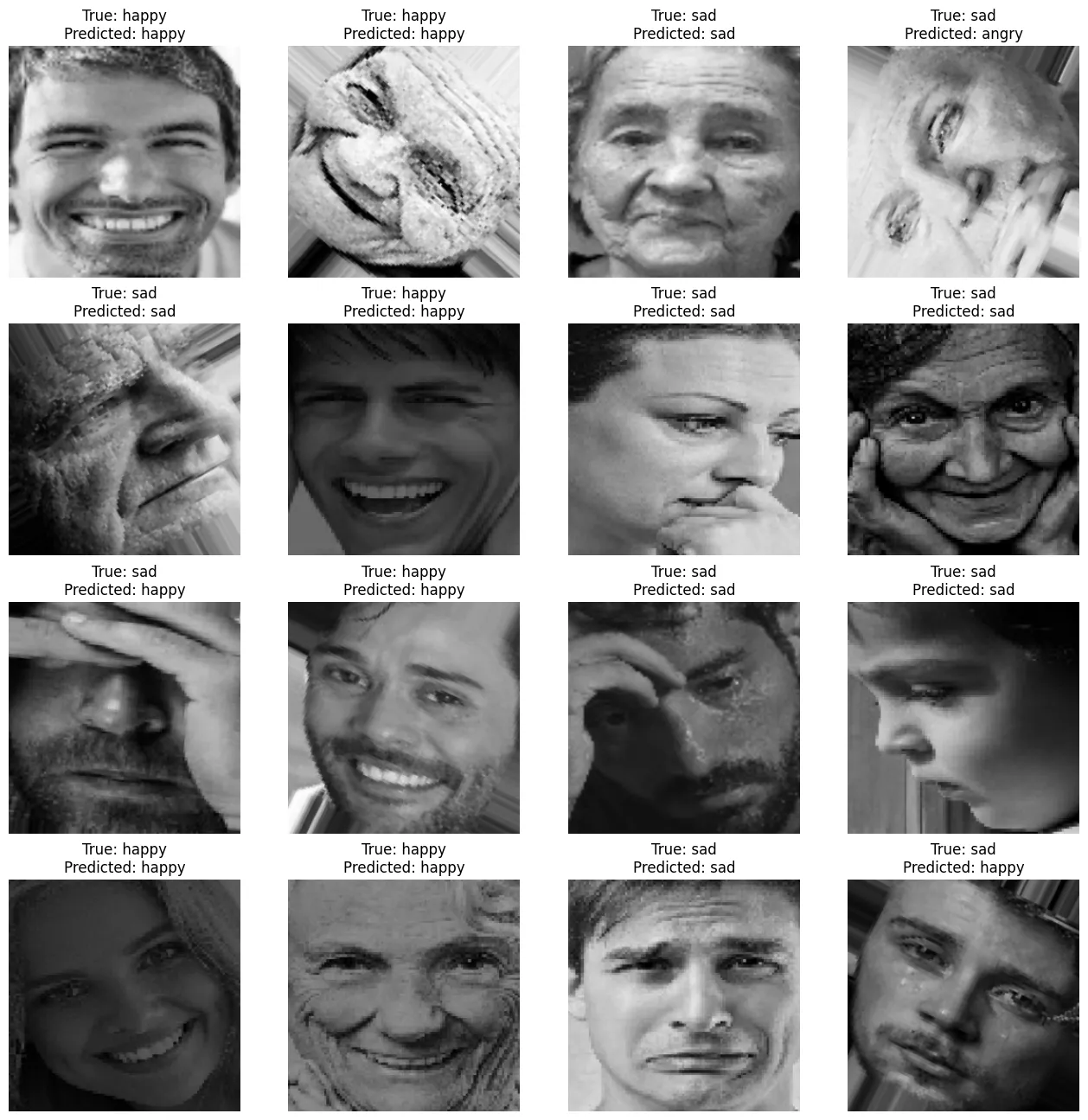

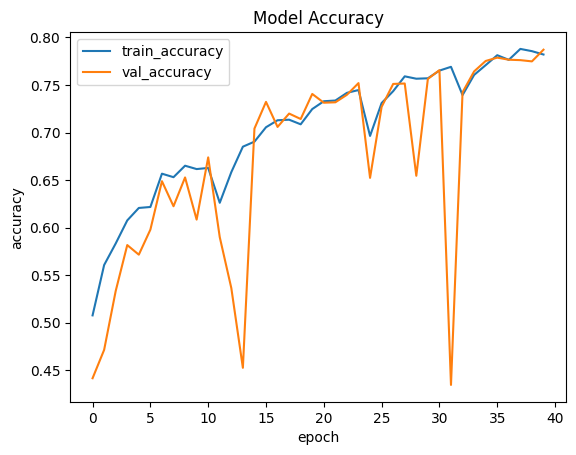

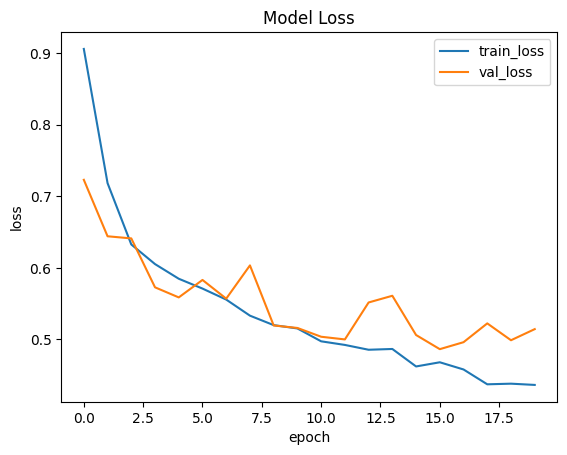

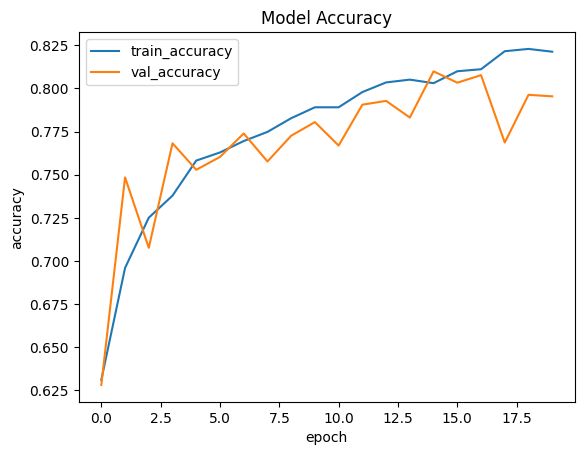

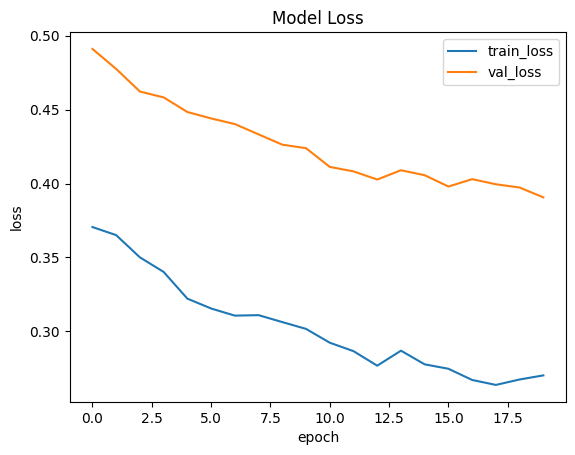

# loss: 0.5282 - accuracy: 0.7820

# topk-accuracy: 0.9394

# val_loss: 0.5216

# val_accuracy: 0.7871

# val_topk-accuracy: 0.9407

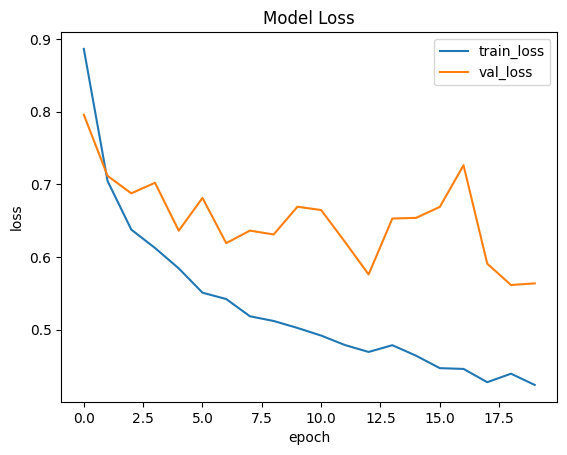

plt.plot(history_lenet.history['loss'])

plt.plot(history_lenet.history['val_loss'])

plt.title('Model Loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train_loss', 'val_loss'])

plt.show()

plt.plot(history_lenet.history['accuracy'])

plt.plot(history_lenet.history['val_accuracy'])

plt.title('Model Accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train_accuracy', 'val_accuracy'])

plt.show()

Model Evaluation

model_lenet.evaluate(testing_dataset)

# loss: 0.5216 - accuracy: 0.7871 - topk-accuracy: 0.9407

test_image = cv.imread('./dataset/happy.jpg')

img = tf.constant(test_image, dtype=tf.float32)

img = tf.expand_dims(img, axis=0)

label = model_lenet(img).numpy()

print(label)

# [[0.1078622 0.8603977 0.03174012]]

print(LABELS[tf.argmax(label, axis=1).numpy()[0]])

# [[1.0409105e-03 3.4608482e-04 9.9861300e-01]]

# sad

test_image = cv.imread('./dataset/sad.jpg')

img = tf.constant(test_image, dtype=tf.float32)

img = tf.expand_dims(img, axis=0)

label = model_lenet(img).numpy()

print(label)

# [[9.1999680e-02 2.9016874e-04 9.0771013e-01]]

print(LABELS[tf.argmax(label, axis=1).numpy()[0]])

# [[0.00754709 0.09368072 0.89877224]]

# sad

test_image = cv.imread('./dataset/angry.jpg')

img = tf.constant(test_image, dtype=tf.float32)

img = tf.expand_dims(img, axis=0)

label = model_lenet(img).numpy()

print(label)

# [[9.9998641e-01 1.3307266e-06 1.2283378e-05]]

print(LABELS[tf.argmax(label, axis=1).numpy()[0]])

# [[0.17887418 0.5655646 0.25556126]]

# happy

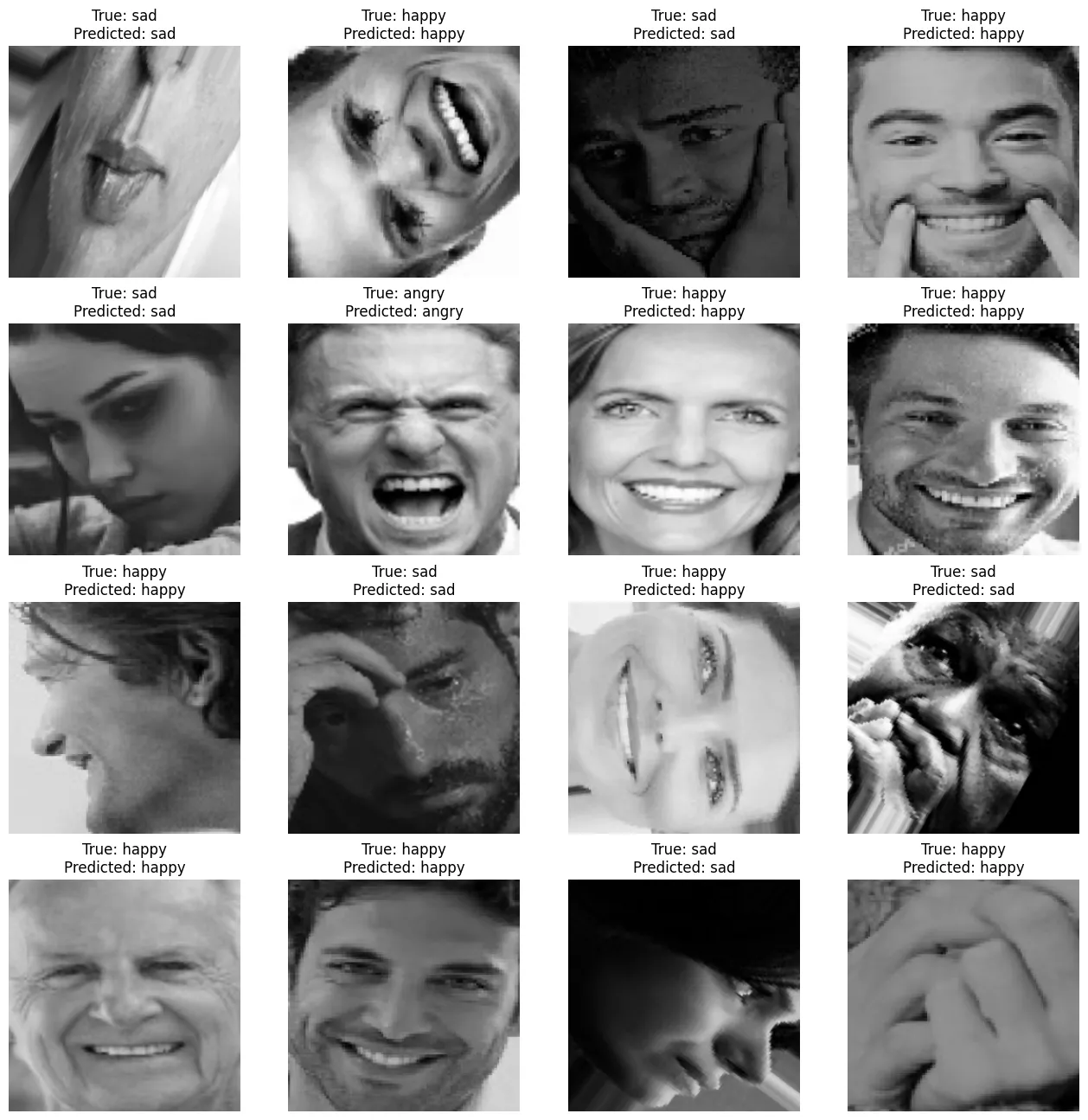

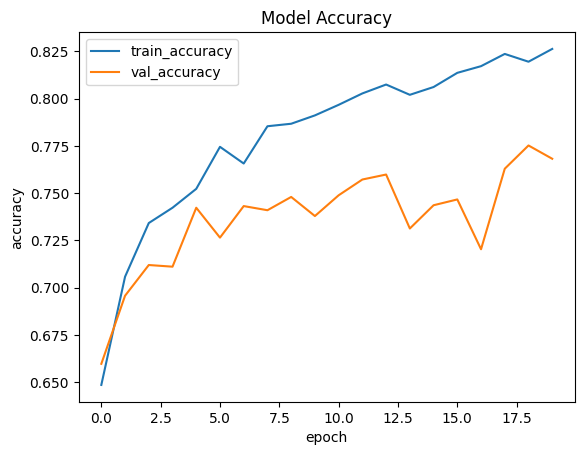

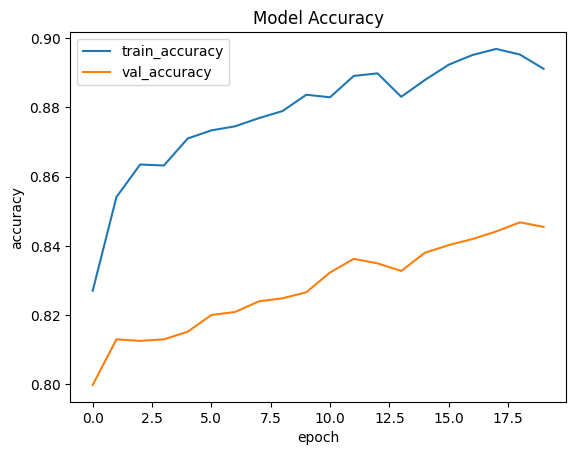

plt.figure(figsize=(16,16))

for images, labels in test_dataset.take(1):

for i in range(16):

ax = plt.subplot(4,4,i+1)

true = "True: " + LABELS[tf.argmax(labels[i], axis=0).numpy()]

pred = "Predicted: " + LABELS[

tf.argmax(model_lenet(tf.expand_dims(images[i], axis=0)).numpy(), axis=1).numpy()[0]

]

plt.title(

true + "\n" + pred

)

plt.imshow(images[i]/255.)

plt.axis('off')

plt.savefig('assets/tf_Emotion_Detection_08.webp', bbox_inches='tight')

y_pred = []

y_test = []

for img, label in testing_dataset:

y_pred.append(model_lenet(img))

y_test.append(label.numpy())

conf_mtx = ConfusionMatrixDisplay(

confusion_matrix=confusion_matrix(

np.argmax(y_test[:-1], axis=-1).flatten(),

np.argmax(y_pred[:-1], axis=-1).flatten()

),

display_labels=LABELS

)

fig, ax = plt.subplots(figsize=(16,12))

conf_mtx.plot(ax=ax, cmap='plasma', include_values=False)

plt.savefig('assets/tf_Emotion_Detection_09.webp', bbox_inches='tight')

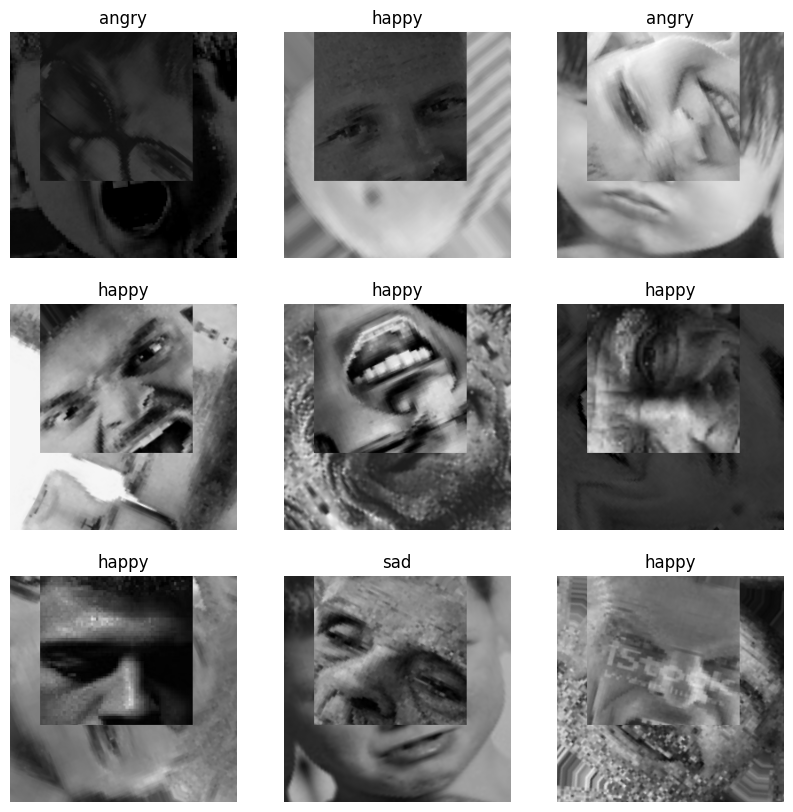

CutMix Data Augmentation

```python
# Beta sample built from two Gamma samples: X / (X + Y) with
# X ~ Gamma(concentration_1) and Y ~ Gamma(concentration_0)
def sample_beta_distribution(size, concentration_0=0.2, concentration_1=0.2):
    gamma_1_sample = tf.random.gamma(shape=[size], alpha=concentration_1)
    gamma_2_sample = tf.random.gamma(shape=[size], alpha=concentration_0)
    return gamma_1_sample / (gamma_1_sample + gamma_2_sample)
```
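sample_beta_distribution relies on a standard identity: if X ~ Gamma(a) and Y ~ Gamma(b) are independent, then X / (X + Y) ~ Beta(a, b), with mean a / (a + b). With the argument names above, the result follows Beta(concentration_1, concentration_0); cutmix passes equal concentrations (0.25), so the order does not matter there. A stdlib check of the identity using random.gammavariate (the seeds and sample counts are arbitrary choices):

```python
import random

def sample_beta(a, b, rng):
    """Draw one Beta(a, b) sample as a ratio of two Gamma draws."""
    x = rng.gammavariate(a, 1.0)
    y = rng.gammavariate(b, 1.0)
    return x / (x + y)

rng = random.Random(444)
samples = [sample_beta(2.0, 5.0, rng) for _ in range(20000)]
print(sum(samples) / len(samples))  # close to 2 / (2 + 5)

# Small concentrations such as 0.25 push most samples towards 0 or 1,
# so most CutMix patches are either very small or very large
u_rng = random.Random(1)
u_samples = [sample_beta(0.25, 0.25, u_rng) for _ in range(20000)]
print(sum(1 for s in u_samples if s < 0.1 or s > 0.9) / len(u_samples))
```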

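```python
data_augmentation = Sequential([
    RandomRotation(factor=0.25),
    RandomFlip(mode='horizontal'),
    RandomContrast(factor=0.1),
    RandomBrightness(0.1)
])

# Two independently augmented copies of the training set, to be mixed by CutMix
train_ds_one = train_dataset.map(
    lambda image, label: (data_augmentation(image, training=True), label))

# Re-shuffle the second copy at the image level and re-batch it so each batch is
# paired with different images; both copies still yield 212 batches of 32 plus one of 15
train_ds_two = (
    train_dataset
    .unbatch()
    .shuffle(1024, seed=SEED + 1)
    .batch(BATCH)
    .map(lambda image, label: (data_augmentation(image, training=True), label))
)

train_ds = tf.data.Dataset.zip((train_ds_one, train_ds_two))
```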

```python
@tf.function
def get_box(lambda_value):
    # Side length of the square patch: SIZE * sqrt(1 - lambda)
    cut_rat = tf.math.sqrt(1.0 - lambda_value)

    cut_w = SIZE * cut_rat  # rw
    cut_w = tf.cast(cut_w, tf.int32)

    cut_h = SIZE * cut_rat  # rh
    cut_h = tf.cast(cut_h, tf.int32)

    # Random patch centre
    cut_x = tf.random.uniform((1,), minval=0, maxval=SIZE, dtype=tf.int32)  # rx
    cut_y = tf.random.uniform((1,), minval=0, maxval=SIZE, dtype=tf.int32)  # ry

    # Patch corners, clipped to the image borders
    boundaryx1 = tf.clip_by_value(cut_x[0] - cut_w // 2, 0, SIZE)
    boundaryy1 = tf.clip_by_value(cut_y[0] - cut_h // 2, 0, SIZE)
    bbx2 = tf.clip_by_value(cut_x[0] + cut_w // 2, 0, SIZE)
    bby2 = tf.clip_by_value(cut_y[0] + cut_h // 2, 0, SIZE)

    # Never return an empty patch
    target_h = bby2 - boundaryy1
    if target_h == 0:
        target_h += 1

    target_w = bbx2 - boundaryx1
    if target_w == 0:
        target_w += 1

    return boundaryx1, boundaryy1, target_h, target_w
```
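get_box turns λ into a square patch whose side is SIZE × √(1 - λ), so before clipping the patch covers a (1 - λ) fraction of the image. Clipping at the borders can shrink it, which is why cutmix recomputes λ from the actual patch area afterwards. A stdlib sketch of the same arithmetic with the random centre passed in explicitly (function name and parameters are illustrative):

```python
import math

SIZE = 256

def box_from_lambda(lambda_value, cut_x, cut_y, size=SIZE):
    """Return (x1, y1, height, width) of the patch centred at (cut_x, cut_y)."""
    cut = int(size * math.sqrt(1.0 - lambda_value))  # side length before clipping
    x1 = min(max(cut_x - cut // 2, 0), size)
    y1 = min(max(cut_y - cut // 2, 0), size)
    x2 = min(max(cut_x + cut // 2, 0), size)
    y2 = min(max(cut_y + cut // 2, 0), size)
    height = max(y2 - y1, 1)  # never return an empty patch
    width = max(x2 - x1, 1)
    return x1, y1, height, width

print(box_from_lambda(0.75, 128, 128))  # patch fully inside the image
print(box_from_lambda(0.75, 10, 250))   # patch clipped at two borders
```

In the second call the clipped patch covers 70 × 74 = 5,180 pixels instead of 128 × 128 = 16,384, so the label weights must follow the clipped area rather than the sampled λ.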

```python
@tf.function
def cutmix(train_ds_one, train_ds_two):
    (image1, label1), (image2, label2) = train_ds_one, train_ds_two

    alpha = [0.25]
    beta = [0.25]

    # Get a sample from the Beta distribution
    lambda_value = sample_beta_distribution(1, alpha, beta)

    # Define Lambda
    lambda_value = lambda_value[0][0]

    # Get the bounding box offsets, heights and widths
    boundaryx1, boundaryy1, target_h, target_w = get_box(lambda_value)

    # Get a patch from the second image (`image2`)
    crop2 = tf.image.crop_to_bounding_box(
        image2, boundaryy1, boundaryx1, target_h, target_w
    )
    # Pad the `image2` patch (`crop2`) with the same offset
    image2 = tf.image.pad_to_bounding_box(
        crop2, boundaryy1, boundaryx1, SIZE, SIZE
    )

    # Get a patch from the first image (`image1`)
    crop1 = tf.image.crop_to_bounding_box(
        image1, boundaryy1, boundaryx1, target_h, target_w
    )
    # Pad the `image1` patch (`crop1`) with the same offset
    img1 = tf.image.pad_to_bounding_box(
        crop1, boundaryy1, boundaryx1, SIZE, SIZE
    )

    # Modify the first image by subtracting the patch from `image1`
    # (before applying the `image2` patch)
    image1 = image1 - img1
    # Add the modified `image1` and `image2` together to get the CutMix image
    image = image1 + image2

    # Adjust Lambda in accordance with the pixel ratio
    lambda_value = 1 - (target_w * target_h) / (SIZE * SIZE)
    lambda_value = tf.cast(lambda_value, tf.float32)

    # Combine the labels of both images
    label = lambda_value * label1 + (1 - lambda_value) * label2

    return image, label
```
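After pasting, cutmix recomputes λ as the fraction of pixels still showing image1, λ = 1 - (w · h) / SIZE², and blends the one-hot labels with the same weights, so the target remains a valid probability vector. A stdlib sketch of that step (illustrative helper):

```python
def mix_labels(label1, label2, target_h, target_w, size=256):
    """Blend two one-hot labels by the share of pixels each image contributes."""
    lam = 1 - (target_w * target_h) / (size * size)
    return [lam * a + (1 - lam) * b for a, b in zip(label1, label2)]

angry, happy = [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]
print(mix_labels(angry, happy, target_h=128, target_w=128))  # [0.75, 0.25, 0.0]
```

A 128 × 128 patch covers a quarter of a 256 × 256 image, so the 'happy' image contributes 25% of the label. Reducing such a label to its argmax keeps only the dominant class and discards the mixing weights.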

```python
# Create the new dataset using our `cutmix` utility
train_ds_cmu = (
    train_ds.shuffle(1024)
    .map(cutmix, num_parallel_calls=tf.data.AUTOTUNE)
    .prefetch(tf.data.AUTOTUNE)
)

test_ds = test_dataset.prefetch(tf.data.AUTOTUNE)

# Let's preview 9 samples from the dataset
image_batch, label_batch = next(iter(train_ds_cmu))
plt.figure(figsize=(10, 10))
for i in range(9):
    ax = plt.subplot(3, 3, i + 1)
    # The title shows the dominant class of the mixed label
    plt.title(LABELS[np.argmax(label_batch[i])])
    # Contrast and brightness changes can leave [0, 255]; clip for display
    plt.imshow(np.clip(image_batch[i] / 255, 0, 1))
    plt.axis("off")

resize_rescale_layers = Sequential([
    Resizing(SIZE, SIZE),
    Rescaling(1./255)
])
```

Model Building

```python
model_lenet = Sequential([
    InputLayer(input_shape=(None, None, 3)),
    resize_rescale_layers,
    Conv2D(filters=FILTERS, kernel_size=KERNEL, strides=STRIDES,
           activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    MaxPool2D(pool_size=POOL, strides=STRIDES*2),
    Dropout(rate=DORATE),
    Conv2D(filters=FILTERS*2+4, kernel_size=KERNEL, strides=STRIDES,
           activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    MaxPool2D(pool_size=POOL, strides=STRIDES*2),
    Flatten(),
    Dense(DENSE1, activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    Dropout(rate=DORATE),
    Dense(DENSE2, activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    Dense(NLABELS, activation='softmax', name='Output')
])

model_lenet.summary()
# Total params: 6,153,119
# Trainable params: 6,152,855
# Non-trainable params: 264

loss_function = CategoricalCrossentropy(
    from_logits = False,
    label_smoothing = 0.0,
    axis = -1,
    name = 'categorical_crossentropy'
)

metrics = [
    CategoricalAccuracy(name='accuracy'),
    TopKCategoricalAccuracy(k=2, name='topk-accuracy')
]

model_lenet.compile(
    optimizer = Adam(learning_rate = LR),
    loss = loss_function,
    metrics = metrics
)
```

Model Training

```python
history_lenet = model_lenet.fit(
    train_ds_cmu,
    validation_data = test_ds,
    epochs = EPOCHS,
    verbose = 1
)
```

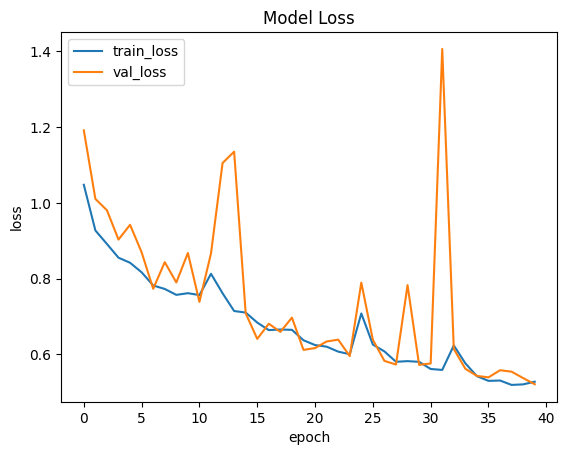

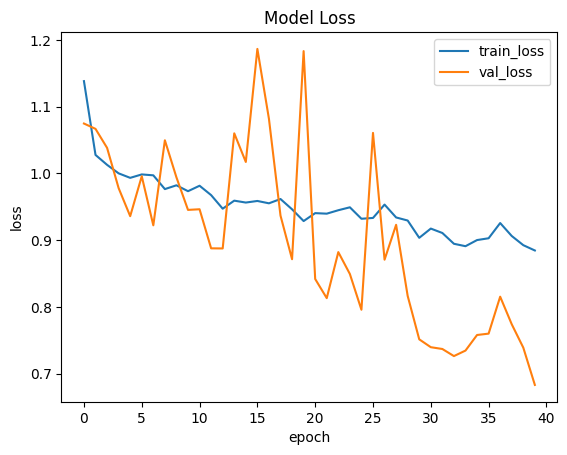

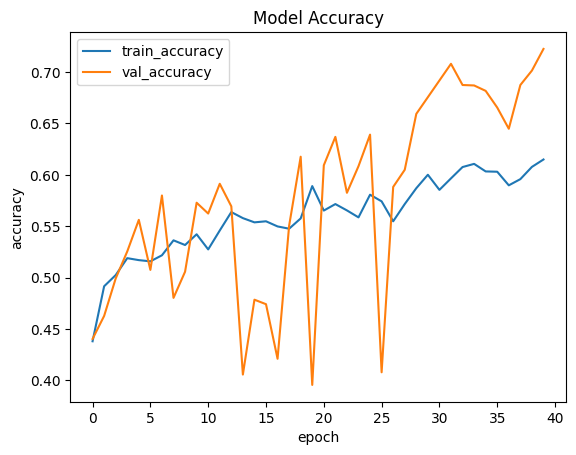

```python
# loss: 0.8846
# accuracy: 0.6149
# topk-accuracy: 0.8550
# val_loss: 0.6832
# val_accuracy: 0.7226
# val_topk-accuracy: 0.9083
# The training scores are computed on mixed CutMix images and soft labels,
# so they are not directly comparable with the validation scores

plt.plot(history_lenet.history['loss'])
plt.plot(history_lenet.history['val_loss'])
plt.title('Model Loss')
plt.ylabel('loss')
plt.xlabel('epoch')
plt.legend(['train_loss', 'val_loss'])
plt.show()

plt.plot(history_lenet.history['accuracy'])
plt.plot(history_lenet.history['val_accuracy'])
plt.title('Model Accuracy')
plt.ylabel('accuracy')
plt.xlabel('epoch')
plt.legend(['train_accuracy', 'val_accuracy'])
plt.show()
```

Model Evaluation

model_lenet.evaluate(test_ds)

# loss: 0.6832 - accuracy: 0.7226 - topk-accuracy: 0.9083

test_image = cv.imread('./dataset/happy.jpg')

img = tf.constant(test_image, dtype=tf.float32)

img = tf.expand_dims(img, axis=0)

label = model_lenet(img).numpy()

print(label)

# [[0.1078622 0.8603977 0.03174012]]

print(LABELS[tf.argmax(label, axis=1).numpy()[0]])

# [[0.1541878 0.37400016 0.47181207]]

# sad

test_image = cv.imread('./dataset/sad.jpg')

img = tf.constant(test_image, dtype=tf.float32)

img = tf.expand_dims(img, axis=0)

label = model_lenet(img).numpy()

print(label)

# [[9.1999680e-02 2.9016874e-04 9.0771013e-01]]

print(LABELS[tf.argmax(label, axis=1).numpy()[0]])

# [[0.20370252 0.60639006 0.1899074 ]]

# happy

test_image = cv.imread('./dataset/angry.jpg')

img = tf.constant(test_image, dtype=tf.float32)

img = tf.expand_dims(img, axis=0)

label = model_lenet(img).numpy()

print(label)

# [[9.9998641e-01 1.3307266e-06 1.2283378e-05]]

print(LABELS[tf.argmax(label, axis=1).numpy()[0]])

# [[0.07486102 0.8021671 0.1229719 ]]

# happy

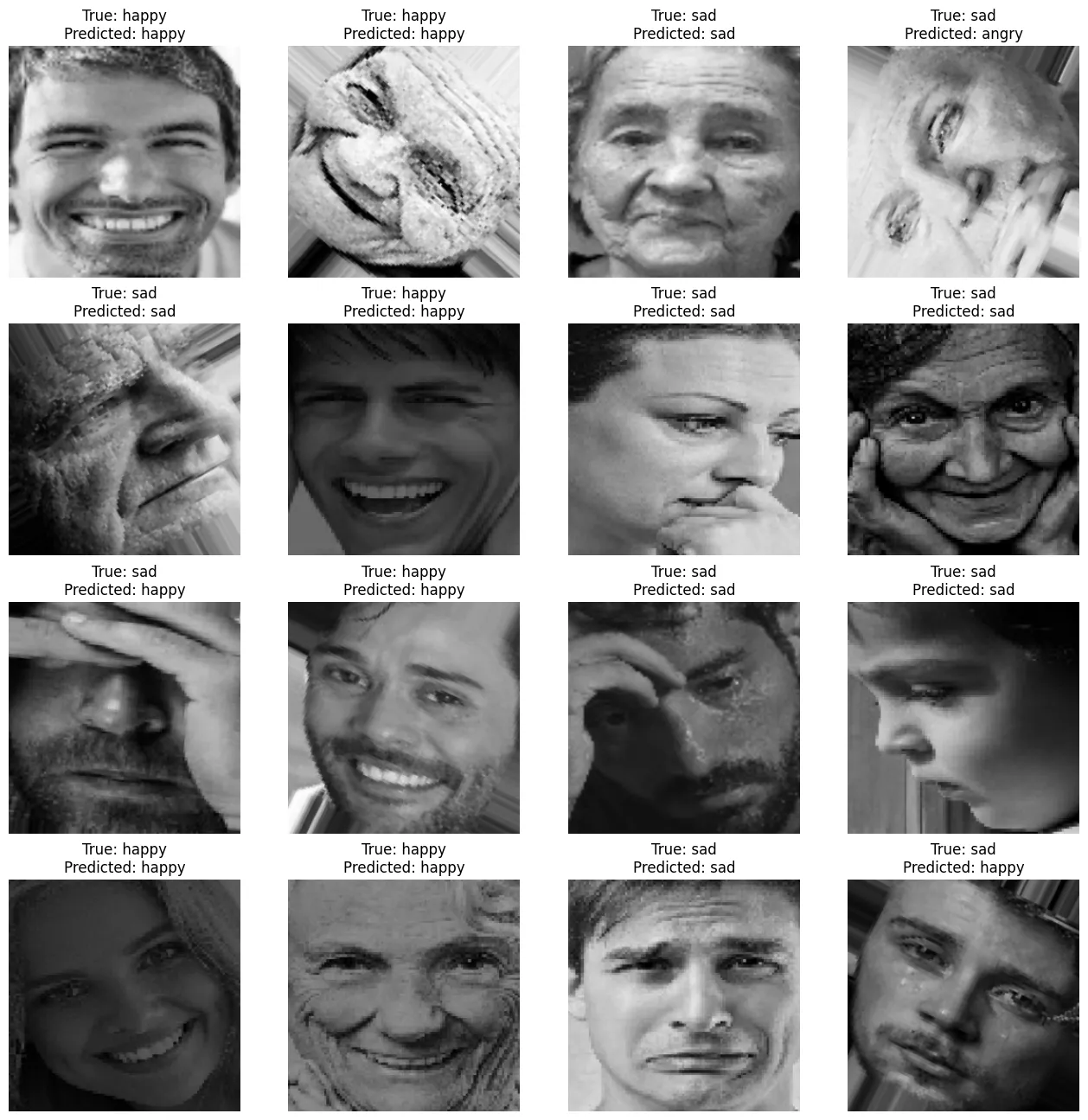

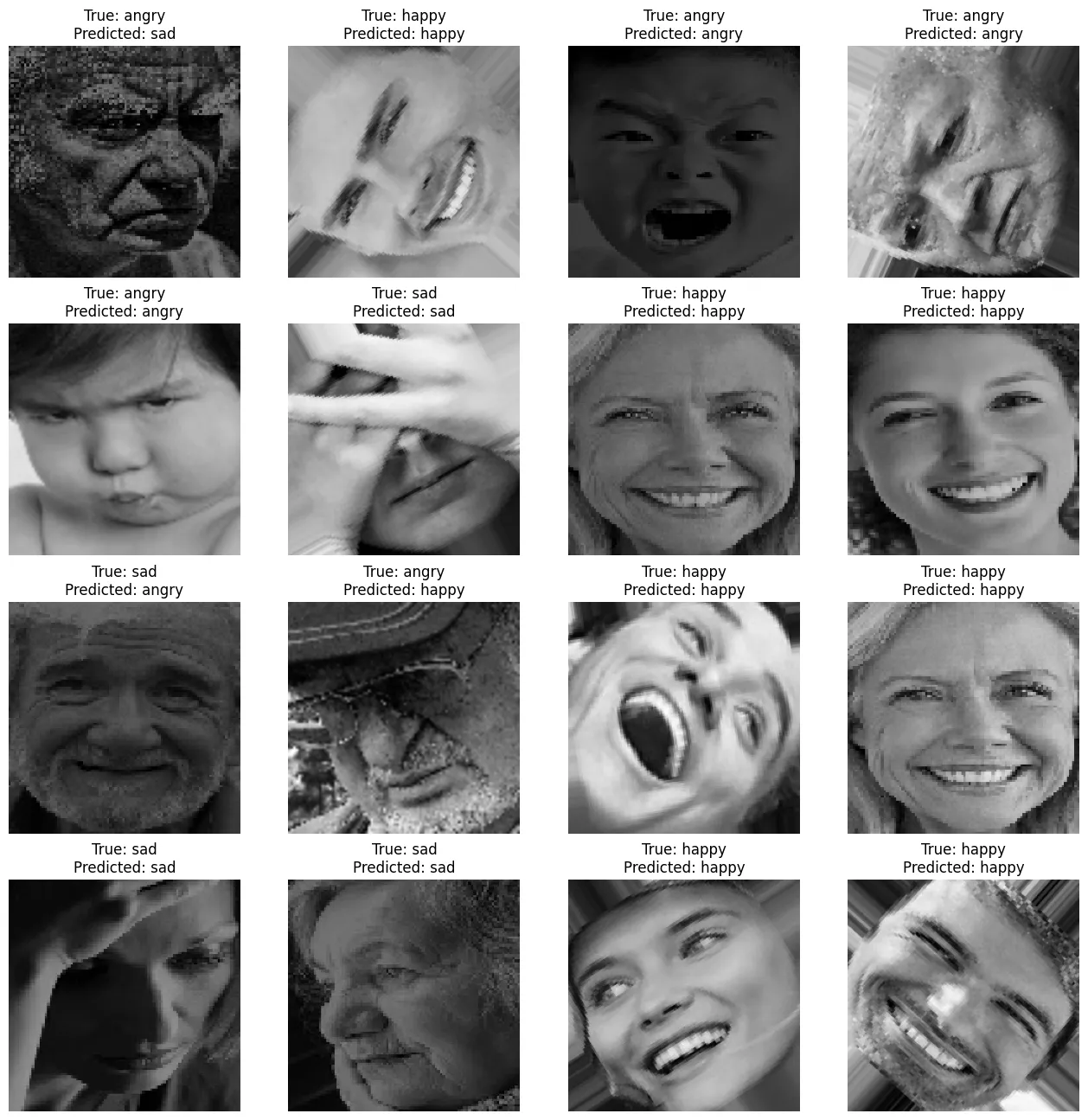

plt.figure(figsize=(16,16))

for images, labels in test_dataset.take(1):

for i in range(16):

ax = plt.subplot(4,4,i+1)

true = "True: " + LABELS[tf.argmax(labels[i], axis=0).numpy()]

pred = "Predicted: " + LABELS[

tf.argmax(model_lenet(tf.expand_dims(images[i], axis=0)).numpy(), axis=1).numpy()[0]

]

plt.title(

true + "\n" + pred

)

plt.imshow(images[i]/255.)

plt.axis('off')

plt.savefig('assets/tf_Emotion_Detection_13.webp', bbox_inches='tight')

y_pred = []

y_test = []

for img, label in test_ds:

y_pred.append(model_lenet(img))

y_test.append(label.numpy())

conf_mtx = ConfusionMatrixDisplay(

confusion_matrix=confusion_matrix(

np.argmax(y_test[:-1], axis=-1).flatten(),

np.argmax(y_pred[:-1], axis=-1).flatten()

),

display_labels=LABELS

)

fig, ax = plt.subplots(figsize=(16,12))

conf_mtx.plot(ax=ax, cmap='plasma', include_values=False)

plt.savefig('assets/tf_Emotion_Detection_14.webp', bbox_inches='tight')

Saving the Model

```python
# Save the weights
model_lenet.save_weights('./saved_weights/cutmix_weights')

# # Create a new model instance (create_model() stands for a function
# # that rebuilds the same architecture)
# model_lenet = create_model()
# # Restore the weights
# model_lenet.load_weights('./saved_weights/cutmix_weights')

# Save the complete model (architecture, weights and optimizer state)
model_lenet.save('saved_model/cutmix_model')
restored_model = tf.keras.models.load_model('saved_model/cutmix_model')

# Check its architecture
restored_model.summary()
restored_model.evaluate(test_ds)
# loss: 0.6832 - accuracy: 0.7226 - topk-accuracy: 0.9083
```

Saving the Augmented Dataset

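The TFRecord format is a simple format for storing a sequence of binary records. Each record written below is a serialized tf.train.Example, which is a protocol buffer message; protocol buffers are a cross-platform, cross-language library for efficient serialization of structured data.

```python
# Assumed values: the number of shards and the file name pattern
# are not defined in the original notebook
SHARDS = 10
PATH = './tfrecords/shard_{:02d}.tfrecord'
tf.io.gfile.makedirs('./tfrecords')

training_unbatched = train_ds_cmu.unbatch()
testing_unbatched = test_ds.unbatch()

def create_example(image, label):
    # Wrap the JPEG bytes and the integer label in a tf.train.Example
    bytes_feature = tf.train.Feature(
        bytes_list=tf.train.BytesList(value=[image]))
    int_feature = tf.train.Feature(
        int64_list=tf.train.Int64List(value=[label]))
    example = tf.train.Example(
        features=tf.train.Features(feature={
            'images': bytes_feature,
            'labels': int_feature,
        }))
    return example.SerializeToString()

def encode_image(image, label):
    # The CutMix images are float32 in [0, 255]; convert_image_dtype would assume
    # [0, 1] for floats, so clip and cast to uint8 directly
    image = tf.cast(tf.clip_by_value(image, 0.0, 255.0), tf.uint8)
    image = tf.io.encode_jpeg(image)
    # argmax stores only the dominant class of the mixed CutMix label
    return image, tf.argmax(label)

encoded_ds = training_unbatched.map(encode_image)

# Iterate the randomly shuffled and augmented dataset only once: calling .shard()
# once per shard would re-run the pipeline each time, so the shards would overlap.
# Record k goes to shard k % SHARDS, the same split that .shard() makes.
writers = [tf.io.TFRecordWriter(PATH.format(i)) for i in range(SHARDS)]
for index, (image, label) in enumerate(encoded_ds.as_numpy_iterator()):
    writers[index % SHARDS].write(create_example(image, label))
for writer in writers:
    writer.close()
```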
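Dataset.shard(n, i) keeps the elements whose index is congruent to i modulo n. Writing record number k to shard k % n during a single pass produces the same split without iterating the dataset once per shard, which matters when every iteration reshuffles and re-augments the data. A stdlib sketch comparing the two on a plain list (the helper names are illustrative):

```python
def shard(elements, num_shards, index):
    """Mimic tf.data.Dataset.shard: every num_shards-th element starting at index."""
    return elements[index::num_shards]

def round_robin(elements, num_shards):
    """Single pass: element k goes to shard k % num_shards."""
    shards = [[] for _ in range(num_shards)]
    for k, element in enumerate(elements):
        shards[k % num_shards].append(element)
    return shards

records = list(range(10))
print([shard(records, 3, i) for i in range(3)])
print(round_robin(records, 3))
```

On a fixed list both approaches give identical shards; on a pipeline with random shuffling, only the single pass guarantees that every record lands in exactly one shard.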

Reconstructing the Augmented Dataset (This part does not work yet!)

```python
# Load the first SHARDS - 2 shards
shards_list = [PATH.format(i) for i in range(SHARDS-2)]
loaded_training_ds = tf.data.TFRecordDataset(filenames = shards_list)

def parse_tfrecords(example):
    feature_description = {
        'images': tf.io.FixedLenFeature([], tf.string),
        'labels': tf.io.FixedLenFeature([], tf.int64)
    }
    example = tf.io.parse_single_example(example, feature_description)
    # decode_jpeg returns a rank-3 uint8 tensor; cast it to float32 but keep the
    # [0, 255] range, because the model's Rescaling layer divides by 255 itself
    example['images'] = tf.cast(
        tf.io.decode_jpeg(example['images'], channels = 3),
        tf.float32
    )
    return example['images'], example['labels']

parsed_ds = (
    loaded_training_ds
    .map(parse_tfrecords)
    .batch(BATCH)
    .prefetch(tf.data.AUTOTUNE)
)

for i in train_ds_cmu.take(1):
    print(i)
# tf.Tensor: shape=(32, 256, 256, 3)

for i in parsed_ds.take(1):
    print(i)
# tf.Tensor: shape=(32, 256, 256, 3)
```

```python
model_lenet2 = Sequential([
    InputLayer(input_shape=(None, None, 3)),
    resize_rescale_layers,
    Conv2D(filters=FILTERS, kernel_size=KERNEL, strides=STRIDES,
           activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    MaxPool2D(pool_size=POOL, strides=STRIDES*2),
    Dropout(rate=DORATE),
    Conv2D(filters=FILTERS*2+4, kernel_size=KERNEL, strides=STRIDES,
           activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    MaxPool2D(pool_size=POOL, strides=STRIDES*2),
    Flatten(),
    Dense(DENSE1, activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    Dropout(rate=DORATE),
    Dense(DENSE2, activation='relu', kernel_regularizer=L2(REGRATE)),
    BatchNormalization(),
    Dense(NLABELS, activation='softmax', name='Output')
])

model_lenet2.summary()
# Total params: 6,153,119
# Trainable params: 6,152,855
# Non-trainable params: 264
```

```python
# The stored labels are integer class indices, so use the sparse loss and metric
loss_function2 = SparseCategoricalCrossentropy()
metrics2 = [SparseCategoricalAccuracy(name="accuracy")]

model_lenet2.compile(
    optimizer = Adam(learning_rate = LR),
    loss = loss_function2,
    metrics = metrics2
)

history_lenet2 = model_lenet2.fit(
    parsed_ds,
    epochs = EPOCHS,
    verbose = 1
)
```

Transfer Learning

Building the Efficient TF Model

```python
# # transfer learning
# backbone = tf.keras.applications.efficientnet.EfficientNetB4(
#     include_top=False,
#     weights='imagenet',
#     input_shape=(SIZE, SIZE, 3)
# )

# ERROR - EfficientNetV1 cannot be saved:
# ValueError: Unable to create a Keras model from SavedModel at saved_model/efficient_model. This SavedModel was exported with `tf.saved_model.save`, and lacks the Keras metadata file. Please save your Keras model by calling `model.save` or `tf.keras.models.save_model`. Note that you can still load this SavedModel with `tf.saved_model.load`.
```

Since I was unable to save the model above, I checked the Keras documentation for alternatives:

| Model | Size (MB) | Top-1 Accuracy | Top-5 Accuracy | Parameters | Depth | Time (ms) per inference step (CPU) | Time (ms) per inference step (GPU) |
|---|---|---|---|---|---|---|---|
| MobileNetV2 | 14 | 71.3% | 90.1% | 3.5M | 105 | 25.9 | 3.8 |
| EfficientNetB4 | 75 | 82.9% | 96.4% | 19.5M | 258 | 308.3 | 15.1 |
| EfficientNetV2S | 88 | 83.9% | 96.7% | 21.6M | - | - | - |

- Training scores (evaluated on the test set):
  - EfficientNetB4: loss 0.5003, accuracy 0.7954, topk_accuracy 0.9451, ~80 MB
  - EfficientNetV2S: loss 0.5144, accuracy 0.7968, topk_accuracy 0.9359, 104.6 MB
  - MobileNetV3Small: loss 0.5638, accuracy 0.7682, topk_accuracy 0.9328, 12.8 MB

```python
# transfer learning
backbone = tf.keras.applications.efficientnet_v2.EfficientNetV2S(
    include_top=False,
    weights='imagenet',
    input_shape=(SIZE, SIZE, 3),
    include_preprocessing=True  # the model takes raw [0, 255] pixels
)

# Freeze the pretrained backbone; only the new head is trained
backbone.trainable = False

efficient_model = tf.keras.Sequential([
    Input(shape=(SIZE, SIZE, 3)),
    backbone,
    GlobalAveragePooling2D(),
    Dense(DENSE1, activation='relu'),
    BatchNormalization(),
    Dense(DENSE2, activation='relu'),
    Dense(NLABELS, activation='softmax')
])

efficient_model.summary()

# Save the model whenever val_accuracy improves
# (the full model, since save_weights_only defaults to False)
checkpoint_callback = ModelCheckpoint(
    'best_weights',
    monitor='val_accuracy',
    mode='max',
    verbose=1,
    save_best_only=True
)

loss_function = CategoricalCrossentropy()
metrics = [CategoricalAccuracy(name='accuracy'), TopKCategoricalAccuracy(k=2, name='topk_accuracy')]

efficient_model.compile(
    optimizer = Adam(learning_rate=LR),
    loss = loss_function,
    metrics = metrics
)
```
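With backbone.trainable = False, only the classification head is updated during training. Its size depends on how many feature channels the backbone emits; the sketch below counts the head's parameters for a given channel count. The value 1280 used in the example is an assumption about EfficientNetV2S's final feature map, so compare it with the output of efficient_model.summary().

```python
def head_params(channels, dense1=100, dense2=10, labels=3):
    """(trainable, non_trainable) parameters of GAP -> Dense -> BN -> Dense -> Dense."""
    trainable = channels * dense1 + dense1  # Dense(DENSE1); pooling has no weights
    trainable += 2 * dense1                 # BatchNormalization gamma and beta
    non_trainable = 2 * dense1              # BatchNormalization moving mean and variance
    trainable += dense1 * dense2 + dense2   # Dense(DENSE2)
    trainable += dense2 * labels + labels   # Dense(NLABELS)
    return trainable, non_trainable

print(head_params(1280))  # assumed channel count for EfficientNetV2S
```

Under that assumption, fewer than 130,000 weights are trained, compared with the roughly 20 million frozen backbone parameters listed in the table above.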

Model Training

```python
efficient_history = efficient_model.fit(
    training_dataset,
    validation_data = testing_dataset,
    epochs = EPOCHS,
    verbose = 1,
    callbacks = [checkpoint_callback]  # the checkpoint only runs when passed here
)
```
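With monitor='val_accuracy', mode='max' and save_best_only=True, ModelCheckpoint writes to 'best_weights' only in epochs where the monitored value beats the best value seen so far, so the saved file ends up holding the best epoch rather than the last one. The callback only runs when it is passed to fit() through callbacks=[...]. A stdlib emulation of the saving rule (the accuracy values are made up for illustration):

```python
def checkpoint_epochs(history, mode='max'):
    """Return the 1-based epochs in which a save_best_only checkpoint is written."""
    if mode == 'max':
        improved = lambda new, best: new > best
    else:
        improved = lambda new, best: new < best
    best = None
    saved = []
    for epoch, value in enumerate(history, start=1):
        if best is None or improved(value, best):
            best = value
            saved.append(epoch)
    return saved

val_accuracy = [0.61, 0.70, 0.68, 0.75, 0.74]  # illustrative values
print(checkpoint_epochs(val_accuracy))  # [1, 2, 4]
```

Monitoring a loss instead would use mode='min', where an epoch is saved only when the value decreases.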

Model Evaluation

```python
efficient_model.evaluate(testing_dataset)
# loss: 0.5144 - accuracy: 0.7968 - topk_accuracy: 0.9359

plt.plot(efficient_history.history['loss'])
plt.plot(efficient_history.history['val_loss'])
plt.title('Model Loss')
plt.ylabel('loss')
plt.xlabel('epoch')
plt.legend(['train_loss', 'val_loss'])
plt.show()

plt.plot(efficient_history.history['accuracy'])
plt.plot(efficient_history.history['val_accuracy'])
plt.title('Model Accuracy')
plt.ylabel('accuracy')
plt.xlabel('epoch')
plt.legend(['train_accuracy', 'val_accuracy'])
plt.show()

# The model has a fixed SIZE x SIZE input, so resize first.
# cv.imread loads BGR; the training images are RGB (see cv.COLOR_BGR2RGB)
test_image = cv.imread('./dataset/happy.jpg')
test_image = cv.resize(test_image, (SIZE, SIZE))
img = tf.constant(test_image, dtype=tf.float32)
img = tf.expand_dims(img, axis=0)
label = efficient_model(img).numpy()
print(label)
# [[7.2473467e-06 4.2083409e-02 9.5790941e-01]]
print(LABELS[tf.argmax(label, axis=1).numpy()[0]])
# sad

test_image = cv.imread('./dataset/sad.jpg')
test_image = cv.resize(test_image, (SIZE, SIZE))
img = tf.constant(test_image, dtype=tf.float32)
img = tf.expand_dims(img, axis=0)
label = efficient_model(img).numpy()
print(label)
# [[0.06096885 0.03196466 0.90706646]]
print(LABELS[tf.argmax(label, axis=1).numpy()[0]])
# sad

test_image = cv.imread('./dataset/angry.jpg')
test_image = cv.resize(test_image, (SIZE, SIZE))
img = tf.constant(test_image, dtype=tf.float32)
img = tf.expand_dims(img, axis=0)
label = efficient_model(img).numpy()
print(label)
# [[0.87592113 0.08582695 0.03825196]]
print(LABELS[tf.argmax(label, axis=1).numpy()[0]])
# angry

plt.figure(figsize=(16,16))

for images, labels in testing_dataset.take(1):
    for i in range(16):
        ax = plt.subplot(4,4,i+1)
        true = "True: " + LABELS[tf.argmax(labels[i], axis=0).numpy()]
        pred = "Predicted: " + LABELS[
            tf.argmax(efficient_model(tf.expand_dims(images[i], axis=0)).numpy(), axis=1).numpy()[0]
        ]
        plt.title(true + "\n" + pred)
        plt.imshow(images[i]/255.)
        plt.axis('off')

plt.savefig('assets/tf_Emotion_Detection_17.webp', bbox_inches='tight')

y_pred = []
y_test = []

for img, label in testing_dataset:
    y_pred.append(efficient_model(img).numpy())
    y_test.append(label.numpy())

# Concatenate all batches (including the smaller last one) and convert to class indices
y_test = np.concatenate(y_test).argmax(axis=-1)
y_pred = np.concatenate(y_pred).argmax(axis=-1)

conf_mtx = ConfusionMatrixDisplay(
    confusion_matrix=confusion_matrix(y_test, y_pred),
    display_labels=LABELS
)

fig, ax = plt.subplots(figsize=(16,12))
conf_mtx.plot(ax=ax, cmap='plasma', include_values=False)
plt.savefig('assets/tf_Emotion_Detection_18.webp', bbox_inches='tight')
```

Saving the Model

```python
tf.keras.saving.save_model(
    efficient_model, 'saved_model/efficient_model', overwrite=True, save_format='tf'
)

# restore the model
restored_model = tf.keras.saving.load_model('saved_model/efficient_model')

# Check its architecture
restored_model.summary()
restored_model.evaluate(testing_dataset)
# loss: 0.5144 - accuracy: 0.7968 - topk_accuracy: 0.9359
```

Building the MobileNet TF Model

```python
# transfer learning
backbone2 = tf.keras.applications.MobileNetV3Small(
    input_shape=(SIZE, SIZE, 3),
    alpha=1.0,
    minimalistic=True,
    include_top=False,
    weights='imagenet',
    dropout_rate=0.2,  # only used by the classification top, which is excluded here
    include_preprocessing=True
)

backbone2.trainable = False

mobilenet_model = tf.keras.Sequential([
    Input(shape=(SIZE, SIZE, 3)),
    backbone2,
    GlobalAveragePooling2D(),
    Dense(DENSE1, activation='relu'),
    BatchNormalization(),
    Dense(DENSE2, activation='relu'),
    Dense(NLABELS, activation='softmax')
])

mobilenet_model.summary()

checkpoint_callback = ModelCheckpoint(
    'best_weights',
    monitor='val_accuracy',
    mode='max',
    verbose=1,
    save_best_only=True
)

loss_function = CategoricalCrossentropy()
metrics = [CategoricalAccuracy(name='accuracy'), TopKCategoricalAccuracy(k=2, name='topk_accuracy')]

mobilenet_model.compile(
    optimizer = Adam(learning_rate=LR),
    loss = loss_function,
    metrics = metrics
)
```

Model Training

```python
# Debugging aid: runs tf.function code eagerly, which makes training much slower
tf.config.run_functions_eagerly(True)

mobilenet_history = mobilenet_model.fit(
    training_dataset,
    validation_data = testing_dataset,
    epochs = EPOCHS,
    verbose = 1
)
# loss: 0.4242
# accuracy: 0.8263
# topk_accuracy: 0.9597
# val_loss: 0.5638
# val_accuracy: 0.7682
# val_topk_accuracy: 0.9328
```

Model Evaluation

mobilenet_model.evaluate(testing_dataset)

# loss: 0.5144 - accuracy: 0.7968 - topk_accuracy: 0.9359

plt.plot(mobilenet_history.history['loss'])

plt.plot(mobilenet_history.history['val_loss'])

plt.title('Model Loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train_loss', 'val_loss'])

plt.show()

plt.plot(mobilenet_history.history['accuracy'])

plt.plot(mobilenet_history.history['val_accuracy'])

plt.title('Model Accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train_accuracy', 'val_accuracy'])

plt.show()

test_image = cv.imread('./dataset/happy.jpg')

test_image = cv.resize(test_image, (SIZE, SIZE))

img = tf.constant(test_image, dtype=tf.float32)

img = tf.expand_dims(img, axis=0)

label = mobilenet_model(img).numpy()

print(label)

# [[2.512952e-04 9.718944e-01 2.785430e-02]]

print(LABELS[tf.argmax(label, axis=1).numpy()[0]])

# happy

test_image = cv.imread('./dataset/sad.jpg')

test_image = cv.resize(test_image, (SIZE, SIZE))

img = tf.constant(test_image, dtype=tf.float32)

img = tf.expand_dims(img, axis=0)

label = mobilenet_model(img).numpy()

print(label)

# [[4.4993469e-03 9.9456584e-01 9.3488337e-04]]

print(LABELS[tf.argmax(label, axis=1).numpy()[0]])

# happy

test_image = cv.imread('./dataset/angry.jpg')

test_image = cv.resize(test_image, (SIZE, SIZE))

img = tf.constant(test_image, dtype=tf.float32)

img = tf.expand_dims(img, axis=0)

label = mobilenet_model(img).numpy()

print(label)

# [[9.8563331e-01 1.4297843e-02 6.8810325e-05]]

print(LABELS[tf.argmax(label, axis=1).numpy()[0]])

# angry

plt.figure(figsize=(16,16))

for images, labels in testing_dataset.take(1):

for i in range(16):

ax = plt.subplot(4,4,i+1)

true = "True: " + LABELS[tf.argmax(labels[i], axis=0).numpy()]

pred = "Predicted: " + LABELS[

tf.argmax(mobilenet_model(tf.expand_dims(images[i], axis=0)).numpy(), axis=1).numpy()[0]

]

plt.title(

true + "\n" + pred

)

plt.imshow(images[i]/255.)

plt.axis('off')

plt.savefig('assets/tf_Emotion_Detection_21.webp', bbox_inches='tight')

y_pred = []

y_test = []

for img, label in testing_dataset:

    y_pred.append(mobilenet_model(img).numpy())

    y_test.append(label.numpy())

# concatenate the batches (the last one is smaller) so that no test image is dropped

y_pred = np.concatenate(y_pred)

y_test = np.concatenate(y_test)

conf_mtx = ConfusionMatrixDisplay(

    confusion_matrix=confusion_matrix(

        np.argmax(y_test, axis=-1),

        np.argmax(y_pred, axis=-1)

    ),

    display_labels=LABELS

)

fig, ax = plt.subplots(figsize=(16,12))

conf_mtx.plot(ax=ax, cmap='plasma', include_values=False)

plt.savefig('assets/tf_Emotion_Detection_22.webp', bbox_inches='tight')
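The number of test images is generally not a multiple of `BATCH`, so the last batch from `testing_dataset` is smaller than the others. As a result, the per-batch arrays cannot be stacked into one regular (batches, BATCH, 3) array; recent NumPy versions raise a `ValueError` for such ragged input. Concatenating along the sample axis keeps every test image. The sketch below uses made-up batch sizes and random scores and builds the confusion matrix with `np.add.at` to show the shape bookkeeping. It is an illustration only and is not meant to replace `sklearn.metrics.confusion_matrix`.

```python
import numpy as np

rng = np.random.default_rng(0)
n_classes = 3
batch_sizes = [32, 32, 7]  # made-up: the final batch is smaller than BATCH

# one-hot labels and softmax-like scores, one array per batch
y_test_batches = [np.eye(n_classes)[rng.integers(0, n_classes, size=b)] for b in batch_sizes]
y_pred_batches = [rng.random((b, n_classes)) for b in batch_sizes]

# concatenating along axis 0 yields one (n_samples, n_classes) array per side
y_true = np.argmax(np.concatenate(y_test_batches), axis=1)
y_hat = np.argmax(np.concatenate(y_pred_batches), axis=1)

# confusion matrix: rows = true class, columns = predicted class
cm = np.zeros((n_classes, n_classes), dtype=int)
np.add.at(cm, (y_true, y_hat), 1)
print(cm.sum())  # 71: every sample is counted
```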

Saving the Model

tf.keras.saving.save_model(

mobilenet_model, 'saved_model/mobilenet_model', overwrite=True, save_format='tf'

)

# restore the model

restored_model2 = tf.keras.saving.load_model('saved_model/mobilenet_model')

# Check its architecture

restored_model2.summary()

restored_model2.evaluate(testing_dataset)

# loss: 0.5638 - accuracy: 0.7682 - topk_accuracy: 0.9328

TFLite Conversion

# Convert the model into TF Lite.

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model/mobilenet_model')

tflite_model = converter.convert()

# Save the model.

with open('saved_model/mobilenet_model.tflite', 'wb') as f:

    f.write(tflite_model)

Finetuning the MobileNet TF Model

# transfer learning

backbone = tf.keras.applications.MobileNetV3Small(

input_shape=(SIZE, SIZE, 3),

alpha=1.0,

minimalistic=True,

include_top=False,

weights='imagenet',

dropout_rate=0.2,

include_preprocessing=True

)

backbone.trainable = False

# mobilenet_model = tf.keras.Sequential([

# Input(shape=(SIZE, SIZE, 3)),

# backbone,

# GlobalAveragePooling2D(),

# Dense(DENSE1, activation='relu'),

# BatchNormalization(),

# Dense(DENSE2, activation='relu'),

# Dense(NLABELS, activation='softmax')

# ])

inputs = Input(shape=(SIZE,SIZE,3))

x = backbone(inputs, training=False)

x = GlobalAveragePooling2D()(x)

x = Dense(DENSE1, activation='relu')(x)

x = BatchNormalization()(x)

x = Dense(DENSE2, activation='relu')(x)

output = Dense(NLABELS, activation='softmax')(x)

mobilenet_model = Model(inputs, output)

mobilenet_model.summary()
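Calling the backbone with `training=False` matters for the fine-tuning step later. It keeps the backbone's `BatchNormalization` layers in inference mode. In that mode they normalize with their stored moving mean and variance (learned on ImageNet) rather than with the statistics of the current batch, and the stored statistics are not updated even after `backbone.trainable = True`. The simplified numpy sketch below shows the two normalization modes for one channel, with the learned scale fixed at 1 and the offset at 0. The activations and moving statistics are made-up values.

```python
import numpy as np

EPS = 1e-3  # default epsilon of Keras BatchNormalization

def batchnorm(x, moving_mean, moving_var, training):
    """Normalize activations of shape (batch, channels) with gamma=1, beta=0."""
    if training:
        # training mode: statistics of the current batch
        mean, var = x.mean(axis=0), x.var(axis=0)
    else:
        # inference mode: stored moving statistics
        mean, var = moving_mean, moving_var
    return (x - mean) / np.sqrt(var + EPS)

x = np.array([[4.0], [6.0]])  # made-up activations: batch of 2, one channel
moving_mean, moving_var = np.array([0.0]), np.array([1.0])  # made-up stored statistics
print(batchnorm(x, moving_mean, moving_var, training=True).ravel())
print(batchnorm(x, moving_mean, moving_var, training=False).ravel())
```

In training mode, the output depends on which images happen to share a batch. In inference mode, every image is normalized the same way, which is what the pretrained backbone expects.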

checkpoint_callback = ModelCheckpoint(

'best_weights',

monitor='val_accuracy',

mode='max',

verbose=1,

save_best_only=True

)

early_stopping_callback = EarlyStopping(

monitor='val_accuracy',

patience=10,

restore_best_weights=True

)
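With `monitor='val_accuracy'` and `patience=10`, training stops once validation accuracy has failed to improve for 10 consecutive epochs. Because `restore_best_weights=True`, the model is then rolled back to its best epoch. The pure-Python sketch below applies that rule to a list of per-epoch values. It is a simplification (Keras also supports options such as `min_delta` and `baseline`), and the name `early_stop_epoch` is hypothetical.

```python
def early_stop_epoch(values, patience=10):
    """Simulate max-mode early stopping on a list of per-epoch metric values.

    Returns (stopped_epoch, best_epoch), both 0-based; stopped_epoch is
    None if training would run through every epoch.
    """
    best, best_epoch, wait = float('-inf'), 0, 0
    for epoch, value in enumerate(values):
        if value > best:          # strict improvement resets the counter
            best, best_epoch, wait = value, epoch, 0
        else:
            wait += 1
            if wait >= patience:  # no improvement for `patience` epochs in a row
                return epoch, best_epoch
    return None, best_epoch
```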

loss_function = CategoricalCrossentropy()

metrics = [CategoricalAccuracy(name='accuracy'), TopKCategoricalAccuracy(k=2, name='topk_accuracy')]

mobilenet_model.compile(

optimizer = Adam(learning_rate=LR),

loss = loss_function,

metrics = metrics

)

Model Training

# runs tf.function code eagerly: helpful for debugging, but makes training considerably slower

tf.config.run_functions_eagerly(True)

mobilenet_history = mobilenet_model.fit(

training_dataset,

validation_data = testing_dataset,

epochs = EPOCHS,

verbose = 1,

callbacks=[checkpoint_callback, early_stopping_callback]

)

# loss: 0.4372

# accuracy: 0.8188

# topk_accuracy: 0.9547

# val_loss: 0.5934

# val_accuracy: 0.7643

# val_topk_accuracy: 0.9320

Model Evaluation

mobilenet_model.evaluate(testing_dataset)

# loss: 0.5934 - accuracy: 0.7643 - topk_accuracy: 0.9320

Model Finetuning

backbone.trainable = True

mobilenet_model.compile(

optimizer = Adam(learning_rate=LR/100),

loss = loss_function,

metrics = metrics

)

mobilenet_history = mobilenet_model.fit(

training_dataset,

validation_data = testing_dataset,

epochs = EPOCHS,

verbose = 1,

callbacks=[checkpoint_callback, early_stopping_callback]

)

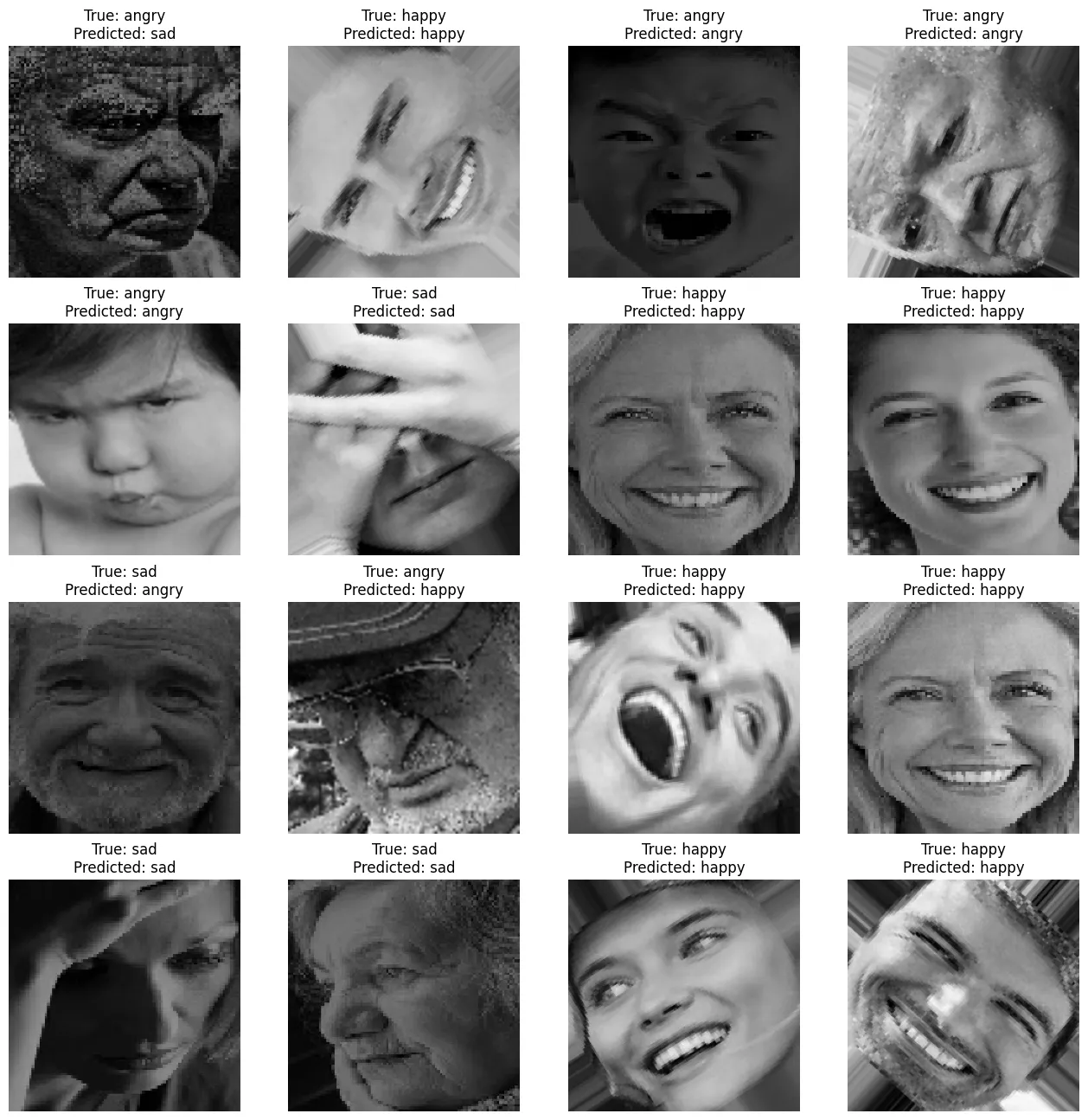

# loss: 0.2703

# accuracy: 0.8912

# topk_accuracy: 0.9763

# val_loss: 0.3906

# val_accuracy: 0.8455

# val_topk_accuracy: 0.9627

Model Evaluation

mobilenet_model.evaluate(testing_dataset)

# loss: 0.3906 - accuracy: 0.8455 - topk_accuracy: 0.9627

plt.plot(mobilenet_history.history['loss'])

plt.plot(mobilenet_history.history['val_loss'])

plt.title('Model Loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train_loss', 'val_loss'])

plt.show()

plt.plot(mobilenet_history.history['accuracy'])

plt.plot(mobilenet_history.history['val_accuracy'])

plt.title('Model Accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train_accuracy', 'val_accuracy'])

plt.show()
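The second `fit` call reassigns `mobilenet_history`, so the curves above show only the fine-tuning phase. To get one continuous curve, keep both `History` objects under separate names and concatenate their per-epoch lists. The names `head_history` and `finetune_history` are hypothetical. The start of the second phase can then be marked with `plt.axvline`. This sketch assumes both phases log the same metric keys, as they do here.

```python
def merge_histories(*histories):
    """Concatenate several Keras History.history dicts key by key.

    Returns the merged dict and the epoch indices at which each later
    phase begins (useful for plt.axvline markers).
    """
    merged, boundaries, offset = {}, [], 0
    for i, hist in enumerate(histories):
        if i > 0:
            boundaries.append(offset)  # first epoch of this phase
        for key, values in hist.items():
            merged.setdefault(key, []).extend(values)
        offset += len(next(iter(hist.values())))  # epochs run in this phase
    return merged, boundaries
```

For example, take `combined, starts = merge_histories(head_history.history, finetune_history.history)`. Then plot `combined['val_accuracy']` and call `plt.axvline(s, linestyle='--')` for each `s` in `starts`.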

# note: cv.imread loads channels in BGR order, whereas training used RGB (color_mode='rgb')

test_image = cv.imread('./dataset/happy.jpg')

test_image = cv.resize(test_image, (SIZE, SIZE))

img = tf.constant(test_image, dtype=tf.float32)

img = tf.expand_dims(img, axis=0)

label = mobilenet_model(img).numpy()

print(label)

# [[0.01388984 0.95678276 0.02932744]]

print(LABELS[tf.argmax(label, axis=1).numpy()[0]])

# happy

test_image = cv.imread('./dataset/sad.jpg')

test_image = cv.resize(test_image, (SIZE, SIZE))

img = tf.constant(test_image, dtype=tf.float32)

img = tf.expand_dims(img, axis=0)

label = mobilenet_model(img).numpy()

print(label)

# [[5.297706e-04 9.994018e-01 6.839229e-05]]

print(LABELS[tf.argmax(label, axis=1).numpy()[0]])

# happy

test_image = cv.imread('./dataset/angry.jpg')

test_image = cv.resize(test_image, (SIZE, SIZE))

img = tf.constant(test_image, dtype=tf.float32)

img = tf.expand_dims(img, axis=0)

label = mobilenet_model(img).numpy()

print(label)

# [[9.6875304e-01 3.1238725e-02 8.2295473e-06]]

print(LABELS[tf.argmax(label, axis=1).numpy()[0]])

# angry

plt.figure(figsize=(16,16))

for images, labels in testing_dataset.take(1):

    for i in range(16):

        ax = plt.subplot(4,4,i+1)

        true = "True: " + LABELS[tf.argmax(labels[i], axis=0).numpy()]

        pred = "Predicted: " + LABELS[
            tf.argmax(mobilenet_model(tf.expand_dims(images[i], axis=0)).numpy(), axis=1).numpy()[0]
        ]

        plt.title(true + "\n" + pred)

        plt.imshow(images[i]/255.)

        plt.axis('off')

plt.savefig('assets/tf_Emotion_Detection_25.webp', bbox_inches='tight')

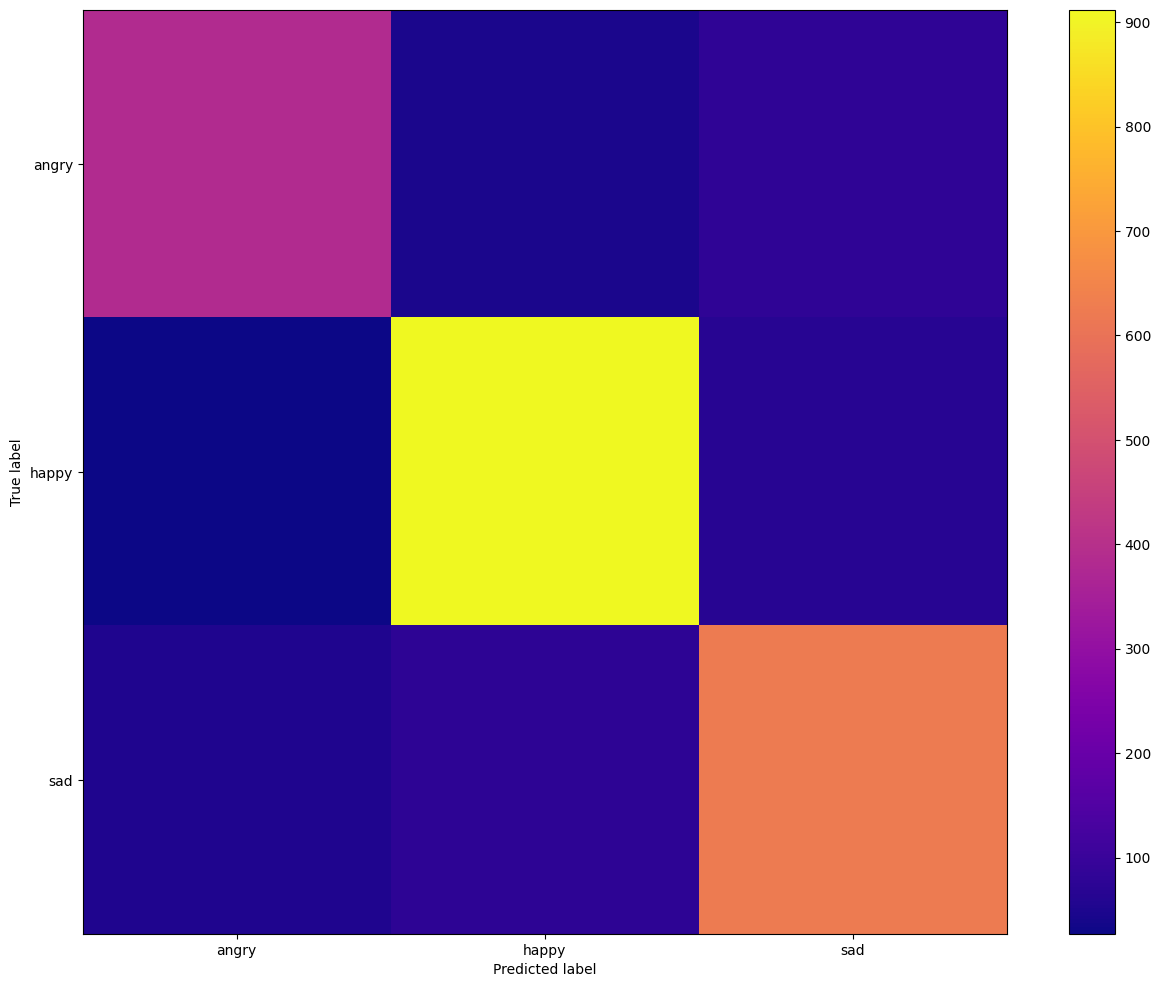

y_pred = []

y_test = []

for img, label in testing_dataset:

    y_pred.append(mobilenet_model(img).numpy())

    y_test.append(label.numpy())

# concatenate the batches (the last one is smaller) so that no test image is dropped

y_pred = np.concatenate(y_pred)

y_test = np.concatenate(y_test)

conf_mtx = ConfusionMatrixDisplay(

    confusion_matrix=confusion_matrix(

        np.argmax(y_test, axis=-1),

        np.argmax(y_pred, axis=-1)

    ),

    display_labels=LABELS

)

fig, ax = plt.subplots(figsize=(16,12))

conf_mtx.plot(ax=ax, cmap='plasma', include_values=False)

plt.savefig('assets/tf_Emotion_Detection_26.webp', bbox_inches='tight')
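Because the confusion matrix is plotted with `include_values=False`, the per-class numbers are not visible. Per-class recall is the diagonal divided by the row sums, and per-class precision is the diagonal divided by the column sums. `sklearn.metrics.classification_report`, already imported at the top, prints the same figures from the label arrays. Here is a numpy sketch using a made-up 3x3 matrix in the same row/column convention as scikit-learn.

```python
import numpy as np

def per_class_metrics(cm):
    """Per-class recall and precision from a confusion matrix
    (rows = true class, columns = predicted class, as in sklearn)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)                 # correctly classified samples per class
    recall = tp / cm.sum(axis=1)     # share of each true class that was found
    precision = tp / cm.sum(axis=0)  # share of each predicted class that was right
    return recall, precision

cm = np.array([[80, 15, 5],    # made-up counts, true angry
               [5, 90, 5],     # true happy
               [10, 30, 60]])  # true sad
recall, precision = per_class_metrics(cm)
```

A class with no true samples (an all-zero row) would make its recall a division by zero, so real matrices may need a guard.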

Saving the Model

tf.keras.saving.save_model(

mobilenet_model, 'saved_model/mobilenet_model', overwrite=True, save_format='tf'

)

# restore the model

restored_model2 = tf.keras.saving.load_model('saved_model/mobilenet_model')

# Check its architecture

restored_model2.summary()

restored_model2.evaluate(testing_dataset)

# loss: 0.3906 - accuracy: 0.8455 - topk_accuracy: 0.9627

TFLite Conversion

# Convert the model into TF Lite.

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model/mobilenet_model')

tflite_model = converter.convert()

# Save the model.

with open('saved_model/mobilenet_model.tflite', 'wb') as f:

    f.write(tflite_model)