OpenCV Count My Money

Based on Murtaza's Workshop - Robotics and AI

The border to Hong Kong has just "kinda" reopened after three years of pandemic, and I am planning to visit as soon as the situation normalizes. All this time a pile of Hong Kong coins has been lying around on my fridge. Let's learn how to use OpenCV to count them. Maybe it is enough for a breakfast at the 7-Eleven at the border :)

Let's first check if OpenCV is up-to-date:

```bash
pip install --upgrade opencv-python
```

Successfully installed opencv-python-4.7.0.68

RTSP Videostream

I will use the RTSP stream of an INSTAR IP camera as the video source for OpenCV:

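```python
import os
import sys

import cv2

RTSP_URL = 'rtsp://admin:instar@192.168.2.120/livestream/11'

# tell OpenCV's FFmpeg backend to receive the RTSP stream over UDP
# (must be set before the capture is opened)
os.environ['OPENCV_FFMPEG_CAPTURE_OPTIONS'] = 'rtsp_transport;udp'

cap = cv2.VideoCapture(RTSP_URL, cv2.CAP_FFMPEG)

if not cap.isOpened():
    print('ERROR :: Cannot open RTSP stream')
    sys.exit(-1)

while True:
    success, img = cap.read()
    # stop if no frame could be read (img would be None)
    if not success:
        break
    cv2.imshow(RTSP_URL, img)
    # keep running until you press `q`
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```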
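`OPENCV_FFMPEG_CAPTURE_OPTIONS` takes FFmpeg options as `key;value` pairs, and multiple pairs are separated by `|`. That is why the single option above reads `rtsp_transport;udp`. If you ever need more than one option, a tiny helper keeps the string readable. The function name `ffmpeg_capture_options` is hypothetical. The variable still has to be set before `cv2.VideoCapture` opens the stream:

```python
import os


def ffmpeg_capture_options(options):
    """Join FFmpeg options into the 'key;value|key;value' format that
    OpenCV's FFmpeg backend reads from OPENCV_FFMPEG_CAPTURE_OPTIONS.
    (hypothetical helper, not part of OpenCV)"""
    return "|".join(f"{key};{value}" for key, value in options.items())


# same setting as in the script above
os.environ["OPENCV_FFMPEG_CAPTURE_OPTIONS"] = ffmpeg_capture_options(
    {"rtsp_transport": "udp"}
)
print(os.environ["OPENCV_FFMPEG_CAPTURE_OPTIONS"])
```

If UDP packets get dropped on your network, changing `"udp"` to `"tcp"` is usually the first thing worth trying.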

Detecting Contours

I am going to use cvzone, a computer vision package that makes it easy to run image processing and AI functions. At its core it uses the OpenCV and MediaPipe libraries. We will use it to find the object contours for us:

```bash
pip install cvzone
```

Successfully installed cvzone-1.5.6

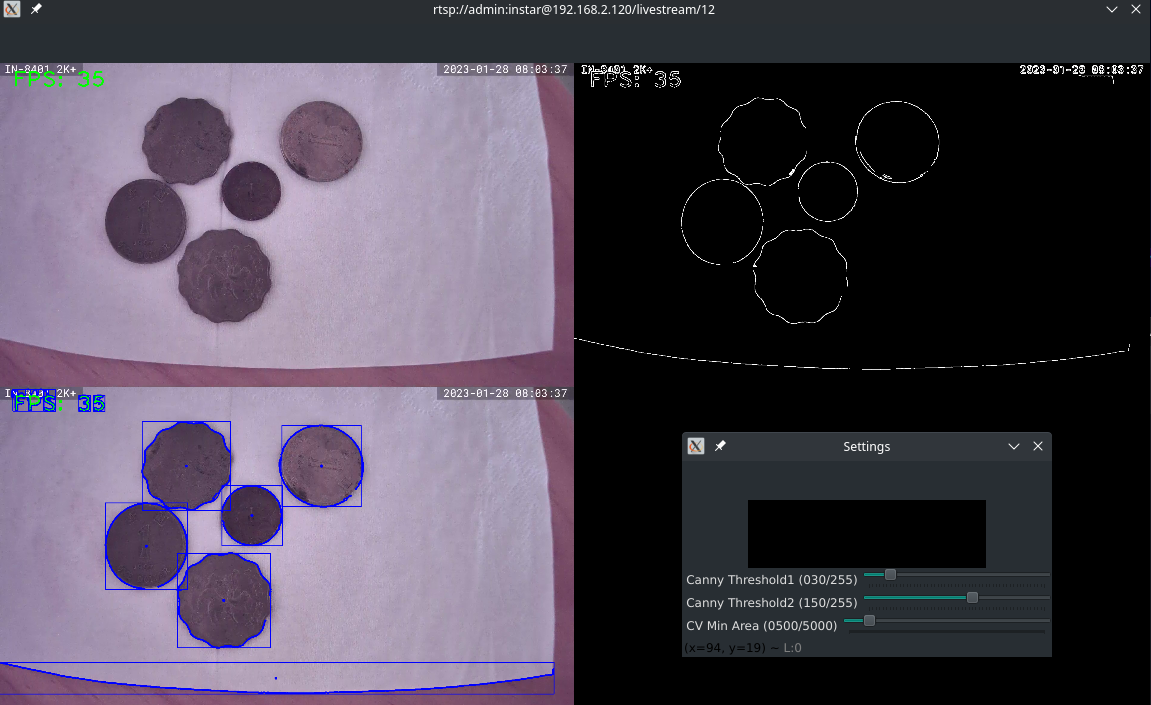

To be able to detect shapes, we first need to simplify the image. So instead of working with the raw frame, we pass it to a pre-processing function that returns a modified version of it:

```python
# while the stream runs, do the detection
while True:
    success, img = cap.read()
    # pre-process each frame
    img_prep = preProcessing(img)
```

The pre-processing function should emphasize the contours of the objects in the image, which helps us detect them. We can also add sliders that let us find the optimal thresholds for the Canny edge detector on the fly:

```python
import cv2
import numpy as np


# trackbar callback: does nothing, values are read with getTrackbarPos
def empty(a):
    pass


# create sliders to adjust the Canny filter thresholds on the fly
cv2.namedWindow("Settings")
cv2.resizeWindow("Settings", 640, 240)
cv2.createTrackbar("Threshold1", "Settings", 65, 255, empty)
cv2.createTrackbar("Threshold2", "Settings", 150, 255, empty)
# slider for the minimum contour area read in the processing step;
# default (1000) and maximum (50000) are assumptions
cv2.createTrackbar("CV Min Area", "Settings", 1000, 50000, empty)


# prepare the image for detection
def preProcessing(img):
    # add some blur to reduce noise
    img_prep = cv2.GaussianBlur(img, (5, 5), 3)
    # use the Canny filter to enhance contours,
    # with thresholds taken from the sliders
    threshold1 = cv2.getTrackbarPos("Threshold1", "Settings")
    threshold2 = cv2.getTrackbarPos("Threshold2", "Settings")
    img_prep = cv2.Canny(img_prep, threshold1, threshold2)
    # make features more prominent by dilation
    kernel = np.ones((2, 2), np.uint8)
    img_prep = cv2.dilate(img_prep, kernel, iterations=1)
    # morphological closing to close gaps in the geometries
    img_prep = cv2.morphologyEx(img_prep, cv2.MORPH_CLOSE, kernel)
    return img_prep
```
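Both Canny sliders feed a hysteresis step. According to the OpenCV documentation, the larger threshold finds the initial segments of strong edges and the smaller one is used for edge linking. A weaker pixel therefore only survives if it is connected to a strong one. The pure-Python sketch below shows only that linking step on a tiny grid of made-up gradient magnitudes. It is not OpenCV's implementation, and it leaves out the gradient computation and non-maximum suppression. The function name `hysteresis` is hypothetical, and the sketch assumes 8-connected neighbours:

```python
from collections import deque


def hysteresis(magnitude, threshold1, threshold2):
    """Simplified Canny hysteresis on a 2D list of gradient magnitudes."""
    low, high = min(threshold1, threshold2), max(threshold1, threshold2)
    rows, cols = len(magnitude), len(magnitude[0])
    edges = [[False] * cols for _ in range(rows)]
    queue = deque()
    # seed with strong edge pixels (above the larger threshold)
    for r in range(rows):
        for c in range(cols):
            if magnitude[r][c] > high:
                edges[r][c] = True
                queue.append((r, c))
    # grow into connected weak pixels (above the smaller threshold)
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not edges[nr][nc]
                        and magnitude[nr][nc] > low):
                    edges[nr][nc] = True
                    queue.append((nr, nc))
    return edges


# slider defaults from above: 65 and 150
print(hysteresis([[200, 100, 100, 40, 100]], 65, 150))
```

The last pixel (100) is above the lower threshold but is cut off from the strong pixel by the 40, so it is dropped. This is why raising Threshold2 tends to remove isolated edges, while lowering Threshold1 mostly extends edges that are already there.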

Processing

We can now use cvzone to detect the contours of every object in the live video and display them:

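```python
# inside the while loop, after pre-processing
# (requires `import cvzone` at the top of the script)

# min area slider to filter noise
cvMinArea = cv2.getTrackbarPos("CV Min Area", "Settings")

# findContours returns an image with the contours drawn
# and a list of the contours it found
imgContours, conFound = cvzone.findContours(img, img_prep, cvMinArea)

# stack original, pre-processed and contour image
# in 2 columns, scaled to 30% of their size
# (display it with cv2.imshow once all overlays are drawn)
imageStack = cvzone.stackImages([img, img_prep, imgContours], 2, 0.3)
```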

Since the environment I am testing in has some clutter and less-than-ideal lighting, I am getting a lot of contours I am not interested in. We can filter the results by counting the corners of each contour. cvzone gives us access to the following information for every contour it found:

- `contour["cnt"]`: the contour itself
- `contour["bbox"]`: bounding box of the contour
- `contour["area"]`: area enclosed by the contour
- `contour["center"]`: center point of the contour
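cvzone appears to take `contour["area"]` from `cv2.contourArea`. According to the OpenCV documentation, that function computes the area with Green's formula, which for a polygon is the shoelace formula. With integer pixel coordinates, twice the area is always an integer, and that would explain why all the area readings further down end in `.0` or `.5`. A minimal pure-Python sketch of the formula follows. The function name is hypothetical and this is not OpenCV's code:

```python
def shoelace_area(points):
    """Area of a simple polygon given as [(x, y), ...] using the
    shoelace (Green's) formula; returns the absolute value."""
    twice_area = 0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2


print(shoelace_area([(0, 0), (10, 0), (10, 10), (0, 10)]))  # prints 100.0
print(shoelace_area([(0, 0), (3, 0), (0, 1)]))              # prints 1.5
```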

We can loop over the results and only take contours that have a high enough number of corner points to most likely be a circle:

```python
# conFound contains all contours that were found;
# we want to limit it to circles for our coins
if conFound:
    for contour in conFound:
        # get the arc length (perimeter) of the closed contour
        perimeter = cv2.arcLength(contour["cnt"], True)
        # approximate the contour with a polygon;
        # its vertices are the corner points
        polycount = cv2.approxPolyDP(contour["cnt"], 0.02 * perimeter, True)
        # print the number of corner points of the contour
        print(len(polycount))
```
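`cv2.approxPolyDP` uses the Douglas-Peucker algorithm. It keeps removing points until every dropped point lies within `epsilon` of the simplified polyline. Passing `0.02 * perimeter` makes that tolerance scale with the size of the object, so a smooth circle keeps quite a few vertices while a rectangle collapses to about four. The sketch below is the classic recursive version for an open polyline in plain Python. The function names are hypothetical, and OpenCV's handling of closed contours differs in its details:

```python
import math


def perpendicular_distance(p, a, b):
    """Distance from point p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dx * (py - ay) - dy * (px - ax)) / length


def rdp(points, epsilon):
    """Ramer-Douglas-Peucker simplification of an open polyline."""
    if len(points) < 3:
        return list(points)
    # find the point farthest from the line between the endpoints
    index, dmax = 0, 0.0
    for i in range(1, len(points) - 1):
        d = perpendicular_distance(points[i], points[0], points[-1])
        if d > dmax:
            index, dmax = i, d
    if dmax > epsilon:
        # keep that point and simplify both halves recursively
        left = rdp(points[:index + 1], epsilon)
        right = rdp(points[index:], epsilon)
        return left[:-1] + right  # avoid duplicating the split point
    return [points[0], points[-1]]


print(rdp([(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)], 0.1))
```

The collinear points on each leg of the right angle are removed and only the corner survives, giving three vertices.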

The printout shows that a few objects have only a small number of corners, so I can filter them out. 8 corners looks like a good cut-off:

```text
8, 8, 8, 9, 13, 8, 10, 6, 8, 6, 10, 3, 8, 10, 9, 2, 8, 6, 11, 2, 2, 8, 6, 10, 7, 2, 11, 7, 2, 8, 7, 8, 7, 4, 6, 11, 9, 13, 10, 6, 6, 3, 8, 10, 9, 2, 6, 12, 2, 8, 2, 6, 6, 10, 2, 11, 2, 7, 8, 7, 8, 7, 6, 11, 8, 8, 8, 8, 8, 9, 13, 8, 10, 6, 6, 3, 8, 10, 9, 2, 6, 12, 2, 8, 2, 6, 2, 7, 10, 2, 7, 10, 8, 7, 8, 7, 6, 11, 8, 8, 8, 8, 9, 13, 8, 8, 8, 6, 6, 3, 8, 10, 9, 2, 2, 6, 11, 8, 2, 6, 2, 7, 2, 7, 10, 8, 5, 7, 8, 7, 6, 11, 8, 8, 8, 8, 9, 13, 8, 8, 8, 6, 6, 3, 8, 10, 9, 2, 2
```

So let's remove everything with fewer than 8 corners and take a look at the area of each individual coin:

```python
# inside the for loop, after computing polycount
if len(polycount) >= 8:
    print(contour['area'])
```

Issues: A black background gives much better results than a white piece of paper, but the contours are still far from stable. The main problem is probably that I am using my monitor as the light source. I will retry this in sunlight later to see whether it yields more stable results.

Whenever the displayed contour closes around a coin, I get stable readings for its area (in pixels):

- 10 Cents: 47343.5, 47340.0, 47338.5, 47331.0, 47335.5, 47333.5, 47349.5
- 20 Cents: 49283.0, 49272.0, 49272.5, 49272.0, 49271.0, 49268.0, 49263.5
- 50 Cents: 71451.0, 71454.5, 71410.5, 71413.5, 71429.0, 71425.0, 71428.0
- 1 Dollar: 97560.5, 97555.5, 97546.5, 97541.5, 97537.5, 97565.0, 97563.0
- 2 Dollar: 104829.0, 104825.0, 104818.0, 104813.0, 104810.0, 104808.0, 104812.0
- 5 Dollar: 113931.0, 113936.0, 113934.0, 113934.0, 113937.0, 113933.0, 113928.5
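To check how much room there is for thresholds, we can compare how much the readings for one coin vary (the spread) with the distance to the next larger coin (the gap). The sketch below only uses the readings listed above. The variable names are my own:

```python
from statistics import mean

# area readings in px², copied from the measurements above
readings = {
    "10 Cents": [47343.5, 47340.0, 47338.5, 47331.0, 47335.5, 47333.5, 47349.5],
    "20 Cents": [49283.0, 49272.0, 49272.5, 49272.0, 49271.0, 49268.0, 49263.5],
    "50 Cents": [71451.0, 71454.5, 71410.5, 71413.5, 71429.0, 71425.0, 71428.0],
    "1 Dollar": [97560.5, 97555.5, 97546.5, 97541.5, 97537.5, 97565.0, 97563.0],
    "2 Dollar": [104829.0, 104825.0, 104818.0, 104813.0, 104810.0, 104808.0, 104812.0],
    "5 Dollar": [113931.0, 113936.0, 113934.0, 113934.0, 113937.0, 113933.0, 113928.5],
}

# spread: how much the readings for a single coin vary
spreads = {coin: max(values) - min(values) for coin, values in readings.items()}

# gap: smallest reading of the next larger coin minus
# the largest reading of the smaller coin
coins = list(readings)
gaps = {
    f"{small} / {large}": min(readings[large]) - max(readings[small])
    for small, large in zip(coins, coins[1:])
}

for coin, values in readings.items():
    print(f"{coin}: mean {mean(values):.1f}, spread {spreads[coin]:.1f}")
for pair, gap in gaps.items():
    print(f"gap {pair}: {gap:.1f}")
```

Every spread stays below 50 px². The smallest gap, between 10 and 20 Cents, is 1914 px², only about 4% of a 10 Cent coin's area. That makes it the pair most sensitive to perspective distortion.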

So we can now differentiate between those coins just by their surface area:

| Area (px²) | Coin |
|---|---|
| < 48000 | 10 Cents |
| 48000 - 50000 | 20 Cents |
| 50000 - 72000 | 50 Cents |
| 72000 - 98000 | 1 Dollar |
| 98000 - 105000 | 2 Dollar |
| 105000 - 114000 | 5 Dollar |
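Because the ranges in the table are contiguous, the lookup can also be written with a sorted list of upper limits and `bisect` instead of a chain of comparisons. This is a minimal sketch using the table's values. The names are hypothetical. Like the table, anything below 48000 counts as 10 Cents, and a value exactly on a boundary goes to the larger coin:

```python
from bisect import bisect_right

# exclusive upper limits in px², taken from the table above
UPPER_LIMITS = [48000, 50000, 72000, 98000, 105000, 114000]
COINS = ["10 Cents", "20 Cents", "50 Cents", "1 Dollar", "2 Dollar", "5 Dollar"]


def coin_for_area(area):
    """Return the coin label for a contour area, or None if it is too large."""
    index = bisect_right(UPPER_LIMITS, area)
    return COINS[index] if index < len(COINS) else None


print(coin_for_area(49272.0))   # prints 20 Cents
print(coin_for_area(120000.0))  # prints None
```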

Issue: Because my camera is not mounted perfectly parallel to the tabletop, the measured area deviates from the values above when I move a coin around. In the code below I widened the ranges a bit, but there is still some overlap between the 2 and 5 Dollar coins and between the 10 and 20 Cent coins. It works if I place the coins right in the middle of the camera image. Moving away from a wide-angle lens would certainly help, since its image distortion only makes things worse.

```python
# inside the for loop; moneyCount is reset to 0 at the start of every frame
if len(polycount) >= 8:
    area = contour['area']
    # print(area)
    if 43000 < area < 49000:
        moneyCount += .1
    elif 49000 < area < 55000:
        moneyCount += .2
    elif 55000 < area < 72000:
        moneyCount += .5
    elif 72000 < area < 104000:
        moneyCount += 1
    elif 104000 < area < 116000:
        moneyCount += 2
    elif 116000 < area < 120000:
        moneyCount += 5
    else:
        # area does not match any coin: leave the count unchanged
        pass
```
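Adding `.1`, `.2` and `.5` as floats accumulates binary rounding errors, so the displayed total can end up with a long tail of digits. One way around this is to count in integer cents and format only for display. This sketch uses a hypothetical `COIN_CENTS` mapping and `format_hkd` helper, with labels matching the table above:

```python
# hypothetical mapping from coin label to value in integer cents
COIN_CENTS = {
    "10 Cents": 10, "20 Cents": 20, "50 Cents": 50,
    "1 Dollar": 100, "2 Dollar": 200, "5 Dollar": 500,
}


def format_hkd(cents):
    """Format an integer amount of cents, e.g. 250 -> '2.50 HK$'."""
    return f"{cents // 100}.{cents % 100:02d} HK$"


print(0.1 + 0.2)  # prints 0.30000000000000004

detected = ["2 Dollar", "50 Cents"]
total = sum(COIN_CENTS[coin] for coin in detected)
print(format_hkd(total))  # prints 2.50 HK$
```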

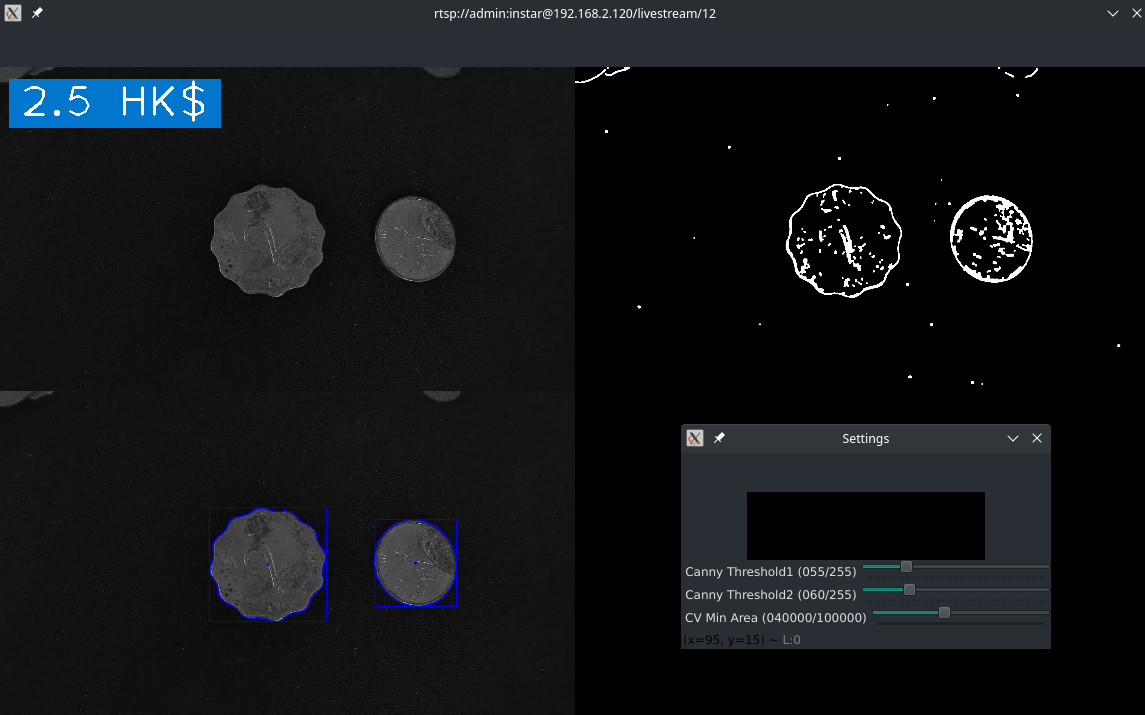

If we reset moneyCount to 0 at the start of every loop iteration, it holds the total value of the coins visible in the current frame. In the following example there is a 2 Dollar and a 50 Cent coin, which add up to a total of 2.50 Dollar. I am using cvzone to print the value on top of the stacked output:

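```python
# add the money counter on top of the stacked output
# (:.2f hides floating-point noise such as 0.30000000000000004)
cvzone.putTextRect(img=imageStack, text=f'{moneyCount:.2f} HK$', pos=(20, 50), thickness=2, colorR=(204, 119, 0))
```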

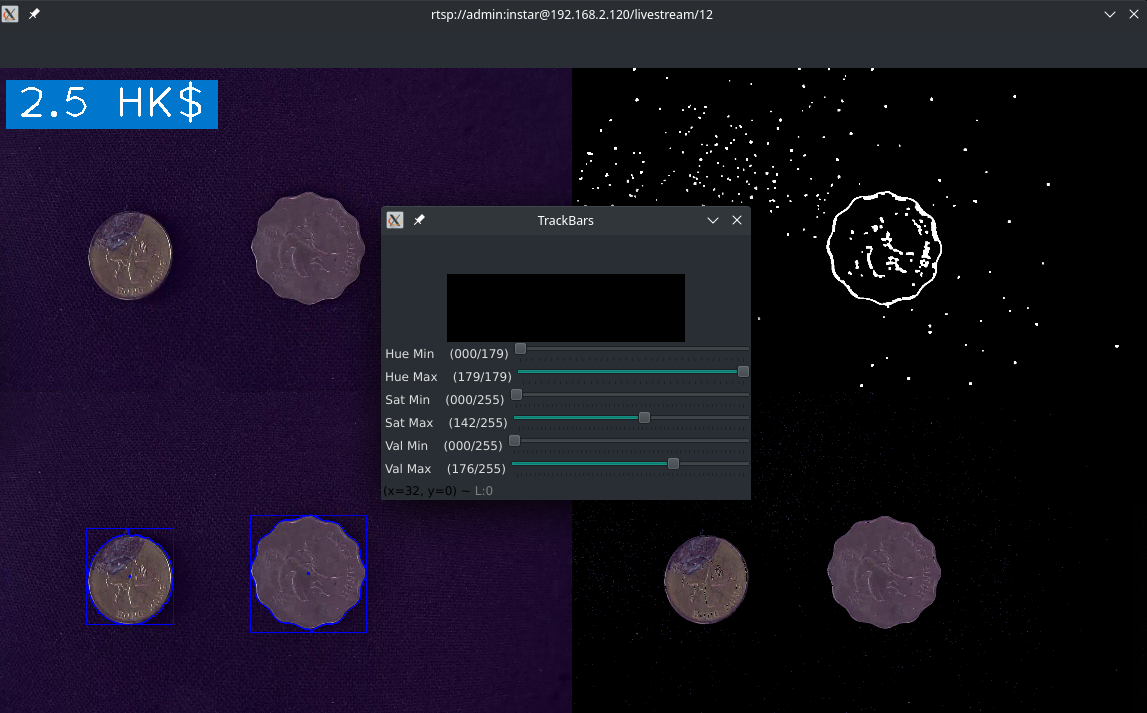

Detecting Colours

Since I had trouble telling some of the coins apart, I can add colour as a second feature. Right now this will not be very effective because of the poor lighting, and some of the coins are in dire need of a polish... but let's see.

cvzone comes with a module called ColorFinder:

```python
from cvzone.ColorModule import ColorFinder
```

Now we need to configure an instance of the colour finder:

```python
# create an instance of the colour finder;
# True displays sliders to adjust the search colour
cvColorFinder = ColorFinder(True)

# colour range to search for (OpenCV HSV scale)
hsvVals = {'hmin': 0, 'smin': 0, 'vmin': 0, 'hmax': 179, 'smax': 142, 'vmax': 176}
```

The boolean value True starts the colour finder in debug mode, which displays sliders for adjusting the target colour.

Given the light I am working with, I am able to remove the background (image on the lower right), but I cannot tell the golden and the silver coins apart. This should change once I repeat the test in sunlight.
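As far as I can tell, ColorFinder converts the image to HSV and keeps every pixel whose hue, saturation and value all lie between the configured minimum and maximum, similar to `cv2.inRange`. In OpenCV's 8-bit HSV, hue runs from 0 to 179 (degrees halved), while saturation and value run from 0 to 255. That is why `hmax` is 179. The sketch below approximates the conversion for single pixels with Python's `colorsys` module and applies the `hsvVals` from above. The helper names are hypothetical, and OpenCV's own rounding may differ by one step:

```python
import colorsys

# hsvVals as configured above
hsvVals = {'hmin': 0, 'smin': 0, 'vmin': 0, 'hmax': 179, 'smax': 142, 'vmax': 176}


def bgr_to_opencv_hsv(b, g, r):
    """Approximate OpenCV's 8-bit BGR -> HSV conversion for one pixel."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    # colorsys returns 0..1; OpenCV uses H 0..179, S and V 0..255
    return round(h * 180) % 180, round(s * 255), round(v * 255)


def in_range(hsv, vals):
    """Inclusive range check on all three channels, like cv2.inRange."""
    h, s, v = hsv
    return (vals['hmin'] <= h <= vals['hmax']
            and vals['smin'] <= s <= vals['smax']
            and vals['vmin'] <= v <= vals['vmax'])


print(in_range(bgr_to_opencv_hsv(128, 128, 128), hsvVals))  # mid grey
print(in_range(bgr_to_opencv_hsv(255, 255, 255), hsvVals))  # white
```

With these values a mid-grey pixel passes. A white pixel is rejected because its value (255) is above `vmax` (176).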

Processing

We can now take the bounding box of each contour, crop the image to it and count all mask pixels that aren't black to get the coin's surface area:

```python
# GET AREA BY OBJECT COLOUR
# (inside the for loop; moneyCountByColour is reset to 0 at the start of every frame)
## get location of bounding box
x, y, w, h = contour['bbox']
## crop to bounding box
imgCrop = img[y:y+h, x:x+w]
## show cropped image
## cv2.imshow('Cropped Contour', imgCrop)
## find colour based on hsvVals in imgCrop
imgColour, mask = cvColorFinder.update(imgCrop, hsvVals)
## we adjusted hsvVals so that everything but the coins
## is black. Now we can exclude everything that is black
## and count the pixels that match our coin colour to
## get its surface area.
colouredArea = cv2.countNonZero(mask)
print(colouredArea)

if 39000 < colouredArea < 51000:
    moneyCountByColour += .1
elif 51000 < colouredArea < 55000:
    moneyCountByColour += .2
elif 59000 < colouredArea < 72000:
    moneyCountByColour += .5
elif 72000 < colouredArea < 98000:
    moneyCountByColour += 1
elif 100000 < colouredArea < 110000:
    moneyCountByColour += 2
elif 110000 < colouredArea < 112000:
    moneyCountByColour += 5
else:
    # area does not match any coin: leave the count unchanged
    pass
```
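`cv2.countNonZero` counts the mask pixels that are not 0. A round coin fills about π/4 (roughly 79%) of its bounding box, so the count approximates the coin's area, while `w * h` of the bounding box would be about 27% larger. Any background pixels inside the crop that also fall into the HSV range are counted too. The pure-Python sketch below rasterises a disk with a hypothetical radius of 120 px, whose area is in the same range as the 10 Cent readings. It then counts the pixels as a stand-in for `cv2.countNonZero`. The helper names are my own:

```python
import math


def disk_mask(radius):
    """Rasterise a filled disk into a (2r+1) x (2r+1) binary mask (list of rows)."""
    size = 2 * radius + 1
    return [[1 if (x - radius) ** 2 + (y - radius) ** 2 <= radius ** 2 else 0
             for x in range(size)]
            for y in range(size)]


def count_non_zero(mask):
    """Pure-Python stand-in for cv2.countNonZero on a single-channel mask."""
    return sum(1 for row in mask for value in row if value)


radius = 120
mask = disk_mask(radius)
pixels = count_non_zero(mask)
bbox_area = len(mask) * len(mask[0])
print(pixels, round(math.pi * radius ** 2), bbox_area, round(pixels / bbox_area, 3))
```

The pixel count lands close to πr², and the fill ratio close to π/4. A fill ratio well below that is therefore a hint that the mask is not a clean, round coin.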