Keras for Tensorflow - VGG16 Network Architecture

Keras is built on top of TensorFlow 2 and provides an API designed for human beings. Keras follows best practices for reducing cognitive load: it offers consistent & simple APIs, it minimizes the number of user actions required for common use cases, and it provides clear & actionable error messages.

See also:

- Keras for Tensorflow - An (Re)Introduction 2023

- Keras for Tensorflow - Artificial Neural Networks

- Keras for Tensorflow - Convolutional Neural Networks

- Keras for Tensorflow - VGG16 Network Architecture

- Keras for Tensorflow - Recurrent Neural Networks

Very Deep Convolutional Networks

Building the VGG16 Model

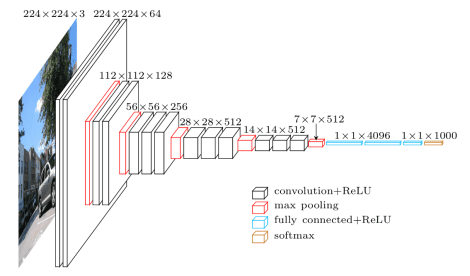

A well-known example of a convolutional neural network is the VGG16 architecture. The number 16 in the name refers to its depth: the network has 16 layers with trainable weights, 13 convolutional and 3 fully connected (Image Source: VGGnet).
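To make the count concrete, here is a small pure-Python sketch that writes the VGG16 layer configuration (configuration D in the original VGG paper) as a list and counts only the layers that carry weights. The list encoding (integers for 3x3 convolutions, `"M"` for max pooling) and the helper `count_weight_layers` are hypothetical, chosen for illustration; they are not a Keras API.

```python
# Hypothetical list encoding of VGG16 (configuration D in the VGG paper):
# an integer is a 3x3 convolution with that many filters, "M" is a 2x2 max-pooling layer.
VGG16_CONV = [64, 64, "M",
              128, 128, "M",
              256, 256, 256, "M",
              512, 512, 512, "M",
              512, 512, 512, "M"]

# Fully connected head: two hidden layers with 4096 units and a 1000-class output layer.
VGG16_DENSE = [4096, 4096, 1000]


def count_weight_layers(conv_cfg, dense_cfg):
    """Count the layers that carry trainable weights; pooling layers have none."""
    n_conv = sum(1 for layer in conv_cfg if layer != "M")
    return n_conv, len(dense_cfg)


n_conv, n_dense = count_weight_layers(VGG16_CONV, VGG16_DENSE)
print(f"{n_conv} conv + {n_dense} dense = {n_conv + n_dense} weight layers")
```

The five pooling layers are what make the network "look" deeper than 16 layers in a diagram, but they have no parameters and are not counted in the name.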

The VGG16 model takes a 224x224 pixel colour image (3 colour channels) as input and keeps applying filters that increase its depth, while pooling layers reduce its spatial dimensions. The output layer ends with a shape of 1x1x1000 and uses a softmax activation, meaning the model assigns a probability to each of 1000 classes.
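The shape bookkeeping described above can be traced without Keras. The NumPy sketch below assumes what the Keras model in this article uses: 3x3 convolutions with `padding='same'` (height and width unchanged) and 2x2 max pooling with stride 2 (height and width halved). It also shows what a softmax does to 1000 raw scores. The names `BLOCKS`, `trace_shapes` and `softmax` are illustrative helpers, not library functions, and the scores are random placeholders.

```python
import numpy as np

# (number of 3x3 conv layers, filters) for each of the five VGG16 blocks
BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def trace_shapes(height=224, width=224, channels=3):
    """Return the feature-map shape of the input and after each block's pooling layer."""
    shapes = [(height, width, channels)]
    for _n_convs, filters in BLOCKS:
        # 3x3 convolutions with padding='same' keep height and width; depth becomes `filters`
        channels = filters
        # 2x2 max pooling with stride 2 halves height and width
        height, width = height // 2, width // 2
        shapes.append((height, width, channels))
    return shapes


def softmax(logits):
    """Turn raw scores into probabilities that are positive and sum to 1."""
    exp = np.exp(logits - np.max(logits))  # subtracting the max avoids overflow
    return exp / exp.sum()


for shape in trace_shapes():
    print(shape)

h, w, c = trace_shapes()[-1]
print("flattened features:", h * w * c)

# Hypothetical raw scores for the 1000 output units
probs = softmax(np.random.default_rng(0).normal(size=1000))
print(probs.shape, np.isclose(probs.sum(), 1.0))
```

The final 7x7x512 feature map is what gets flattened into the 25088 inputs of the first dense layer.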

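To rebuild this model in Keras, we can stack the layers with the Sequential API. Note that the version below deviates slightly from the original VGG16: it adds dropout after every convolutional block and uses a single 4096-unit dense layer instead of two, which is why it ends up with about 121.6 million parameters rather than the roughly 138 million of the original: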

```python
import numpy as np
import matplotlib.pyplot as plt
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense, Dropout

# defining the input shape:
# starting with a 224x224 colour image
input_shape = (224, 224, 3)

# building the model
model = Sequential()

## block 1: two conv layers with 64 filters => 224 x 224 x 64, pooling => 112 x 112 x 64
model.add(Conv2D(64, kernel_size=(3, 3), padding='same', activation='relu', input_shape=input_shape))
model.add(Conv2D(64, kernel_size=(3, 3), padding='same', activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
## randomly drop 25% of neurons during training to reduce overfitting
model.add(Dropout(0.25))

## block 2: two conv layers with 128 filters => 112 x 112 x 128, pooling => 56 x 56 x 128
model.add(Conv2D(128, kernel_size=(3, 3), padding='same', activation='relu'))
model.add(Conv2D(128, kernel_size=(3, 3), padding='same', activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
## randomly drop 10% of neurons during training to reduce overfitting
model.add(Dropout(0.10))

## block 3: three conv layers with 256 filters => 56 x 56 x 256, pooling => 28 x 28 x 256
model.add(Conv2D(256, kernel_size=(3, 3), padding='same', activation='relu'))
model.add(Conv2D(256, kernel_size=(3, 3), padding='same', activation='relu'))
model.add(Conv2D(256, kernel_size=(3, 3), padding='same', activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
## randomly drop 10% of neurons during training to reduce overfitting
model.add(Dropout(0.10))

## block 4: three conv layers with 512 filters => 28 x 28 x 512, pooling => 14 x 14 x 512
model.add(Conv2D(512, kernel_size=(3, 3), padding='same', activation='relu'))
model.add(Conv2D(512, kernel_size=(3, 3), padding='same', activation='relu'))
model.add(Conv2D(512, kernel_size=(3, 3), padding='same', activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
## randomly drop 10% of neurons during training to reduce overfitting
model.add(Dropout(0.10))

## block 5: three conv layers with 512 filters => 14 x 14 x 512, pooling => 7 x 7 x 512
model.add(Conv2D(512, kernel_size=(3, 3), padding='same', activation='relu'))
model.add(Conv2D(512, kernel_size=(3, 3), padding='same', activation='relu'))
model.add(Conv2D(512, kernel_size=(3, 3), padding='same', activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
## randomly drop 10% of neurons during training to reduce overfitting
model.add(Dropout(0.10))

## flatten 7 x 7 x 512 => 25088 features, then a fully connected layer with 4096 units
model.add(Flatten())
model.add(Dense(4096, activation='relu'))
model.add(Dropout(0.25))

# output layer assigns a probability to each of the 1000 classes
model.add(Dense(1000, activation='softmax'))

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
model.summary()
```

```
Model: "sequential"
_________________________________________________________________
 Layer (type)                    Output Shape            Param #
=================================================================
 conv2d (Conv2D)                 (None, 224, 224, 64)    1792
 conv2d_1 (Conv2D)               (None, 224, 224, 64)    36928
 max_pooling2d (MaxPooling2D)    (None, 112, 112, 64)    0
 dropout (Dropout)               (None, 112, 112, 64)    0
 conv2d_2 (Conv2D)               (None, 112, 112, 128)   73856
 conv2d_3 (Conv2D)               (None, 112, 112, 128)   147584
 max_pooling2d_1 (MaxPooling2D)  (None, 56, 56, 128)     0
 dropout_1 (Dropout)             (None, 56, 56, 128)     0
 conv2d_4 (Conv2D)               (None, 56, 56, 256)     295168
 conv2d_5 (Conv2D)               (None, 56, 56, 256)     590080
 conv2d_6 (Conv2D)               (None, 56, 56, 256)     590080
 max_pooling2d_2 (MaxPooling2D)  (None, 28, 28, 256)     0
 dropout_2 (Dropout)             (None, 28, 28, 256)     0
 conv2d_7 (Conv2D)               (None, 28, 28, 512)     1180160
 conv2d_8 (Conv2D)               (None, 28, 28, 512)     2359808
 conv2d_9 (Conv2D)               (None, 28, 28, 512)     2359808
 max_pooling2d_3 (MaxPooling2D)  (None, 14, 14, 512)     0
 dropout_3 (Dropout)             (None, 14, 14, 512)     0
 conv2d_10 (Conv2D)              (None, 14, 14, 512)     2359808
 conv2d_11 (Conv2D)              (None, 14, 14, 512)     2359808
 conv2d_12 (Conv2D)              (None, 14, 14, 512)     2359808
 max_pooling2d_4 (MaxPooling2D)  (None, 7, 7, 512)       0
 dropout_4 (Dropout)             (None, 7, 7, 512)       0
 flatten (Flatten)               (None, 25088)           0
 dense (Dense)                   (None, 4096)            102764544
 dropout_5 (Dropout)             (None, 4096)            0
 dense_1 (Dense)                 (None, 1000)            4097000
=================================================================
Total params: 121,576,232
Trainable params: 121,576,232
Non-trainable params: 0
_________________________________________________________________
```
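The parameter counts in the summary follow directly from the layer shapes: a Conv2D layer has one kernel x kernel x in_channels weight block per filter plus one bias per filter, and a Dense layer has an inputs x units weight matrix plus one bias per unit. As a sanity check, this pure-Python sketch (the helpers `conv2d_params` and `dense_params` are made up for illustration) recomputes the total for the model built above and for the original VGG16 with its second 4096-unit dense layer.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """Conv2D weights: a kernel x kernel x in_channels block per filter, plus one bias per filter."""
    return kernel_size * kernel_size * in_channels * filters + filters


def dense_params(inputs, units):
    """Dense weights: a full inputs x units matrix, plus one bias per unit."""
    return inputs * units + units


# (in_channels, filters) for the 13 3x3 convolutions of VGG16
CONV_LAYERS = [(3, 64), (64, 64),
               (64, 128), (128, 128),
               (128, 256), (256, 256), (256, 256),
               (256, 512), (512, 512), (512, 512),
               (512, 512), (512, 512), (512, 512)]

conv_total = sum(conv2d_params(3, c_in, c_out) for c_in, c_out in CONV_LAYERS)
flat = 7 * 7 * 512  # output size of Flatten() after the fifth pooling layer

# Model built above: Flatten -> Dense(4096) -> Dense(1000)
this_model = conv_total + dense_params(flat, 4096) + dense_params(4096, 1000)

# Original VGG16 head: Flatten -> Dense(4096) -> Dense(4096) -> Dense(1000)
original_vgg16 = this_model + dense_params(4096, 4096)

print(f"{conv_total:,}")      # 14,714,688
print(f"{this_model:,}")      # 121,576,232
print(f"{original_vgg16:,}")  # 138,357,544
```

The first dense layer alone holds 102,764,544 weights, about 85% of this model's total, so most of VGG16's size sits in the fully connected head rather than in the convolutions.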

Training the VGG16 Model

Instead of training this fresh model, we can use Keras to download a pre-trained version of it, giving us a head start. The following code downloads the VGG16 weights pre-trained on the ImageNet dataset and builds the model:

```python
# using the pre-trained vgg16 instead of a fresh version
from tensorflow.keras.applications.vgg16 import VGG16

# the ImageNet weights are downloaded on first use and cached under ~/.keras/models
vgg16 = VGG16()
```

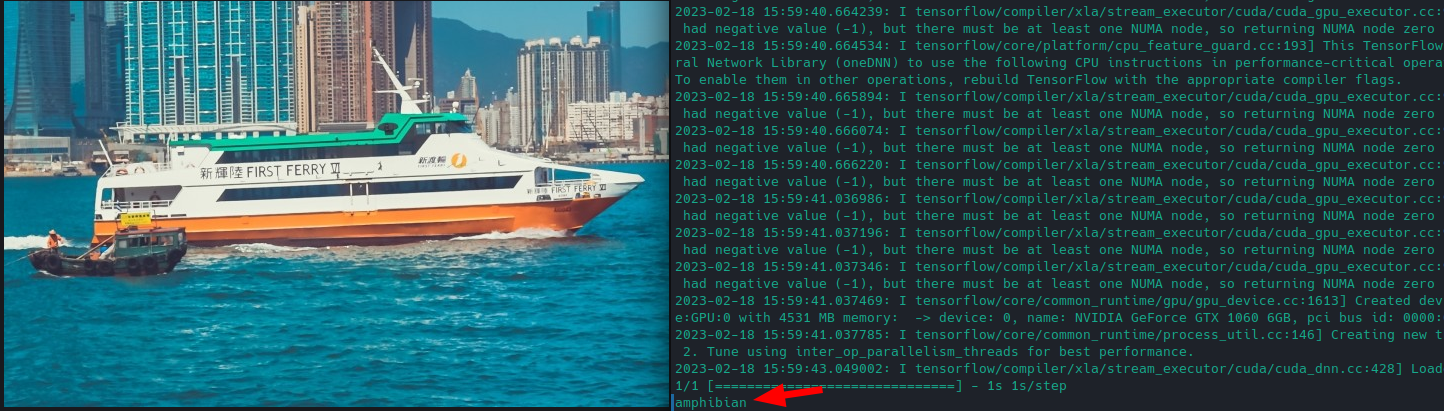

This lets us skip training entirely and go straight to making predictions. Again, Keras provides helper functions to load and preprocess a test image and run a prediction on it:

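```python
from tensorflow.keras.applications.vgg16 import VGG16, preprocess_input, decode_predictions
from tensorflow.keras.preprocessing.image import load_img, img_to_array

# using the pre-trained vgg16 instead of a fresh version
vgg16 = VGG16()

# load the image for prediction, resized to the 224x224 input VGG16 expects
img = load_img('HK-LR2020_76.jpg', target_size=(224, 224))
img = img_to_array(img)
# add a batch dimension: (224, 224, 3) => (1, 224, 224, 3)
img = img.reshape(1, 224, 224, 3)
# apply the same preprocessing that was used when VGG16 was trained on ImageNet
img = preprocess_input(img)

# run prediction
yhat = vgg16.predict(img)
# decode_predictions returns the top 5 (class id, label, probability) tuples per image
label = decode_predictions(yhat)
# most likely class for the first (and only) image in the batch
prediction = label[0][0]
print(prediction[1])
```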
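The `preprocess_input` function imported above is more than a formality. For VGG16, Keras documents it as the "caffe" mode: it converts images from RGB to BGR and subtracts the ImageNet mean from each channel, without scaling to 0..1. The NumPy sketch below re-implements that transformation for illustration only; the helper `caffe_style_preprocess` is a made-up name, it assumes channels-last input, and in real code you should call `preprocess_input` itself.

```python
import numpy as np

# ImageNet per-channel means in BGR order, as subtracted by Keras' "caffe" preprocessing mode
IMAGENET_BGR_MEAN = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def caffe_style_preprocess(rgb_batch):
    """Sketch of VGG16-style preprocessing: RGB -> BGR, then per-channel mean subtraction."""
    x = np.asarray(rgb_batch, dtype=np.float32)
    bgr = x[..., ::-1]  # reverse the last (channel) axis: RGB -> BGR
    return bgr - IMAGENET_BGR_MEAN  # broadcasts over batch, height and width


# Hypothetical input: one 224x224 image whose pixels are all pure red
batch = np.zeros((1, 224, 224, 3), dtype=np.float32)
batch[..., 0] = 255.0

out = caffe_style_preprocess(batch)
print(out.shape)
print(out[0, 0, 0])  # blue, green, red channel values after centering
```

Skipping this step means the network sees pixel values from a different distribution than the one it was trained on.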

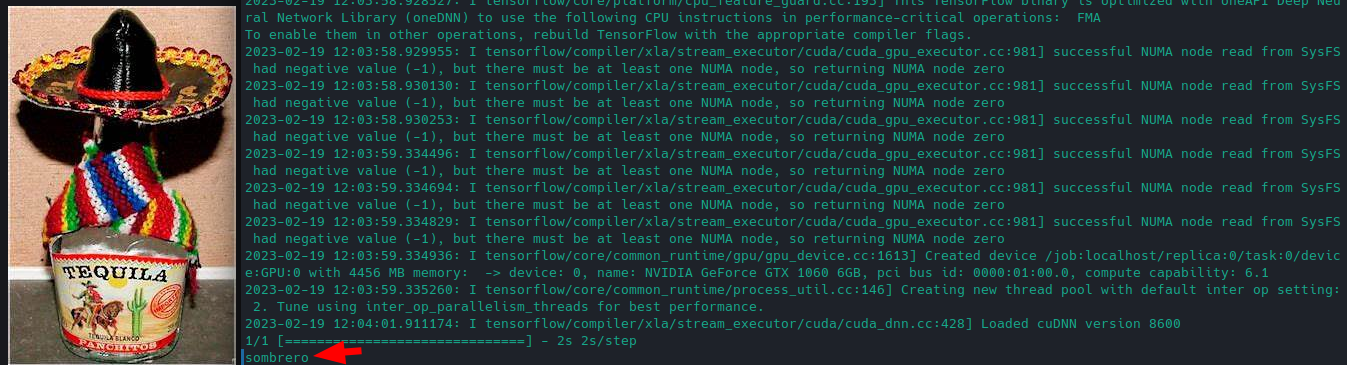

Well, the prediction went in the right direction, but the model still needs to be optimized (fine-tuned) for your specific use case.

The model was trained on the ImageNet dataset. We can check which classes it includes by reading the following file, which Keras downloads the first time decode_predictions is called:

```bash
cat ~/.keras/models/imagenet_class_index.json
```

One of the included classes is:

```json
"808": [
    "n04259630",
    "sombrero"
]
```
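Conceptually, decode_predictions sorts the 1000 softmax outputs and looks up each of the top indices in this class index file. The pure-Python sketch below mimics that for a single prediction vector. The helper `decode_top_k`, the made-up probability vector, and the three-entry excerpt of the class index (tench and goldfish are classes 0 and 1 in the real file) are for illustration only; the real function takes a batch of shape (samples, 1000) and returns the top 5 per image by default.

```python
import json

import numpy as np

# Small excerpt of imagenet_class_index.json (the full file has 1000 entries)
CLASS_INDEX_JSON = """{
  "0": ["n01440764", "tench"],
  "1": ["n01443537", "goldfish"],
  "808": ["n04259630", "sombrero"]
}"""


def decode_top_k(probs, class_index, k=3):
    """Return (wordnet_id, label, probability) tuples for the k most likely classes."""
    top = np.argsort(probs)[::-1][:k]  # indices sorted by descending probability
    return [(*class_index[str(i)], float(probs[i])) for i in top]


class_index = json.loads(CLASS_INDEX_JSON)

# Hypothetical model output: most of the probability mass on class 808
probs = np.zeros(1000)
probs[[808, 0, 1]] = [0.9, 0.06, 0.04]

for wnid, name, p in decode_top_k(probs, class_index):
    print(wnid, name, round(p, 2))
```

Note that the JSON keys are strings, which is why the sketch converts each index with `str(i)` before the lookup.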

So let's put this to a test :)