Using TensorFlow Models in OpenCV

import cv2 as cv

import json

import matplotlib.pyplot as plt

import numpy as np

import random

Model

!mkdir -p model && cd model && wget http://download.tensorflow.org/models/object_detection/mask_rcnn_inception_v2_coco_2018_01_28.tar.gz

!cd model && tar zxvf mask_rcnn_inception_v2_coco_2018_01_28.tar.gz

!cd model && wget https://github.com/vjgpt/Object-Detection/raw/master/mask_rcnn_inception_v2_coco_2018_01_28.pbtxt

!cd model && wget https://github.com/vjgpt/Object-Detection/raw/master/mscoco_labels.names

# model configuration and weights

cfg_path = './model/mask_rcnn_inception_v2_coco_2018_01_28.pbtxt'

weight_path = './model/mask_rcnn_inception_v2_coco_2018_01_28/frozen_inference_graph.pb'

classes_path = './model/mscoco_labels.names'

# one label per line; blank lines mark COCO class IDs that have no category
with open(classes_path) as f:
    class_names = f.read().splitlines()

print(class_names)

['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light', 'fire hydrant', '', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow', 'elephant', 'bear', 'zebra', 'giraffe', '', 'backpack', 'umbrella', '', '', 'handbag', 'tie', 'suitcase', 'frisbee', 'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard', 'tennis racket', 'bottle', '', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple', 'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed', '', 'dining table', '', '', 'toilet', '', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone', 'microwave', 'oven', 'toaster', 'sink', 'refrigerator', '', 'book', 'clock', 'vase', 'scissors', 'teddy bear', 'hair drier', 'toothbrush']

len(class_names)

90
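The labels file keeps a blank line for every COCO category ID that has no label. As a result, a class ID reported by the network can land on an empty entry. Below is a minimal sketch of a lookup that guards against this case. `label_for` and its `"unknown"` fallback are hypothetical. The sketch assumes, as the indexing later in this notebook does, that the model's class ID is a 0-based index into `class_names`.

```python
def label_for(class_id, class_names, fallback="unknown"):
    """Hypothetical helper: map a 0-based class ID to its label.

    Assumes class_names has one entry per model class ID, with empty
    strings for IDs that have no category.
    """
    idx = int(class_id)  # the network reports class IDs as floats, e.g. 5.0
    if 0 <= idx < len(class_names) and class_names[idx].strip():
        return class_names[idx].strip()  # strip() also drops stray '\r'
    return fallback


# short excerpt of the label list, for illustration only
names = ["person", "bicycle", "car", "motorcycle", "airplane", "bus",
         "train", "truck", "boat", "traffic light", "fire hydrant", "",
         "stop sign"]
print(label_for(5.0, names))   # bus
print(label_for(11.0, names))  # unknown (blank entry)
```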

model = cv.dnn.readNetFromTensorflow(weight_path, cfg_path)

Preprocessing

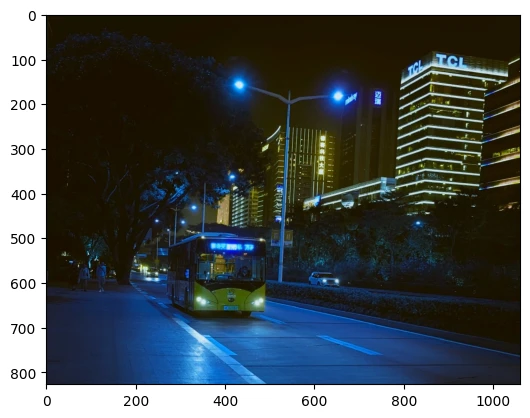

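# load test image (OpenCV reads it as a BGR uint8 array)

img_path = './assets/bus.jpg'

img = cv.imread(img_path)

if img is None:
    raise FileNotFoundError(img_path)

height, width, channels = img.shape

# matplotlib expects RGB, so convert before displaying
plt.imshow(cv.cvtColor(img, cv.COLOR_BGR2RGB))

# the TensorFlow model expects RGB input: swap the channels and keep the full image
blob = cv.dnn.blobFromImage(img, swapRB=True, crop=False)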
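`blobFromImage` converts the HxWx3 BGR image into a 4-D NCHW float32 tensor. The NumPy sketch below mimics only two parts of that conversion: the layout change and the optional channel swap. It assumes the default scale factor of 1.0, zero mean, and no resizing. `to_blob` is a hypothetical name and is not part of OpenCV.

```python
import numpy as np


def to_blob(img, swap_rb=False):
    """Hypothetical approximation of cv.dnn.blobFromImage(img, swapRB=swap_rb)
    with scalefactor=1.0, mean=0 and no resize: HWC uint8 -> NCHW float32."""
    arr = img[..., ::-1] if swap_rb else img  # reverse channel order: BGR -> RGB
    return arr.transpose(2, 0, 1)[np.newaxis].astype(np.float32)


# a dummy 4x6 BGR image standing in for bus.jpg
img = np.zeros((4, 6, 3), dtype=np.uint8)
img[..., 0] = 255  # pure blue in BGR order
blob = to_blob(img, swap_rb=True)
print(blob.shape)        # (1, 3, 4, 6)
print(blob[0, 2, 0, 0])  # 255.0: blue moves to the last channel after the swap
```

Without the swap, the blue values would stay in channel 0. A model trained on RGB would then see blue where it expects red.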

Model Predictions

def get_predictions(model, blob):
    model.setInput(blob)
    # 'detection_out_final' holds the boxes, 'detection_masks' the per-class masks
    boxes, masks = model.forward(['detection_out_final', 'detection_masks'])
    return boxes, masks

boxes, masks = get_predictions(model, blob)

# boxes: (1, 1, N, 7); masks: (N, 90, 15, 15), with N = 100 detections here
print(boxes.shape, masks.shape)
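Each detection in `detection_out_final` is a row of 7 numbers: [batch_id, class_id, score, x1, y1, x2, y2]. The box corners are normalized to [0, 1]. Below is a hypothetical helper that unpacks one row into pixel coordinates. It runs on a made-up row, not on real model output.

```python
def decode_detection(row, width, height):
    """Hypothetical helper: unpack a 7-value detection row
    [batch_id, class_id, score, x1, y1, x2, y2] (box normalized to [0, 1])
    into a class ID, a score and a pixel-space box."""
    _, class_id, score, x1, y1, x2, y2 = row
    return {
        "class_id": int(class_id),
        "score": float(score),
        # scale normalized corners by the image size, truncating to whole pixels
        "box": (int(x1 * width), int(y1 * height), int(x2 * width), int(y2 * height)),
    }


row = [0.0, 5.0, 0.9, 0.25, 0.5, 0.75, 1.0]  # invented values
print(decode_detection(row, width=640, height=480)["box"])  # (160, 240, 480, 480)
```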

Prediction Visualization

print(height, width, channels)

# blank canvas for the coloured masks
canvas = np.zeros((height, width, channels), dtype=np.uint8)

# inspect the raw detections
for j in range(len(masks)):
    bbox = boxes[0, 0, j]
    mask = masks[j]
    print(bbox)
    class_id = bbox[1]
    score = bbox[2]

# filter the 100 detections with a confidence threshold
threshold = 0.5

# generate a random colour for each class
colours = [(random.randint(0, 255), random.randint(0, 255), random.randint(0, 255)) for _ in range(len(class_names))]

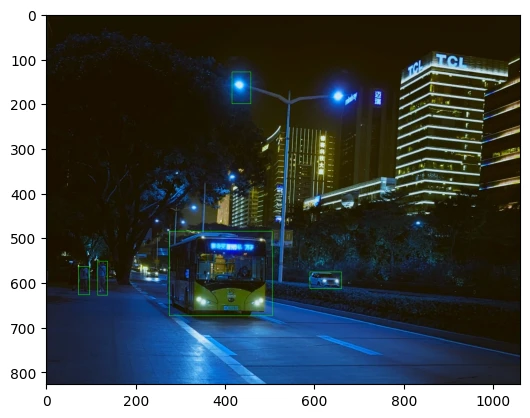
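The loops in this notebook apply the confidence threshold one detection at a time. The same filter can also be done in a single vectorized step. The sketch below assumes the (1, 1, N, 7) `boxes` layout with the score in column 2. `filter_detections` is a hypothetical helper, and the scores are invented.

```python
import numpy as np


def filter_detections(boxes, threshold=0.5):
    """Hypothetical helper: return the indices and rows of detections whose
    score (column 2) exceeds threshold, given boxes shaped (1, 1, N, 7)."""
    rows = boxes[0, 0]                              # (N, 7)
    keep = np.flatnonzero(rows[:, 2] > threshold)   # indices of confident rows
    return keep, rows[keep]


# fake output with 4 detections; only two clear the 0.5 threshold
boxes = np.zeros((1, 1, 4, 7), dtype=np.float32)
boxes[0, 0, :, 2] = [0.95, 0.30, 0.51, 0.05]
idx, kept = filter_detections(boxes)
print(idx)  # [0 2]
```

The returned indices also select the matching masks, for example `masks[idx]`, because masks and box rows share the same detection order.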

Bounding Boxes

# draw on a copy so the original image stays clean for the mask overlay
output = img.copy()

for j in range(len(masks)):
    bbox = boxes[0, 0, j]
    mask = masks[j]
    class_label = bbox[1]
    score = bbox[2]
    if score > threshold:
        # debug
        print(class_label)
        print(score)
        print(mask.shape)
        print(bbox.shape)
        # bbox corners are normalized to [0, 1]; scale by the image size to get pixel positions
        x1, y1, x2, y2 = int(bbox[3] * width), int(bbox[4] * height), int(bbox[5] * width), int(bbox[6] * height)
        cv.rectangle(output, (x1, y1), (x2, y2), (0, 255, 0))
        # only keep the mask for the detected class label
        mask = mask[int(class_label)]

plt.imshow(cv.cvtColor(output, cv.COLOR_BGR2RGB))

# debug output, e.g.
# 2.0 => class label
# 0.9532703 => prediction confidence
# (90, 15, 15) => one 15x15 mask per class (90 classes); each mask covers the bounding box, not the whole image
# (7,) => each detection row holds 7 values: [batch_id, class_id, score, x1, y1, x2, y2]

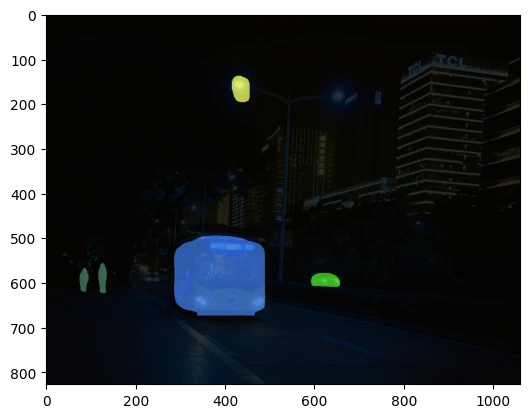
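The (90, 15, 15) mask shape in the debug output means each detection carries one low-resolution probability map per class. Each map is laid over the bounding box, not the whole image. To paint a mask, you therefore pick the map for the detected class, scale it to the box size, binarize it, and paint it into a full-size canvas.

Below is a NumPy-only sketch of that pipeline. `paste_mask` is hypothetical. It substitutes nearest-neighbour index sampling for `cv.resize`, which is bilinear by default, so that the example has no OpenCV dependency. Boxes that extend past the image are cropped after resizing, so the mask is not squeezed.

```python
import numpy as np


def paste_mask(canvas, mask, box, colour, threshold=0.5):
    """Hypothetical helper: scale a box-relative probability mask to the box,
    binarize it, and paint `colour` into `canvas` wherever it is set.

    Nearest-neighbour index sampling stands in for cv.resize, so mask edges
    come out blockier than with OpenCV's default bilinear interpolation.
    """
    h, w = canvas.shape[:2]
    x1, y1, x2, y2 = box
    bw, bh = x2 - x1, y2 - y1
    if bw <= 0 or bh <= 0:
        return canvas  # degenerate box: nothing to paint
    # sample mask rows/columns so the mask spans exactly bh x bw pixels
    rows = np.arange(bh) * mask.shape[0] // bh
    cols = np.arange(bw) * mask.shape[1] // bw
    binary = mask[rows[:, None], cols[None, :]] > threshold
    # crop the box, and the resized mask with it, to the image bounds
    cx1, cy1 = max(0, x1), max(0, y1)
    cx2, cy2 = min(w, x2), min(h, y2)
    if cx2 <= cx1 or cy2 <= cy1:
        return canvas  # box lies entirely outside the image
    visible = binary[cy1 - y1:cy2 - y1, cx1 - x1:cx2 - x1]
    # basic slicing returns a view, so this writes into canvas; pixels outside
    # the mask keep whatever earlier detections painted there
    canvas[cy1:cy2, cx1:cx2][visible] = colour
    return canvas


# 2x2 toy mask with a diagonal pattern, pasted into a 4x4 box of a 6x8 canvas
canvas = np.zeros((6, 8, 3), dtype=np.uint8)
mask = np.array([[0.9, 0.1],
                 [0.2, 0.8]], dtype=np.float32)
paste_mask(canvas, mask, box=(2, 2, 6, 6), colour=(255, 0, 0))
print((canvas[..., 0] > 0).sum())  # 8 pixels painted
```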

Segmentation Masks

for j in range(len(masks)):
    bbox = boxes[0, 0, j]
    score = bbox[2]
    if score > threshold:
        class_label = int(bbox[1])
        mask = masks[j]
        # bbox corners are normalized to [0, 1]; scale by the image size and clamp to the image
        x1, y1 = max(0, int(bbox[3] * width)), max(0, int(bbox[4] * height))
        x2, y2 = min(width, int(bbox[5] * width)), min(height, int(bbox[6] * height))
        if x2 <= x1 or y2 <= y1:
            continue  # degenerate box, nothing to draw
        # only keep the mask for the detected class label
        mask = mask[class_label]
        # the 15x15 mask is relative to the box: resize it to the box size in pixels
        mask = cv.resize(mask, (x2 - x1, y2 - y1))
        # debug
        # print(mask.shape)
        _, mask = cv.threshold(mask, 0.5, 1, cv.THRESH_BINARY)
        # paint the class colour only where the mask is set, so overlapping masks are not erased
        canvas[y1:y2, x1:x2][mask > 0] = colours[class_label]

plt.imshow(canvas.astype('uint8'))

overlay = ((0.8 * canvas) + (0.2 * img)).astype('uint8')

plt.imshow(cv.cvtColor(overlay, cv.COLOR_BGR2RGB))
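The overlay blends the whole frame at 0.8/0.2. This also darkens every pixel outside the masks to 20% of its brightness. Below is a variant that blends only where the canvas is painted and leaves the rest of the image untouched. This is a swapped-in design choice, not the notebook's method. `blend` is a hypothetical helper, and the arrays are synthetic.

```python
import numpy as np


def blend(img, canvas, alpha=0.8):
    """Hypothetical helper: mix the mask canvas into the image only where the
    canvas is painted (any non-zero channel).

    alpha weights the canvas colour; unpainted pixels keep the original image.
    Both inputs are HxWx3; the result is uint8.
    """
    img_f = img.astype(np.float32)
    canvas_f = canvas.astype(np.float32)
    mixed = alpha * canvas_f + (1.0 - alpha) * img_f
    painted = canvas.any(axis=2, keepdims=True)  # (H, W, 1), broadcasts over channels
    out = np.where(painted, mixed, img_f)
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


# grey 2x2 image with a single painted canvas pixel
img = np.full((2, 2, 3), 100, dtype=np.uint8)
canvas = np.zeros((2, 2, 3), dtype=np.uint8)
canvas[0, 0] = (255, 0, 0)
out = blend(img, canvas)
print(out[0, 0], out[1, 1])
```

To keep the original whole-frame blend instead, `cv.addWeighted(canvas, 0.8, img, 0.2, 0)` computes it in one call. Both arrays must share the same size and dtype.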